CHAPTER 247 General and Historical Considerations of Radiotherapy and Radiosurgery

In the little more than a century since its discovery, ionizing radiation has become an indispensable tool in neurosurgical practice. The past 113 years have seen significant growth in our understanding and use of ionizing radiation to treat neurosurgical disorders. Therapeutic radiation interacts with cellular components at the subatomic level, causing DNA damage either through direct ionization or through indirect ionizing events mediated by free radical formation.1–3 Currently, radiation therapy is most commonly administered either as fractionated radiotherapy (FRT), in which modest doses from a limited number of beams are applied daily for several weeks, or as stereotactic radiosurgery (SR), in which much larger doses are delivered in just a few sessions (one to five) to a precisely defined target from a large number of fixed or rotational beams.4 Although not currently in widespread use, more specialized techniques, such as brachytherapy and particle-beam radiation therapy, continue to attract interest. In brachytherapy, high doses are delivered internally and continuously by implanting radioactive isotopes; in particulate irradiation, advantage is taken of the unique physical and radiobiologic properties of cyclotron- or reactor-generated particles, such as protons, neutrons, and carbon and helium nuclei.

FRT has been proved to extend the lives of patients with malignant gliomas5–7 and metastatic brain and extradural spine tumors8–12 at the prospective randomized clinical trial level (class 1 evidence).13 It can prolong local control for patients with benign brain tumors such as meningiomas,14,15 pituitary adenomas,16,17 craniopharyngiomas,18,19 and schwannomas.20,21 SR has reached class 1 evidence level for metastatic brain tumors.22,23 It is showing tremendous promise for patients with benign brain tumors, including schwannomas,24,25 meningiomas,26,27 pituitary adenomas,28–31 craniopharyngiomas,32 and glomus tumors.33,34 In addition, SR is unique in its ability to obliterate vascular malformations35,36 and to treat functional conditions such as trigeminal neuralgia,37,38 movement disorders,39,40 and selected epilepsy41 and psychiatric disorders.42,43 Brachytherapy is currently used to control secretion from tumor cyst walls44–46 and in selected brain tumor resection cavity settings.47–49

Although radiation oncology shares a common ancestry with (diagnostic) radiology, dating back to Roentgen,50 Becquerel,51 and Curie,52 diverging technologic, biologic, and professional developments gave rise to separate fields by the mid-20th century. To a great extent, the history of radiation oncology in the 20th century involved the search for ways to boost treatment efficacy, either by developing technology to deliver ever higher doses at greater tissue depth or by minimizing collateral damage through more accurate dose delivery. Among the many developments, four general themes emerge: (1) the evolution of more powerful radiation generators capable of producing beams sufficiently penetrating to treat deep-seated tumors, (2) the elucidation of the principles of radiation biology, (3) the application of increasingly sophisticated imaging and computational technology, and (4) the search for novel forms of radiation.

The Beginning

X-rays were discovered by Roentgen in 1895,50 natural radioactivity by Becquerel in 1896,51 and radium by the Curies in 1898.52 Recognizing the potential of these new forms of radiant energy, not only for imaging but also for therapy, physicians soon applied them to malignant disease. The first empirical treatment of a patient with cancer (advanced breast cancer) occurred in 1896,53 and the first cancer patient was cured by radiation (basal cell skin cancer) in 1899,54 only 4 years after Roentgen’s discovery. Isodose lines were already being used for x-ray therapy by the early 1920s.55 Although Stenbeck was the first to treat cancer with multiple doses,56 it was not until Coutard’s work that radiation therapy became recognizably modern, featuring multiple doses (fractions) externally applied using radiation beams (Fig. 247-1A).57,58

The first use of ionizing radiation to treat primary brain tumors paralleled the advent of neurosurgery as a separate specialty and antedated the dawn of FRT. In 1909, Gramegna treated a patient with acromegaly using x-rays and noted visual improvement.59 The first neurosurgical use of brachytherapy was also for acromegaly in 1912, when Hirsch placed radium into the sella turcica after transsphenoidal tumor resection.60 Throughout the 1920s, Harvey Cushing used “Roentgen therapy” for select cases of gliomas and medulloblastomas,61–64 as well as pituitary adenomas,61,64,65 and even tried using it for cerebral angiomas (arteriovenous malformations [AVMs]).61,63,66 In 1931, Ernest Sachs reported on a technique of intraoperative x-ray therapy designed to take advantage of the lack of intervening skull and scalp.67 The first report of using FRT for metastatic brain tumors was by Chao and colleagues in 1954,68 and a subsequent report was made by Chu and Hilaris in 1961 (see Fig. 247-1A).69

The Search for Energy and Penetration

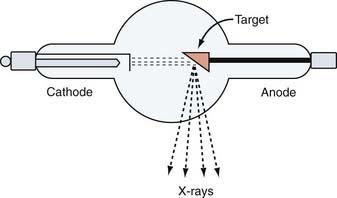

The earliest generation of radiation units, based on vacuum tube technology, could produce only low-energy x-rays suitable for treating superficial targets such as skin cancers and small lymph nodes. The Coolidge tube (140 kV), developed in 1913, was the first step toward a consistent and reliable therapeutic x-ray machine (Fig. 247-2).70 A 200-kV machine became available in 1922 (see Fig. 247-1A).54 These earliest x-ray machines suffered from poor tissue penetration and high rates of superficial skin burns. Subsequent generations of units, such as Van de Graaff generators, cyclotrons, synchrocyclotrons, betatrons, and bevatrons, were eventually capable of producing high-energy x-rays but were impractical because of high cost, low beam output, or other technical factors (Table 247-1; see Fig. 247-1A).

TABLE 247-1 Photon (X-Ray and Gamma Ray) Therapy Categories and Energies

| NAME | PHOTON ENERGY |
|---|---|
| Kilovoltage therapy | 20 to 200 kV |
| Orthovoltage therapy | 200 to 500 kV |
| Supervoltage therapy | 500 to 1000 kV |
| Megavoltage therapy | >1000 kV (>1 MV) |

The modern megavoltage teletherapy era began in the 1950s with the first commercially available teletherapy units, based initially on radium-226 and subsequently on cobalt-60 (gamma rays of 1.17 and 1.33 MeV, averaging about 1.25 MeV).71 At this energy, photons could reach any depth in the human body with enough residual energy to have an ionizing therapeutic effect. The first cobalt teletherapy machine became commercially available in 1951, and 1120 machines were sold to hospitals over the first 10 years.71

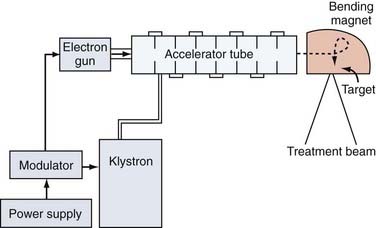

The first linear accelerator (LINAC) designed for therapeutic use was installed in the United Kingdom in 1943.72,73 However, LINACs did not become commercially available until 1953, and the first therapeutic LINAC was not installed in the United States until 1957.74 Unlike their vacuum tube or isotope-based predecessors, LINACs produce high-energy x-rays by accelerating electrons and directing them onto a tungsten target, where x-rays are generated through a process known as bremsstrahlung (Fig. 247-3). Compared with isotope-based teletherapy, LINACs have the advantages that their radiation source does not decay over time, that they can produce higher-energy, more penetrating beams (up to 24 MV, compared with an average of about 1.25 MeV for cobalt-60), and that they can also produce electron beams. Their superior beams (initially 1.2 to 4 MV and now as high as 24 MV), finer handling characteristics, and elimination of the need for Nuclear Regulatory Commission oversight led to the eventual eclipse of cobalt-60 teletherapy in the United States by the late 1960s (see Fig. 247-1B).
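
The operational cost of a decaying isotope source can be made concrete with a short calculation. The sketch below assumes a cobalt-60 physical half-life of about 5.27 years and, for simplicity, assumes that dose rate at the treatment point scales directly with source activity (ignoring geometry and scatter); the function names are illustrative only.

```python
# Sketch: decline of a cobalt-60 teletherapy source's output with age.
# Assumptions: half-life of ~5.27 years; dose rate proportional to activity.

CO60_HALF_LIFE_YEARS = 5.27


def remaining_fraction(years: float, half_life: float = CO60_HALF_LIFE_YEARS) -> float:
    """Fraction of initial activity (and, by assumption, dose rate) left after `years`."""
    if years < 0:
        raise ValueError("elapsed time must be non-negative")
    return 0.5 ** (years / half_life)


def beam_on_time_factor(years: float) -> float:
    """Factor by which beam-on time must grow to deliver the same dose as a new source."""
    return 1.0 / remaining_fraction(years)


if __name__ == "__main__":
    for age in (1.0, 5.27, 10.0):
        print(f"source age {age:5.2f} y: output {remaining_fraction(age):.3f}, "
              f"beam-on time x{beam_on_time_factor(age):.2f}")
```

After one half-life, every treatment takes twice as long for the same dose, a burden that a non-decaying LINAC source does not carry.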

The Establishment of Consistency and Reproducibility

Major landmarks in the history of moving from modern megavoltage teletherapy toward therapeutic standardization were the establishment of the American Society for Therapeutic Radiology and Oncology (ASTRO) in 1958,75 the International Society for Research in Stereoencephalotomy in 1961 (now known as the American Society for Stereotactic and Functional Neurosurgery as well as the Joint Section for Stereotactic and Functional Surgery of both the American Association of Neurological Surgeons and the Congress of Neurological Surgeons76), the European Organisation for the Research and Treatment of Cancer (EORTC) in 1962,77 and the Radiation Therapy Oncology Group (RTOG) in 1967.78

In the United States, the RTOG had particular influence on this process. It is currently a National Cancer Institute–funded multidisciplinary cooperative group that runs prospective randomized clinical trials (RCTs) that include ionizing radiation in the treatment of cancer. It was a series of RTOG RCTs in the 1970s and early 1980s that established the standard fractionation schemes still in use for metastatic brain tumors8,10 and contributed to establishing the fractionation schemes still in use for primary brain tumors. RTOG has also had an influence on standard practices for SR.23,79,80 In the United States, it is largely the empirical data from RTOG RCTs, coupled with the professional education efforts of ASTRO and the neurosurgery national societies and sections, that have led to standard and reproducible clinical results using ionizing radiation to treat neurological conditions at most medical centers across the country.

Emergence of Radiobiology and Limitation of Radiation Injury

In 1934, Coutard established fractionation as the preferred radiotherapy modality, ushering in the modern practice of FRT.58 In his work with laryngeal cancer, fractionation (the repeated administration of small doses over time) allowed higher total doses than were possible with single large fractions, so that therapeutic doses could be given without excessive normal tissue toxicity (skin burns). Understanding radiobiology, which was critical to limiting patient toxicity without sacrificing therapeutic effect, thus emerges as the second major theme of radiation oncology history. However, experimental study and theory took time to catch up with the empirically derived technique of fractionation and to supply a rationale for the dose-fractionation schedules already standardized in the clinic.

The emergence of the modern study of radiobiology can be traced back at least as far as 1953 when Gray carried out the first studies on oxygen and radiation-induced growth inhibition81 (see Fig. 247-1B). In 1965, Elkind and associates discovered sublethal damage repair and linked this finding to dose fractionation.82 As it related to the central nervous system (CNS), the 1970s and 1980s were the key decades for autopsy, experimental animal, and in vitro work, with seminal contributions by Sheline,83,84 Fowler,85 Hall,74,86,87 and many others (Fig. 247-1C).

Key concepts emerged that are discussed in detail in Chapter 249 on radiobiology in this textbook. These include Sheline’s formulation of differential tissue effects (early acute reactions, early delayed reactions, and late delayed reactions) and the corresponding separation of tissues into early- and late-responding tissues,83,84 as well as the classic four Rs of radiobiology: repair, reassortment of cells within the cell cycle, repopulation, and reoxygenation (Table 247-2).84 Further theoretical refinements involved modeling biologic effects through isoeffect plots, including the empirically derived nominal single dose (NSD) and time-dose fractionation (TDF) models88,89 and the linear quadratic formula.90–93 In particular, the empirically derived α/β ratios of the linear quadratic formula helped suggest dose-fractionation schedules that either enhance tumoricidal efficacy or diminish normal tissue toxicity. The simultaneously accumulating empirical RCT data from the RTOG and the EORTC, among others, eventually confirmed the translational validity and utility of these experimentally derived concepts.

| CONCEPT | RATIONALE |
|---|---|
| Reoxygenation | Hypoxic cells or hypoxic regions within tumors are relatively more resistant to a given dose of radiation. Because of dynamic biologic changes within the tumor, cells that are hypoxic during one fraction may be less so during subsequent fractions, so fractionation increases the chance of the desired effect on the largest number of cells. |
| Reassortment | A given dose of photons is most likely to irreversibly damage DNA if the cell is in mitosis and the DNA is condensed as chromosomes. Cells that are not in mitosis during one fraction may be so during subsequent fractions, so fractionation increases the chance of the desired effect on the largest number of cells. |
| Repair | The time between fractions allows sublethally damaged cells to repair before the next dose. This favors fractionation only if normal tissue in the treatment volume repairs more efficiently than tumor cells, which is usually the case. |
| Repopulation | The time between fractions allows lost cells to be replaced before the next dose. This favors fractionation only if normal tissue in the treatment volume repopulates more efficiently than tumor cells, which may or may not be the case for a given tumor type. |
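
The α/β reasoning described above is often summarized with the biologically effective dose (BED). The sketch below uses the standard linear quadratic expressions BED = n·d·(1 + d/(α/β)) and EQD2 = BED/(1 + 2/(α/β)). The α/β values in the example (10 Gy for tumor, 2 Gy for late-responding CNS tissue) are conventional illustrative assumptions, and this simple form ignores repopulation and other overall-time effects.

```python
# Sketch of linear quadratic (LQ) dose-fractionation arithmetic.
# Assumption: simple LQ model with no correction for overall treatment time.


def bed(n_fractions: int, dose_per_fraction: float, alpha_beta: float) -> float:
    """Biologically effective dose (Gy): n * d * (1 + d / (alpha/beta))."""
    return n_fractions * dose_per_fraction * (1.0 + dose_per_fraction / alpha_beta)


def eqd2(n_fractions: int, dose_per_fraction: float, alpha_beta: float) -> float:
    """Total dose in 2-Gy fractions that gives the same BED."""
    return bed(n_fractions, dose_per_fraction, alpha_beta) / (1.0 + 2.0 / alpha_beta)


if __name__ == "__main__":
    # Same 60-Gy physical total delivered two ways (illustrative regimens).
    regimens = {"30 x 2 Gy": (30, 2.0), "10 x 6 Gy": (10, 6.0)}
    for label, (n, d) in regimens.items():
        tumor = bed(n, d, alpha_beta=10.0)     # assumed tumor alpha/beta
        late_cns = bed(n, d, alpha_beta=2.0)   # assumed late-responding CNS alpha/beta
        print(f"{label}: tumor BED {tumor:.0f} Gy, late-CNS BED {late_cns:.0f} Gy")
```

Moving from 2-Gy to 6-Gy fractions raises tumor BED by one third (72 to 96 Gy) but doubles late-CNS BED (120 to 240 Gy), which is the quantitative form of the argument that fractionation preferentially spares late-responding normal tissue.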

Neurosurgery played an important part in this work, particularly as it related to assessing single-dose tolerance of normal CNS tissue for SR. Kjellberg developed his 1% radiation necrosis risk isoeffect line by extrapolating from a combination of autopsy and animal data.94 This was later improved on by Flickinger’s more sophisticated 3% radiation necrosis risk isoeffect curve for SR using the linear quadratic formula.95
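
Isoeffect curves of this kind relate single-fraction doses to fractionated ones. The sketch below illustrates only the linear quadratic step involved and is not Kjellberg's or Flickinger's actual model: it solves d(1 + d/(α/β)) = BED for the single dose d with the same BED. Applying the linear quadratic model at radiosurgical single doses is itself debated, and the α/β value used is an assumption.

```python
import math


def bed(n_fractions: int, dose_per_fraction: float, alpha_beta: float) -> float:
    """Biologically effective dose (Gy) under the linear quadratic model."""
    return n_fractions * dose_per_fraction * (1.0 + dose_per_fraction / alpha_beta)


def single_fraction_equivalent(target_bed: float, alpha_beta: float) -> float:
    """Single dose d solving d + d**2 / alpha_beta = target_bed (positive root)."""
    if target_bed < 0 or alpha_beta <= 0:
        raise ValueError("BED must be non-negative and alpha/beta positive")
    return 0.5 * alpha_beta * (math.sqrt(1.0 + 4.0 * target_bed / alpha_beta) - 1.0)


if __name__ == "__main__":
    # Illustrative: 30 x 2 Gy to late-responding CNS tissue, assumed alpha/beta = 2 Gy.
    b = bed(30, 2.0, alpha_beta=2.0)
    print(f"BED {b:.0f} Gy ~ single fraction of {single_fraction_equivalent(b, 2.0):.1f} Gy")
```

Under these assumptions, 60 Gy in 2-Gy fractions corresponds to a single fraction of only about 14.5 Gy, which conveys why single-dose tolerance had to be established separately for SR.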

Imaging and Targeting

It is axiomatic that to hit a target optimally, you must be able to see it accurately; advances in imaging represent the third major theme in radiation oncology history. However, until the advent of tomographic imaging with computed tomography (CT) in the late 1970s and magnetic resonance imaging (MRI) in the late 1980s, targeting CNS lesions was somewhat problematic. Up to that point, the state of the art consisted of nuclear brain scans, air ventriculograms, angiograms, and the craniotomy defect and standard anatomic landmarks on skull x-rays. In fact, glycerol rhizotomy for trigeminal neuralgia derived from early attempts to instill radiopaque tantalum powder into the trigeminal cistern so that the ganglion could be imaged for SR (glycerol was originally only the vehicle for the tantalum powder).96

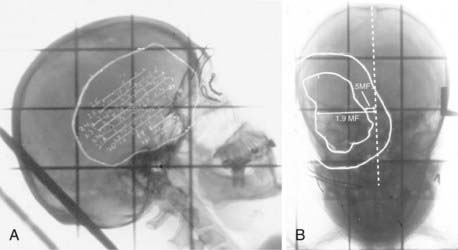

Indeed, before the late 1980s, it was difficult to know whether the inconsistent or poor FRT outcomes were from resistant tumors or just poor targeting. Uncertainties in localization required treatment plans with large margins, increasing neurotoxicity. For the purposes of radiation planning, the reconstruction of space was as much art as science. As late as 1990, it was possible for a planning technique dependent on overhead projectors, push-pins, and wax pencils to be presented at national conferences.97 Also as late as 1990, it was not uncommon for radiation oncologists at the University of Pittsburgh to walk into the neuroradiology reading room with a lateral scout skull film and ask the neuroradiologist to please draw the limits of the tumor derived from CT or MRI onto the film with a wax pencil for target planning purposes (Fig. 247-4).

The neurosurgical concept of stereotaxis for three-dimensional space registration was a key evolutionary advance adopted by FRT. Although frame-based stereotaxis had been widely used for SR since it was conceived in 1951,98 it was the development of frameless stereotaxy with virtual reconstruction of anatomic space, three-dimensional surface coregistration, and reliable tracking navigation techniques that allowed FRT planning to achieve consistent and reliable targeting accuracy. The first neurosurgical proprioceptive arm frameless stereotaxy system was not approved by the U.S. Food and Drug Administration until 1993,99 and the first infrared optical tracking systems100 did not become commercially available for neurosurgery until 1996. Applications of these concepts and this technology to FRT began soon thereafter (see Fig. 247-1C). Currently, FRT applications of frameless stereotaxic localization and tracking rely on relocatable custom-molded fixation masks. Although almost all current systems are based on CT targeting, image fusion techniques have allowed MRI data and even molecular-metabolic imaging data (e.g., positron emission tomography [PET]) to be secondarily incorporated.

Computation Advances

Computers began to be introduced into FRT planning in the late 1970s,71 but it was not until the late 1980s and early 1990s that they found widespread application. The coupling of planar tomographic imaging and stereotaxis with treatment planning software designed to take advantage of increasingly powerful computational engines made three-dimensional, voxel-by-voxel calculation possible. Before this, two-dimensional FRT planning used single-plane dose calculations based on hand-measured external contours. Only crude blocking, with metal alloys poured into Styrofoam molds based on orthogonal x-rays, was possible, and typically only a limited number of beams (i.e., ports), usually two to four, could be used.

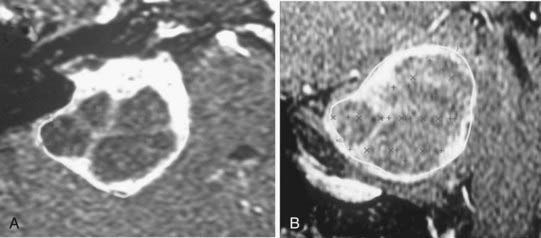

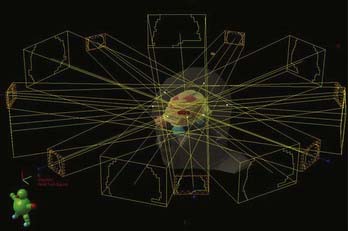

With the advent of three-dimensional treatment planning, dose distribution could be calculated, and therefore optimized, in all three dimensions. More beams could be used, including non-coplanar beams, because the target and normal tissue anatomy could be reconstructed from any orientation in a “beam’s eye view” (BEV). Dose could be better sculpted to the three-dimensional target volume, which was directly visualized on the target images so that normal structures could be more easily excluded. The more powerful planning systems also made practical the calculation and analysis of dose-volume histograms for which the impact on clinical practice is still being assessed. Moreover, the more powerful computational engines also made practical (i.e., faster) more sophisticated beam-modeling algorithms such as superposition-convolution and fast Monte Carlo dose calculations, although the ultimate goal of true Monte Carlo dose calculation has not yet become routine.101–107 In SR, separate isocenter interactions, as well as dynamic rotational arc isocenter contributions, could be rapidly and accurately calculated for the first time, opening up another level of SR planning (Fig. 247-5). Indeed, mobile LINAC SR and complex multi-isocenter treatment planning for fixed-position SR units became practical for the first time.
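
A cumulative dose-volume histogram reports, for each dose level, the fraction of a structure's volume receiving at least that dose. A minimal sketch, assuming equal-volume voxels and using hypothetical voxel doses (planning systems typically weight each voxel by its volume, but the idea is the same):

```python
from typing import List, Sequence


def cumulative_dvh(voxel_doses: Sequence[float], dose_levels: Sequence[float]) -> List[float]:
    """Fraction of structure volume receiving >= each dose level (equal-volume voxels assumed)."""
    if not voxel_doses:
        raise ValueError("structure contains no voxels")
    n = len(voxel_doses)
    return [sum(1 for d in voxel_doses if d >= level) / n for level in dose_levels]


if __name__ == "__main__":
    # Hypothetical doses (Gy) in ten voxels of one structure.
    doses = [2, 5, 8, 12, 12, 15, 18, 20, 22, 25]
    levels = [0, 10, 20]
    for level, frac in zip(levels, cumulative_dvh(doses, levels)):
        print(f"V{level} = {frac:.0%}")
```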

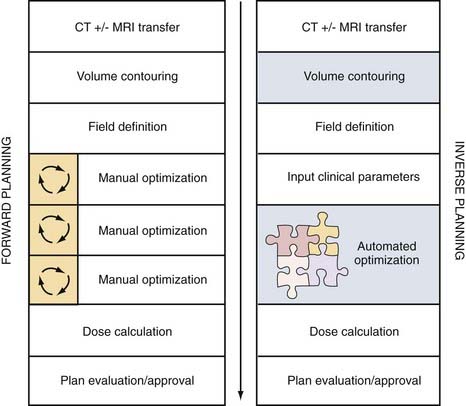

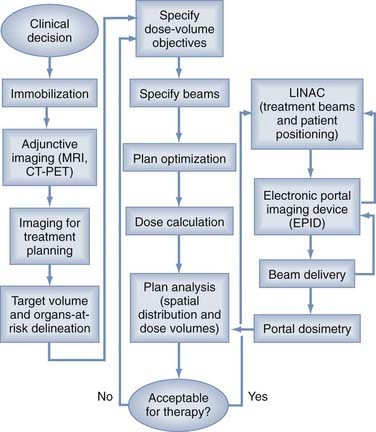

Inverse planning is the most recent advance in treatment planning and is critically dependent on computing power (Fig. 247-6). Before this development, beam design was empirical: it started from an initial estimate of beam number and orientation, which was then progressively refined through iterative assessment and redesign. Optimization often relied on the individual experience and persistence of the planner. In contrast, with inverse planning, the desired dose distribution, dose-volume constraints, and dose limits to nearby structures are specified first, followed by automated beam optimization (number of beams, their relative weights and intensities, and customized beam shapes) through repeated analysis and refinement of iteratively generated treatment combinations. Inverse planning thus allows FRT to be customized to each patient within the constraints and capabilities of each modern radiation delivery system.
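
The "specify the goal first, then let the computer search" logic of inverse planning can be illustrated with a toy optimizer. This is a hypothetical sketch, not the algorithm of any commercial system. It assumes a made-up dose-influence matrix (dose to each voxel per unit weight of each beamlet), a quadratic penalty on deviation from the prescription in target voxels, a one-sided penalty on dose above a limit in an organ-at-risk (OAR) voxel, and projected gradient descent that keeps beamlet weights non-negative.

```python
from typing import List, Sequence


def voxel_doses(influence: Sequence[Sequence[float]], weights: Sequence[float]) -> List[float]:
    """Dose in each voxel = sum over beamlets of influence * weight."""
    return [sum(a * w for a, w in zip(row, weights)) for row in influence]


def objective(influence, weights, prescription, is_oar, oar_limit, oar_weight=10.0) -> float:
    """Quadratic target penalty plus one-sided overdose penalty for OAR voxels."""
    total = 0.0
    for dose, rx, oar in zip(voxel_doses(influence, weights), prescription, is_oar):
        if oar:
            total += oar_weight * max(0.0, dose - oar_limit) ** 2
        else:
            total += (dose - rx) ** 2
    return total


def inverse_plan(influence, prescription, is_oar, oar_limit, oar_weight=10.0,
                 steps=2000, step_size=0.01) -> List[float]:
    """Projected gradient descent on non-negative beamlet weights (toy illustration)."""
    n_beamlets = len(influence[0])
    weights = [1.0] * n_beamlets
    for _ in range(steps):
        doses = voxel_doses(influence, weights)
        # Half the derivative of each voxel's penalty with respect to its dose.
        resid = [oar_weight * max(0.0, d - oar_limit) if oar else d - rx
                 for d, rx, oar in zip(doses, prescription, is_oar)]
        grad = [2.0 * sum(row[j] * r for row, r in zip(influence, resid))
                for j in range(n_beamlets)]
        # Gradient step, then project back onto weights >= 0.
        weights = [max(0.0, w - step_size * g) for w, g in zip(weights, grad)]
    return weights


if __name__ == "__main__":
    # Hypothetical 3-voxel, 3-beamlet problem: two target voxels flank one OAR voxel.
    influence = [[1.0, 0.5, 0.0],
                 [0.0, 0.5, 1.0],
                 [0.2, 1.0, 0.2]]
    prescription = [1.0, 1.0, 0.0]  # OAR entry is ignored
    is_oar = [False, False, True]
    w = inverse_plan(influence, prescription, is_oar, oar_limit=0.3)
    print("beamlet weights:", [round(x, 3) for x in w])
    print("voxel doses:", [round(d, 3) for d in voxel_doses(influence, w)])
```

In this toy case the optimizer drives the middle beamlet, which passes mostly through the OAR voxel, toward zero and accepts a small target underdose in exchange for limiting OAR dose, the same trade-off a planner would otherwise negotiate by hand.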

Robotic Positioning and Automated Collimation

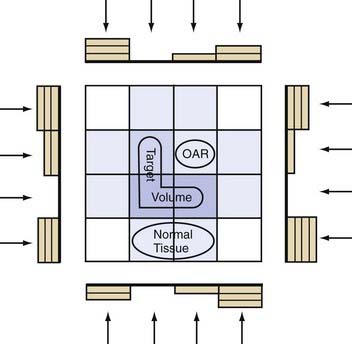

A multileaf collimator (MLC) consists of shielding material shaped into shutters, slats, or vanes positioned between the x-ray source and the target in order to determine beam shape by subtracting portions of the field (Fig. 247-7). In the past, lead blocks, molded Cerrobend, and machined attenuators (such as wedges) locked by hand into hardware mounts in front of the treatment head imposed some degree of beam shaping for the modest dose calculation planning that was then possible. The computer-controlled MLCs that came next were a major advance. Now, systems could automatically change aperture shape within the treatment head as the LINAC moved from position to position, improving accuracy as well as conformality while decreasing treatment times compared with hand-mounted blocking. Far more complex and individually customized beam shapes were now possible. Additionally, more than just providing automated beam shaping through the dynamic control of leaf openings as they translate across the beam, MLCs make intensity modulation within individual beams possible (Fig. 247-8).

Brachytherapy

Brachytherapy exploits the potential biologic advantages of continuous ionizing energy delivery from the temporary or permanent insertion of radioactive isotopes. For neurosurgical applications, brachytherapy sources are divided into gamma and beta emitters. Among gamma emitters, I-125 (U.S.) and Ir-192 (Europe) are the most widely used. Their low radiation energies (30 keV for I-125 and 380 keV for Ir-192) result in a sharp dose fall-off (half-dose distance about 2 cm), ideal for local radiation “boosts” given either before or after FRT. Both high-activity and low-activity I-125 seeds have been used for the treatment of gliomas,108–111 but the high-activity sources require a second operation for removal and are associated with a relatively high reoperation rate for radionecrosis.109,110 Recently, liquid iodine-125 instilled into the outer compartment of a “double-hulled” temporarily implantable balloon left in the tumor resection cavity has been reported for both primary gliomas and metastatic brain tumors after initial resection.47,49 At present, brachytherapy with gamma emitters has been limited by high rates of radionecrosis and the failure to consistently show benefit for glioma patients in RCTs.109,110
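
Much of the sharp fall-off around an implanted source is geometric. The sketch below treats a seed as an idealized point source in a uniform medium, so dose falls with the inverse square of distance, optionally multiplied by an exponential attenuation term whose coefficient is a hypothetical illustrative value. Clinical brachytherapy dosimetry uses more complete formalisms (for example, the AAPM TG-43 protocol) that account for scatter, source anisotropy, and measured radial dose functions.

```python
import math


def relative_dose(r_cm: float, r_ref_cm: float = 1.0, mu_per_cm: float = 0.0) -> float:
    """Dose at distance r relative to dose at r_ref for an idealized point source.

    Inverse-square geometry multiplied by a simple exponential attenuation term;
    mu_per_cm is a hypothetical effective attenuation coefficient (0 = geometry only).
    """
    if r_cm <= 0 or r_ref_cm <= 0:
        raise ValueError("distances must be positive")
    geometry = (r_ref_cm / r_cm) ** 2
    attenuation = math.exp(-mu_per_cm * (r_cm - r_ref_cm))
    return geometry * attenuation


if __name__ == "__main__":
    for r in (0.5, 1.0, 2.0, 3.0):
        print(f"r = {r} cm: geometry only {relative_dose(r):.2f}, "
              f"with assumed mu = 0.1/cm {relative_dose(r, mu_per_cm=0.1):.2f}")
```

Even with no attenuation at all, moving from 1 to 2 cm from the source cuts the dose to one quarter.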

Among beta-emitting (electron) brachytherapy sources, P-32 (U.S.) and Y-90 (Europe) are the most widely used. The short range of penetration of their beta particles (2 mm) and their availability in colloidal form make them ideal for intracavitary instillation into cystic lesions, such as craniopharyngioma and cystic gliomas. High doses can be delivered to the cyst lining with very low risk for toxicity to adjacent sensitive structures, preventing cyst volume reaccumulation and even causing involution of some tumor cysts.44,46

Stereotactic Radiosurgery

SR, a neurosurgical technique conceived and developed by neurosurgeons, has now grown into a powerful multidisciplinary tool for neurosurgical practice.4 The concept, first applications, and even the name were all defined by the Swedish neurosurgeon Lars Leksell in 1951.98 His initial goal was to deliver focused ionizing radiation to tissue at depth in a way that could create lesions or ablate pathology in a very precise and controlled manner without skin incision. Accuracy was to be ensured with stereotactic targeting using a frame Leksell developed in 1948.112 After experimenting with several methods of dose delivery, including LINAC and proton beams,113 Leksell eventually developed a 179-source cobalt-60 prototype Gamma Knife (GK), first used clinically in 1967.114 This further evolved into the third-generation and first commercially available GK-U unit, installed in Argentina in 1984.115 The first U.S. gamma unit was installed at the University of Pittsburgh in 1987.116 The U unit was followed in the late 1990s by the B and C units and eventually by the 192-source GK Perfexion unit in 2006 (see Fig. 247-1B and C).117 As the technology developed, the goal of therapy also evolved to include biologic inactivation of neoplasia short of ablation as well as the concept of one to five delivery sessions.4

In the early 1950s, charged-particle SR exploiting the Bragg peak effect was further developed using helium ions by Ernest Lawrence and then protons by Jacob Fabrikant in California, who were among the first to successfully treat patients with AVMs and pituitary adenomas (see Fig. 247-1B).118,119 That same decade, Kjellberg, working in Boston, also began treating patients with pituitary tumors and AVMs using protons.94,118 Charged-particle SR continues to this day but is now almost exclusively proton based. These early charged-particle SR pioneers established the first clinical experience for patients with AVMs and pituitary tumors. Although Leksell and his GK team also treated AVMs and pituitary tumors in the 1960s and 1970s,120–123 they were the first to use SR to create functional lesions (thalamotomy, capsulotomy, trigeminal neuralgia)124–126 as well as the first to apply the technique to other benign tumors such as craniopharyngiomas, schwannomas, and meningiomas.127–129 Application to malignant tumors occurred much later, in the late 1980s and early 1990s.130–137

In the 1980s, the first LINAC radiosurgery prototypes were developed; these depended critically on advances in treatment planning and hardware engineering (see Fig. 247-1C). The first efforts used multiple non-coplanar rotational arc beams, moving either the patient table or the LINAC source. The prototypes were developed by Betti,138 Colombo,139 and Winston and Lutz,140 among others. The first commercially available LINAC SR unit appeared in 1992 (X-Knife, Radionics, Burlington, MA). Further LINAC SR developments included more sophisticated software packages, iterative robotic positioning of the LINAC source, and MLCs that allowed the transition from rotational arc SR to “step-and-shoot” techniques, as in the CyberKnife (Accuray, Sunnyvale, CA), the Novalis system (BrainLAB AG, Feldkirchen, Germany), and the Varian Trilogy system (Varian Medical Systems, Inc., Palo Alto, CA). Other developments include noninvasive relocatable frames and frameless stereotactic optical tracking systems, discussed previously.

Spinal SR began after the development of non-coplanar arc LINAC SR technology but required an incision to mount a spine “frame” as a targeting reference for the rotational LINAC.141–143 The newer step-and-shoot versions of LINAC SR, using frameless image guidance alone or coupled with intensity-modulated radiotherapy (IMRT) techniques, including helical tomotherapy (discussed later), are the key advances that have extended SR beyond the confines of the cranium.144–148

Intensity-Modulated Radiotherapy

IMRT is the newest development in FRT.149–151 While taking advantage of the computational, inverse planning, and automated MLC and mMLC advances of three-dimensional standard conformal FRT, IMRT uses multiple computer-optimized, segmented beams of nonuniform intensity. Instead of a limited number of beams (two to four) of uniform cross-sectional intensity distribution (fluence) designed by projecting the anatomy onto the BEV, IMRT uses a larger number of beams (seven to nine) divided into a large number (hundreds) of MLC-controlled beam segments, whose “beamlet” intensities are optimized to meet the dose-volume specifications of the inverse planning algorithm. The significance for neurosurgical practice is that IMRT matches the conformality of three-dimensional conformal FRT for convex target surfaces but can also conform to concave surfaces, so that the spinal cord, optic nerve, or brainstem might be spared even when nestled within the concavity in question (Figs. 247-8 and 247-9).

FIGURE 247-9 Intensity-modulated radiotherapy (IMRT) process flow. Left column depicts clinical processes before inverse planning. Middle column depicts dose optimization and plan analysis (see also Fig. 247-6). Right column depicts treatment using adaptive radiotherapy. CT, computed tomography; LINAC, linear accelerator; MRI, magnetic resonance imaging; PET, positron emission tomography.

Mackie and colleagues distinguished between serial tomotherapy, such as that described for the NOMOS system, in which the target is treated in serially adjoined “slices,” and helical tomotherapy, in which the target is treated in a helical manner as the treatment head rotates around a longitudinally translating patient.152 The TomoTherapy system (TomoTherapy, Madison, WI) has a therapeutic LINAC built directly into the targeting CT scanner, with a radiation detector on the opposite side of the CT gantry. Measuring the radiation actually delivered in each fraction is a unique advantage: it allows detection of variance between planned and actual dose, which can then be accounted for during subsequent fractions. Tomotherapy can also deliver hollow dose plans through tangential spiral dose administration. Although this has application for treating the pleura of the chest and lungs while sparing the heart, it might also be applied to lesions that compress the spinal cord circumferentially.
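
The idea of compensating in later fractions for a measured shortfall or excess can be reduced to simple bookkeeping at a single point. This is a hypothetical simplification for illustration only and not how the TomoTherapy system or any adaptive workflow is implemented; real systems work on full three-dimensional dose distributions, and changing the dose per fraction also changes its biologic effect under the linear quadratic model.

```python
from typing import Sequence


def adjusted_fraction_dose(prescribed_total_gy: float, n_fractions: int,
                           delivered_gy: Sequence[float]) -> float:
    """Dose per remaining fraction needed to reach the prescribed total at one point.

    delivered_gy holds the measured (or reconstructed) dose for each fraction given so far.
    """
    remaining = n_fractions - len(delivered_gy)
    if remaining <= 0:
        raise ValueError("no fractions remain to adjust")
    return (prescribed_total_gy - sum(delivered_gy)) / remaining


if __name__ == "__main__":
    # Hypothetical: 60 Gy in 30 fractions; first 10 fractions measured at 1.95 Gy each.
    print(f"{adjusted_fraction_dose(60.0, 30, [1.95] * 10):.3f} Gy per remaining fraction")
```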

Of the techniques that use conventional MLCs, sliding window or dynamic MLC controls beam fluence by optimizing the size and speed of MLC pairs as they translate across the beam. In step-and-shoot MLC (e.g., the Novalis [BrainLAB AG, Feldkirchen, Germany] and the Trilogy [Varian Medical Systems, Inc., Palo Alto, CA] systems), the beam is turned on only as discrete beam segments are produced by optimized MLC configurations.151 Finally, in intensity-modulated arc therapy (IMAT), photon fluence is modulated during beam arc rotation by MLC configurations dependent on gantry angle.153,154
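
How a nonuniform fluence can be built from uniform openings is easiest to see for a single leaf pair. The sketch below is a simple greedy decomposition, assumed for illustration and not the leaf-sequencing algorithm of any named system: an integer intensity profile is repeatedly reduced by one unit over its leftmost contiguous nonzero run, so every segment is a single opening that one leaf pair can form. It ignores leaf-speed, interdigitation, and minimum-segment constraints and does not guarantee the fewest segments.

```python
from typing import List, Sequence, Tuple


def step_and_shoot_segments(profile: Sequence[int]) -> List[Tuple[int, int]]:
    """Greedy decomposition of an integer fluence profile for one MLC leaf pair.

    Each returned (start, stop) is one contiguous opening, covering positions
    start..stop-1, that delivers one unit of intensity.
    """
    if any(v < 0 or int(v) != v for v in profile):
        raise ValueError("profile must contain non-negative integers")
    remaining = [int(v) for v in profile]
    segments = []
    while any(remaining):
        start = next(i for i, v in enumerate(remaining) if v > 0)
        stop = start
        while stop < len(remaining) and remaining[stop] > 0:
            stop += 1
        for i in range(start, stop):
            remaining[i] -= 1
        segments.append((start, stop))
    return segments


def rebuild_fluence(segments: Sequence[Tuple[int, int]], length: int) -> List[int]:
    """Sum the unit openings back into a fluence profile (a consistency check)."""
    fluence = [0] * length
    for start, stop in segments:
        for i in range(start, stop):
            fluence[i] += 1
    return fluence


if __name__ == "__main__":
    profile = [0, 1, 3, 2, 2, 0, 1]  # hypothetical intensity levels across one leaf pair
    segs = step_and_shoot_segments(profile)
    print(segs)
    print(rebuild_fluence(segs, len(profile)) == profile)
```

Production leaf sequencers instead optimize the order and shape of openings to reduce segment count and total beam-on time.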

Particulate and Other Novel Radiation

Exploration of novel types of radiation forms the fourth theme of radiation oncology history. Charged-particle FRT has a long history in neurosurgical practice, and proton irradiation is currently most commonly used to treat malignant, refractory skull base tumors such as chordomas and chondrosarcomas.155–157 Heavier charged particles, such as the carbon ion beams being developed in Europe and Japan, are being investigated for the treatment of malignant gliomas.158 Particulate irradiation offers two potential advantages over conventional photon irradiation: one physical (the Bragg peak, in which charged particles deposit most of their dose near the end of their range, with little dose beyond it) and the other radiobiologic.

Heavier charged particles (e.g., carbon ions and helium nuclei) have the additional advantage of higher relative biologic effectiveness (RBE) compared with conventional photon irradiation. Among several factors, cell kill depends on the energy deposited per unit length of particle track, a quantity known as linear energy transfer (LET). High-LET radiation causes more biologic damage per unit track length than low-LET radiation. Conventional photon irradiation is sparsely ionizing, at about 0.3 keV/µm, compared with 100 to 2000 keV/µm for densely ionizing heavy charged particles. The cytotoxic efficiency of particulate irradiation relative to conventional photon irradiation (i.e., RBE) increases dramatically between 10 and 100 keV/µm, after which RBE falls owing to the “overkill” effect.87 In general, the heavier and more highly charged the particle, the higher the LET. Protons, although a form of particulate radiation, offer only the Bragg peak advantage, because their RBE, once thought to be about 1.3:1, has since been revised down to 1:1 to 1.1:1.159
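
Why the revised proton RBE matters clinically is a matter of simple arithmetic: particle therapy prescriptions are typically expressed as RBE-weighted dose, so the physical dose actually delivered is the prescription divided by the assumed RBE. The prescription value below is hypothetical.

```python
def physical_dose_for(prescribed_gy_rbe: float, rbe: float) -> float:
    """Physical dose (Gy) needed to deliver a prescribed RBE-weighted dose, Gy(RBE)."""
    if rbe <= 0:
        raise ValueError("RBE must be positive")
    return prescribed_gy_rbe / rbe


if __name__ == "__main__":
    prescription = 66.0  # hypothetical RBE-weighted prescription, Gy(RBE)
    for rbe in (1.0, 1.1, 1.3):
        print(f"RBE {rbe}: physical dose {physical_dose_for(prescription, rbe):.1f} Gy")
```

Assuming an RBE of 1.3 rather than 1.1 would lower the physical dose delivered for the same nominal prescription by roughly 15%.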

Neutrons, like protons, are nuclear particles with a mass of about 1 atomic mass unit, but they carry no charge. Unlike protons, fast neutrons have no special dose deposition properties, but they do have a higher RBE for tumors such as sarcomas and salivary gland carcinomas, as well as for neural tissue. Fast neutrons were first used to treat malignant primary brain tumors in 1938, with disappointing results (see Fig. 247-1A).160 Subsequent clinical trials of fast neutrons in brain tumors demonstrated frequent coagulative necrosis, to the point of sterilizing the tumor, but without any gain in survival and with the suggestion of a detrimental effect on quality of life.161,162

Boron neutron capture therapy (BNCT) is a binary form of radiotherapy based on a local intracellular nuclear reaction. A slow (thermal) neutron is captured by a boron-10 nucleus, which then releases a recoiling lithium-7 nucleus, a gamma ray, and an alpha particle (helium nucleus). The alpha particle has a range of only 4 to 7 µm (about one cell diameter) and is densely ionizing, with a high LET and an RBE of about 20:1. In BNCT, patients are injected with a boron-10–containing compound designed to be taken up preferentially by brain tumor cells. They are then exposed to thermal neutron beams extracted from a modified nuclear reactor core. The resulting release of short-range, high-LET alpha particles produces highly localized tumor cytotoxicity. Conceptually introduced by Locher in 1936,163 BNCT for brain tumors was first attempted in 1951 using intravenous borax.164–167 Attempts from 1951 through 1972 continued to be disappointing.164–168 Newer and more selective boronated agents, including sodium borocaptate (BSH, a sulfhydryl-containing borane) and boronophenylalanine (BPA), are showing promise and are undergoing phase I and II clinical trials for malignant gliomas.169–174 However, even with better neutron capture agents, thermal neutrons are rapidly attenuated in tissue. BNCT may therefore be applicable only to tumors lying less than 3 to 4 cm beneath the scalp surface.170
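The energy carried by the two charged fragments of the capture reaction, 10B + n(thermal) → 7Li + 4He (alpha) + gamma, can be estimated from momentum conservation. The Python sketch below makes three assumptions. It uses the dominant branch of the reaction, which releases about 2.31 MeV of kinetic energy together with a 0.48 MeV gamma ray. It treats the thermal neutron's momentum as negligible, so the breakup is a two-body split from rest. It uses mass numbers in place of exact nuclear masses. The function name is a hypothetical illustration.

```python
def fragment_energies(q_kinetic_mev: float = 2.31,
                      mass_alpha: float = 4.0,
                      mass_li: float = 7.0) -> tuple[float, float]:
    """Split the kinetic energy released by 10B(n,alpha)7Li between the
    alpha particle and the lithium-7 nucleus (two-body breakup from rest).

    Equal and opposite momenta p give E = p**2 / (2 m), so each fragment's
    kinetic energy is inversely proportional to its mass. Mass numbers
    stand in for exact nuclear masses, which is an approximation.
    """
    total_mass = mass_alpha + mass_li
    e_alpha = q_kinetic_mev * mass_li / total_mass  # lighter fragment gets more
    e_li = q_kinetic_mev * mass_alpha / total_mass
    return e_alpha, e_li


if __name__ == "__main__":
    e_alpha, e_li = fragment_energies()
    print(f"alpha: {e_alpha:.2f} MeV, 7Li: {e_li:.2f} MeV")  # alpha: 1.47 MeV, 7Li: 0.84 MeV
```

Both fragments deposit these energies over only a few micrometers. This is why the cytotoxic effect is confined largely to the boron-loaded cell.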

Barnett GH, Linskey ME, Adler JR, et al. Stereotactic radiosurgery: an organized neurosurgery-sanctioned definition. J Neurosurg. 2007;106:1-5.

Becquerel H. Sur les radiations émises par phosphorescence. Comptes Rendus. 1896;122:420-421.

Bernier J, Hall EJ, Giaccia A. Radiation oncology: a century of achievements. Nat Rev Cancer. 2004;4:737-747.

Coolidge WD. A powerful roentgen ray tube with a pure electron discharge. Phys Rev. 1913;2:409-430.

Curie P, Curie M, Bémont G. Sur une nouvelle substance fortement radio-active, contenue dans la pechblende. Comptes Rendus. 1898;127:1215-1217.

Dubois JB, Ash D. Radiation Oncology: A Century of Progress and Achievement: 1895-1995. In: Bernier J, editor. Radiation Oncology: A Century of Progress and Achievement: 1895-1995. Brussels: ESTRO Publication; 1995:79-98.

Elkind MM, Sutton-Gilbert H, Moses WB, et al. Radiation response of mammalian cells in culture: V. Temperature dependence of the repair of X-ray damage in surviving cells (aerobic and hypoxic). Radiat Res. 1965;25:359-376.

Fowler JF. Development of radiobiology for oncology—a personal view. Phys Med Biol. 2006;51:R263-R286.

Gray LH, Conger AD, Ebert M, et al. The concentration of oxygen dissolved in tissues at the time of irradiation as a factor in radiotherapy. Br J Radiol. 1953;26:638-648.

Hall EJ. The Janeway Lecture 1992. Nine decades of radiobiology: is radiation therapy any the better for it? Cancer. 1993;71:3753-3766.

Hall EJ, Astor M, Bedford J, et al. Basic radiobiology. Am J Clin Oncol. 1988;11:220-252.

Hall EJ, Giaccia AJ. Radiobiology for the Radiologist. Philadelphia: Lippincott Williams & Wilkins; 2005.

Leksell L. The stereotaxic method and radiosurgery of the brain. Acta Chir Scand. 1951;102:316-319.

Lunsford LD, Alexander E III, Loeffler JS. General introduction: History of radiosurgery. In: Alexander E III, Loeffler JS, Lunsford LD, editors. Stereotactic Radiosurgery. New York: McGraw-Hill; 1993:1-6.

Mayneord W. The Physics of X-Ray Therapy. London: Churchill; 1929.

Perez CA, Brady LW. Overview. In: Perez CA, Brady LW, editors. Principles and Practice of Radiation Oncology. 2nd ed. Philadelphia: JB Lippincott; 1992:1-63.

Robison RF, Vice-Chair, ASTRO History Committee. History of radiation therapy. The evolution of therapeutic radiology. http://www.rtanswers.org/about/history.htm. Accessed 07/01/08

Roentgen WC. On a new kind of rays (preliminary communication). Translation of a paper read before the Physikalisch-Medicinische Gesellschaft of Würzburg on December 28, 1895. Br J Radiol. 1931;4:32.

Sheline GE. Irradiation injury of the human brain: a review of clinical experience. In: Gilbert H, Kagan AR, editors. Radiation Damage to the Nervous System. New York: Raven Press; 1980:39-52.

Sheline GE, Wara WM, Smith V. Therapeutic irradiation and brain injury. Int J Radiat Oncol Biol Phys. 1980;6:1215-1228.

1 Fowler JF. Development of radiobiology for oncology—a personal view. Phys Med Biol. 2006;51:R263-R286.

2 Hall EJ. The Janeway Lecture 1992. Nine decades of radiobiology: is radiation therapy any the better for it? Cancer. 1993;71:3753-3766.

3 Hall EJ, Astor M, Bedford J, et al. Basic radiobiology. Am J Clin Oncol. 1988;11:220-252.

4 Barnett GH, Linskey ME, Adler JR, et al. Stereotactic radiosurgery: an organized neurosurgery-sanctioned definition. J Neurosurg. 2007;106:1-5.

5 Walker MD, Alexander E Jr, Hunt WE. Evaluation of BCNU and/or radiotherapy in the treatment of anaplastic gliomas. A cooperative clinical trial. J Neurosurg. 1978;49:333-343.

6 Kristiansen K, Hagen S, Kollevold T. Combined modality therapy of operated astrocytomas grade III and IV. Confirmation of the value of postoperative irradiation and lack of potentiation of bleomycin on survival time: a prospective multicenter trial of the Scandinavian glioblastoma study group. Cancer. 1981;47:649-652.

7 Chang CH, Horton J, Schoenfeld D. Comparison of postoperative radiotherapy and chemotherapy in the multidisciplinary management of malignant gliomas. A joint Radiation Therapy Oncology Group and Eastern Cooperative Oncology Group Study. Cancer. 1983;52:997-1007.

8 Borgelt B, Gelber R, Kramer S, et al. The palliation of brain metastases: final results of the first two studies by the Radiation Therapy Oncology Group. Int J Radiat Oncol Biol Phys. 1980;6:1-9.

9 Gaspar L, Scott C, Rotman M, et al. Recursive partitioning analysis (RPA) of prognostic factors in three Radiation Therapy Oncology Group (RTOG) brain metastases trials. Int J Radiat Oncol Biol Phys. 1997;37:745-751.

10 Gelber RD, Larson M, Borgelt BB, et al. Equivalence of radiation schedules for the palliative treatment of brain metastases in patients with favorable prognosis. Cancer. 1981;48:1749-1753.

11 Patchell RA, Tibbs PA, Regine WF, et al. Postoperative radiotherapy in the treatment of single metastases to the brain: a randomized trial. JAMA. 1998;280:1485-1489.

12 Young RF, Post EM, King GA. Treatment of spinal epidural metastases: randomized prospective comparison of laminectomy and radiotherapy. J Neurosurg. 1980;53:741-748.

13 Linskey ME. Evidence-based medicine for neurosurgeons: introduction and methodology. Prog Neurol Surg. 2006;19:1-53.

14 Goldsmith BJ, Wara WM, Wilson CB, Larson DA. Postoperative irradiation for subtotally resected meningiomas. A retrospective analysis of 140 patients treated from 1967 to 1990. J Neurosurg. 1994;80:195-201.

15 Maguire PD, Clough R, Friedman AH, Halperin EC. Fractionated external-beam radiation therapy for meningiomas of the cavernous sinus. Int J Radiat Oncol Biol Phys. 1999;44:75-79.

16 Brada M, Rajan B, Traish D, et al. The long-term efficacy of conservative surgery and radiotherapy in the control of pituitary adenomas. Clin Endocrinol. 1993;38:571-578.

17 Rush SC, Newall J. Pituitary adenoma: the efficacy of radiotherapy as the sole treatment. Int J Radiat Oncol Biol Phys. 1989;17:165-169.

18 Rajan B, Ashley S, Gorman C, et al. Craniopharyngioma—long-term results following limited surgery and radiotherapy. Radiother Oncol. 1993;26:1-10.

19 Hetelekidis S, Barnes PD, Tao ML, et al. 20-Year experience in childhood craniopharyngiomas. Int J Radiat Oncol Biol Phys. 1993;27:189-195.

20 Wallner KE, Sheline GE, Pitts LH, et al. Efficacy of irradiation for incompletely excised acoustic neurilemomas. J Neurosurg. 1987;67:858-863.

21 Combs SE, Volk S, Schulz-Ertner D, et al. Management of acoustic neuromas with fractionated stereotactic radiotherapy (FSRT): long-term results in 106 patients treated in a single institution. Int J Radiat Oncol Biol Phys. 2005;63:75-81.

22 Kondziolka D, Patel A, Lunsford LD, et al. Stereotactic radiosurgery plus whole brain radiotherapy versus whole brain radiotherapy alone for patients with multiple brain metastases. Int J Radiat Oncol Biol Phys. 1999;45:427-434.

23 Andrews DW, Scott CB, Sperduto PW, et al. Whole brain radiation therapy with or without stereotactic radiosurgery boost for patients with one to three brain metastases: phase III results of the RTOG 9508 randomised trial. Lancet. 2004;363:1665-1672.

24 Kondziolka D, Lunsford LD, McLaughlin MR, Flickinger JC. Long-term outcomes after radiosurgery for acoustic neuromas. N Engl J Med. 1998;339:1426-1433.

25 Chopra R, Kondziolka D, Niranjan A, et al. Long-term follow-up of acoustic schwannoma radiosurgery with marginal tumor doses of 12 to 13 Gy. Int J Radiat Oncol Biol Phys. 2007;68:845-851.

26 Kondziolka D, Mathieu D, Lunsford LD, et al. Radiosurgery as definitive management of intracranial meningiomas. Neurosurgery. 2008;62:53-58.

27 Linskey ME, Davis SA, Ratanatharathorn V. Relative roles of microsurgery and stereotactic radiosurgery for the treatment of patients with cranial meningiomas: a single surgeon 4-year integrated experience using both modalities. J Neurosurg. 2005;102(suppl):59-70.

28 Sheehan JP, Niranjan A, Sheehan JM, et al. Stereotactic radiosurgery for pituitary adenomas: an intermediate review of its safety, efficacy, and role in the neurosurgical treatment armamentarium. J Neurosurg. 2005;102:678-691.

29 Pouratian N, Sheehan J, Jagannathan J, et al. Gamma knife radiosurgery for medically and surgically refractory prolactinomas. Neurosurgery. 2006;59:255-266.

30 Jagannathan J, Sheehan JP, Pouratian N, et al. Gamma Knife surgery for Cushing’s disease. J Neurosurg. 2007;106:980-987.

31 Pollock BE, Jacob JT, Brown PD, Nippoldt TB. Radiosurgery of growth hormone-producing pituitary adenomas: factors associated with biochemical remission. J Neurosurg. 2007;106:833-838.

32 Kobayashi T, Kida Y, Mori Y, Hasegawa T. Long-term results of Gamma Knife surgery for the treatment of craniopharyngioma in 98 consecutive cases. J Neurosurg. 2005;103(suppl):482-488.

33 Foote RL, Pollock BE, Gorman DA, et al. Glomus jugulare tumor: tumor control and complications after stereotactic radiosurgery. Head Neck. 2002;24:332-338.

34 Varma A, Nathoo N, Neyman G, et al. Gamma knife radiosurgery for glomus jugulare tumors: volumetric analysis in 17 patients. Neurosurgery. 2006;59:1030-1036.

35 Flickinger JC, Kondziolka D, Maitz AH, et al. An analysis of the dose-response for arteriovenous malformation radiosurgery and other factors affecting obliteration. Radiother Oncol. 2002;63:347-354.

36 Ogilvy CS, Stieg PE, Awad I, et al. AHA scientific statement. Recommendations for the management of intracranial arteriovenous malformations: a statement for healthcare professionals from a special writing group of the Stroke Council, American Stroke Association. Stroke. 2001;32:1458-1471.

37 Kondziolka D, Lunsford LD, Flickinger JC, et al. Stereotactic radiosurgery for trigeminal neuralgia: a multiinstitutional study using the gamma unit. J Neurosurg. 1996;84:940-945.

38 Linskey ME, Ratanatharathorn V, Penagaricano J. A prospective cohort study of microvascular decompression and gamma knife stereotactic radiosurgery for patients with trigeminal neuralgia. J Neurosurg. 2008;109:160-172.

39 Ohye C, Shibazaki T, Sato S. Gamma knife thalamotomy for movement disorders: evaluation of the thalamic lesion and clinical results. J Neurosurg. 2005;102(suppl):234-240.

40 Young RF, Jacques S, Mark R, et al. Gamma knife thalamotomy for treatment of tremor: long-term results. J Neurosurg. 2000;93(suppl 3):128-135.

41 Bartolomei F, Hayashi M, Tamura M, et al. Long-term efficacy of gamma knife radiosurgery in mesial temporal lobe epilepsy. Neurology. 2008;70:1658-1663.

42 Quigg M, Barbaro NM. Stereotactic radiosurgery for treatment of epilepsy. Arch Neurol. 2008;65:177-183.

43 Rück C, Karlsson A, Steele JD, et al. Capsulotomy for obsessive-compulsive disorder: long-term follow-up of 25 patients. Arch Gen Psychiatry. 2008;65:914-921.

44 Hasegawa T, Kondziolka D, Hadjipanayis CG, et al. Management of cystic craniopharyngiomas with phosphorus-32 intracavitary irradiation. Neurosurgery. 2004;54:813-820.

45 Julow J, Backlund EO, Lányi F, et al. Long-term results and late complications after intracavitary yttrium-90 colloid irradiation of recurrent cystic craniopharyngiomas. Neurosurgery. 2007;61:288-295.

46 Taasan V, Shapiro B, Taren JA, et al. Phosphorus-32 therapy of cystic grade IV astrocytomas: technique and preliminary application. J Nucl Med. 1985;26:1335-1338.

47 Gabayan AJ, Green SB, Sanan A, et al. GliaSite brachytherapy for treatment of recurrent malignant gliomas: a retrospective multi-institutional analysis. Neurosurgery. 2006;58:701-709.

48 Welsh J, Sanan A, Gabayan AJ, et al. GliaSite brachytherapy boost as part of initial treatment of glioblastoma multiforme: a retrospective multi-institutional pilot study. Int J Radiat Oncol Biol Phys. 2007;68:159-165.

49 Rogers LR, Rock JP, Sills AK, et al. Results of a phase II trial of the GliaSite radiation therapy system for the treatment of newly diagnosed, resected single brain metastases. J Neurosurg. 2006;105:375-384.

50 Roentgen WC. On a new kind of rays (preliminary communication). Translation of a paper read before the Physikalisch-Medicinische Gesellschaft of Würzburg on December 28, 1895. Br J Radiol. 1931;4:32.

51 Becquerel H. Sur les radiations émises par phosphorescence. Comptes Rendus. 1896;122:420-421.

52 Curie P, Curie M, Bémont G. Sur une nouvelle substance fortement radio-active, contenue dans la pechblende. Comptes Rendus. 1898;127:1215-1217.

53 Grubbé EH. Priority in the therapeutic use of X-rays. Radiology. 1933;21:156-162.

54 Perez CA, Brady LW. Overview. In: Perez CA, Brady LW, editors. Principles and Practice of Radiation Oncology. 2nd ed. Philadelphia: JB Lippincott; 1992:1-63.

55 Mayneord W. The Physics of X-Ray Therapy. London: Churchill; 1929.

56 Dubois JB, Ash D. In: Bernier J, editor. Radiation Oncology: A Century of Progress and Achievement: 1895-1995. Brussels: ESTRO Publication; 1995:79-98.

57 Coutard H. Roentgentherapy of epitheliomas of the tonsillar region, hypopharynx and larynx from 1920 to 1926. AJR Am J Roentgenol. 1932;28:313-331.

58 Coutard H. Principles of x-ray therapy of malignant diseases. Lancet. 1934;2:1-8.

59 Gramegna A. Un cas d'acromégalie traité par la radiothérapie. Rev Neurol (Paris). 1909;17:15-17.

60 Hirsch O. Die operative Behandlung von Hypophysis-Tumoren: Nach endonasalen Methoden. Arch Laryngol Rhinol. 1912;26:529.

61 Bailey P. The results of roentgen therapy on brain tumors. AJR Am J Roentgenol. 1925;13:48-53.

62 Bailey P. Further notes on the cerebellar medulloblastomas. The effect of roentgen radiation. Am J Pathol. 1930;6:125-136.

63 Bailey P, Sosman MC, van Dessel A. Roentgen therapy of gliomas of the brain. AJR Am J Roentgenol. 1928;19:203-264.

64 Cushing H. Intracranial Tumors. Springfield, IL: Charles C Thomas; 1932.

65 Towne EB. Treatment of pituitary tumors; role of roentgen-ray and surgery therein. Ann Surg. 1930;91:29-36.

66 Cushing H, Bailey P. Tumors Arising from the Blood Vessels of the Brain. Springfield, IL: Charles C Thomas; 1928.

67 Sachs E. The Diagnosis and Treatment of Brain Tumors. St Louis: CV Mosby; 1931.

68 Chao J, Phillips R, Nickson JJ. Roentgen-ray therapy of cerebral metastases. Cancer. 1954;7:682-689.

69 Chu FC, Hilaris BB. Value of radiation therapy in the management of intracranial metastases. Cancer. 1961;14:577-581.

70 Coolidge WD. A powerful roentgen ray tube with a pure electron discharge. Phys Rev. 1913;2:409-430.

71 Robison RF, Vice-Chair, ASTRO History Committee. History of radiation therapy. The evolution of therapeutic radiology. http://www.rtanswers.org/about/history.htm. Accessed 07/01/08

72 Fry DW, Harvie RB, Mullet LB, Walkinshaw W. Travelling wave linear accelerator for electrons. Nature. 1947;160:351.

73 Fry DW, et al. A travelling wave linear accelerator for 4 MeV electrons. Nature. 1948;162:859.

74 Bernier J, Hall EJ, Giaccia A. Radiation oncology: a century of achievements. Nat Rev Cancer. 2004;4:737-747.

75 American Society for Therapeutic Radiology and Oncology (ASTRO). About us. http://www.astro.org/aboutus/index.asp. Accessed 07/01/08

76 AANS/CNS Joint Section for Stereotactic and Functional Surgery—American Society for Stereotactic and Functional Neurosurgery. http://www.assfn.org/history/societies.asp. Accessed 07/01/08

77 European Organisation for the Research and Treatment of Cancer (EORTC). History. http://www.eortc.be/about/directory2008-2009/01%20background.htm. Accessed 07/01/08

78 Radiation Therapy Oncology Group. http://www.rtog.org/pdf_document/rtog_brochure.pdf. Accessed 07/01/08

79 Shaw E, Scott C, Souhami L, et al. Single dose radiosurgical treatment of recurrent previously irradiated primary brain tumors and brain metastases: final report of RTOG protocol 90-05. Int J Radiat Oncol Biol Phys. 2000;47:291-298.

80 Souhami L, Seiferheld W, Brachman D, et al. Randomized comparison of stereotactic radiosurgery followed by conventional radiotherapy with carmustine to conventional radiotherapy with carmustine for patients with glioblastoma multiforme: report of Radiation Therapy Oncology Group 93-05 protocol. Int J Radiat Oncol Biol Phys. 2004;60:853-860.

81 Gray LH, Conger AD, Ebert M, et al. The concentration of oxygen dissolved in tissues at the time of irradiation as a factor in radiotherapy. Br J Radiol. 1953;26:638-648.

82 Elkind MM, Sutton-Gilbert H, Moses WB, et al. Radiation response of mammalian cells in culture: V. Temperature dependence of the repair of X-ray damage in surviving cells (aerobic and hypoxic). Radiat Res. 1965;25:359-376.

83 Sheline GE. Irradiation injury of the human brain: a review of clinical experience. In: Gilbert H, Kagan AR, editors. Radiation Damage to the Nervous System. New York: Raven Press; 1980:39-52.

84 Sheline GE, Wara WM, Smith V. Therapeutic irradiation and brain injury. Int J Radiat Oncol Biol Phys. 1980;6:1215-1228.

85 Fowler JF. Development of radiobiology for oncology—a personal view. Phys Med Biol. 2006;51:R263-R286.

86 Hall EJ, Astor M, Bedford J, et al. Basic radiobiology. Am J Clin Oncol. 1988;11:220-252.

87 Hall EJ, Giaccia AJ. Radiobiology for the Radiologist. Philadelphia: Lippincott Williams & Wilkins; 2005.

88 Orton CG, Ellis F. A simplification in the use of the NSD concept in practical radiotherapy. Br J Radiol. 1973;46:529-537.

89 Ellis F. Is NSD-TDF useful to radiotherapy? Int J Radiat Oncol Biol Phys. 1985;11:1685-1697.

90 Douglas BG, Fowler J. The effect of multiple small doses of x rays on skin reactions in the mouse and a basic interpretation. Radiat Res. 1976;66:401-426.

91 Barendsen GW. Dose fractionation, dose rate and isoeffect relationships for normal tissue responses. Int J Radiat Oncol Biol Phys. 1982;8:1981-1997.

92 Dale RG. The application of the linear-quadratic dose-effect equation to fractionated and protracted radiotherapy. Br J Radiol. 1985;58:515-528.

93 Fowler J. The linear quadratic formula and progress in fractionated radiotherapy. Br J Radiol. 1989;62:679-694.

94 Kjellberg RN, Hanamura T, Davis KR, et al. Bragg-peak proton-beam therapy for arteriovenous malformations of the brain. N Engl J Med. 1983;309:269-274.

95 Flickinger JC. The integrated logistic formula and prediction of complications from radiosurgery. Int J Radiat Oncol Biol Phys. 1989;17:879-885.

96 Hakanson S. Trigeminal neuralgia treated by the injection of glycerol into the trigeminal cistern. Neurosurgery. 1981;9:638-646.

97 Goldwein JW, Zimmerman R. A simple method for defining target volumes on orthogonal simulation films using magnetic resonance images. Int J Radiat Oncol Biol Phys. 1990;19(suppl 1):245.

98 Leksell L. The stereotaxic method and radiosurgery of the brain. Acta Chir Scand. 1951;102:316-319.

99 Sandeman DR, Patel N, Chandler C, et al. Advances in image-directed neurosurgery: preliminary experience with the ISG Viewing Wand compared with the Leksell G frame. Br J Neurosurg. 1994;8:529-544.

100 Smith KR, Frank KJ, Bucholz RD. The NeuroStation—a highly accurate, minimally invasive solution to frameless stereotactic neurosurgery. Comput Med Imaging Graph. 1994;18:247-256.

101 Boyer AL, Mok EC. A photon dose distribution model employing convolution calculations. Med Phys. 1985;12:169-177.

102 Mackie TR, Scrimger JW, Battista JJ. A convolution method of calculating dose for 15 MV x-rays. Med Phys. 1985;12:188-196.

103 DeMarco JJ, Solberg TD, Smathers JB. A CT-based Monte Carlo simulation tool for dosimetry planning and analysis. Med Phys. 1998;25:1-11.

104 Ma CM, Mok E, Kapur A, et al. Clinical implementation of a Monte Carlo treatment planning system for radiotherapy. Med Phys. 1999;26:2133-2143.

105 Keall PJ, Siebers JV, Arnfield M, et al. Monte Carlo dose calculations for dynamic IMRT treatments. Phys Med Biol. 2001;46:929-941.

106 Rogers DWO. Fifty years of Monte Carlo simulations for medical physics. Phys Med Biol. 2006;51:R287-R301.

107 Chetty IJ, Curran B, Cygler JE, et al. Report of the AAPM Task Group No. 105: Issues associated with clinical implementation of Monte Carlo-based photon and electron external beam treatment planning. Med Phys. 2007;34:4818-4853.

108 Florell RC, Macdonald DR, Irish WD, et al. Selection bias, survival, and brachytherapy for glioma. J Neurosurg. 1992;76:179-183.

109 Laperriere NJ, Leung PM, McKenzie S, et al. Randomized study of brachytherapy in the initial management of patients with malignant astrocytoma. Int J Radiat Oncol Biol Phys. 1998;41:1005-1011.

110 Selker RG, Shapiro WR, Burger P, et al. The Brain Tumor Cooperative Group NIH Trial 87-01: a randomized comparison of surgery, external radiotherapy, and carmustine versus surgery, interstitial radiotherapy boost, external radiation therapy, and carmustine. Neurosurgery. 2002;51:343-355.

111 Chen AM, Chang S, Pouliot J, et al. Phase I trial of gross total resection, permanent iodine-125 brachytherapy, and hyperfractionated radiotherapy for newly diagnosed glioblastoma multiforme. Int J Radiat Oncol Biol Phys. 2007;69:825-830.

112 Leksell L. A stereotactic apparatus for intracerebral surgery. Acta Chir Scand. 1949;99:229-233.

113 Larsson B, Leksell L, Rexed B, et al. The high-energy proton beam as a neurosurgical tool. Nature. 1958;182:1222-1223.

114 Backlund EO. The history and development of radiosurgery. In: Lunsford LD, editor. Stereotactic Radiosurgery Update. New York: Elsevier; 1992:3-9.

115 Lunsford LD, Alexander E III, Loeffler JS. History of radiosurgery. In: Alexander E III, Loeffler JS, Lunsford LD, editors. Stereotactic Radiosurgery. New York: McGraw-Hill; 1993:1-4.

116 Lunsford LD, Flickinger JC, Lindner G, et al. Stereotactic radiosurgery of the brain using the first United States 201 cobalt-60 source gamma knife. Neurosurgery. 1989;24:151-159.

117 Regis J, Tamura M, Guillot C, et al. Radiosurgery of the head and neck with the world’s first fully robotized 192 Cobalt-60 source Leksell Gamma Knife Perfexion in clinical use. http://www.elekta.com/healthcare_international_leksell_gamma_knife_perfexion.php. Accessed 07/01/08

118 Kirn TF. Proton radiotherapy: some perspectives. JAMA. 1988;259:787-788.

119 Fabrikant JI, Lyman JT, Frankel KA. Heavy charged-particle Bragg peak radiosurgery for intracranial vascular disorders. Radiat Res Suppl. 1985;104:S244-S258.

120 Steiner L, Leksell L, Greitz T, Forster DM, et al. Stereotaxic radiosurgery for cerebral arteriovenous malformations. Report of a case. Acta Chir Scand. 1972;138:459-464.

121 Steiner L, Leksell L, Forster DM, et al. Stereotactic radiosurgery in intracranial arterio-venous malformations. Acta Neurochir (Wien). 1974;21(suppl):195-209.

122 Backlund EO, Rähn T, Sarby B, et al. Stereotaxic hypophysectomy by means of 60 Co gamma radiation. Acta Radiol Ther Phys Biol. 1972;11:545-555.

123 Rähn T, Thorén M, Hall K, Backlund EO. Stereotactic radiosurgery in Cushing’s syndrome: acute radiation effects. Surg Neurol. 1980;14:85-92.

124 Leksell L. Cerebral radiosurgery. I. Gammathalamotomy in two cases of intractable pain. Acta Chir Scand. 1968;134:585-595.

125 Leksell L, Backlund EO. [Radiosurgical capsulotomy—a closed surgical method for psychiatric surgery]. Lakartidningen. 1978;75:546-547. (Swedish)

126 Leksell L. Stereotaxic radiosurgery in trigeminal neuralgia. Acta Chir Scand. 1971;137:311-314.

127 Backlund EO, Johansson L, Sarby B. Studies on craniopharyngiomas. II. Treatment by stereotaxis and radiosurgery. Acta Chir Scand. 1972;138:749-759.

128 Leksell L. A note on the treatment of acoustic tumors. Acta Chir Scand. 1971;137:763-765.

129 Steiner L. Stereotactic radiosurgery with the Cobalt-60 gamma unit in the surgical management of intracranial tumors and arteriovenous malformations. In: Schmidek HH, Sweet WH, editors. Operative Neurosurgical Techniques: Indications, Methods and Results. Philadelphia: Saunders; 1988:515-529.

130 Loeffler JS, Alexander E, Shea M, et al. Radiosurgery as part of the initial management of patients with malignant gliomas. J Clin Oncol. 1992;10:1379-1385.

131 Coffey RJ, Lunsford LD, Flickinger JC. The role of radiosurgery in the treatment of malignant brain tumors. Neurosurg Clin N Am. 1992;3:231-244.

132 Sturm V, Kober B, Höver KH, et al. Stereotactic percutaneous single dose irradiation of brain metastases with a linear accelerator. Int J Radiat Oncol Biol Phys. 1987;13:279-282.

133 Loeffler JS, Kooy HM, Wen PY, et al. The treatment of recurrent brain metastases with stereotactic radiosurgery. J Clin Oncol. 1990;8:576-582.

134 Coffey RJ, Flickinger JC, Bissonette DJ, et al. Radiosurgery for solitary brain metastases using the cobalt-60 gamma unit: methods and results in 24 patients. Int J Radiat Oncol Biol Phys. 1991;20:1287-1295.

135 Kihlström L, Karlsson B, Lindquist C, et al. Gamma knife surgery for cerebral metastasis. Acta Neurochir Suppl (Wien). 1991;52:87-89.

136 Adler JR, Cox RS, Kaplan I, et al. Stereotactic radiosurgical treatment of brain metastases. J Neurosurg. 1992;76:444-449.

137 Flickinger JC, Kondziolka D, Lunsford LD, et al. A multi-institutional experience with stereotactic radiosurgery for solitary brain metastasis. Int J Radiat Oncol Biol Phys. 1994;28:797-802.

138 Betti OO, Derechinsky VE. Hyperselective encephalic irradiation with a linear accelerator. Acta Neurochir (Wien). 1984;33(suppl):385-390.

139 Colombo F, Pozza F, Chierego G, et al. Linear accelerator surgery: Current status and perspectives. In: Lunsford LD, editor. Stereotactic Radiosurgery Update. New York: Elsevier; 1992:37-46.

140 Winston KR, Lutz W. Linear accelerator as a neurosurgical tool for stereotactic radiosurgery. Neurosurgery. 1988;22:454-464.

141 Hamilton AJ, Lulu BA. A prototype device for linear accelerator-based extracranial radiosurgery. Acta Neurochir (Wien). 1995;63(suppl):40-43.

142 Hamilton AJ, Lulu BA, Fosmire H, et al. Preliminary clinical experience with linear accelerator-based spinal stereotactic radiosurgery. Neurosurgery. 1995;36:311-319.

143 Hamilton AJ, Lulu BA, Fosmire H, et al. LINAC-based spinal stereotactic radiosurgery. Stereotact Funct Neurosurg. 1996;66:1-9.

144 Murphy MJ, Adler JR Jr, Bodduluri M, et al. Image-guided radiosurgery for the spine and pancreas. Comput Aided Surg. 2000;5:278-288.

145 Ryu SI, Chang SD, Kim DH, et al. Image-guided hypo-fractionated stereotactic radiosurgery to spinal lesions. Neurosurgery. 2001;49:838-846.

146 Ryu S, Yin FF, Rock J, et al. Image-guided and intensity-modulated radiosurgery for patients with spinal metastasis. Cancer. 2003;97:2013-2018.

147 Gerszten PC, Ozhasoglu C, Burton SA, et al. Evaluation of CyberKnife frameless real-time image-guided stereotactic radiosurgery for spinal lesions. Stereotact Funct Neurosurg. 2003;81:84-89.

148 Gerszten PC, Ozhasoglu C, Burton SA, et al. CyberKnife frameless stereotactic radiosurgery for spinal lesions: clinical experience in 125 cases. Neurosurgery. 2004;55:89-98.

149 Eisbruch A. Intensity-modulated radiotherapy: a clinical perspective. Introduction. Semin Radiat Oncol. 2002;12:197-198.

150 Nutting C, Dearnaley DJ, Webb S. Intensity modulated radiation therapy: a clinical review. Br J Radiol. 2000;73:459-469.

151 Webb S. The physical basis of IMRT and inverse planning. Br J Radiol. 2003;76:678-689.

152 Mackie TR, Holmes T, Swerdloff S, et al. Tomotherapy: a new concept for the delivery of dynamic conformal radiotherapy. Med Phys. 1993;20:1709-1719.

153 Yu CX. Intensity modulated arc therapy with dynamic multileaf collimation: an alternative to tomotherapy. Phys Med Biol. 1995;40:1435-1449.

154 Yu CX, Li XA, Ma L, et al. Clinical implementation of intensity-modulated arc therapy. Int J Radiat Oncol Biol Phys. 2002;53:453-463.

155 Hug EB, Loredo LN, Slater JD, et al. Proton radiation therapy for chordomas and chondrosarcomas of the skull base. J Neurosurg. 1999;91:432-439.

156 Munzenrider JE, Liebsch NJ. Proton therapy for tumors of the skull base. Strahlenther Onkol. 1999;175(suppl 2):57-63.

157 Noël G, Habrand JL, Jauffret E, et al. Radiation therapy for chordoma and chondrosarcoma of the skull base and the cervical spine. Prognostic factors and patterns of failure. Strahlenther Onkol. 2003;179:241-248.

158 Mizoe JE, Tsujii H, Hasegawa A, et al. Phase I/II clinical trial of carbon ion radiotherapy for malignant gliomas: combined X-ray radiotherapy, chemotherapy, and carbon ion radiotherapy. Int J Radiat Oncol Biol Phys. 2007;69:390-396.

159 Paganetti H, Niemierko A, Ancukiewicz M, et al. Relative biological effectiveness (RBE) values for proton beam therapy. Int J Radiat Oncol Biol Phys. 2002;53:407-421.

160 Stone RS. Neutron therapy and specific ionization. AJR Am J Roentgenol. 1948;59:771-785.

161 Parker RG, Berry HC, Gerdes AJ, et al. Fast neutron beam radiotherapy of glioblastoma multiforme. AJR Am J Roentgenol. 1976;127:331-335.

162 Griffin TW, Davis R, Laramore G, et al. Fast neutron radiation therapy for glioblastoma multiforme: results of an RTOG study. Am J Clin Oncol. 1983;6:661-667.

163 Locher GL. Biological effects and therapeutic possibilities of neutrons. AJR Am J Roentgenol. 1936;36:1-13.

164 Sweet WH. The use of nuclear disintegrations in the diagnosis and treatment of brain tumor. N Engl J Med. 1951;245:875-878.

165 Sweet WH, Javid M. The possible use of neutron-capturing isotopes such as boron-10 in the treatment of neoplasms, I. Intracranial tumors. J Neurosurg. 1952;9:200-209.

166 Farr LE, Sweet WH, Robertson JS, et al. Neutron capture therapy with boron in the treatment of glioblastoma multiforme. AJR Am J Roentgenol. 1954;71:279-291.

167 Godwin JT, Farr LE, Sweet WH, et al. Pathological study of eight patients with glioblastoma multiforme treated by neutron capture radiation using boron 10. Cancer. 1955;8:601-615.

168 Asbury AK, Ojemann RG, Nielsen SL, et al. Neuropathologic study of fourteen cases of malignant brain tumor treated by Boron-10 slow neutron capture therapy. J Neuropathol Exp Neurol. 1972;31:278-303.

169 Hatanaka H, Kamano S, Amano K, et al. Clinical experience of boron-neutron capture therapy for gliomas: a comparison with conventional chemo-immunoradio-therapy. In: Hatanaka H, editor. Boron Neutron Capture Therapy for Tumors. Niigata: Nishimura Co; 1986:349-378.

170 Barth RF, Soloway AH, Goodman JH, et al. Boron neutron capture therapy of brain tumors: an emerging therapeutic modality. Neurosurgery. 1999;44:433-450.

171 Hatanaka H, Kamano S, Amano K, et al. Clinical experience of boron-neutron capture therapy for gliomas: a comparison with conventional chemo-immunoradio-therapy. In: Hatanaka H, editor. Boron Neutron Capture Therapy for Tumors. Niigata: Nishimura Co; 1986:349-378.

172 Harling OK, Moulin D, Chabeuf JM, et al. On line beam monitoring for boron neutron capture therapy at the MIT Research Reactor. In: Mishima Y, editor. Cancer Neutron Capture Therapy. New York: Plenum Press; 1996:261-269.

173 Coderre JA, Elowitz EH, Chadha M, et al. Boron neutron capture therapy for glioblastoma multiforme using p-boronophenylalanine and epithermal neutrons: trial design and early clinical results. J Neurooncol. 1997;33:141-152.

174 Elowitz EH, Chadha M, Iwai J, et al. A phase I/II trial of boron neutron capture therapy (BNCT) for glioblastoma multiforme using intravenous boronophenylalanine-fructose complex and epithermal neutrons: Early clinical results. In: Larsson B, Crawford J, Weinreich R, editors. Advances in Neutron Capture Therapy: Medicine and Physics, Vol 1. Amsterdam: Elsevier; 1997:56-64.