The Past Decade in Pediatric Education: Progress, Concerns, and Questions

The 1990s ended optimistically for the United States and for pediatric education: the country had accumulated a financial surplus, the pediatrics community had come together to deliberate the Future of Pediatric Education II, and medical education had a new paradigm (the Outcome Project). The past decade has been a tumultuous one, and optimism is now tempered by concerns and questions. Any attempt to discuss medical education needs to take into account the societal forces that affect health care, which is the context for medical education. Of the 10 issues addressed in this article, the first 5 are societal factors with implications for education; the last 5 are issues within medical education, with emphasis on pediatric education. The 10 are discussed individually but clearly interact and overlap. The order in which they are discussed is not intended to convey a rank of importance.

Societal factors with implications for medical education

1. Focus on patient safety

At the close of 1999, the Institute of Medicine (IOM) released its landmark report To Err is Human: Building a Safer Health System [1]. The report received widespread exposure and drew public attention to the need to reduce medical errors and increase patient safety, a need the IOM termed urgent. Public concern about the safety of care rendered by trainees has led to efforts to reduce the effect of fatigue on trainees by limiting the number of consecutive hours they are permitted to care for patients and to increase supervision by faculty.

Implications for education: graduate medical education work hour regulations

Consideration of the effect of resident fatigue on the care of patients largely stems from the death of 18-year-old Libby Zion in 1984. She was admitted to New York Hospital with fever, agitation, and abdominal pain. She did not reveal that she was taking a monoamine oxidase (MAO) inhibitor, phenelzine, prescribed by her psychiatrist. A resident on duty in the early hours of the morning prescribed meperidine to assuage her pain, and the combination of meperidine with the MAO inhibitor resulted in the young woman’s death. Sidney Zion, Libby’s father, a newspaper columnist and attorney, was convinced that the hospital was responsible for the death of his daughter. In the aftermath of Libby Zion’s death, a commission was formed, with Bertrand Bell as its leader. The Bell Commission identified both faculty supervision and resident fatigue from long work hours as issues that needed to be addressed. The resulting New York 405 Rules limited continuous work hours for residents to 24, with an additional 3 hours of nonpatient care activity permitted for education and to ensure adequate transfer of responsibility to another physician [2]. Hospitals that did not adhere to the Rules were subject to a fine, imposed daily until compliance was attained. In October, 2002, 13 years after the implementation of the 405 Rules, 75 of the 118 residency programs (64%) were found to be out of compliance [3], evidence of the inability of the profession to address what was publicly perceived to be a dangerous patient care situation.

In 2001, Representative Conyers proposed legislative action in the form of the Patient and Physician Safety Act, and Senator Corzine sponsored the act in the Senate in 2002. The Accreditation Council for Graduate Medical Education (ACGME) responded by developing work hour regulations that went into effect on July 1, 2003, forestalling federal legislation [4]. Continuous work hours were limited to 24 as in the 405 Rules, but an additional 6 hours were permitted for education and patient transfer, rather than 3. The total number of hours a resident could work per week was capped at 80, averaged over 4 weeks. A minimum of 10 hours was required between shifts (rather than 8 in the 405 Rules), and 1 day (24 continuous hours) free of clinical responsibility had to be provided per week, averaged over 4 weeks [2].
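The limits just described can be read as a small set of checks on a resident's schedule. The following is a minimal sketch, not an ACGME tool: the `Shift` representation (hours counted from the start of a 4-week block), the function name `check_block`, and the violation labels are all hypothetical, and the day-off requirement is omitted for brevity.

```python
from dataclasses import dataclass
from typing import List

# Limits as stated in the text for the 2003 ACGME regulations.
MAX_CONTINUOUS_HOURS = 24   # continuous duty
MAX_EXTRA_HOURS = 6         # added time for education and patient transfer
MAX_WEEKLY_AVERAGE = 80     # hours per week, averaged over 4 weeks
MIN_REST_HOURS = 10         # minimum time off between shifts
WEEKS_IN_BLOCK = 4


@dataclass
class Shift:
    """Hypothetical shift record: hours since the start of the 4-week block."""
    start: float
    end: float


def check_block(shifts: List[Shift]) -> List[str]:
    """Return labels for each limit that a 4-week schedule exceeds."""
    problems: List[str] = []
    ordered = sorted(shifts, key=lambda s: s.start)

    # No shift may exceed 24 hours plus the 6-hour allowance.
    for s in ordered:
        if s.end - s.start > MAX_CONTINUOUS_HOURS + MAX_EXTRA_HOURS:
            problems.append("shift_too_long")

    # At least 10 hours must separate consecutive shifts.
    for prev, nxt in zip(ordered, ordered[1:]):
        if nxt.start - prev.end < MIN_REST_HOURS:
            problems.append("rest_too_short")

    # The 80-hour cap applies to the average over the 4 weeks.
    total_hours = sum(s.end - s.start for s in ordered)
    if total_hours / WEEKS_IN_BLOCK > MAX_WEEKLY_AVERAGE:
        problems.append("weekly_average_exceeded")

    return problems
```

Because the weekly cap is averaged, a single week above 80 hours does not by itself produce a violation in this sketch; only the 4-week mean is tested, which is the flexibility the IOM later recommended removing.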

Although it was not a surprise to medical educators, the restriction in work hours did not result in a sharp decrease in mortality, and patient harm remained “common, with little evidence of widespread improvement” [5]. Some studies reported that residents were getting more sleep but felt no less stressed by the responsibilities of residency [6]; a study of residents in 3 large pediatric residency programs documented increased sleep and decreased rates of resident burnout but no change in medication errors, resident depression, or resident injuries [7]. Three potential reasons were proposed to explain the lack of consistent improvements: (1) work hours were still too long; (2) compliance with the standard was lacking; (3) benefits from reduced fatigue might be offset by worsened continuity of care and inadequate handovers [8].

Evidence began to accumulate that a further reduction of work hours was associated with fewer medical errors and injuries [9,11]. Congress continued to apply pressure. The IOM responded by convening a panel and issuing Resident Duty Hours: Enhancing Sleep, Supervision, and Safety in December, 2008 [12]. Recommendations included keeping the 80 hours per week cap but without the flexibility to average over 4 weeks as in the 2003 ACGME regulations. The key changes were limiting shifts to no more than 16 hours unless 5 hours of protected sleep were provided and not permitting more than 4 night shifts in a row. Also included was the recommendation that interns be supervised by senior residents or faculty, not junior residents.

To assess public opinion regarding resident work hours, a national survey between November, 2009, and January, 2010, polled individuals from a sample designed to reflect the US Census estimates by region, gender, age, and race [13]. Of the 1200 respondents, only 1% approved of shifts lasting more than 24 hours. Respondents believed the maximum shift duration should be 10.9 hours and the maximum work week 50 hours; 81% believed that reducing resident work hours would be “very” or “somewhat” effective in reducing medical errors. A total of 81% believed patients should be informed if a treating resident had been working for more than 24 hours; 80% would then want a different doctor.

In response to the IOM report, the ACGME convened its own panel and issued regulations in 2010 [14]. The ACGME regulations were clearly influenced by the IOM recommendations but included some differences: flexibility to average the 80 hours per week cap over 4 weeks was maintained; the maximum number of night shifts in a row was set at 6, rather than 4. The most dramatic change recommended by the IOM (limiting shifts to a maximum of 16 hours) was adopted for interns, although residents beyond internship were still permitted 24-hour shifts, as in the 2003 regulations, but 4 hours for education and transfer of patient care rather than 6. The new ACGME regulations become effective on July 1, 2011. As of this writing, residency program directors are working to devise schedules that satisfy the new requirements [15].

Concerns and questions

Concern was raised in 2003 that an increased number of transitions of care (variably referred to as handovers, handoffs, signovers, or signouts) might offset any benefit to patients from being cared for by less fatigued residents. In the past 5 years, there has been more literature on the subject of handovers than in the previous 25 years, but the conclusion in 2010 is that “the ‘perfect’ handoff process does not yet exist” [16]. One proposed framework for handovers uses the mnemonic TEAM to identify 4 major domains: time, elements, anticipatory management, and mutual trust [16]. Several mnemonics have been developed to ensure that the necessary elements of handovers are addressed, but they can only ensure structure, not quality. As Philibert notes: “Much of the work to improve patient handoffs has focused on enhancing communication and access to data. Yet the handoff is first and foremost a clinical task that relies on participants’ ability to discern and interpret information about the patient’s condition, including the likelihood that it will worsen and the warning signs that this is occurring. Development of clinical acumen is critical to the emergence of this aspect of handoff skills that allows residents to convey this information when they are in the role as the outgoing physician and to understand it when they are the recipient” [17].

The ACGME Common Program Requirements for 2011 address the need to ensure effective transitions of care (“Sponsoring institutions and programs must ensure and monitor effective, structured handover processes to facilitate both continuity of care and patient safety”) but paradoxically state “Programs must design clinical assignments to minimize the number of transitions in patient care” and reduce work hours, inevitably creating more transitions [18].

It is not yet clear whether the reduced hours will benefit patient care, as suggested by studies and simulation [19], or whether the additional handovers will increase errors. In addition, the effect on education is unknown. Will the reduced hours during internship make the transition from intern to supervising resident more difficult? Might a crisis of coverage prompt more than an exercise in rescheduling, resulting in a creative reengineering of how residents’ time is spent? Concerns about the effect on professionalism and the promotion of a shift mentality are being voiced again, as they were when the 2003 ACGME Requirements were instituted [4].

Implications for education: increased faculty supervision and direct involvement in patient care

Although increased supervision of residents was a major thrust of the Bell Commission and was reiterated by the IOM and the ACGME, the concern was largely lost in the tumult caused by the recommendations for reduced work hours. Direct involvement of teaching faculty in patient care has increased markedly in the past few decades (more because of reimbursement issues than because of concerns for patient safety or the quality improvement movement). In 1996, the Health Care Financing Administration (currently named the Centers for Medicare and Medicaid Services) issued Intermediary Letter 372 (IL-372), which prohibited teaching physicians from submitting bills to Medicare unless they personally provided services to the patient beyond supervising a resident and documented their services in the medical record [20]. Because academic departments have become increasingly dependent on clinical revenue (and test cases showed the resolve of the government to recover Medicare payments when documentation did not confirm the personal provision of services by faculty [21,22]), pressure was brought to bear on teaching physicians to be more directly involved in the care of patients. In recent years, additional factors may have resulted in increased direct care by teaching faculty: reduced resident presence (from restricted work hours and additional responsibilities such as continuity clinic); caps on the number of patients residents are permitted to admit; and the increasing number of hospitalists.

Concerns and questions

As trainee work hours are shortened and teaching physicians assume more direct responsibility for patient care, it becomes more challenging to ensure sufficient decision-making opportunities to prepare interns to become senior residents and to prepare senior residents for independent practice. Residents and faculty differ in their assessments of the degree of independence that should be permitted in various situations [23]. Trying to achieve and maintain a balance between supervision and autonomy “is among the greatest challenges in medical education. The ‘hidden curriculum’ that overvalues physician autonomy must be put aside for an explicit curriculum of safety, quality, and humility. Master clinician-educators at the bedside must provide ‘supervised autonomy’” [24]. Babbott refers to the process as “watching closely at a distance” [25].

A prospective study in 1 large residency program before the 2003 ACGME work hour restrictions showed that the introduction of a hospitalist program was associated with decreases in senior resident reports of their decision-making abilities and autonomy in the first few years of the program; the ratings then returned to the levels recorded before the hospitalist program [26]. The investigators speculate that the dip in ratings might have represented resident dissatisfaction with change, inexperienced hospitalists, or statistical fluctuation; they speculate further that the return to baseline levels may be because of the newer senior residents’ lack of awareness of the previous system or the learning curve of hospitalists. The data do not address the objective outcome of decision making when the senior residents graduated and were expected to function independently. Outcome data are the critical piece regarding preparation for practice, and are lacking.

2. The quality improvement movement

In 2001, 2 years after releasing To Err is Human: Building a Safer Health System [1], the IOM followed up with Crossing the Quality Chasm: A New Health System for the 21st Century [27]. Increasingly, the public, particularly payers, wanted to know what they were getting for their money, and report cards created awareness within health care systems of a higher level of accountability than previously.

Implications for education: ACGME Outcome Project identification of practice-based learning and improvement and systems-based practice as core competencies

The education community was involved in quality improvement at the same time as the IOM reports. In 1999, the ACGME launched the Outcome Project (see number 6, later discussion) [28]. In conjunction with the American Board of Medical Specialties (ABMS) and its 24 Member Boards, the Project delineated 6 General Competencies, among which were 2 new ones: practice-based learning and improvement and systems-based practice. Both relate to quality improvement. The important distinction between the two is that practice-based learning applies to the improvements individuals can make in their own practice; systems-based practice involves making changes in a system of care. The requirement for practice-based learning and improvement was stated as follows: “Residents must be able to investigate and evaluate their patient care practices, appraise and assimilate scientific evidence, and improve their patient care practices. Residents are expected to: analyze practice experience and perform practice-based improvement activities using a systematic methodology; locate, appraise, and assimilate evidence from scientific studies related to their patients’ health problems; obtain and use information about their own population of patients and the larger population from which their patients are drawn; apply knowledge of study designs and statistical methods to the appraisal of clinical studies and other information on diagnostic and therapeutic effectiveness; use information technology to manage information, access online medical information, and support their own education; and facilitate the learning of students and other health care professionals.”

The ABMS and its 24 Member Boards embraced practice-based improvement as part of a transition from recertification to a more continuous maintenance of certification (MOC) process [29]. Part IV of MOC requires that physicians show that they can assess the quality of care they render and improve that care using follow-up measurements. During the past decade, the Accreditation Council for Continuing Medical Education (ACCME) has aligned its goals with those of the ACGME and ABMS MOC to require an assessment of the outcome of continuing medical education (CME) activities (ie, how they improve patient care) [30]. The move is away from random lectures and toward a process that begins with identifying gaps in care and what practitioners need to address such gaps. Evaluation of CME activities previously was generally limited to assessments of the speaker; now, the key question is whether clinical practice changes as a result of the activity.

Concerns and questions

The potential for the movement to improve quality has been shown in multiple individual projects, but it is not yet clear what the effect will be on the quality of care rendered by individual practitioners or the health of the public. Moreover, because report cards and related activities have increased the competitiveness of hospitals regarding quality outcomes, pressures on supervising physicians can affect education at all levels, not just the autonomy of senior residents discussed earlier. For example, formerly, the attending could teach, and the students and residents would do the charting: a trade-off in terms of time; now, the attending is required to document personally and extensively, resulting in less time available for teaching. As stated by Irby and Wilkerson, “Teaching—a time-intensive and largely unsponsored activity—has been slowly crowded out of the schedules of both full-time and voluntary medical school faculty members” [31]. Ludmerer suggests that we are returning to the pre-Flexner era, in which hospitals, rather than universities, directed medical education [32].

3. Advances in technology

Technology has advanced so rapidly that it is difficult to recall the era before the widespread access to the Internet, the ubiquitous use of cell phones, and affordable fast computer processors coupled with memory of sufficient capacity to enable complex activities such as simulation to be commonplace. It is difficult to overestimate the effect that advances in technology have had on daily life.

Implications for education

Electronic health records (EHRs) permit ready review of patient records for multiple purposes, including education and research. For example, practice-based learning is greatly facilitated by easily being able to identify patients with a particular condition and retrieve their records. Drug interactions can be identified as prescriptions are being written and as part of computerized physician order entry (CPOE) in hospitals. Programs that provide information when key words are clicked or that offer assistance with diagnoses can be incorporated in EHRs. This just-in-time education is valuable for patient care, patient safety, and education. Students and residents who receive training in an environment rich in technology resources and then move to institutions with less sophisticated technology perceive the latter as having lower “quality of care in many domains surveyed, including safety, efficiency, and system learning. Of considerable note, this group reported having less confidence in their knowledge about drug interactions and drug management than they did during their training, even months after changing institutions. Additionally, many respondents felt weakened in their ability to prescribe medications safely” [33].

The term e-learning comprises all forms of electronically supported learning and teaching, including distance learning and computer-assisted instruction [34]. Modules and entire courses can be accessed electronically. Relevant examples range from medical school courses that permit students to be widely dispersed, to CME offerings on Web sites such as the American Academy of Pediatrics (AAP) PediaLink, to CME Master’s Programs in Medical Education [35]. The transition from books to electronic access has even permitted many medical libraries to go bookless, a cure for the growing problem of finding space for the ever-increasing number of journals and books. Moreover, live webcasts can be interactive, and telemedicine instruction and consultation are becoming more widespread [36]. Software that can slow or accelerate speech without altering pitch permits learners to process lectures at their own pace, improving both efficiency and effectiveness.

Simulation, of one sort or another, is currently used by all medical schools in the United States and Canada [37]. “The power of simulation,” as noted by Satava, “is that it gives ‘permission to fail’ in a safe environment (the laboratory setting), so students learn from their mistakes. Until now, whenever an error was committed, the patient suffered” [38]. The use of mannequins and software of ever-increasing sophistication permits the acquisition of competence under conditions that are controlled and can be altered to achieve specific learning objectives. The ability to simulate medical crises is particularly important for the training of pediatricians, because crises are rare events in children, making mastery of the skills and decision-making difficult to teach, to learn, and to assess. The difficulty is compounded by the unique features of neonates and children that limit the ability to transfer directly the skills acquired during the care of adults.

Computerized cases combine the benefits of simulation and e-learning. Educational objective 2 (ED-2) of the accrediting body for medical schools, the Liaison Committee on Medical Education (LCME), requires that faculty define “the types of patients and clinical conditions that students must encounter” and that “if a student does not encounter patients with a particular clinical condition (eg, because it is seasonal), the student should be able to remedy the gap by a simulated experience (such as standardized patient experiences, online or paper cases, etc.), or in another clerkship” [39]. Because many common conditions in pediatrics are seasonal (eg, respiratory syncytial virus infection) and others are important but not of such frequency as to ensure a clinical encounter during the brief period of a clerkship, computer-based cases have come into widespread use. In a 2005 survey, 83% of clerkships were using computer simulations, such as CLIPP (Computer-assisted Learning in Pediatrics Program), to meet ED-2 [40]. CLIPP consists of 32 cases designed to encompass the learning objectives of the Council on Medical Student Education in Pediatrics (COMSEP) curriculum [41]. Cases address child care at different ages and such situations as a newborn with respiratory distress, a 6-day-old with jaundice, a 2-week-old with lethargy, a 6-month-old with fever, a 10-month-old with a cough, an 18-month-old with congestion, a 16-month-old with a first seizure, and others. The cases include images, sound (eg, murmurs), and progressively revealed data, with frequent questions posed (and answered) along the way.
In a study of CLIPP use and integration in pediatric clerkships, students spent an average of 17 hours using CLIPP and assessed it as being more valuable and effective than traditional learning methods; “reduction or elimination of redundant teaching activities such as required textbook reading or other unrelated assignments,” to make time for CLIPP, was associated with increased perception of integration, greater satisfaction, and increased perceived learning [42].

Searching the medical literature used to require visiting a medical library and flipping through countless pages of Index Medicus. Now, with programs such as PubMed, the medical literature can be searched anywhere a user has a computer (or handheld device) with Internet access.

Handheld devices with cameras and software enable physical findings to be captured and shared, references to be queried instantly, and communication to occur without having to return to an office. Trainees and practitioners stymied by a rash can capture its appearance digitally and solicit consultation without delay; they can become familiar with drugs their patients are taking, without having to leave the room and find a reference book; they can check for drug interactions before prescribing; and the list goes on. In 1 study, 70% of medical residents reported using a personal digital assistant (PDA) daily, most commonly for referencing medication-prescribing guides, medical textbooks, patient documentation programs, or medical calculators [43]. PDAs have also been shown to increase entries in patient logs by medical students when compared with paper logs, facilitating not only documentation but reflection and learning [44].

Concerns and questions

Implementing an EHR system is associated with decreased physician productivity because of the increased time required to record information. In 1 study spanning 2003 to 2006, the initial decrease in productivity was 25% to 33%, compared with data collected before the introduction of the EHR. In subsequent months, productivity by internists increased but neither pediatricians nor family physicians were able to return to their original productivity levels [45]. It is possible that more current EHR systems may be more user friendly and have less of a deleterious effect on productivity, but that remains to be shown. Many pediatricians are also concerned that attention to an inputting device draws attention away from the patient and family and may have a deleterious effect on rapport and the doctor-patient relationship. A creative solution for both productivity and rapport has been the use of scribes to enter information into the EHR [46]. There may be value not only for physicians but also for the scribes; undergraduates interested in medicine or preclinical medical students can gain exposure to clinical encounters and become familiar with the questions used in the medical history and the findings elicited during physical examination.

The introduction of EHRs and CPOE in teaching hospitals has been accompanied by prohibition of students writing in the medical record in many hospitals [47,48]. The effect on learning and on developing a sense of responsibility for the patient (ownership) is concerning [47].

Although searching the medical literature has never been easier, it requires skill to avoid being overwhelmed by a long list of articles. The result may be reliance on the first page of references, which may not include the most useful article. If the journal article is not readily available online, the abstract may be used as evidence, without recognition of the limitations of the study. An alternative to a skillful search is to obtain a quick response from a generic search engine or online encyclopedia, the accuracy of which cannot always be verified.

Simulation is resource intensive, and the effect of medical school simulation training on later performance has not been well characterized. The Millennium Conference 2007 on Education Research identified studying the effect of simulation as the highest rated priority for education research [49]. Also, the role of computer-assisted instruction needs to be maintained as a complement to interaction with physician-instructors and real patient encounters, rather than as a replacement [42].

A growing concern relates to social networking and professional boundaries [50]. What may be considered humorous when whispered in the hospital may be considered offensive and unprofessional by the public. Students and physicians who use social networking sites may make a distinction between their personal life and their professional life, but such a distinction may not be made by the public, including their patients. The following is a case in point: “A patient’s family member requested a change in resident physician because of questionable behavior exhibited on a resident’s personal MySpace page. Such anecdotal reports continue to increase in frequency” [50].

4. Recognition of a need for more physicians

The public has been made aware that the aging of baby boomers will be associated with a shortage of physicians. By increasing the number of individuals who can access health care, health care reform legislation is expected to exacerbate the looming shortage. The Association of American Medical Colleges (AAMC) Center for Workforce Studies estimated in June, 2010, that the physician shortage in all specialties would reach 91,500 in 2020 and 130,600 in 2025 [51].

Implications for education: new medical schools, increased class sizes

In 2006, the AAMC recommended a 30% increase in US medical school enrollment by 2015 [52]. Compared with the first-year enrollment in 2002 (16,488), this recommendation would require an increase of 4946 students per year (to 21,434). One new medical school was authorized in 2000, after more than 2 decades in which no new allopathic medical schools were established [53]. Between 2006 and 2010, 15 institutions notified the LCME of their intent to start a new medical school; of these, 6 have received preliminary accreditation and 2 have suspended plans. New osteopathic schools have also been established or are in planning stages. In addition, 82% of the 125 medical schools accredited in 2002 have expanded their enrollment, and satellite training sites are now common. The most current projection is that first-year medical school enrollment in 2014 will be 20,281, a 23% increase over 2002. The 30% targeted increase is projected to be reached by 2018 [54].
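The enrollment figures in this paragraph follow from simple percentage arithmetic on the 2002 baseline. The short check below is only an illustration; the variable names are hypothetical, and all of the numbers are taken from the text.

```python
# First-year enrollment figures quoted in the text.
BASELINE_2002 = 16_488
PROJECTED_2014 = 20_281
TARGET_INCREASE = 0.30  # AAMC recommendation of a 30% increase

# Target enrollment and the additional students it implies per year.
target_enrollment = round(BASELINE_2002 * (1 + TARGET_INCREASE))
additional_students = target_enrollment - BASELINE_2002

# Percentage increase represented by the 2014 projection.
projected_increase_pct = round(
    (PROJECTED_2014 - BASELINE_2002) / BASELINE_2002 * 100
)

print(target_enrollment, additional_students, projected_increase_pct)
```

The results agree with the figures cited: a target of 21,434 students, 4946 more than in 2002, and a projected increase of 23% by 2014.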

Meeting the needs of a greatly increased number of medical students requires using facilities outside the medical school and its primary hospital, but there is also good reason to engage these sites. In 1999, the federal Council on Graduate Medical Education (COGME) recommended that “clinical education should occur in settings that are representative of the environment in which graduates will eventually practice. Medical schools and residency training programs should develop or acquire clinical teaching sites that offer the best learning opportunities and the highest standards of clinical practice” [55]. An additional hope of using community and rural sites is that familiarity and comfort in such settings might attract graduates and, thereby, help address the shortage of primary care physicians and the geographic maldistribution of primary care for children [56]. Previous attempts to attract physicians to primary care by simply providing more time in ambulatory settings have shown that dysfunctional settings can discourage trainees from entering primary care [57]. Well-functioning community practices afford the opportunity to expose learners to optimal real-world primary care environments and achieve desired outcomes. Programs designed to increase the rural physician supply through a focused admissions process or extended rural clinical curriculum have been successful, with a rate of graduates practicing in rural areas of 53% to 64% [58]. Students in regional settings have scores on standardized tests at the end of clerkships comparable with students in tertiary settings but rate the experience more highly [59]. Pediatric residents whose continuity experience was in community practices have been shown to be particularly well prepared for primary care practice [60,61].

Concerns and questions

As noted earlier, the LCME requires that clerkship rotations at different sites be comparable and that experiences with types and numbers of patients be equivalent. Technology has been a valuable tool for meeting this requirement, but concerns about adequate space, faculty, community faculty development, staff, time, and finances remain. In the most recent AAMC survey, 73% of medical schools expressed concern with their supply of qualified preceptors, and 58% were concerned with the number of clinical training sites. Twelve schools indicated plans to reduce enrollment, mainly because of fiscal pressures [54]. As Mallon points out, the previous expansion in medical school enrollment in the 1960s and 1970s was fueled by a large influx of federal funding; such financial support “does not appear on today’s horizon” [62].

Concerns are also expressed about maintaining the quality of medical school applicants [63]. One ready source might be the US citizens who would otherwise attend international medical schools (USIMGs). However, these candidates score less well on various measures than graduates of US medical schools and non-US international medical graduates [63]. Of the USIMGs who graduated from medical school between 1980 and 2000 and applied for certification by the Education Commission for Foreign Medical Graduates, 30% had not achieved certification by 2005 [63].

Perhaps most important, increasing the number of medical school graduates does not address the looming shortage of practicing physicians without a corresponding increase in the number of residency positions [64]. Teaching hospitals are already grappling with what reduced work hours for interns will mean for staffing costs in the immediate future. The Affordable Care Act (Health Care Reform) includes $168 million for training more than 500 new primary care physicians by 2015 [65]. Still, in the present financial climate, it is not clear that the number of graduate medical education positions will parallel the increase in medical school graduates (or address a looming physician shortage) any time soon.

5. The current generation

Generations at Work, published in 2000, was one of the seminal publications at the start of the decade that brought differences among generations into pop culture [66]. The investigators characterized 4 generations, which they dubbed the “veterans” (born before 1943), the “boomers” (born between 1943 and 1960), “generation Xers” (born between 1960 and 1980), and “nexters” (born between 1980 and 2000). Other investigators have applied different labels (eg, the “silent generation” instead of “veterans”; “millennials” or “generation Y” instead of “nexters”) and slightly different years of birth (using 20-year increments starting in 1945), but the general concepts have been widely acknowledged.

Implications for education

The subtitle of Generations at Work is worth noting: “Managing the clash of veterans, boomers, Xers, and nexters in your workplace” [66]. Present-day learners are “technology natives” (compared with faculty, who are “technology immigrants”), meaning that they have grown up with technology and are more facile than the members of previous generations who have to adapt to use current technology. Students (and, increasingly, residents) prefer their computer or handheld device to books or the library as a central source of information. Search engines, such as Google, and online sources, including Wikipedia, have largely replaced standard textbooks and the regular review of peer-reviewed journals. The current generation of learners is also characterized as more interested in learning in groups than previous generations.

The attempt to achieve work-life balance is more overt and active. For example, during the past decade, the rate of pediatricians working part-time progressively increased from 15% in 2000 to 20% in 2003 and 23% in 2006, a 53% relative increase in 6 years; in pediatricians younger than 40 years, the rate increased from 18% to 29% [67]. Although there is no evidence that trainees and young pediatricians are less committed to patients or dedicated to their profession, they clearly are more likely to seek and expect shorter, better-defined hours than a generation or 2 ago (as reflected in the percentage who choose part-time work, for example). The night-call burden on practitioners has been ameliorated in many areas by the availability of nurse call-in services and relieved further by the presence of hospitalists on inpatient services. The desire of trainees and young pediatricians for increased control over their schedules is a reflection of generational changes that extend to the community at large.

Concerns and questions

As awareness of work-life balance has heightened, the difficulty of achieving such balance has become more apparent and more readily expressed. In an AAP Annual Survey, fewer than half of the 1020 respondents identified themselves as satisfied with time with their children, time with spouse/partner, time with elderly parents, time for personal interests, or hours worked per week [67]. The quest for balance may account in part for reduced participation in professional activities, such as membership in the AAP, despite the AAP attempt to attract young pediatricians by creating a Section specifically for them in 2000. It seems paradoxical that the current generation is characterized as preferring to learn in groups and work in teams yet chooses not to join organizations or attend meetings as previous generations of pediatricians did; it may be that electronic social networking has efficiently and effectively replaced the social function of professional meetings. The implications for the other function of meetings (CME) are not clear.

Issues within medical education

6. The ACGME outcome project

In 1999, the ACGME acknowledged that accreditation of residency programs was based on structure and process components, what Hodges calls the “tea-steeping” model “in which the student ‘steeps’ in an educational program for a historically determined fixed time period to become a successful practitioner” [68]. The Outcome Project proposed a paradigm shift, designed to move to an assessment of accomplishments [28]. For example, instead of requiring programs simply to have written goals and objectives, the Outcome Project also required programs to assess whether residents were achieving the learning objectives and to provide supportive evidence. The ACGME, in conjunction with the ABMS and its Member Boards, solicited input to identify core competencies expected of all physicians, regardless of specialty. The Outcome Project Advisory Group reviewed the list of 86 statements that were proposed and drew from them 6 General Competencies: patient care; medical knowledge; interpersonal and communication skills; practice-based learning and improvement; professionalism; and systems-based practice.

The Outcome Project was designed to be implemented in 4 phases:

In 2009, the ACGME and the American Board of Pediatrics (ABP) launched the Pediatrics Milestone Project to further define the 6 competencies as they apply in pediatrics and to identify by what point in the continuum of education and training the milestones should be achieved [69]. The third goal of the project is to identify meaningful tools to assess performance.

The 6 Competencies have been accepted not only in graduate medical education but in continuing education as well. In 1996, 3 years before the Outcome Project, the AAMC launched the Medical Student Objectives Project (MSOP) [70]. Rather than specify competencies, the MSOP identified 4 attributes that medical students should possess when they graduate and defined 6 or 7 objectives to guide the attainment of the attributes: physicians must be altruistic; physicians must be knowledgeable; physicians must be skillful; and physicians must be dutiful. That this is a more meaningful approach for undergraduate medical education than the Competencies was reaffirmed in 2004 [71]. However, as medical students look ahead to residency, they become mindful of the ACGME Competencies. The Pediatric Subinternship Curriculum developed for fourth-year medical students by the curriculum task forces of COMSEP and the Association of Pediatric Program Directors (APPD) is organized according to the ACGME Competencies [72]. So it is fair to say that the ACGME influence has extended throughout medical education.

Concerns and questions

During the first few years after the launch of the Outcome Project, residency program directors learned the new language of the ACGME and accommodated by reclassifying observations by Competency rather than by transforming education to assess the outcome of training [73]. One major concern raised about the Competencies is whether the whole equals the sum of its parts; that is, whether achieving each Competency adds up to being a competent physician [73,74]: “Documenting that a graduating resident has mastered, at some predetermined level, the knowledge, skills, and attitudes associated with each of the core competencies, while informative, does not ensure that the individual is a competent physician. Something more is needed: graduating residents must be able to translate and integrate their knowledge, skills, and attitudes so they can perform the complex tasks required to deliver high-quality medical care” [75]. A conceptual framework to bridge the gap, proposed by ten Cate and colleagues [76], is based on the assessment of the “critical activities that constitute a specialty—all the elements that society and experts consider to belong to that profession, the activities of which we would all agree should be only performed by a trained specialist”; ten Cate labels these activities “Entrustable Professional Activities (EPAs).” These EPAs map back to Competencies and may be a fruitful direction for assessing the outcome of training [77].

The critical assessment as to whether training has been completed successfully remains the opinion of the program director, as was the case before the Outcome Project; although this opinion may be better informed than previously, critics point out that there is no standardized examination of performance required to graduate from residencies, only tests of medical knowledge. Assessment of performance in the first months after residency by a senior physician (rather than self-assessment of preparation) would reflect the thrust of the Outcome Project; however, few examples exist [60].

7. Assessment of performance

The ACGME recognized when the Outcome Project was launched that the key to moving from a time-served model to identifying whether training objectives were achieved was improved assessment of performance. Carraccio and Englander [78] proposed 4 requisites of assessment: (1) the tasks being evaluated should be authentic and directly observed; (2) the evaluation should be criterion based rather than norm based; (3) feedback should be given to aid improvement; and (4) a breadth of tools should be used to encompass the 6 Competencies. They also proposed the use of a portfolio containing a reflective component as well as the quantitative assessments of performance.

The infrequency with which students and residents are directly observed by faculty has long been recognized as a problem in medical education. One barrier has been faculty time. An approach to addressing this is the structured clinical observation method, introduced as a “feasible, inexpensive, qualitatively effective method of teaching clinical skills” [79]. Faculty were trained in a 2-hour session to use standardized observation forms to assess history-taking, physical-examination, and information-giving skills on brief segments of encounters. Because the observation process took only 5 minutes, many more direct observations of performance were made in each clinic session than formerly. Although proposed as a teaching tool, faculty also reported an effect on assessment, changing their opinions of two-thirds of the students, generally in a more positive direction. Of the students, 12% reported inconsistencies in the feedback, which could represent the limited generalizability of 5-minute observations. Multistation objective structured clinical examinations can assess a broader array of skills in a controlled environment, but are resource intensive [80]. Trained, standardized patients (SPs) are now widely accepted for both training and assessment; students identify that a particular benefit from SPs is feedback from the patient perspective [81].

To illustrate the importance of criterion-based rather than norm-based assessment, Carraccio and Englander [80] point out: “It makes little difference to have one candidate score better than another, and potentially allow the better-scoring one to pass, when neither reach baseline competency for independent practice”. The absence of benchmarks at various stages of training to determine whether performance is at the level expected for a trainee at that stage remains a major obstacle to applying criterion-based assessment. The Pediatric Milestone Project is attempting to address this [69,82].

For the breadth of tools needed to encompass the 6 Competencies, the ACGME developed a tool box and required programs to specify the tools used to assess performance [83]. Terms like “360° assessment” became part of the medical education lexicon to indicate the inclusion in the evaluation of trainees of multiple individuals who interacted with the trainee, including individuals from various disciplines and patients, in addition to supervising physicians. In 2007 to 2008, the ACGME convened an Advisory Committee on Educational Outcome Assessment to identify high-quality assessment methods for use by all programs in a specialty [84]. The committee considered the following characteristics: reliability; validity; ease of use; resources required; ease of interpretation; and educational effect. Of current assessment methods, 9 with published evidence were selected for review and classification according to the following scheme:

Of the methods reviewed, no class 1 methods were identified.

Concerns and questions

The traditional basis for assessing clinical performance, the observations of supervising faculty, has many shortcomings. Supervising faculty may have few direct observations on which to base their assessment, and the observations in 1 setting or clinical situation may not be representative. Moreover, faculty interpret observations differently, based on several factors, one of which is their level of expectation [85], which may, in turn, be affected by their own degree of clinical skill [86]. Another confounder is the learner’s social skills; affective, nonverbal behaviors have been shown to account for nearly half the variance in medical student grades [87]. Affective skills are clearly important, but the possibility of a halo effect extending to the assessment of other skills and knowledge exists. And although criterion-based assessment is clearly preferable to norm-based assessment, even the latter may be difficult for faculty without years of experience and an extensive mental database from which to draw comparisons and apply an expected level of performance to match the learner’s stage of training.

The reliability and validity of current assessment tools have been questioned [88]. As noted earlier, the ACGME Advisory Committee on Educational Outcome Assessment was unable to identify a class 1 method [84]. In addition to the limitations of the tools is the “inconsistent use and interpretation of such instruments by unskilled faculty” [89]. What is clear is that robust, psychometrically sound, standardized assessment tools and defined benchmarks for performance at different levels of trainees are needed, with faculty development to promote reliable implementation.

8. Faculty development and educational scholarship

Faculty development, as applied to medical educators, includes programs to improve activities such as teaching, evaluation of learners, curriculum building, and educational scholarship. Mentoring, a long-established method by which senior physicians aid the development of junior physicians, is a form of faculty development that has received increased interest during the past decade [90,91]. Organizations have created awards to acknowledge the value of mentorship, including the APPD Robert S. Holm Award and the Academic Pediatric Association (APA) Miller-Sarkin Mentorship Award. In addition to traditional dyadic mentoring is “facilitated peer group mentoring,” in which a group of junior peers works as a team with a senior mentor as facilitator. An example involving the Associate Program Directors in the APPD identifies the importance of a common project/goal and planned, regular communication; as a result of the facilitated peer-group mentoring, “the group participants expanded their efforts, forming a professional network that has resulted in a community of scholars who provide mutual support, rewarding collaboration, opportunities for scholarly projects, as well as offering additional leadership opportunities” [92].

Particular attention has been directed to voluntary community-based faculty, considered a priority in the 1999 COGME report cited earlier [55]. The federal agency that convened COGME, the Bureau of Health Professions in the Health Resources and Services Administration (HRSA), had been awarding Title VII faculty development grants for 2 decades but was concerned that the funding had benefitted only individual programs rather than having a national effect. In response to this concern, the APA developed a program to train individuals from all 10 APA regions to conduct faculty development activities locally, in their regions, and nationally, creating the first national network of faculty development in pediatrics [93]. The HRSA/APA Faculty Development Scholars Program consisted of 3 tracks: community teaching, educational scholarship, and executive leadership. Two cohorts of pediatric faculty, totaling 112 scholars, participated in train-the-trainer workshops for a 2-year period, enabling them to conduct their own faculty development workshops. Even before the last session of the program was convened, the scholars had presented 438 local workshops and 161 regional/national workshops to a total of 7989 participants. HRSA/APA Faculty Development Scholars Program graduates assumed leadership roles in pediatric education, such as the presidency of organizations (COMSEP, APPD), and created the APA Educational Scholars Program (see “Educational scholarship,” later discussion) [94]. Although other national faculty development programs preceded the HRSA/APA Faculty Development Scholars Program (and a new one has been announced as recently as December, 2010 [95]), the HRSA/APA Program is singled out because it remains the only one for pediatric faculty exclusively.

Faculty development programs to improve the quality of teaching have largely been voluntary. Participants express satisfaction and perceive their teaching is better; evidence of improved outcomes (eg, gains by learners) is limited but generally positive [96]. One benefit commonly voiced by participants is the creation of a community, echoed by participants of facilitated peer-group mentoring. During the past decade, medical schools have increasingly built on this idea of community by creating academies of educators. Irby and colleagues [97] describe 4 defining characteristics of academies:

The intent of academies goes beyond developing individual faculty and seeks to “transform the basic culture of academic medicine” and “place education at the center of academic medicine” by creating a critical mass [95]. If academies are to succeed in their mission, the key piece is likely to be educational scholarship.

By the end of the 1990s, educational scholarship was coming into its own in medical education generally [98] and in pediatrics specifically. Examples of the latter are the educational scholarship track in the HRSA/APA National Faculty Development Scholars Program; the revision of ABP criteria for eligibility to be certified in a subspecialty, broadening the definition of “accomplishment in research” to include educational scholarship [99]; and the launch of the APA Educational Scholars Program to “assist pediatric educators in developing themselves as productive, advancing and fulfilled faculty members and to increase the quality, status and visibility of pediatric educators in academia” [94]. The 3-year curriculum of the Educational Scholars Program includes didactic sessions, participation in and review of workshops, and a mentored project. The program is offered to members of the Pediatric Academic Societies, and successful completion is acknowledged with a Certificate of Excellence in Educational Scholarship. Since its inception, the program has awarded Certificates to 31 scholars, and another 40 are currently enrolled.

To distinguish the scholarship of teaching from scholarly teaching, 3 criteria have been proposed: the work must be made public; the work must be available for peer review and critique according to accepted standards; and the work must be able to be reproduced and built on by other scholars [98]. Opportunities to accomplish these 3 standards have increased markedly in the past decade. In addition to journals devoted to medical education, general pediatrics journals have members of their editorial boards seeking articles related to education. The AAMC, in conjunction with the American Dental Education Association, created MedEdPORTAL as “a free online peer-reviewed publication service … designed to promote educational collaboration by facilitating the open exchange of peer-reviewed teaching resources such as tutorials, virtual patients, simulation cases, lab guides, videos, podcasts, assessment tools, etc.” [100]. MedEdPORTAL enables medical educators to disseminate their ideas (and show scholarship) for their educational innovations. The APPD has created a “share warehouse” with similar intent [101]. In addition, APPD has launched a new Longitudinal Education Assessment Research Network (LEARN) to “provide an infrastructure for multi-centered, collaborative research projects and a centralized data collection system” [102]. LEARN is intended to “facilitate programs coming together to address an assessment question, test instruments, test curricula, and ultimately share these products with other programs and help shape future program accreditation.”

Concerns and questions

Research in medical education is complicated and difficult [103]. In the 3 cohorts of fellows who have completed fellowship since the revision of the ABP acceptance of educational scholarship to satisfy the requirement for scholarly activity during fellowship, fewer than 2% of candidates have taken advantage of the opportunity. Attempts at creating a critical mass of educational scholars locally (eg, academies) and nationally (eg, the Educational Scholars Program) may succeed, but only time will tell if the current enthusiasm is sustainable or succumbs to financial pressures and the demand for ever-increasing clinical productivity. The lowest rated priority topic by participants of the Millennium Conference 2007 on Educational Research was “related to the essential elements or impact of faculty development about adult learning theory” [49].

9. Pediatric initiatives to improve education

In 1976, a Task Force on the Future of Pediatric Education was formed with representatives from 10 collaborating societies [104]. In its report, the Task Force recommended, among other things, that residency be extended to 3 years and that more emphasis be placed on behavioral and developmental issues, adolescent medicine, ambulatory care, and working in teams with other health professionals. Nearly a quarter of a century later, a second Task Force was convened, composed again of leaders from various organizations, to meet 3 overarching goals: “To evaluate the 1978 Report with respect to its relevancy to the education of pediatricians and others providing health care to children in the 21st century; to provide direction for the improvement of pediatric education, with special emphasis on workforce requirements, new instructional methodologies, and the financing of pediatric education; and to recommend essential changes in the educational process to meet the current and future health care needs of all infants, children, adolescents, and young adults” [105]. To achieve the 3 goals, 5 Workgroups were formed: the Pediatric Generalists of the Future Workgroup; the Pediatric Subspecialists of the Future Workgroup; the Pediatric Workforce Workgroup; the Financing of Pediatric Education Workgroup; and the Education of the Pediatrician Workgroup. Through surveys, interviews, questionnaires, literature reviews, and deliberations, each Workgroup developed a report. The Task Force addressed the changing pediatric practice environment, recognizing changing demographics, biomedical advances, technological advances, changes in health care delivery, changing patterns of morbidity, and changes in the pediatric workforce. In its final, extensive (149 pages, 99 references) report in 2000, the Task Force issued 34 recommendations and reaffirmed 19 fundamental educational principles, concepts, and recommendations from the original 1978 Future of Pediatric Education report.

The leaders of the Task Force identified that a major limitation of the effect of the original Future of Pediatric Education report was the lack of a body with the responsibility and authority to implement the recommendations. They addressed this as follows: “The FOPE II Task Force recommends that the oversight for implementation of the recommendations in this report be vested in the Federation of Pediatric Organizations (FOPO). The Task Force further suggests that FOPO hire an Executive Director and appropriate staff to coordinate implementation. Additionally, FOPO should consider delegating recommendations from this report to various, appropriate organizations within the pediatric community for implementation and monitoring” [105] (Recommendation 34). FOPO, formed in the late 1980s, consists of 7 member organizations: APA, AAP, ABP, American Pediatric Society, Association of Medical School Pediatric Department Chairs (AMSPDC), APPD, and the Society for Pediatric Research [106]. Representatives of the 7 organizations had been meeting semiannually, largely for information sharing. A few FOPO Statements had been developed, but the process was arduous, because FOPO’s bylaws required all decisions of the group to be unanimous. FOPO accepted the recommendations of the Task Force, including the hiring of an Executive Director (Richard Behrman) and staff. It took the additional step of establishing a Pediatric Education Steering Committee (PESC), comprised of the 7 FOPO organizations plus the Council on Medical Student Education in Pediatrics. Decisions made by the PESC did not have to be unanimous, but approval was required by FOPO, in essence retaining the unanimity standard. As suggested in Recommendation 34, the constituent organizations that comprise FOPO were invited to identify which Task Force recommendations they were positioned to address. Some key areas (such as improving the financing of graduate medical education) were not under the control of any of the organizations.

In 2000, the APA responded to the paradigm shift at the ACGME and the need for pediatric residency programs to have specified goals and objectives to meet the 6 general competencies by initiating a revision of its Educational Guidelines for Residency Training in General Pediatrics [107]. To accomplish the task, the pediatrics community was engaged by the creation of an Advisory Board, with representatives from 7 organizations, and by the inclusion of 50 contributors and reviewers [108]. To enhance the availability and usefulness of the final Guidelines, the format was Web-based, with search capability and the opportunity to download and modify the content for local application. Since the Guidelines were posted on the APA Web site in 2004, 97% of residency programs have used them [109].

In 2005, the Residency Review and Redesign Project (R3P) was begun, with the desire initially to provide the specific blueprint for graduate education that had not been furnished by the FOPE II Task Force [110]. The R3P leadership committee consisted of 13 pediatricians; 34 individuals served as the Project Group. Three colloquia were conducted to inform the process: I. The future of pediatric health care delivery and education; II. The theory and practice of graduate medical education; and III. Challenges for pediatric graduate medical education and how to meet them. Input was also derived from resident, subspecialty fellow, and practitioner surveys. It became apparent that one size does not fit all residency programs and that a single blueprint was more likely to stifle progress than to advance it. After extensive deliberation, the conclusion of the Project was that “children, adolescents, and young adults would be best served by a process of deliberate, careful experimentation across residency programs, which would allow for examination of new ways to organize resident learning experiences and different ways of assessing learning outcomes” [110].

Based on the R3P’s conclusion, the Initiative for Innovation in Pediatric Education (IIPE) was developed [111]. The IIPE Mission Statement mirrors the R3P conclusion: “To initiate, facilitate and oversee innovative change in pediatric residency education through carefully monitored, outcome-directed experimentation.” The aim of the IIPE is “to support high-quality research in medical education through its call for proposals, Innovation/Improvement Plans (I-Plans), and promote the development of evidence-based medical education.” Governance is provided by an Oversight Committee, consisting of 2 representatives each from the ABP, AMSPDC, APPD, and the AAP Section on Medical Students, Residents, and Fellowship Trainees. The Oversight Committee also has 1 liaison representative each from the ABP and the ACGME. The IIPE does not directly fund experimental initiatives in residency programs but provides valuable assistance to programs identified through a competitive application process. In its first year (2009), IIPE approved 4 applications, 2 addressing handovers, 1 assessing structured extended career-centered block time, and 1 creating a rigorous faculty development program. In year 2 (2010), IIPE approved 1 application, a longitudinal curriculum to improve residents’ self-evaluation and self-improvement. A third cycle is planned.

Concerns and questions

The R3P project “challenged the pediatric community to confront the need for change but, importantly, declined to prescribe solutions” [112]. Those words, written by the chair of the R3P Committee in 2010, echo what Abraham Flexner wrote 100 years earlier: “The endeavor to improve medical education through iron-clad prescription of curriculum or hours is a wholly mistaken effort” [113]. The pediatrics community has been engaged in identifying the challenge; the question now is whether it is able to meet the challenge in an era of increased regulation and limited financial resources.

10. Collaboration

FOPE, FOPE II, R3P, and the revision of the APA Educational Guidelines are examples of engaging the pediatrics community under the leadership of one of the pediatric organizations. During the past decade, collaboration among the organizations has begun to flourish. For example, the APPD established a Task Force structure that parallels that of COMSEP, enabling the 2 organizations to work together in areas of common interest: curriculum, evaluation, faculty and professional development, learning technology, and research. One product of this collaboration is the Pediatric Subinternship Curriculum, launched in 2009 [72]. Also in 2009, COMSEP and APPD held their annual meetings together for the first time [73], and a second joint meeting is scheduled for 2013.

In 1998, the ABP established a Program Directors Committee. Through the work of the Committee this past decade, the ABP and APPD have produced a guide for program directors on teaching and assessing professionalism [114] and are working on a general assessment guide.

The APA, COMSEP, and APPD jointly sponsored a Pediatric Educational Excellence Across the Continuum meeting in 2009, in conjunction with the Council of Pediatric Subspecialties (CoPS). The creation of CoPS is itself a case study of collaboration [115]. CoPS addresses the difficulties of communication across pediatric subspecialties and even within individual pediatric subspecialties; for example, within pediatric endocrinology, there is the Lawson Wilkins Pediatric Endocrinology Society, the AAP Section on Endocrinology, and the ABP Pediatric Endocrinology Sub-board Committee. Multiple organizations faced the frustration of communicating with the numerous entities that were not linked to respond with a single voice, such as when the ABP sought to revise its requirements for subspecialty training programs and desired input from subspecialty groups; when the APPD sought to reach out to subspecialty fellowship program directors; when the AAP Resident Section wanted a uniform application process for fellowships; and when FOPO desired to implement its Policy Statement on the Fellowship Application Process in Pediatrics. In 2006, a planning group developed an organizational model and obtained sponsorship from AMSPDC and APPD. The first meeting of CoPS was held in 2006, with 34 participants representing 17 pediatric subspecialties and related specialties and 6 pediatric organizations; 21 potential issues were identified to be addressed by the organization, including several that relate to education, such as fellowship training; workforce and pipeline; faculty development; development and sharing a core curriculum for subspecialty training; development and sharing evaluations of the core competencies; and subspecialty-specific CME courses.

Two other key organizations that have agreed to collaborate on a pediatrics project are AAMC and ACGME. The Pediatric Redesign Pilot Project will integrate the educational path for selected medical students from undergraduate medical education through residency.

Concerns and questions

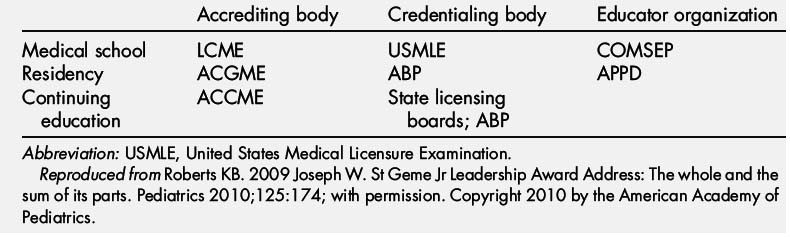

Undergraduate, graduate, and continuing education are often spoken of as a continuum of medical education, yet it is clear that they are more discrete than continuous (Table 1) [116]. We are beginning to see collaboration of the major organizations in undergraduate and graduate education (eg, the Pediatric Redesign Pilot Project). A redefinition of the fourth year of medical school would certainly be fertile ground for collaboration. The COMSEP-APPD Subinternship Curriculum is a start, but there is little consensus about the most beneficial use of the remainder of the fourth year [117].

Table 1 Noncontinuum of education at different levels: different accrediting bodies, credentialing bodies, and educator organizations

Within the pediatrics community, organizations such as COMSEP, APPD, and the APA are composed of volunteers, who frequently refer to their organization as their home. What will be necessary to achieve full synergy is for these homes to become neighborhoods. The annual meetings of the 3 organizations are now separate, but the leaders recognize the value and importance of collaboration, which remains a work in progress.

Now and the future

As noted at the beginning of this article, the past decade has been a time of great activity, but it can be questioned whether major change has occurred. The Carnegie Foundation for the Advancement of Teaching, which sponsored the Flexner Report, has supported a 100-year follow-up study. The authors of the current report characterize the current situation in medical education as follows: “As we reflect on medical education in the United States at the beginning of the 21st century, we find, like our famous forbearer, that it is lacking. Medical training is inflexible, overly long, and not learner-centered. Clinical education for both students and residents excessively emphasizes mastery of facts, inpatient clinical experience, teaching by residents, supervision by clinical faculty who have less and less time to teach, and hospitals with marginal capacity or willingness to support the teaching mission. We observed poor connections between formal knowledge and experiential learning and inadequate attention to patient populations, health care delivery, patient safety, and quality improvement. Learners lack a holistic view of patient experience and poorly understand the broader civic and advocacy roles of physicians. Finally, the pace and commercial nature of health care often impede the inculcation of fundamental values of the profession” [118].

With all the concerns and questions, should we be pessimistic? I, for one, am not. Observing that 15 major US and Canadian reports have been written in the past decade, all calling for significant change in medical education, Skochelak notes: “We are experiencing the 100-year echo of 1901–1910, the decade of medical education reform that culminated in the Carnegie Foundation report by Abraham Flexner” [119], implying that the coming decade may be a momentous one. In her review, Skochelak found “remarkable congruence” in the recommendations of the 15 reports and concludes “We can be assured that we don’t need to keep asking ‘What should we do?’ but rather ‘How do we get there?’” The pediatrics community is getting there by coming together, collaborating, and supporting 2 key projects: the Pediatrics Milestone Project and IIPE. In addition, a third project, the Pediatric Redesign Pilot Project, has the potential to inform efforts to create a true continuum of preparation for a career in pediatrics. The pediatrician leader of all 3 efforts is Carol Carraccio, so it seems fitting to allow her the final word: “This is an exciting time in medical education. Many of the national initiatives are beginning to connect and provide synergy for each other. If we continue on this current trajectory, we will have the opportunity to make transformative change in pediatric medical education and training” [120].

Acknowledgments

The author gratefully acknowledges the assistance of the following valued colleagues: Drs Carol Carraccio, Larrie Greenberg, Stephen Ludwig, Robert Perelman, and Christopher White, for helping to create and consolidate a top ten list; Drs Kevin Johnson and Joseph Lopreiato, for suggesting valuable references; and Drs Gail McGuinness, Daniel Rauch, and Steven Shelov, Ms Jean Bartholomew (ABP), Ms Julie Raymond (AAP), and Mr Kenneth Slaw (AAP), for confirming facts.

References

[1] Institute of Medicine. To err is human: building a safer health system. Washington, DC: Institute of Medicine, National Academies Press; 1999.

[2] IPRO. ACGME-NYS 405 regulations resident work limits comparison guideline. Available at: http://www.docstoc.com/docs/17820605/ACGME-NYS-405-comparison-resident-work-llimits. Accessed December 20, 2010.

[3] T. Johnson. Limitations on residents’ working hours at New York teaching hospitals: a status report. Acad Med. 2003;78:3-8.

[4] I. Philibert. The 2003 common duty hour limits: process, outcome, and lessons learned. J Grad Med Educ. 2009;1:334-337.

[5] C. Landrigan, G. Parry, C. Bones, et al. Temporal trends in rates of patient harm resulting from medical care. N Engl J Med. 2010;363:2124-2134.

[6] K. Ludmerer. Resident burnout: working hours or working environment? J Grad Med Educ. 2009;1:169-171.

[7] C.P. Landrigan, A. Fahrenkopf, D. Lewin, et al. Effects of the Accreditation Council for Graduate Medical Education duty hour limits on sleep, work hours, and safety. Pediatrics. 2008;122:250-258.

[8] K. Volpp, C. Landrigan. Building physician work hour regulations from first principles and best evidence. JAMA. 2008;300:1197-1199.

[9] C. Landrigan, J. Rothschild, J. Cronin, et al. Effect of reducing interns’ work hours on serious medical errors in intensive care units. N Engl J Med. 2004;351:1838-1848.

[10] S. Lockley, J. Cronin, E. Evans, et al. Effect of reducing interns’ weekly work hours on sleep and attentional failures. N Engl J Med. 2004;351:1829-1837.

[11] A. Levine, J. Adusumilli, C. Landrigan. Effects of reducing or eliminating resident work shifts over 16 hours: a systematic review. Sleep. 2010;33(8):1043-1053.

[12] Institute of Medicine. Resident duty hours: enhancing sleep, supervision, and safety. Washington, DC: Institute of Medicine, National Academies Press; 2008.

[13] A. Blum, R. Raiszadeh, S. Shea, et al. US public opinion regarding proposed limits on resident physician work hours. BMC Med. 2010;8:33-44.

[14] Accreditation Council for Graduate Medical Education. Information Related to the ACGME’s Effort to Address Resident Duty Hours and Other Relevant Resource Materials. Available at: http://www.acgme.org/DutyHours/dutyHrs_Index.asp. Accessed December 21, 2010.

[15] A. Burke, J. Rushton, S. Guralnick, et al. Resident work hour requirements: medical educators’ perspectives. Acad Pediatr. 2010;10:369-371.

[16] L. Logio, A. Djuricich. Handoffs in teaching hospitals: situation, background, assessment, and recommendation. Am J Med. 2010;123:563-567.

[17] I. Philibert. Supervision, preoccupation with failure, and the cultural shift in patient handover. J Grad Med Educ. 2010;2:144-145.

[18] Accreditation Council for Graduate Medical Education. Common Program Requirements VIB. Available at: http://www.acgme.org/acWebsite/home/Common_Program_Requirements_07012011.pdf. Accessed December 21, 2010.

[19] J. Gordon, E. Alexander, S. Lockley, et al. Does simulator-based clinical performance correlate with actual hospital behavior? The effect of extended work hours on patient care provided by medical interns. Acad Med. 2010;85:1583-1588.

[20] J. Cohen, R. Dickler. Auditing the Medicare-billing practices of teaching physicians–welcome accountability, unfair approach. N Engl J Med. 1997;336:1317-1320.

[21] Association of American Medical Colleges. AAMC-Physicians at Teaching Hospitals (PATH) audits. Available at: https://www.aamc.org/advocacy/teachphys/73578/teachphys_phys0040.html. Accessed December 21, 2010.

[22] Medicare fraud case settled for $30 million. Healthc Financ Manag. February 1996.

[23] A. Sterkenburg, P. Barach, C. Kalkman, et al. When do supervising physicians decide to entrust residents with unsupervised tasks? Acad Med. 2010;85:1408-1417.

[24] R. Bush. Supervision in medical education: logical fallacies and clear choices. J Grad Med Educ. 2010;2:141-143.

[25] S. Babbott. Watching closely at a distance: key tensions in supervising resident physicians. Acad Med. 2010;85:1399-1400.

[26] C. Landrigan, S. Muret-Wagstaff, V. Chiang, et al. Senior resident autonomy in a pediatric hospitalist system. Arch Pediatr Adolesc Med. 2003;157:206-207.

[27] Institute of Medicine. Crossing the quality chasm: a new health system for the 21st century. Washington, DC: Institute of Medicine, National Academies Press; 2001.

[28] Accreditation Council for Graduate Medical Education. The outcome project. Available at: http://www.acgme.org/outcome/. Accessed December 21, 2010.

[29] American Board of Medical Specialties. Maintenance of certification. Available at: http://www.abms.org/Maintenance_of_Certification/ABMS_MOC.aspx. Accessed December 21, 2010.

[30] Accreditation Council for Continuing Medical Education. 2006 Updated decision-making criteria relevant to the essential areas and elements. Available at: http://www.accme.org/dir_docs/doc_upload/f4ee5075-9574-4231-8876-5e21723c0c82_uploaddocument.pdf. Accessed December 21, 2010. p. 2–3.

[31] D. Irby, L. Wilkerson. Educational innovations in academic medicine and environmental trends. J Gen Intern Med. 2003;18:370-376.

[32] K. Ludmerer. Time to heal. New York: Oxford University Press; 1999.

[33] K. Johnson, D. Chark, Q. Chen, et al. Performing without a net: transitioning away from a health information technology-rich training environment. Acad Med. 2008;83:1179-1186.

[34] J. Ruiz, M. Mintzer, R. Leipzig. The impact of e-learning in medical education. Acad Med. 2006;81:207-212.

[35] R. Baker, K. Lewis. Online master’s degree in education for healthcare professionals: early outcomes of a new program. Med Teach. 2007;29:987-989.

[36] S. Spooner, E. Gotlieb. Steering Committee on Clinical Information Technology and Committee on Medical Liability. American Academy of Pediatrics Technical Report. Telemedicine: pediatric applications. Pediatrics. 2004;113:e639-e643.

[37] M. Anderson, S. Kanter. Medical education in the United States and Canada, 2010. Acad Med. 2010;85(Suppl 9):S2-S18.

[38] R. Satava. The revolution in medical education–the role of simulation. J Grad Med Educ. 2009;1:172-175.

[39] Liaison Committee on Medical Education. Functions and structure of a medical school. Available at: http://www.lcme.org/functions2010jun.pdf. Accessed December 22, 2010. p. 6–7.

[40] S. Li, S. Smith, J. Gigante. A national survey of pediatric clerkship directors’ approaches to meeting the LCME ED-2 requirement for quantified patient criteria for medical students. Teach Learn Med. 2007;19:352-356.

[41] CLIPP. Available at: http://www.med-u.org/virtual_patient_cases/clipp. Accessed December 22, 2010.

[42] N. Berman, L. Fall, S. Smith, et al. Integration strategies for using virtual patients in clinical clerkships. Acad Med. 2009;84:942-949.

[43] M. Tempelhof. Personal digital assistants: a review of current and potential utilization among medical residents. Teach Learn Med. 2009;21:100-104.

[44] K. Ho, H. Lauscher, M. Broudo, et al. The impact of a personal digital assistant (PDA) case log in a medical student clerkship. Teach Learn Med. 2009;21:318-326.

[45] J. Conn. EHRs have varying effects on productivity: UC Davis study. Modern Healthcare. Posted December 17, 2010. Available at: http://www.modernhealthcare.com/article/20101217/NEWS/312179998. Accessed December 22, 2010.

[46] Scribes, EMR please docs, save $600,000. ED Manag. 2009;21:117-118.

[47] E. Friedman, M. Sainte, R. Fallar. Taking note of the perceived value and impact of medical student chart documentation on education and patient care. Acad Med. 2010;85:1440-1444.

[48] M. Mintz, H. Narvarte, K. O’Brien, et al. Use of electronic medical records by physicians and students in academic internal medicine settings. Acad Med. 2009;84:1698-1704.

[49] R.-M. Fincher, C. White, G. Huang, et al. Toward hypothesis-driven medical education research: task force report from the Millennium Conference 2007 on Educational Research. Acad Med. 2010;85:821-828.

[50] J. Farnan, J. Paro, J. Higa, et al. The relationship of digital media and professionalism: it’s complicated. Acad Med. 2009;84:1479-1481.

[51] Association of American Medical Colleges. Physician shortages to worsen without increases in residency training. Available at: http://www.communityoncology.org/wp-content/uploads/AAMC-MD-Shortage.pdf. Accessed December 22, 2010.

[52] Association of American Medical Colleges. AAMC Statement on the Physician Workforce. Available at: https://www.aamc.org/download/55458/data/workforceposition.pdf. Accessed December 22, 2010.

[53] M. Whitcomb. New medical schools in the United States. N Engl J Med. 2010;362:1255-1258.

[54] Association of American Medical Colleges. Results of the 2009 Medical School Enrollment Survey. Center for Workforce Studies. March 2010. Available at: https://www.aamc.org/download/124780/data/enrollment2010.pdf.pdf. Accessed December 22, 2010.

[55] Council on Graduate Medical Education. Thirteenth report: physician education for a changing health care environment. Bethesda (MD): US Department of Health and Human Services, Bureau of Health Professions, Health Resources and Services Administration; 1999.