CHAPTER 15 Pain Management

“We must all die. But that I can save (a person) from days of torture, that is what I feel as my great and ever new privilege. Pain is a more terrible lord of mankind than even death itself” (Albert Schweitzer). The treatment and alleviation of pain is a basic human right that exists regardless of age (Yaster et al., 1997; Schechter et al., 2003). The old “wisdom” that young children neither respond to, nor remember, painful experiences to the same degree that adults do is simply untrue (Taddio and Katz, 2005). Many, if not all, of the nerve pathways essential for the transmission and perception of pain are present and functioning by 24 weeks’ gestation (Lee et al., 2005; Lowery et al., 2007). Furthermore, recent research in newborn animals has revealed that the failure to provide analgesia for pain results in “rewiring” the nerve pathways responsible for pain transmission in the dorsal horn of the spinal cord and results in increased pain perception with future painful insults (Fitzgerald and Beggs, 2001; Pattinson and Fitzgerald, 2004). This confirms human newborn research in which the failure to provide anesthesia or analgesia for newborn circumcision resulted not only in short-term physiologic perturbations but also in longer term behavioral changes, particularly during immunization (Taddio et al., 1995; Maxwell et al., 1987; Taddio and Katz, 2005; Anand et al., 2006).

Providing effective analgesia to infants, preverbal children, adolescents, and the mentally and physically disabled poses unique challenges to those who practice pediatric medicine and surgery. In the past, several studies documented that physicians, nurses, and parents underestimate the amount of pain experienced by children and that they overestimate the risks inherent in the drugs used in the treatment of pain (Schechter et al., 1986; Finley et al., 1996; McGrath and Finley, 1996). This is not at all surprising; the guiding principle of medical practice is to do no harm, primum non nocere. Physicians are taught throughout their training that opioids, the analgesics most commonly prescribed in moderate to severe pain, cause respiratory depression, cardiovascular collapse, depressed levels of consciousness, constipation, nausea, vomiting, and, with repeated use, tolerance and addiction. Less potent analgesics, such as nonsteroidal antiinflammatory drugs (NSAIDs), can also cause problems such as bleeding, liver dysfunction, coagulopathies, and impaired wound and bone healing. Thus, physicians at times prescribe insufficiently potent analgesics, recommend inadequate doses, or use pharmacologically irrational dosing regimens because of their overriding concern that children may be harmed by the use of these drugs. The resulting conundrum often results in inadequate treatment for pain and for painful procedures. On the other hand, the adverse effects of pain and the failure to treat it are rarely discussed. In addition to its impact on neurodevelopment, it is known that unrelieved pain interferes with sleep, leads to fatigue and a sense of helplessness, enhances the stress and inflammatory response, and may result in increased morbidity or mortality (Anand et al., 1987).

Nurses may be wary of physicians’ orders (and patients’ requests) as well. The most common prescription order for potent analgesics is “to give as needed” (pro re nata, or PRN). Thus, the patient must know or remember to ask for pain medication, or the nurse must identify when a patient is in pain. These requirements may not always be met by children in pain. Children younger than 3 years of age may be unable to adequately verbalize when or where they hurt. Alternatively, they may be afraid to report their pain. Many children withdraw or deny their pain if pain relief involves yet another terrifying and painful experience—the intramuscular injection or “shot.” Finally, several studies have documented the inability of nurses, physicians, and parents to correctly identify and treat pain, even in postoperative pediatric patients (McGrath and Finley, 1996; Romsing et al., 1996; Fortier et al., 2009).

Societal fears of opioid addiction and lack of advocacy are also causal factors in the undertreatment of pediatric pain. Unlike pain management in adults, pain management in children often depends on the ability of parents to recognize and assess pain and on their decision to treat or not treat it (Romsing and Walther-Larsen, 1996; Sutters and Miaskowski, 1997). Even in hospitalized patients, most of the pain that children experience is managed by the patient’s parents (Greenberg et al., 1999; Krane, 2008). Parental misconceptions concerning pain assessment and pain management may therefore result in inadequate pain treatment (Romsing and Walther-Larsen, 1996; Fortier et al., 2009). This is particularly true in patients who are too young or too developmentally handicapped to report their pain themselves. Parents may fail to report pain, either because they are unable to assess it or because they are afraid of the consequences of pain therapy. False beliefs about addiction and the proper use of acetaminophen and other analgesics resulted in the failure to provide analgesia to children (Forward et al., 1996; Fortier et al., 2009). In another study, the belief that pain was useful or that repeated doses of analgesics lead to medication not working well resulted in the failure of the parents to provide or ask for prescribed analgesics to treat their children’s pain (Finley et al., 1996). Parental education is therefore essential if children are to be adequately treated for pain. Unfortunately, the ability to educate parents properly about this issue is often limited by insufficient resources, time, and personnel (Greenberg et al., 1999).

Fortunately, the past 25 years have seen an increase in research and interest in pediatric pain management and in the development of pediatric pain services, primarily under the direction of pediatric anesthesiologists (Shapiro et al., 1991; Nelson et al., 2009). Pediatric-pain service teams provide pain management for acute, postoperative, terminal, neuropathic, and chronic pain. This chapter reviews the recent advances in opioid and local anesthetic pharmacology, as well as the various modalities that are useful in the treatment of acute childhood pain.

Pain assessment

The International Association for the Study of Pain (IASP) defines pain as “an unpleasant sensory and emotional experience associated with actual or potential tissue damage, or described in terms of such damage” (Merskey et al., 1979). Pain is a subjective experience; operationally it can be defined as “what the patient says hurts” and exists “when the patient says it does hurt.” Infants, preverbal children, and children between the ages of 2 and 7 (Piaget’s Preoperational Thought stage) may be unable to describe their pain or their subjective experiences. This has led many to conclude incorrectly that these children don’t experience pain in the same way as adults. Clearly, children do not have to know (or be able to express) the meaning of an experience in order to have the experience (Anand and Craig, 1996). On the other hand, because pain is essentially a subjective experience, focusing on the child’s perspective of pain is an indispensable facet of pediatric pain management and an essential element in the specialized study of childhood pain. Indeed, pain assessment and management are interdependent and one is essentially useless without the other. The goal of pain assessment is to provide accurate data about the location and intensity of pain, as well as the effectiveness of measures used to alleviate or abolish it.

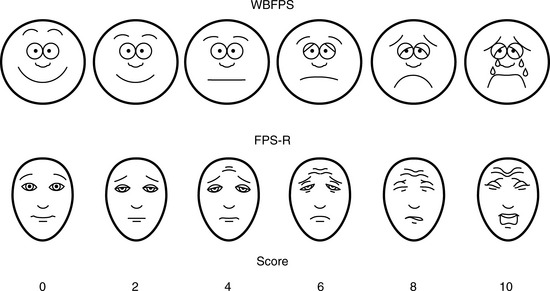

Multiple validated instruments currently exist to measure and assess pain in children of all ages (von Baeyer and Spagrud, 2007; Crellin et al., 2007; Franck et al., 2000). The sensitivity and specificity of these instruments have been widely debated and have resulted in a plethora of studies to validate their reliability and validity. The most commonly used instruments that measure the quality and intensity of pain are “self-report measures.” In older children and adults, the most commonly used self-report instruments are visual analogue scales (VASs) and numerical rating scales (0 = no pain; 10 = worst pain). However, pain intensity or severity can also be measured in children as young as 3 years of age by using pictures or word descriptors to describe pain. Two common examples include the Oucher Scale (developed by Dr. Judy Beyer), a two-part scale with a vertical numerical scale (0–100) on one side and six photographs of a young child on the other, or the Six-Face Pain Scale, first developed by Dr. Donna Wong and later modified by Bieri et al. (Fig. 15-1) (Beyer and Wells, 1989; Wong and Baker, 1988; Beyer et al., 1990). Because of its simplicity, the Six-Face Pain Scale-Revised is commonly used (Hicks et al., 2001; Bieri et al., 1990). Alternatively, color, word-graphic rating scales, and poker chips have been used to assess the intensity of pain in children. One obvious limitation of all of these self-report measures is their inability to be used in cognitively impaired children or in intubated, sedated, and paralyzed patients.

FIGURE 15-1 Six-Face Pain Scale. Top, Original scale developed by Wong et al. Bottom, Modified scale by Bieri et al.

(From Bieri D et al.: The Faces Pain Scale for the self-assessment of the severity of pain experienced by children: development, initial validation, and preliminary investigation for ratio scale properties, Pain 41:139, 1990; Beyer JE et al.: Discordance between self-report and behavioral pain measures in children aged 3-7 years after surgery, J Pain Symptom Manage 5:350, 1990.)

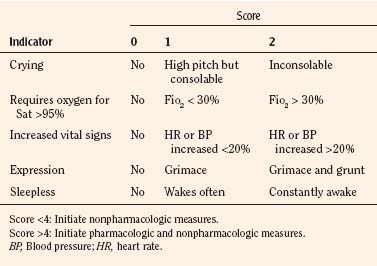

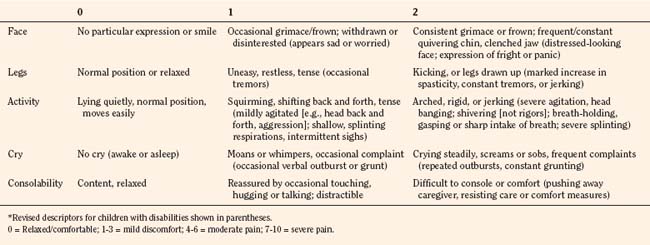

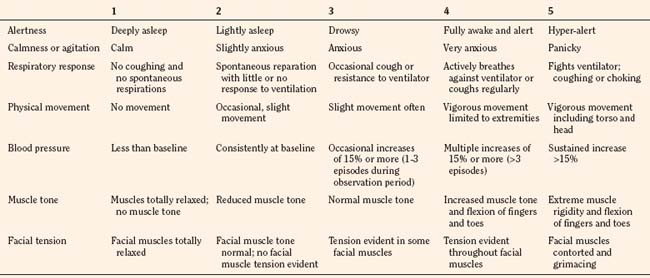

In infants, newborns, and the cognitively impaired, pain has been assessed by measuring physiologic responses to nociceptive stimuli, such as blood pressure and heart rate changes (observational pain scales, OPSs), or by measuring levels of adrenal stress hormones (Krechel and Bildner, 1995). Alternatively, behavioral approaches have used facial expression, body movements, and the intensity and quality of crying as indices of response to nociceptive stimuli. The most appropriate are the Crying, Requires oxygen, Increased vital signs, Expression, and Sleepless (CRIES) score for newborns and the revised Face, Legs, Activity, Cry, and Consolability (FLACC) pain tool for children who have difficulty verbalizing pain (Tables 15-1 and 15-2) (Grunau et al., 1990; Hadjistavropoulos et al., 1994; Voepel-Lewis et al., 2002). Another commonly used pain and sedation tool that uses both behaviors and physiologic parameters is the COMFORT scale, which relies on the measurement of five behavioral variables (alertness, facial tension, muscle tone, agitation, and movement) and three physiologic variables (heart rate, respiration, and blood pressure) (Table 15-3) (Ambuel et al., 1992; Bear and Ward-Smith, 2006). Each is assigned a score ranging from 1 to 5, to give a total score ranging from 8 (deep sedation) to 40 (alert and agitated). A modified COMFORT scale that eliminates physiologic parameters has also been developed (Ista et al., 2005). It is also important to define accurately the location of pain. This is readily accomplished by using either dolls or action figures or by using drawings of body outlines, both front and back. Finally, in the research laboratory, sophisticated new tools such as functional magnetic resonance imaging (MRI) are being used to objectively assess and map pain and its pathways through the central nervous system (CNS) (Tracey and Mantyh, 2007).
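The scoring arithmetic of the COMFORT scale described above can be sketched in a few lines. This is a minimal illustration that assumes only what the text states (eight variables, each scored 1 to 5, summed to a total of 8 to 40); the item keys and the function name `comfort_total` are hypothetical and do not reproduce the wording of the published instrument.

```python
# Illustrative sketch only: item keys are assumed names, not the published wording.
BEHAVIORAL = ("alertness", "facial_tension", "muscle_tone", "agitation", "movement")
PHYSIOLOGIC = ("heart_rate", "respiration", "blood_pressure")
ITEMS = BEHAVIORAL + PHYSIOLOGIC


def comfort_total(scores):
    """Sum the eight item scores (each 1 to 5) into a total between 8 and 40."""
    missing = [name for name in ITEMS if name not in scores]
    if missing:
        raise ValueError(f"missing items: {missing}")
    for name in ITEMS:
        value = scores[name]
        if not isinstance(value, int) or not 1 <= value <= 5:
            raise ValueError(f"{name} must be an integer from 1 to 5, got {value!r}")
    return sum(scores[name] for name in ITEMS)


lowest = comfort_total({name: 1 for name in ITEMS})   # every item at its minimum
highest = comfort_total({name: 5 for name in ITEMS})  # every item at its maximum
print(lowest, highest)
```

The two extremes reproduce the range quoted in the text: 8 when every item is scored 1 and 40 when every item is scored 5.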

Neurophysiology of pain

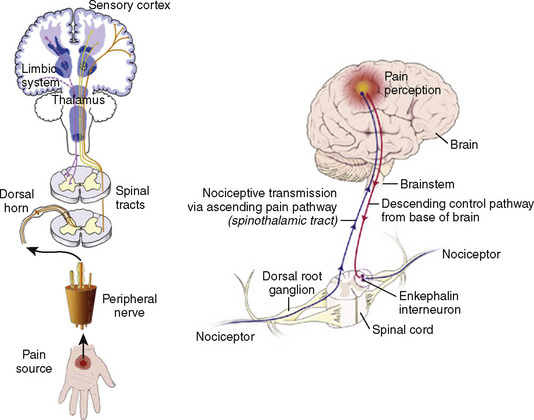

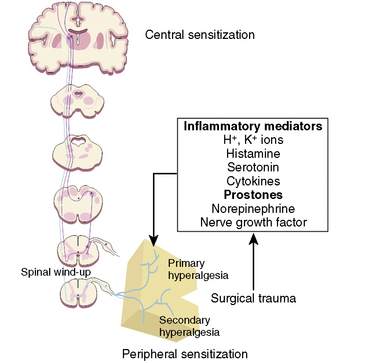

Many if not all of the nerve pathways essential for the transmission, perception, and modulation of pain are present and functioning by 24 weeks of gestation (Fig. 15-2) (Lowery et al., 2007; Lee et al., 2005). Although neural transmission in peripheral nerves is slower in neonates because myelination is incomplete at birth, the major nociceptive neurons in neonates as well as in adults are either unmyelinated C fibers or thinly myelinated Aδ fibers. After an acute injury such as surgical or accidental trauma, inflammatory mediators are released; they lower the pain threshold at the site of injury (primary hyperalgesia) and in the surrounding uninjured tissue (secondary hyperalgesia). These inflammatory mediators, which include hydrogen and potassium ions, histamine, leukotrienes, prostaglandins, cytokines, serotonin (5-HT), bradykinins, and nerve growth factors, make a “sensitizing soup,” which, together with repeated stimuli of the nociceptive fibers, causes decreased excitatory thresholds and results in peripheral sensitization. They are also targets of therapeutic intervention (Fig. 15-3). Secondary effects of peripheral sensitization include hyperalgesia, the increased response to a noxious stimulus, and allodynia, whereby non-nociceptive fibers transmit noxious stimuli, resulting in the sensation of pain from non-noxious stimuli.

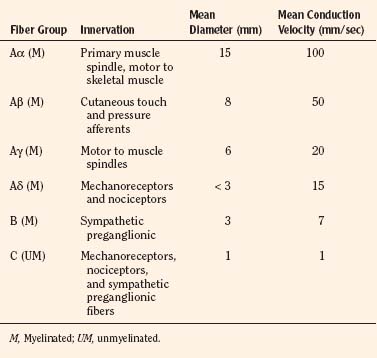

Sensory afferent neurons have a unipolar cell body located in the dorsal root ganglion and are classified by fiber size into three major groups (A, B, C) (Table 15-4). Group A is further subclassified into four subgroups. Sensory fibers that respond to noxious stimulation include small-caliber myelinated (Aδ) or fine unmyelinated C fibers. These fibers originate as free nerve endings that can be characterized by their response to specific stimuli such as pressure, heat, and chemical irritants and arise from epidermal and internal receptive fields, including the periosteum, joints, and viscera. The Aδ nociceptors transmit “first pain,” which is well localized, sharp, and lasts only as long as the original stimulus. The C-fiber, polymodal nociceptors display a slow conduction velocity and respond to mechanothermal and chemical stimuli. This “second pain” is diffuse, persistent, burning, slow to be perceived, and lasts well beyond the termination of the stimulus.

As the primary afferent neurons enter the spinal cord they segregate and occupy a lateral position in the dorsal horn (Fig. 15-4). The Aδ fibers terminate in laminae I, II (substantia gelatinosa), V (nucleus proprius), and X (central canal). The C fibers terminate in laminae I, II, and V, and some enter the dorsal horn through the ventral root. These afferent neurons release one or more excitatory amino acids (e.g., glutamate and aspartate) or peptide neurotransmitters (e.g., substance P, neurokinin A, calcitonin gene-related peptide [CGRP], cholecystokinin, and somatostatin). Second-order neurons that receive these chemical signals integrate the afferent input with facilitatory and inhibitory influences of interneurons and descending neuronal projections. It is this convergence within the dorsal horn that is responsible for much of the processing, amplification, and modulation of pain. Furthermore, the ability to simultaneously process noxious and innocuous stimuli underlies the gate-control theory of pain described by Melzack and Wall (Melzack and Wall, 1965; DeLeo, 2006).

Nociceptive activity in the spinal cord and the ascending spinothalamic, spinoreticular, and spinomesencephalic tracts carry messages to supraspinal centers (e.g., periaqueductal gray, locus coeruleus, hypothalamus, thalamus, and cerebral cortex) where they are modulated and integrated with autonomic, homeostatic, and arousal processes. This modulation, particularly by the endogenous opioids, γ-aminobutyric acid (GABA), and norepinephrine (NE), can either facilitate pain transmission or inhibit it. Modulating pain at peripheral, spinal, and supraspinal sites helps achieve better pain management than targeting only one site and is the underlying principle of treating pain in a multimodal fashion (Fig. 15-3).

Although pain pathways are present at birth, they are often immature (Lee et al., 2005; Lowery et al., 2007). As a result, there are considerable differences in how an infant responds to injury compared with the way an adult does. Within the developing nervous system, inhibitory mechanisms in the dorsal horn of the spinal cord are immature, and inhibition of nociceptive input in the dorsal horn of the spinal cord is less than in the adult. Furthermore, dorsal horn neurons in the newborn have wider receptive fields and lower excitatory thresholds than those in older children (Torsney and Fitzgerald, 2003; Bremner and Fitzgerald, 2008; Fitzgerald and Walker, 2009). Thus, compared with adults, young infants have exaggerated reflex responses to pain. Furthermore, recent research in newborn animals has revealed that the failure to provide analgesia for pain results in “rewiring” the nerve pathways responsible for pain transmission in the dorsal horn of the spinal cord and results in increased pain perception for future painful insults (Fitzgerald and Beggs, 2001; Pattinson and Fitzgerald, 2004). This confirms human newborn research in which the failure to provide anesthesia or analgesia for newborn circumcision resulted not only in short-term physiologic perturbations but also in longer term behavioral changes, particularly during immunization (Maxwell et al., 1987; Taddio et al., 1995; Taddio and Katz, 2005).

Preemptive Analgesia, Preventive Analgesia, and Multimodal Analgesia

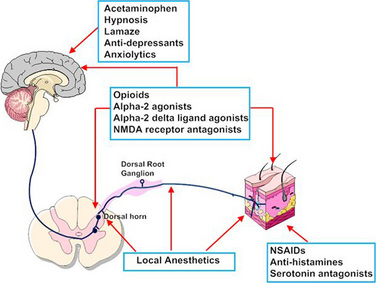

The possibility that pain after surgery might be preemptively prevented or ameliorated by the use of opioids or local anesthetics given preoperatively has been a concept under review (Katz and McCartney, 2002; Moiniche et al., 2002; Oong et al., 2005). Over the past few years the concept of preemptive analgesia has expanded and evolved to include the reduction of nociceptive inputs during and after surgery. This expanded conceptual framework, which includes preoperative, intraoperative, and postoperative analgesia, targets multiple sites along the pain pathway and is referred to as preventive or multimodal analgesia (Ballantyne, 2001; Katz and McCartney, 2002). Indeed, acute pediatric (and adult) pain management is increasingly characterized by a multimodal or “balanced” approach in which smaller doses of opioid and nonopioid analgesics, such as NSAIDs, local anesthetics, NMDA antagonists, and α2-adrenergic agonists, are combined to maximize pain control and minimize drug-induced adverse side effects (Fig. 15-5) (DeLeo, 2006). A multimodal approach also uses nonpharmacologic complementary and alternative medicine therapies. These alternative medical therapies include distraction, guided imagery, hypnosis, relaxation techniques, biofeedback, transcutaneous nerve stimulation, and acupuncture (Rusy and Weisman, 2000). Taking this approach, activation of peripheral nociceptors can be attenuated with the use of NSAIDs, antihistamines, 5-HT antagonists, and local anesthetics (Fig. 15-5). Within the dorsal horn, nociceptive transmission and processing can be further affected by the administration of local anesthetics, neuraxial opioids, α2-adrenergic agonists (e.g., clonidine and dexmedetomidine), and NMDA receptor antagonists (e.g., ketamine and methadone). Within the CNS, pain can be ameliorated by systemic opioids, α2-agonists, anticonvulsants (e.g., gabapentin and pregabalin), pharmacologic therapies (e.g., benzodiazepines, α2-agonists), and nonpharmacologic therapies (e.g., hypnosis, Lamaze, and acupuncture) that reduce anxiety and induce rest and sleep.

Pharmacologic management of pain: the conundrum of “off-label” drug use

Unfortunately, very few studies have evaluated the pharmacokinetic and pharmacodynamic properties of drugs in children (Conroy and Peden, 2001; Katz and Kelly, 1993). Most pharmacokinetic studies are performed using healthy adult volunteers, adult patients who are only minimally ill, or adult patients in a stable phase of a chronic disease. These data are then extrapolated to infants, children, and adolescents, and the medications are prescribed “off-label.” So little pharmacokinetic and pharmacodynamic testing has been performed in children that they are often considered “therapeutic orphans” (Blumer, 1999). In addition, drug formulations designed for adults are often manipulated and altered by practitioners for use in children (e.g., tablets are dissolved to make a liquid formulation, or suppositories are cut in half). The U.S. Congress has enacted the Best Pharmaceuticals for Children Act, the Pediatric Research Equity Act, and the Food and Drug Administration (FDA) Amendments Act to promote standards and requirements for the use and labeling of pediatric drugs (BPCA, 2002; PREA, 2003; FDAAA, 2007).

Pharmacologic management of pain

Nonopioid Analgesics (or Weaker Analgesics with Antipyretic Activity)

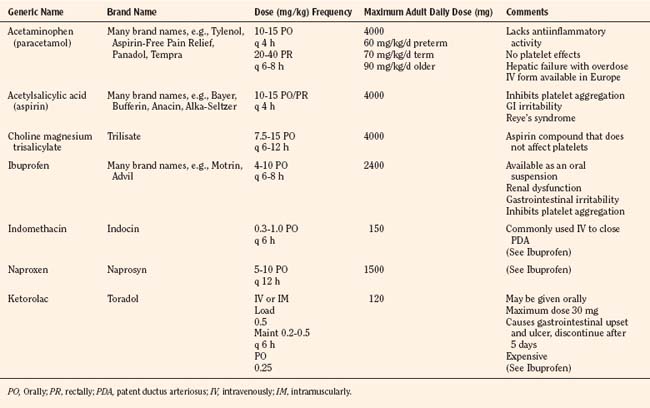

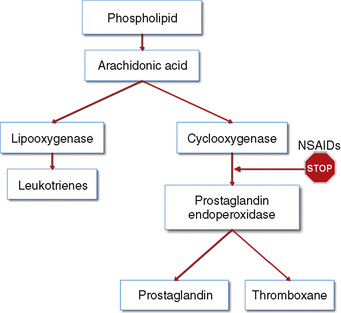

The weaker or milder analgesics with antipyretic activity, of which acetaminophen (paracetamol), salicylate (aspirin), ibuprofen, naproxen, ketoprofen, and diclofenac are common examples, comprise a heterogeneous group of NSAIDs and nonopioid analgesics (Table 15-5) (Agency for Health Care Policy and Research, 1992; Yaster, 1997; Tobias, 2000b; Kokki, 2003). They produce their analgesic, antiinflammatory, antiplatelet, and antipyretic effects primarily by blocking peripheral and central prostaglandin and thromboxane production by inhibiting cyclooxygenase (COX) types 1, 2, and 3 (Fig. 15-6). These metabolites of cyclooxygenase sensitize peripheral nerve endings and vasodilate the blood vessels, causing pain, erythema, and inflammation.

These analgesic agents are administered enterally via the oral or, on occasion, the rectal route and are particularly useful for inflammatory, bony, or rheumatic pain. Parenterally administered agents, such as ketorolac and acetaminophen, are available for use in children in whom the oral or rectal routes of administration are not possible (Murat et al., 2005). Unfortunately, regardless of dose, the nonopioid analgesics are limited by a “ceiling effect” above which pain cannot be relieved by these drugs alone. Because of this, these weaker analgesics are often administered in oral combination forms with opioids such as codeine, oxycodone, or hydrocodone.

Only a few trials have compared the efficacy of these drugs in head to head competition, and in general these studies have shown that there are no major differences in their analgesic effects when appropriate doses of each drug are used. The commonly used NSAIDs, such as ketorolac, diclofenac, ibuprofen, and ketoprofen, have reversible antiplatelet adhesion and aggregation effects that are attributable to the inhibition of thromboxane synthesis (Niemi et al., 1997; Munsterhjelm et al., 2006). As a result, bleeding times are usually slightly increased, but in most instances they remain within normal limits in children with normal coagulation systems. Nevertheless this side effect is of such great concern, particularly in surgical procedures in which even a small amount of bleeding can be catastrophic (e.g., tonsillectomy and neurosurgery), that few clinicians prescribe them even though the evidence supporting increased bleeding is equivocal at best (Moiniche et al., 2003; Cardwell et al., 2005). Finally, many orthopedic surgeons are also concerned about the negative influence of all NSAIDs, both selective and nonselective COX inhibitors, on bone growth and healing (Simon et al., 2002; Einhorn, 2003; Dahners and Mullis, 2004). Thus, most pediatric orthopedic surgeons have recommended that these drugs not be used in their patients in the postoperative period.

The discovery of at least three COX isoenzymes (COX-1, COX-2, and COX-3) has enhanced our knowledge of NSAIDs (Cashman, 1996; Vane and Botting, 1998). The COX isoenzymes share structural and enzymatic similarities, but they are specifically regulated at the molecular level and may be distinguished by their functions. Protective prostaglandins, which preserve the integrity of the stomach lining and maintain normal renal function in a compromised kidney, are synthesized by COX-1 (Vane and Botting, 1998; Moiniche et al., 2003; Levesque et al., 2005). The COX-2 isoenzyme is inducible by proinflammatory cytokines and growth factors, implying a role for COX-2 in both inflammation and control of cell growth. In addition to the induction of COX-2 in inflammatory lesions, it is expressed constitutively in the brain and spinal cord, where it may be involved in nerve transmission, particularly for pain and fever. Prostaglandins made by COX-2 are also important in ovulation and in the birth process (Vane and Botting, 1998; Moiniche et al., 2003; Levesque et al., 2005). The discovery of COX-2 has made possible the design of drugs that reduce inflammation without removing the protective prostaglandins in the stomach and kidneys made by COX-1. In fact, developing a more specific COX-2 inhibitor was a “holy grail” of drug research, because this class of drug was postulated to have all of the desired antiinflammatory and analgesic properties with none of the gastrointestinal and antiplatelet side effects. Unfortunately, the controversy regarding the potential adverse cardiovascular risks of prolonged use of COX-2 inhibitors has dampened much of the enthusiasm for these drugs and has led to the removal of rofecoxib from the market by its manufacturer (Johnsen et al., 2005; Levesque et al., 2005).

Aspirin

Aspirin, one of the oldest and most effective nonopioid analgesics, has been largely abandoned in pediatric practice because of its possible role in Reye’s syndrome, its effects on platelet function, and its gastric irritant properties. Despite these problems, a “sister” compound, choline-magnesium trisalicylate, is still prescribed, particularly in the management of postoperative pain and in the child with cancer. Choline-magnesium trisalicylate is a unique aspirin-like compound that does not bind to platelets and therefore has minimal, if any, effects on platelet function (Yaster, 1997). As a result, it can be prescribed to patients with low platelet counts (cancer patients), to patients with dysfunctional platelets (uremia), and in the postoperative period. It is a convenient drug to give to children because it is available in both a liquid and tablet form and is administered either twice a day or every 6 hours. However, the association of salicylates with Reye’s syndrome limits its use, even though the risk of developing this syndrome postoperatively is extremely low.

Acetaminophen

The most commonly used nonopioid analgesic in pediatric practice remains acetaminophen, although its analgesic effectiveness in the neonate is unclear (Shah et al., 1998; Anderson, 2008). Unlike aspirin and other NSAIDs, acetaminophen produces analgesia centrally as a COX-3 inhibitor and via activation of descending serotonergic pathways (Graham and Scott, 2005; Anderson, 2008). It is also thought to produce analgesia as a cannabinoid agonist and by antagonizing NMDA and substance P in the spinal cord (Bertolini et al., 2006). Acetaminophen is an antipyretic analgesic with minimal, if any, antiinflammatory and antiplatelet activity and takes about 30 minutes to provide effective analgesia. When administered orally in standard doses (10 to 15 mg/kg), acetaminophen is extremely safe and effective and has few serious side effects. When administered rectally, higher doses of 25 to 40 mg/kg are required (Rusy et al., 1995; Birmingham et al., 1997). Because of its known association with fulminant hepatic necrosis, the daily maximum acetaminophen dosages, regardless of formulation or route of delivery, in the preterm infant, full-term infant, and older child are 60, 80, and 90 mg/kg, respectively (Table 15-5). Thus, when acetaminophen is administered rectally, it should be given every 8 hours rather than every 4 hours. Finally, an intravenous formulation of acetaminophen is now available in Europe and can be used in patients in whom the enteral route is unavailable. This formulation has been associated with better analgesia than oral acetaminophen in clinical trials in adult patients and is equally effective and less painful than the prodrug formulation of the drug in children (Murat et al., 2005).
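The relationship between single dose, daily maximum, and dosing interval in the paragraph above is simple arithmetic, and it can be sketched as follows. This is an illustration of the reasoning only and not a dosing tool: the daily maxima are the figures quoted in the text, while the function name `shortest_interval_hours`, the dictionary keys, and the 30 mg/kg rectal example (a value chosen from within the quoted 25 to 40 mg/kg range) are assumptions made for the example.

```python
# Illustrative arithmetic only, using figures quoted in the text; not a dosing tool.
# Daily maximum in mg/kg by age group, as stated in the text (keys are assumed names).
DAILY_MAX_MG_PER_KG = {"preterm_infant": 60, "full_term_infant": 80, "older_child": 90}


def shortest_interval_hours(dose_mg_per_kg, age_group):
    """Return the shortest evenly spaced interval (hours) between doses that
    keeps the 24-hour total at or below the daily maximum for the age group."""
    if dose_mg_per_kg <= 0:
        raise ValueError("dose must be positive")
    cap = DAILY_MAX_MG_PER_KG[age_group]
    doses_per_day = int(cap // dose_mg_per_kg)  # whole doses that fit under the cap
    if doses_per_day < 1:
        raise ValueError("a single dose already exceeds the daily maximum")
    return 24 / doses_per_day


oral = shortest_interval_hours(15, "older_child")    # 90 // 15 = 6 doses per day
rectal = shortest_interval_hours(30, "older_child")  # 90 // 30 = 3 doses per day
print(oral, rectal)
```

Under these assumptions, a 15 mg/kg oral dose fits under the 90 mg/kg cap six times a day (every 4 hours), whereas a 30 mg/kg rectal dose fits only three times a day (every 8 hours), which is the reasoning behind the longer rectal interval stated in the text.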

Opioids

Overview

Over the past 30 years, multiple opioid receptors and subtypes have been identified and classified. There are three primary opioid receptor types, designated mu (μ) (for morphine), kappa (κ), and delta (δ). These receptors are primarily located in the brain and spinal cord, but they also exist peripherally on peripheral nerve cells, immune cells, and other cells (e.g., oocytes) (Sabbe and Yaksh, 1990; Snyder and Pasternak, 2003; Stein and Rosow, 2004). The μ-receptor is further subdivided into several subtypes, such as the μ1 (supraspinal analgesia), μ2 (respiratory depression, inhibition of gastrointestinal motility), and μ3 (antiinflammation, leukocytes), which affect the pharmacologic profiles of different opioids (Pasternak, 2001a, 2001b, 2005; Bonnet et al., 2008). Both endogenous and exogenous agonists and antagonists bind to various opioid receptors.

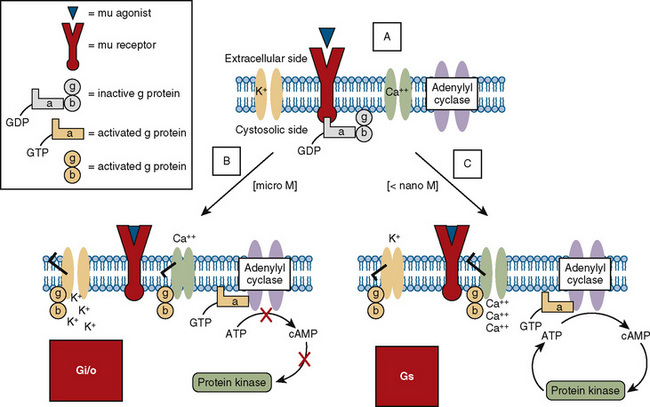

Opioid receptors, which are found anchored to the plasma membrane both presynaptically and postsynaptically, decrease the release of excitatory neurotransmitters from terminals carrying nociceptive stimuli. These receptors belong to the superfamily of G protein–coupled receptors. Their protein structure contains seven transmembrane regions with extracellular loops that confer subtype specificity and intracellular loops that mediate subreceptor phenomena (Stein and Rosow, 2004). These receptors are coupled to guanine nucleotide (GTP)-binding regulatory proteins (G proteins) and regulate transmembrane signaling by regulating adenylate cyclase (and therefore cyclic adenosine monophosphate [cAMP]), various ion channels (K+, Ca2+, Na+) and transport proteins, neuronal nitric oxide synthase, and phospholipase C and A2 (Fig. 15-7) (Standifer and Pasternak, 1997; Maxwell et al., 2005; Pasternak, 2005). Signal transduction from opioid receptors occurs via coupling to inhibitory G proteins (Gi and Go). Analgesic effects are mediated by decreased neuronal excitability, which results from an inwardly rectifying K+ current that hyperpolarizes the neuronal membrane, and by decreased cAMP production, increased nitric oxide synthesis, and increased production of 12-lipoxygenase metabolites. Indeed, synergism between opioids and NSAIDs occurs as a result of the greater availability of arachidonic acid for metabolism by the 12-lipoxygenase pathway after blockade of prostaglandin production by NSAIDs (Vaughan et al., 1997). Some of the unwanted side effects of opioids, such as pruritus, may be the result of opioid binding to stimulatory G proteins (Gs) and may be antagonized by low-dose infusions of naloxone (Fig. 15-7) (Crain and Shen, 1996, 1998; Maxwell et al., 2005).

Pharmacokinetics

For opioids to effectively relieve or prevent most pain, the agonist must reach the receptor in the CNS. There are essentially two ways that this occurs, either via the bloodstream (after intravenous, intramuscular, oral, nasal, transdermal, or mucosal administration) or by direct application into the cerebrospinal fluid (intrathecal or epidural). Agonists administered via the bloodstream must cross the blood-brain barrier, a lipid membrane interface between the endothelial cells of the brain vasculature and the extracellular fluid of the brain, to reach the receptor. Normally, highly lipid-soluble agonists, such as fentanyl, rapidly diffuse across the blood-brain barrier, whereas agonists with limited lipid solubility, such as morphine, have limited brain uptake. This rule, however, does not hold true for patients of all ages. The blood-brain barrier may be immature at birth and is known to be more permeable in neonates to morphine. Indeed, Kupferberg and Way (1963) demonstrated in a classic paper that morphine concentrations were two to four times greater in the brains of younger rats than older rats despite equal blood concentrations. Spinal administration, either intrathecally (subarachnoid) or epidurally, bypasses the blood and directly places an agonist into the cerebrospinal fluid, which bathes the receptor sites in the spinal cord (substantia gelatinosa) and brain. This “back door” to the receptor significantly reduces the amount of agonist needed to relieve pain and to induce opioid side effects such as pruritus, urinary retention, and respiratory depression (Cousins and Mather, 1984; Sabbe and Yaksh, 1990). After spinal administration, opioids are absorbed by the epidural veins and redistributed to the systemic circulation, where they are metabolized and excreted. Hydrophilic agents such as morphine cross the dura more slowly than more lipid-soluble agents such as fentanyl or meperidine. 
This physicochemical property is responsible for the more prolonged duration of action of spinal morphine and its very slow onset of action after epidural administration (Sabbe and Yaksh, 1990).

Biotransformation

Effects of Age and Disease

Morphine, meperidine, methadone, codeine, and fentanyl are biotransformed in the liver before excretion by the kidneys. Many of these reactions are catalyzed in the liver by glucuronidation or microsomal mixed-function oxidases that require the cytochrome P450 system, nicotinamide adenine dinucleotide phosphate (NADPH), and oxygen. The cytochrome P450 system is immature at birth and does not reach adult levels of activity until the first month or two of life. The immaturity of this hepatic enzyme system may explain the prolonged clearance or elimination of some opioids in the first few days to weeks of life (Anderson and Lynn, 2009). On the other hand, the P450 system can be induced by various drugs (such as phenobarbital) and substrates, and once the infant is born, it matures regardless of gestational age. Thus, it is the age from birth and not the duration of gestation that determines how premature and full-term infants metabolize drugs.

Morphine is primarily glucuronidated into two forms: an inactive form, morphine-3-glucuronide, and an active form, morphine-6-glucuronide. Both glucuronides are excreted by the kidney. In patients with renal failure, morphine-6-glucuronide can accumulate and cause toxic side effects including respiratory depression (Murtagh et al., 2007; Lotsch, 2005). This is important to consider not only when prescribing morphine but when administering other opioids that are metabolized into morphine, such as codeine.

Distribution and Clearance

The pharmacokinetics of morphine have been extensively studied in adults, older children, and in premature and full-term newborns (Lynn et al., 1991, 1993). After an intravenous bolus, 30% of morphine is protein-bound in the adult vs. only 20% in the newborn. This increase in unbound (“free”) morphine allows a greater proportion of active drug to penetrate the brain. This may in part explain the observation of Way et al. (1965) of increased brain levels of morphine in the newborn and its more profound respiratory depressant effects. The elimination half-life of morphine in adults and older children is 3 to 4 hours and is consistent with its duration of analgesic action. The half-life of elimination (t½β) is more than twice as long in newborns younger than 1 week of age as in older children and adults and is even longer in premature infants (Lynn and Slattery, 1987). Clearance is similarly decreased in the newborn compared with the older child and adult. Thus, infants younger than 1 month of age attain higher serum levels that decline more slowly than those of older children and adults. This may also account for the increased respiratory depression associated with morphine in this age group. Interestingly, the t½β and clearance of morphine in children older than 2 months of age are similar to adult values; thus, the hesitancy in prescribing and administering morphine in children younger than 1 year of age may not always be warranted. On the other hand, the use of any opioids in children who were born prematurely (fewer than 37 weeks’ gestation) and are less than 52 to 60 weeks’ postconceptional age or who were born at term and are younger than 2 months of age must be restricted to a monitored setting.

Based on its relatively short half-life (3 to 4 hours), one would expect older children and adults to require morphine supplementation every 2 to 3 hours when being treated for pain, particularly if the morphine is administered intravenously. This has led to the use of continuous infusion regimens of morphine and patient-controlled analgesia (see related section) or, alternatively, administration of longer-acting agonists such as methadone. When administered by continuous infusion, the duration of action of opioids that are rapidly redistributed (such as fentanyl, sufentanil, and alfentanil) and are thought to be short-acting may be longer than would be predicted simply by their pharmacokinetics (see Chapter 7, Pharmacology of Pediatric Anesthesia). An exception to this is remifentanil, a μ-opioid receptor agonist with unique pharmacokinetic properties (Burkle et al., 1996).
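The link between half-life and dosing interval can be illustrated with simple arithmetic. The following is a minimal sketch, assuming a single-compartment model with first-order elimination (a simplification that the text does not state and that ignores the distribution phase). The function names are hypothetical and the output is illustrative arithmetic only.

```python
import math


def elimination_rate_constant(half_life_hours: float) -> float:
    """First-order rate constant k (per hour) for a given elimination half-life."""
    if half_life_hours <= 0:
        raise ValueError("half-life must be positive")
    return math.log(2) / half_life_hours


def fraction_remaining(elapsed_hours: float, half_life_hours: float) -> float:
    """Fraction of the initial plasma concentration left after elapsed_hours.

    Assumes one compartment and first-order elimination (an assumption of
    this sketch, not a statement from the chapter).
    """
    k = elimination_rate_constant(half_life_hours)
    return math.exp(-k * elapsed_hours)


if __name__ == "__main__":
    # Half-lives of 3 and 4 hours are the values quoted in the text.
    for half_life in (3.0, 4.0):
        for elapsed in (2.0, 3.0, 4.0):
            pct = 100 * fraction_remaining(elapsed, half_life)
            print(f"t1/2 = {half_life} h, {elapsed} h after bolus: {pct:.0f}% remains")
```

Under these assumptions, half of the initial concentration remains after one half-life and one quarter after two, which is why bolus dosing at intervals longer than the half-life produces marked fluctuations in plasma level.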

The pharmacokinetics of remifentanil are characterized by a small volume of distribution, rapid clearance, and low interpatient variability, compared with other intravenous anesthetic and analgesic agents. The drug has a rapid onset of action (the half-time for equilibration between blood and the effect compartment is 1.3 minutes) and a short context-sensitive half-life (3 to 5 minutes) (Bailey, 2002; Welzing and Roth, 2006). This latter property is attributable to hydrolytic metabolism of the compound by nonspecific tissue and plasma esterases. Virtually all (99.8%) of an administered remifentanil dose is eliminated during the half-life of redistribution t½α (0.9 minutes) and the t½β (6.3 minutes). The pharmacokinetics of remifentanil suggest that within 10 minutes of starting an infusion, remifentanil blood levels will have nearly reached steady state. Thus, changing the infusion rate of remifentanil produces rapid changes in drug effect. The rapid metabolism of remifentanil and its small volume of distribution mean that remifentanil does not accumulate. Discontinuing the drug rapidly terminates its effects and has significant intraoperative implications. When remifentanil is administered intraoperatively as part of a balanced or primary opioid general anesthetic, some patients have reportedly awakened in severe pain. This may result from inadequate loading of a longer-acting opioid, as well as from “opioid-induced hyperalgesia,” a paradoxical process by which opioid administration, even for short periods of time, increases the sensitivity to pain and worsens pain when the opioid is discontinued (Crawford et al., 1992; Chu et al., 2008). Finally, remifentanil may be a reasonable alternative to inhaled general anesthetics in newborn infants undergoing surgery, because it only briefly interferes with the control of breathing and because this effect is terminated shortly after discontinuing the drug (Davis et al., 2001; Galinkin et al., 2001).

Commonly Used Oral Opioids

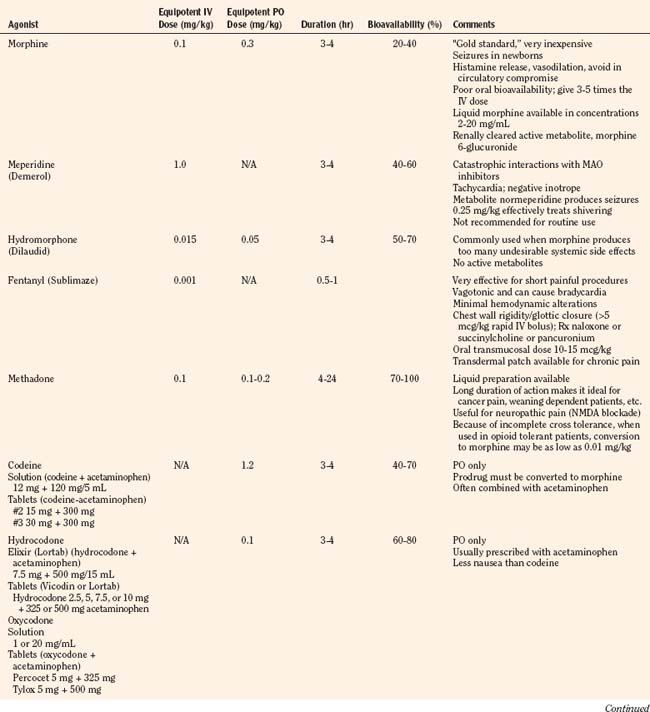

Codeine, oxycodone (the opioid in Tylox and Percocet), and hydrocodone (the opioid in Vicodin and Lortab) are opioids that are commonly used to treat pain in children and adults, and are quite useful when making the transition from parenteral to enteral analgesia (Table 15-6). Methadone and sustained release formulations of morphine, oxycodone, oxymorphone, and hydromorphone are commonly used to treat chronic medical pain (e.g., cancer) and in postoperative surgical or trauma patients with long recuperative times (e.g., pectus excavatum or posterior spine surgery). Codeine, oxycodone, and hydrocodone are most commonly administered in the oral form, often in combination with acetaminophen. Although the combination drugs are convenient, and acetaminophen potentiates the analgesia produced by codeine—allowing the practitioner to use less opioid to achieve satisfactory analgesia—the combination of drugs significantly increases the risk of acetaminophen toxicity. Acetaminophen toxicity may result from a single toxic dose, from repeated ingestion of large doses of acetaminophen (e.g., in adults 7.5 to 10 g/day for 1 to 2 days, in children 60 to 420 mg/kg per day for 1 to 42 days), or from chronic ingestion. However, hepatotoxicity can also occur inadvertently when patients with poorly controlled pain increase the number of combination tablets they need to control their pain or when they are receiving more than one source of acetaminophen (Heubi et al., 1998). The latter occurs because so many prescription and over-the-counter drug products contain acetaminophen (e.g., cold remedies). Because of this risk, the preferred method is to prescribe opioids and acetaminophen (or ibuprofen) separately.

In equipotent doses, oral analgesics have similar effects and side effects including analgesia, sedation, cough suppression, pruritus, nausea, vomiting, constipation, and respiratory depression (Table 15-6). Yet the responses of patients to individual opioids can vary markedly, even among these μ-opioid agonists (Pasternak, 2001a, 2005). Understanding this variability greatly enhances the ability to treat patients appropriately. Codeine is a case in point; although readily available, it is very nauseating, and many patients claim they are allergic to it because it so commonly induces vomiting. On the other hand, some differences may be based more on folklore than on reality. Many physicians falsely believe that meperidine has less of an effect on the sphincter of Oddi than other opioids and therefore prescribe it for patients with gallbladder disease. In fact, meperidine offers no advantage over other opioids and has a serious disadvantage, namely catastrophic interactions with monoamine oxidase (MAO) inhibitors.

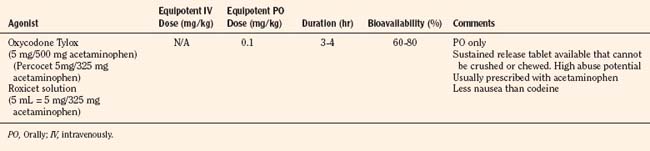

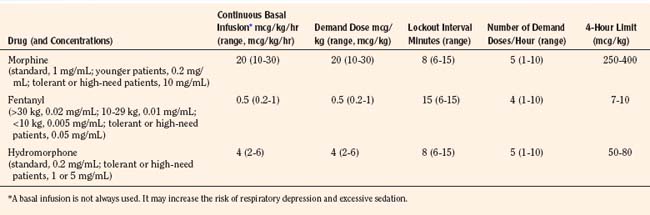

Patient-Controlled Analgesia

Historically, pain medications have been administered on a demand or PRN basis (Krane, 2008). When drugs are given PRN, the patient or caregiver must recognize that pain exists, summon a nurse, and await the preparation and administration of the analgesic. Even in the best of circumstances, there is a delay between the patient’s request and the provider’s response (Krane, 2008). Around-the-clock administration of analgesics at intervals based on population pharmacokinetics (e.g., every 4 hours) is not always effective because there are enormous individual variations in pain perception and opioid metabolism. Knowledge of opioid pharmacokinetics suggests that intravenous boluses of intermediate-acting opioids such as morphine may be needed as often as every 1 to 2 hours in order to avoid marked fluctuations in plasma drug levels, but generally they are ordered no more frequently than every 4 hours. One way to avoid these fluctuations is via continuous intravenous opioid infusions. This approach provides steady analgesic levels and has been used with great safety and efficacy in children; however, because neither the perception nor the intensity of pain is constant, continuous opioid infusions do not adequately treat pain in all patients (Lynn et al., 2000). For example, a postoperative patient may be very comfortable resting in bed and may require little adjustment in opioid dosing. This same patient may experience excruciating pain when coughing, voiding, or getting out of bed. Receiving the same dose of opioid in both instances may result in either oversedation or undertreatment. Thus, rational pain management requires some form of titration to effect whenever any opioid is administered. In order to give patients some measure of control over their pain therapy, analgesia on demand or patient-controlled analgesia (PCA) devices were developed (Berde et al., 1991; Yaster et al., 1997).
These are microprocessor driven pumps that the patient controls to self-administer intermittent, predetermined, small doses of opioid whenever a need for more pain relief is felt. The opioid, usually morphine, hydromorphone, or fentanyl is administered either intravenously or subcutaneously (Table 15-7). The dosage of opioid, number of demand doses (“boluses”) per hour, and the time interval between boluses (the “lock-out period”) are programmed into the equipment by the pain-service physician to allow maximum patient flexibility and sense of control with minimal risk of overdosage. Generally, because older patients know that if they have severe pain they can obtain relief immediately, many prefer dosing regimens that result in mild to moderate pain in exchange for fewer side effects such as nausea or pruritus. Morphine is the most commonly prescribed opioid. Typically it is prescribed at a bolus dose of 20 mcg/kg, at a rate of up to 5 boluses/hour, and with a lock-out interval between each bolus of 6 to 8 minutes (Table 15-7). Variations include larger boluses (30 to 50 mcg/kg) and shorter time intervals (5 minutes).
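The way the three programmed parameters interact can be sketched as arithmetic. The following is a minimal sketch using the typical morphine settings quoted above (20 mcg/kg bolus, up to 5 boluses/hour, 6-minute lock-out); the class and function names are hypothetical, the 25-kg weight is an arbitrary example, and nothing here represents the logic of any actual pump.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class PcaSettings:
    """Hypothetical container for the three programmed PCA parameters."""
    bolus_mcg_per_kg: float
    max_boluses_per_hour: int
    lockout_minutes: float


def bolus_dose_mcg(settings: PcaSettings, weight_kg: float) -> float:
    """Size of one demand dose in micrograms."""
    return settings.bolus_mcg_per_kg * weight_kg


def max_boluses_allowed(settings: PcaSettings) -> int:
    """Boluses deliverable in any one hour.

    The lock-out alone permits at most ceil(60 / lockout) doses within a
    60-minute window; the programmed hourly cap may be lower still.
    """
    by_lockout = math.ceil(60 / settings.lockout_minutes)
    return min(settings.max_boluses_per_hour, by_lockout)


def max_hourly_demand_mcg(settings: PcaSettings, weight_kg: float) -> float:
    """Upper bound on demand-dose opioid delivered in one hour."""
    return bolus_dose_mcg(settings, weight_kg) * max_boluses_allowed(settings)


if __name__ == "__main__":
    typical = PcaSettings(bolus_mcg_per_kg=20, max_boluses_per_hour=5, lockout_minutes=6)
    weight = 25.0  # kg, arbitrary example weight
    print(bolus_dose_mcg(typical, weight), "mcg per bolus")
    print(max_boluses_allowed(typical), "boluses per hour at most")
    print(max_hourly_demand_mcg(typical, weight), "mcg per hour at most")
```

With these settings the hourly cap of 5, not the lock-out interval, is the binding limit, since a 6-minute lock-out alone would allow up to 10 doses in an hour.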

The PCA pump computer stores within its memory how many boluses the patient has received, as well as how many attempts the patient has made at receiving boluses. This allows the provider to evaluate how well the patient understands the use of the pump and provides information to program the pump more efficiently. In addition, most PCA units allow low, continuous “background” infusions (e.g., morphine, 20 to 30 mcg/kg per hour, hydromorphone 3 to 4 mcg/kg per hour or fentanyl 0.5 mcg/kg per hour) in addition to self-administered boluses. Continuous background infusions can facilitate more restful sleep by preventing the patient from awakening in pain (Doyle et al., 1993). Although in adults a background infusion increases the potential for overdosage without significantly improving analgesia, this has not been the experience in pediatrics or in adult cancer patients (Fleming and Coombs, 1992). In these patients, a continuous infusion improves analgesia and makes life easier for the health care provider, because with better analgesia there are fewer phone calls to rewrite orders or to change therapy (Monitto et al., 1998; Yildiz et al., 2003; Nelson et al., 2009). Contraindications to the use of PCA include inability to push the bolus button (because of weakness or arm restraints), inability to understand how to use the machine, and a patient’s desire not to assume responsibility for personal care. Difficulties with PCA include increased costs; patient age limitations; and the need for physician, nursing, and pharmacy protocols, education, and storage arrangements that must be instituted before its implementation. These policies, procedures, and protocols are essential for the safe use of opioids regardless of the method of administration. Essential features include age-appropriate parameters for monitoring respiratory status (e.g., respiratory rate and oxygen saturation), patient alertness, and pain assessment, as well as weight-based dosing.
Additionally, because accurate prescribing requires a correct weight, proper conversion of pounds to kilograms, and the choice of an appropriate medication preparation and concentration, the computerization of analgesic medication prescribing is an important patient safety strategy (Wrona et al., 2007; Lee et al., 2008). Figure 15-8 is an example of an electronic, computerized PCA provider order set used in the Children’s Center of the Johns Hopkins Hospital that incorporates many of these features.
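The weight-handling step that computerized prescribing is meant to protect can be sketched briefly. The following is a minimal sketch, not the logic of the order set in Figure 15-8; the function names and the 44-lb example weight are hypothetical, and the only fixed fact used is the definition of the pound (0.45359237 kg). The 20 mcg/kg per hour rate is the lower end of the morphine background infusion range quoted above.

```python
KG_PER_LB = 0.45359237  # exact, by definition of the international pound


def weight_in_kg(value: float, unit: str) -> float:
    """Return a weight in kilograms, requiring the unit to be stated explicitly.

    Refusing unknown or missing units is one simple guard against the
    pounds-versus-kilograms mix-up described in the text.
    """
    if value <= 0:
        raise ValueError("weight must be positive")
    normalized = unit.strip().lower()
    if normalized == "kg":
        return value
    if normalized == "lb":
        return value * KG_PER_LB
    raise ValueError(f"unknown weight unit: {unit!r}")


def infusion_rate_mcg_per_hr(rate_mcg_per_kg_hr: float, weight_kg: float) -> float:
    """Weight-based continuous infusion rate in micrograms per hour."""
    return rate_mcg_per_kg_hr * weight_kg


if __name__ == "__main__":
    kg = weight_in_kg(44, "lb")  # arbitrary example weight
    print(f"{kg:.1f} kg")
    print(f"{infusion_rate_mcg_per_hr(20, kg):.0f} mcg/hr at 20 mcg/kg per hour")
```

Treating a weight recorded in pounds as if it were in kilograms would more than double every weight-based dose, which is the kind of error an explicit unit check is intended to catch.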

Parent- and Nurse-Controlled Analgesia (Surrogate PCA or PCA by Proxy)

Independent use of PCA requires a patient with sufficient understanding, manual dexterity, and strength to initiate a demand dose. Thus, it was initially limited to adolescents and teenagers, but over time the lower age limit of patients has fallen. In general, many have found that any child able to play a video game can successfully operate a PCA pump independently. However, in very young children or children with developmental or physical handicaps, a similar but alternate mode of therapy is parent- or nurse-controlled analgesia (PNCA), sometimes referred to as PCA by proxy or surrogate PCA (Monitto et al., 2000). When using this technique, the child receives a basal opioid infusion, and PCA bolus doses are initiated by a designated surrogate, generally a parent or nurse, when they perceive that the child appears to be in pain (Monitto et al., 2000; Nelson et al., 2009).

Allowing parents or nurses to initiate a PCA bolus is controversial. In 2004, the Joint Commission on Accreditation of Healthcare Organizations (JCAHO) issued a sentinel event alert warning that serious adverse events can result when surrogates become involved in administering analgesia by proxy (Joint Commission on Accreditation of Healthcare Organizations, 2004). Of note, the JCAHO based this warning on a series of adverse events in adults who for the most part developed complications as a result of the preemptive use of the PCA bolus by spouses, children, or nurses when the patient was asleep. Although this alert was not meant to address cases in which caregivers were authorized to administer PCA boluses, it nevertheless raised serious concerns in the pediatric pain management community and changed the practice at some hospitals. Published studies have reported that up to 1% to 3% of patients receiving intravenous PCA by proxy may receive naloxone to treat cardiopulmonary complications (Monitto et al., 2000; Anghelescu et al., 2005; Voepel-Lewis et al., 2008). Interestingly, in a study comparing pediatric intravenous PCA and intravenous PCA by proxy, complication rates were similar between the two modes of therapy, but the use of naloxone was higher in the younger patients. However, it was unclear whether providers had a lower threshold for treating these children with naloxone given their younger age and an associated increased incidence of comorbidities (Voepel-Lewis et al., 2008). Because respiratory depression does occur, safe institution of PCA by proxy as well as PCA requires close patient monitoring, established nursing protocols, and an understanding by surrogates of the appropriate use of the bolus dose.

Transdermal and Transmucosal Fentanyl

Because fentanyl is extremely lipophilic, it can be readily absorbed across any biological membrane, including the skin. Thus, fentanyl can be administered painlessly by nonintravenous routes, including transmucosal (nose and mouth) and transdermal routes. The transmucosal route of fentanyl administration is extremely effective for acute pain relief. When given intranasally (2 mcg/kg), it produces rapid analgesia that is equivalent to intravenously administered fentanyl (Galinkin et al., 2000). For transoral absorption, fentanyl is also available in a candy matrix (Actiq) attached to a plastic applicator device that looks like a lollipop. As the patient sucks on the lollipop, fentanyl crosses the buccal mucosa and is rapidly absorbed (10 to 20 minutes) into the systemic circulation (Goldstein-Dresner et al., 1991; Streisand et al., 1989, 1991; Ashburn et al., 1993; Schechter et al., 1995). If excessive sedation occurs, the fentanyl is removed from the patient’s mouth by the applicator. Transmucosal absorption is more efficient than ordinary oral-gastrointestinal administration because it bypasses the efficient first-pass hepatic metabolism of fentanyl that occurs after enteral absorption into the portal circulation. The candy matrix of fentanyl has been approved by the FDA for use in children for premedication before surgery and for procedure-related pain (e.g., lumbar puncture or bone marrow aspiration) (Dsida et al., 1998). It is also useful in the treatment of cancer pain and as a supplement to transdermal fentanyl (Portenoy et al., 1999). When administered transmucosally, a fentanyl dose of 10 to 15 mcg/kg is effective within 20 minutes and lasts approximately 2 hours. Because approximately 25% to 33% of the given dose is absorbed, blood levels equivalent to 3 to 5 mcg/kg intravenous fentanyl are achieved with this dose.
The major side effect of treatment is nausea and vomiting, which occurs in approximately 20% to 33% of patients who receive it (Epstein et al., 1996). Finally, a new, rapidly dissolving, effervescent fentanyl buccal tablet, Fentora, has become available for breakthrough pain in patients who are already receiving opioids for persistent pain and who are tolerant to opioid therapy (Messina et al., 2008; Weinstein et al., 2009). These tablets come in various doses (100, 200, 400, 600, and 800 mcg). In adults, fentanyl buccal tablets have an absolute bioavailability of 65%; approximately 50% of the total administered dose is absorbed transmucosally, and the remaining half is swallowed and undergoes slow absorption from the gastrointestinal tract. There is little published pediatric experience with this drug.
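The intravenous-equivalent figure quoted above for the candy-matrix preparation follows from multiplying the administered dose by the fraction absorbed. The following is a minimal sketch of that arithmetic; the function name is hypothetical, and the inputs are the ranges given in the text (10 to 15 mcg/kg administered, 25% to 33% absorbed).

```python
def absorbed_dose_mcg_per_kg(administered_mcg_per_kg: float, fraction_absorbed: float) -> float:
    """Dose reaching the circulation, given the fraction of the dose absorbed."""
    if not 0.0 <= fraction_absorbed <= 1.0:
        raise ValueError("fraction_absorbed must be between 0 and 1")
    return administered_mcg_per_kg * fraction_absorbed


if __name__ == "__main__":
    low = absorbed_dose_mcg_per_kg(10, 0.25)   # lowest dose, lowest absorption
    high = absorbed_dose_mcg_per_kg(15, 0.33)  # highest dose, highest absorption
    print(f"absorbed range: {low:.1f} to {high:.1f} mcg/kg")
```

The extremes of the two ranges give roughly 2.5 to 5 mcg/kg, in line with the approximate 3 to 5 mcg/kg intravenous equivalent stated in the text.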

The transdermal route is commonly used to administer many drugs, including scopolamine, clonidine, and nitroglycerin. Many factors, including body site, skin temperature, skin damage, ethnicity, and age affect the absorption of transdermally administered drugs. The transdermal fentanyl patch has revolutionized adult and pediatric cancer pain management (Zernikow et al., 2007; Finkel et al., 2005). Placed in a selective semipermeable membrane patch, which is attached to the skin by a contact adhesive, a reservoir of fentanyl provides slow, steady-state absorption of drug across the skin. As fentanyl is painlessly absorbed across the skin, a substantial amount is stored in the upper skin layers, which then act as a secondary reservoir. The presence of this “skin depot” has several implications:

Indeed, the amount of fentanyl remaining within the system and skin depot after removal of the patch is substantial. At the end of a 24-hour period with a fentanyl patch releasing drug at the rate of 100 mcg/hr, 1.07 ± 0.43 mg fentanyl (approximately 30% of the total delivered dose from the patch) remains in the skin depot. Thus, even after removal of the patch, fentanyl continues to be absorbed from the subcutaneous fat reservoir for almost 24 hours (Grond et al., 2000).

Because of its long onset time, inability to rapidly adjust drug delivery, and long t½β, transdermal fentanyl is contraindicated for acute pain management. In fact, the use of this drug delivery system to treat acute pain has resulted in the death of an otherwise healthy patient. Transdermal fentanyl is appropriate only for patients who have developed opioid tolerance and for those with chronic pain (e.g., cancer) (Zernikow et al., 2007; Finkel et al., 2005). Even when transdermal fentanyl is appropriate, the vehicle imposes its own constraints; the patch with the lowest dose delivers 12.5 mcg of fentanyl per hour; others deliver 25, 50, 75, and 100 mcg of fentanyl per hour. The patches cannot be physically cut into smaller pieces to deliver less fentanyl.

Methadone

Primarily thought of as a drug to treat or wean patients who are addicted to or dependent on opioids, methadone is increasingly being used in the management of acute and chronic intractable pain. Methadone exists as a racemic mixture of two active isomers; one isomer binds as an agonist to the μ-receptor and the other as an antagonist at the NMDA receptor. It is this latter property that makes methadone unique among opioids. The NMDA system is involved in wind-up and the maintenance of chronic pain as well as opioid-induced hyperalgesia and the development of tolerance (Ebert et al., 1998; Gagnon and Bruera, 1999; Gorman et al., 1997). Thus, blockade of NMDA receptors can act to acutely enhance opioid-induced antinociception, impair the development of tolerance, and prevent the development of chronic pain (Raffa, 1996).

In addition, methadone is noted for its long t½β, very long duration of effective analgesia, high oral bioavailability, and inactive metabolites. The t½β of methadone averages 19 hours, and clearance averages 5.4 mL/kg per minute in children 1 to 18 years of age (Berde et al., 1991). Methadone has the longest t½β of any of the commonly available opiates and can provide up to 12 to 36 hours of analgesia after a single intravenous or oral dose (Gourlay et al., 1982, 1984, 1986; Berde, 1989; Shannon and Berde, 1989). Pharmacokinetically, children are indistinguishable from young adults. Because a single dose of methadone can achieve and sustain a high drug-plasma level, it is a convenient way to provide prolonged analgesia without requiring an intramuscular injection or a continuous infusion. Berde et al. (1989) recommend methadone as a “poor man’s PCA” and suggest loading patients with an initial dose of intravenous methadone (0.1 to 0.2 mg/kg) and then titrating in 0.05-mg/kg increments every 10 to 15 minutes until analgesia is achieved. Supplemental methadone can be administered in 0.05- to 0.1-mg/kg increments by slow intravenous infusion every 4 to 12 hours as needed. Shannon and Berde (1989) have also reported the use of small incremental doses administered by sliding scale. “Small increments of methadone are administered intravenously over 20 minutes every 4 hours via a ‘sliding’ scale on a ‘reverse prn’ (the nurse asks the patient) basis: 0.07 to 0.08 mg/kg for severe pain; 0.05 to 0.06 mg/kg for moderate pain; 0.03 mg/kg for little or no pain, if the patient is alert; and no drug if the patient has little pain and is somnolent” (Berde, 1989; Shannon and Berde, 1989).
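The quoted sliding scale is effectively a small lookup table, which can be written out explicitly. The following is a minimal sketch that encodes only the four cases in the quotation; the enum, function names, and the 30-kg example weight are hypothetical. The quotation does not say what to do for a somnolent patient reporting moderate or severe pain, so the sketch raises an error for those combinations instead of guessing.

```python
from enum import Enum
from typing import Optional, Tuple


class Pain(Enum):
    SEVERE = "severe"
    MODERATE = "moderate"
    LITTLE_OR_NONE = "little or none"


def sliding_scale_mg_per_kg(pain: Pain, somnolent: bool) -> Optional[Tuple[float, float]]:
    """Return the (low, high) mg/kg range from the quoted scale, or None for no drug."""
    if pain is Pain.LITTLE_OR_NONE:
        # Quoted scale: 0.03 mg/kg if alert, no drug if somnolent.
        return None if somnolent else (0.03, 0.03)
    if somnolent:
        # Not addressed by the quoted scale; left to clinical judgment.
        raise ValueError("quoted scale does not cover a somnolent patient with this pain level")
    if pain is Pain.MODERATE:
        return (0.05, 0.06)
    return (0.07, 0.08)  # Pain.SEVERE


def dose_range_mg(pain: Pain, somnolent: bool, weight_kg: float) -> Optional[Tuple[float, float]]:
    """Scale the mg/kg range by body weight; None means no dose is given."""
    per_kg = sliding_scale_mg_per_kg(pain, somnolent)
    if per_kg is None:
        return None
    low, high = per_kg
    return (low * weight_kg, high * weight_kg)


if __name__ == "__main__":
    print(dose_range_mg(Pain.MODERATE, somnolent=False, weight_kg=30.0))
    print(dose_range_mg(Pain.LITTLE_OR_NONE, somnolent=True, weight_kg=30.0))
```

Writing the table this way makes the gap in the quoted scale visible: a combination the authors did not specify produces an explicit error instead of a silently chosen dose.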

In addition, both methadone and sustained-release morphine can be used to wean patients who have become physically dependent on opioids after prolonged analgesic therapy (Yaster et al., 1996; Suresh and Anand, 1998; Tobias, 2000a). Finally, because methadone is extremely well absorbed from the gastrointestinal tract and has a bioavailability of 80% to 90%, it is extremely easy to convert intravenous dosing regimens to oral ones. Recently, however, the conversion dose of morphine to methadone has been challenged. Traditionally, it has been thought that the ratio of morphine to methadone was approximately 1:1; it now appears that when tolerance develops and morphine doses are “high,” it is closer to 1:0.25 or even 1:0.1 (Lawlor et al., 1998; Ripamonti et al., 1998a, 1998b; Gagnon and Bruera, 1999). Tolerance to morphine and other opioids such as fentanyl is a significant problem in patients being treated for pain chronically or acutely in the intensive care unit setting. When this occurs, substituting methadone for morphine or fentanyl at the lower conversion ratios discussed above can rapidly reestablish analgesia even though all of these opioids work at the same μ-opioid receptor. This occurs because of “incomplete cross tolerance,” which may be because of methadone’s antagonist actions at the NMDA receptor or because of multiple μ-receptor subtypes (Trujillo and Akil, 1991; Gorman et al., 1997; Ebert et al., 1998; Pasternak, 2001a).
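The practical effect of the revised conversion ratios is easiest to see with numbers. The following is a minimal sketch that applies each of the three morphine-to-methadone ratios mentioned above (1:1, 1:0.25, 1:0.1) to the same morphine dose. The function name and the 100-mg example are hypothetical, and because the text does not define what counts as a “high” morphine dose, the ratio is passed in explicitly instead of being chosen by the code.

```python
def methadone_equivalent_mg(morphine_mg: float, methadone_per_morphine: float) -> float:
    """Methadone dose for a given morphine dose under a stated conversion ratio.

    methadone_per_morphine is the second term of the morphine:methadone ratio,
    e.g. 0.25 for a ratio of 1:0.25.
    """
    if morphine_mg < 0 or methadone_per_morphine <= 0:
        raise ValueError("dose must be non-negative and ratio must be positive")
    return morphine_mg * methadone_per_morphine


if __name__ == "__main__":
    morphine = 100.0  # mg, arbitrary example dose
    for ratio in (1.0, 0.25, 0.1):
        equivalent = methadone_equivalent_mg(morphine, ratio)
        print(f"ratio 1:{ratio} -> {equivalent:.0f} mg methadone")
```

The same morphine dose maps to a tenfold range of methadone doses depending on which ratio is assumed, which is why the choice of ratio in tolerant patients matters so much.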

Among opioid analgesics, methadone is unique as a potent blocker of the delayed rectifier potassium ion channel. This results in QT prolongation, can produce torsade de pointes ventricular tachycardia in susceptible individuals, and may explain the sudden death associated with its use (Andrews et al., 2009). The effects of methadone on the QT interval may be enhanced by hypokalemia, drugs that increase the QT interval such as erythromycin and ondansetron, or by CYP 3A4 inhibitors such as fluoxetine, fluconazole, valproate, and clarithromycin (Ehret et al., 2006). An updated list of medications causing torsade de pointes ventricular tachycardia can be found at www.azcert.org. Indeed, this is such a serious consequence of therapy that some have recommended that all patients treated with methadone have routine screening ECGs before or during their treatment.

Tramadol

Tramadol, a 4-phenylpiperidine analogue of codeine, is a centrally acting synthetic analgesic that has been used for 30 years in Europe and was approved by the FDA for adult use in the United States in 1995 (Raffa, 1996; Minto and Power, 1997). It is a racemic mixture of two enantiomers: (+)-tramadol and (−)-tramadol (Berde, 1989; Glare and Lickiss, 1992). The (+)-enantiomer has a moderate affinity for the μ-opioid receptor that is greater than that of the (−)-enantiomer (Raffa, 1993). In addition, the (+)-enantiomer inhibits serotonin (5-HT) uptake and the (−)-enantiomer blocks the reuptake of norepinephrine (NE), complementary properties that result in a synergistic antinociceptive interaction between the two enantiomers. Tramadol may also produce analgesia as an α2-agonist (Desmeules et al., 1996). A metabolite (O-desmethyltramadol) binds to opioid receptors with a greater affinity than the parent compound and could contribute to tramadol’s analgesic effects as well. However, in most animal tests and human clinical trials, the analgesic effect of tramadol is only partially blocked by the opioid antagonist naloxone, suggesting an important nonopioid mechanism. Thus, tramadol provides analgesia synergistically by opioid (direct binding to the μ-opioid receptor by the parent compound and its metabolite) and nonopioid mechanisms (an increase in central neuronal synaptic levels of 5-HT and NE). Finally, animal and human studies have suggested that tramadol may have a selective spinal and local anesthetic action on peripheral nerves. Tramadol has been shown to provide effective, long-lasting analgesia after extradural administration in both adults and children and prolongs the duration of action of local anesthetics when used for brachial plexus and epidural blockade (Kapral et al., 1999; Prosser et al., 1997).

Tramadol’s intravenous analgesic effect has been reported to be 10 to 15 times less than that of morphine and is roughly equianalgesic with NSAIDs (Raffa, 1996; Naguib et al., 1998). Unlike NSAIDs and opioid mixed agonist/antagonists, the therapeutic use of tramadol has not been associated with the clinically important side effects such as respiratory depression, constipation, or sedation. In addition, analgesic tolerance has not been a serious problem during repeated administration, and neither psychological dependence nor euphoric effects are observed in long-term clinical trials. Thus, tramadol may offer significant advantages in the management of pain in children by virtue of its dual mechanism of action, its lack of a ceiling effect, and its minimal respiratory depression.

Tramadol may be administered orally, rectally, intravenously, or epidurally (Gunes et al., 2004; Bozkurt, 2005). Oral and intravenous tramadol is administered in doses of 1 to 2 mg/kg; the higher dose provides a longer duration of action without increasing side effects (Finkel et al., 2002; Rose, 2003; Bozkurt, 2005).

Complications of Opioid Therapy

Regardless of the method of administration, all opioids commonly produce unwanted side effects such as pruritus, nausea and vomiting, constipation, urinary retention, cognitive impairment, tolerance, and dependence (Yaster et al., 2003). Many patients suffer needlessly from pain in order to avoid these debilitating opioid-induced side effects (Watcha and White, 1992). Additionally, physicians are often reluctant to prescribe opioids because of these side effects and because of their fear of other, less common but more serious side effects such as respiratory depression. Several clinical and laboratory studies have demonstrated that low-dose naloxone infusions (0.25 to 1 mcg/kg per hour) can treat or prevent opioid-induced side effects without affecting the quality of analgesia or opioid requirements in adults, children, and adolescents (Maxwell et al., 2005; Gan et al., 1997).

Opioid-Induced Bowel Dysfunction

Opioid-induced bowel dysfunction (OBD), often described as constipation, is a constellation of symptoms that includes delayed gastric emptying, slow bowel motility, incomplete evacuation, bloating, abdominal distention, and gastric reflux. OBD occurs whether opioids are administered acutely or chronically, is found in 90% of patients treated with opioids, and is a significant problem in 50% to 60% of adult patients with advanced cancer (Glare and Lickiss, 1992; Fallon and Hanks, 1999). OBD is not really a side effect of opioid therapy; rather the effects of opioids on gastric emptying, peristalsis, and bowel motility are intrinsic opioid actions. Indeed, opium has been used in the treatment of dysentery for thousands of years. However, unlike the analgesic effects of opioids, the gastrointestinal ones are not accompanied by the development of tolerance.

Therefore, patients treated with opioids, regardless of drug, route, or method of delivery, should be considered for a prophylactic bowel regimen of stool softeners and bulking agents (e.g., senna and lubiprostone) as soon as the patient can eat or drink. Indeed, there may be value in starting these agents preoperatively. Alternatively, two new peripherally acting opioid antagonists, methylnaltrexone and alvimopan, have recently been approved by the FDA and may be of great utility (Moss and Rosow, 2008). These medications offer promise in the treatment of OBD in patients with late-stage advanced illness (methylnaltrexone), as well as postoperative ileus in adult patients (alvimopan). However, neither of these medications has been studied or approved for use in children. If these conservative measures fail, then osmotic laxatives (e.g., polyethylene glycol 3350) and enemas should be added to this regimen.

Opioid-Induced Pruritus

Opioid-induced pruritus (OIP) is one of the most common adverse side effects associated with opioid use. The incidence of OIP varies between 20% and 100% when opioids are administered neuraxially (intrathecally or epidurally) and between 20% and 60% when administered intravenously (Ganesh and Maxwell, 2007). Surprisingly, the pathophysiology of clinical itch remains unclear. Over 300 years ago, the German physician Samuel Hafenreffer described itch as an “unpleasant sensation that elicits the desire to scratch,” and this definition is still valid today. Much of the current research on itch focuses on identifying the neuronal mechanisms and the mediators responsible for itch in dermatological and systemic disease. OIP is primarily mediated by binding of μ-agonists with central μ-opioid receptors in the brain and spinal cord and can be blocked with centrally acting μ-receptor antagonists (Ko and Naughton, 2000). Binding of the dopamine D2 receptor and the release of prostaglandin E1 and E2 have also been implicated in the development of OIP. Blockade of the D2 receptor by antagonists such as droperidol or by inhibiting prostaglandin production with NSAIDs significantly reduces OIP (Horta et al., 1996). Whereas histamine is a potent pruritic agent, opioid-induced histamine release from mast cells plays almost no part in OIP.

A wide variety of drugs with different mechanisms of action have been used to treat or prevent OIP. Antihistamines, such as diphenhydramine or hydroxyzine, are the most common and perhaps least effective drugs used to treat established OIP. They primarily interrupt the itch-scratch cycle by providing needed sleep but are not really effective at reducing the severity of itch. A more effective approach is to prophylactically administer a low-dose intravenous infusion of the opioid antagonist naloxone (0.25 to 1 mcg/kg per hour) or the partial agonist/antagonist nalbuphine (50 mcg/kg, maximum 5 mg/dose) (Kendrick et al., 1996; Maxwell and Yaster, 2003; Nakatsuka et al., 2006). Other strategies involve rotating opioids, reducing opioid dose, or switching to the oral route of administration.
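The weight-based doses quoted above reduce to simple arithmetic. The following Python sketch is illustrative only and is not a dosing tool. The function names and the example weights are assumptions introduced here; only the numeric values (0.25 to 1 mcg/kg per hour for naloxone, and 50 mcg/kg with a 5 mg ceiling for nalbuphine) come from the text.

```python
# Illustrative arithmetic only; names and example weights are hypothetical.

NALOXONE_LOW_MCG_PER_KG_H = 0.25   # lower bound quoted in the text
NALOXONE_HIGH_MCG_PER_KG_H = 1.0   # upper bound quoted in the text
NALBUPHINE_MCG_PER_KG = 50.0       # per-dose value quoted in the text
NALBUPHINE_MAX_MG = 5.0            # per-dose ceiling quoted in the text


def naloxone_infusion_range_mcg_per_h(weight_kg: float) -> tuple[float, float]:
    """Return the (low, high) hourly infusion rate in mcg/h for a given weight."""
    if weight_kg <= 0:
        raise ValueError("weight_kg must be positive")
    return (NALOXONE_LOW_MCG_PER_KG_H * weight_kg,
            NALOXONE_HIGH_MCG_PER_KG_H * weight_kg)


def nalbuphine_dose_mg(weight_kg: float) -> float:
    """Return a single dose in mg: 50 mcg/kg, never more than 5 mg."""
    if weight_kg <= 0:
        raise ValueError("weight_kg must be positive")
    uncapped_mg = NALBUPHINE_MCG_PER_KG * weight_kg / 1000.0  # mcg -> mg
    return min(uncapped_mg, NALBUPHINE_MAX_MG)


if __name__ == "__main__":
    print(naloxone_infusion_range_mcg_per_h(20))  # hypothetical 20 kg child
    print(nalbuphine_dose_mg(20))                 # below the ceiling
    print(nalbuphine_dose_mg(150))                # ceiling applies
```

The ceiling matters only above 100 kg, because 50 mcg/kg reaches 5 mg at exactly that weight.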

Opioid-Induced Nausea and Vomiting

A wide variety of drugs with different mechanisms of action have been used to treat or prevent opioid-induced nausea and vomiting (Watcha and White, 1992). The most commonly used antiemetics are antihistamines (diphenhydramine), phenothiazines (prochlorperazine and promethazine), butyrophenones (haloperidol and droperidol), benzamides (metoclopramide), 5-HT3-receptor antagonists (ondansetron, dolasetron, granisetron), and dexamethasone. A low-dose intravenous naloxone infusion (1 mcg/kg per hour) has also been shown to be effective (Maxwell and Yaster, 2003). Many of the agents listed above work synergistically. Although it does not make sense to combine two drugs that act via the same mechanism (e.g., two antihistamines such as diphenhydramine and hydroxyzine), combinations of drugs that act via different mechanisms (e.g., ondansetron, a selective inhibitor of 5-HT3 receptors, and the steroid dexamethasone) can be effective (McKenzie et al., 1994). Finally, if these measures fail, opioid dose reduction, with or without the addition of NSAIDs, or opioid rotation may be effective.

Opioid-Induced Sedation

The sedating effects of opioids in opioid-naive patients are well known and can be thought of as either a desired effect or an adverse side effect. Although tolerance to sedation and drowsiness often develops, in some patients sedation and mental clouding are so debilitating that they lead to a reduced quality of life. Treatment is often dose reduction, opioid rotation, or the use of psychostimulants. Methylphenidate is the most commonly used stimulant to improve drowsiness, but others include dextroamphetamine, caffeine, and modafinil (Rozans et al., 2002; Prommer, 2006).

Tolerance, Dependence, and Withdrawal

Tolerance is the development of a need to increase the dose of an opioid (or benzodiazepine) agonist to achieve the same analgesic (or sedative) effect previously achieved with a lower dose (Nutt, 1996; Wise, 1996). Whereas tolerance to the sedative and analgesic effects of opioids usually develops after 5 to 21 days of morphine administration, tolerance to the constipating effects of opioids rarely occurs. Additionally, cross-tolerance develops between all μ-opioid receptor agonists. However, because this cross-tolerance is rarely complete, opioid rotation, that is, changing from one opioid (morphine, fentanyl, or hydromorphone) to another (usually methadone), can be helpful in preventing a continuous escalation in analgesic dosing. When it is necessary to switch, careful consideration must be given to the choice of opioid, dose, and expected degree of cross-tolerance. For example, when switching from high-dose morphine to methadone in opioid-tolerant patients, the equianalgesic dose is decreased four- to fivefold. Even with this reduction, the calculated dose of methadone may be so high that it warrants a stepwise conversion while the patient remains in a high-surveillance care unit.

Physical dependence, sometimes referred to as neuroadaptation, is caused by repeated administration of an opioid that necessitates the continued administration of the drug to prevent the development of a withdrawal or abstinence syndrome characteristic for that particular drug (O’Brien, 1996). Physical dependence usually occurs after 2 to 3 weeks of morphine administration, but when high doses of opioid are administered it may occur after only a few days of therapy. Very young infants treated with high-dose fentanyl infusions after surgical repair of congenital heart disease and those who require extracorporeal membrane oxygenation (ECMO) have been identified to be at particular risk of developing dependence and withdrawal on discontinuation of therapy (Arnold et al., 1991, 1990; Kauffman 1991; Lane et al., 1991).

Physical dependence must be differentiated from addiction (O’Brien, 1996). Addiction connotes a severe degree of drug abuse and dependence, an extreme of behavior in which drug use pervades the total life activity of the user and controls the user’s behavior across a wide range of circumstances. Patients who are addicted to opioids often spend large amounts of time acquiring or using the drug, abandon social or occupational activities because of drug use, and continue to use the drug despite adverse psychological or physical effects. In a sense, addiction is a subset of physical dependence. Anyone who is addicted to an opioid is physically dependent; however, not everyone who is physically dependent is addicted. Patients appropriately treated with opioid agonists for pain can become tolerant and physically dependent. They rarely become psychologically dependent or addicted (Porter and Jick, 1980).

When physical dependence has been established, sudden discontinuation of an opioid or benzodiazepine agonist produces a withdrawal syndrome within 24 hours of drug cessation. Symptoms reach their peak within 72 hours and include abdominal cramping, vomiting, diarrhea, tachycardia, hypertension, diaphoresis, restlessness, insomnia, movement disorders, reversible neurologic abnormalities, and seizures (O’Brien, 1996; Anand and Arnold, 1994; Katz et al., 1994; Nestler 1994, 1996; Norton 1988).

Clinical and experimental data suggest that the duration of opioid receptor occupancy is an important factor in the development of tolerance and dependence. Thus, continuous infusions may produce tolerance more rapidly than intermittent therapy (Katz et al., 1994; Anand and Arnold, 1994). This is particularly true for highly lipid-soluble opioids such as fentanyl. Tolerance and dependence predictably develop after only 5 to 10 days (2.5 mg/kg total fentanyl dose) of continuous fentanyl infusions (Arnold et al., 1991, 1990; Anand and Arnold, 1994; Katz et al., 1994). Nevertheless, prolonged therapy in excess of 10 days, even by intermittent bolus administration, should be expected to produce opioid dependence. As a result, tolerance and physical dependence to both opioids and benzodiazepines are common phenomena in the intensive care unit (Suresh and Anand, 1998; Anand and Arnold, 1994; O’Brien, 1996; Yaster et al., 1996; Koob and Nestler, 1997; Tobias, 2000a).
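The relation between infusion rate, duration, and the 2.5 mg/kg cumulative fentanyl dose cited above can be made concrete with a short calculation. The Python sketch below is an illustration under stated assumptions: the infusion rates of 10 and 20 mcg/kg per hour are hypothetical examples, not recommendations, and the function names are introduced here. Only the 2.5 mg/kg figure comes from the text.

```python
# Illustrative arithmetic only; infusion rates below are hypothetical examples.

CUMULATIVE_THRESHOLD_MG_PER_KG = 2.5  # total fentanyl dose quoted in the text


def cumulative_dose_mg_per_kg(rate_mcg_per_kg_h: float, hours: float) -> float:
    """Cumulative dose (mg/kg) of a constant infusion after `hours`."""
    if rate_mcg_per_kg_h < 0 or hours < 0:
        raise ValueError("rate and hours must be non-negative")
    return rate_mcg_per_kg_h * hours / 1000.0  # mcg -> mg


def hours_to_threshold(rate_mcg_per_kg_h: float) -> float:
    """Hours of constant infusion needed to reach the 2.5 mg/kg total."""
    if rate_mcg_per_kg_h <= 0:
        raise ValueError("rate must be positive")
    return CUMULATIVE_THRESHOLD_MG_PER_KG * 1000.0 / rate_mcg_per_kg_h


if __name__ == "__main__":
    for rate in (10.0, 20.0):  # hypothetical rates in mcg/kg per hour
        h = hours_to_threshold(rate)
        print(f"{rate} mcg/kg/h reaches 2.5 mg/kg after {h:.0f} h "
              f"({h / 24:.1f} days)")
```

At these assumed rates the threshold is reached after roughly 10 and 5 days, respectively, which brackets the 5- to 10-day window given in the text.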

Withdrawal Scales and Weaning Strategies

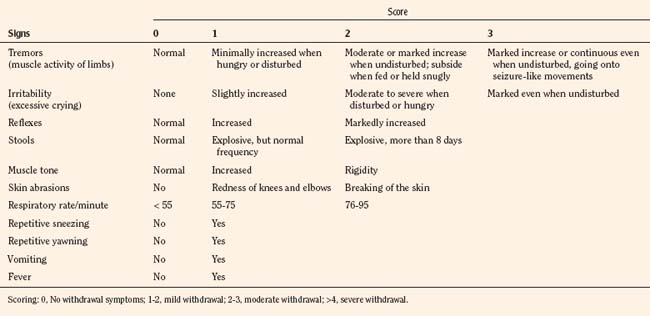

Withdrawal and Abstinence Scales for Infants and Children

The most widely used tool to assess neonatal abstinence is the Finnegan scale (Table 15-8). This tool is also among the most commonly used withdrawal scales in older infants and children, even though it has never been validated for this use. The Finnegan scale is a complicated assessment measure that uses a weighted scoring of 31 items; it requires training and, when used clinically, some assessment of interrater reliability. As a result, it may be too complicated for routine use. An alternative, the Lipsitz scale, offers the advantage of being a relatively simple numerical system, with a reported 77% sensitivity using a value greater than 4 as an indication of significant signs of withdrawal (Table 15-9) (Lipsitz, 1975). The Lipsitz scale and the need for a scoring system to guide therapy were recommended by the American Academy of Pediatrics (AAP) in a 1998 consensus statement (AAP Committee on Drugs, 1998).

| Sign/Symptoms | Score |
| --- | --- |
| Cry | |
| Excessive | 2 |
| Continuous | 3 |
| Sleep (# hours after feeding) | |
| <1 Hour | 3 |
| <2 Hours | 2 |
| <3 Hours | 1 |
| Moro Reflex | |
| Hyperactive | 2 |
| Markedly hyperactive | 3 |
| Tremors | |
| Mild | 1 |
| Moderate-severe | 2 |
| Moderate-severe when undisturbed | 3 |
| Increased tone | 2 |
| Frequent yawning | 2 |
| Sneezing | 1 |
| Nasal congestion | 1 |
| Nasal flaring | 2 |
| Respiratory Rate | |
| >60 Breaths per minute | 1 |
| >60 Breaths per minute with retractions | 2 |
| Excoriation | 1 |
| Seizures | 5 |
| Sweating | 1 |
| Fever | |
| 100-101°F | 1 |
| >101°F | |
| Mottling | 1 |
| Excessive sucking | 1 |
| Poor feeding | 2 |
| Regurgitation | 2 |
| Projectile vomiting | 3 |
| Stooling | |
| Loose | 2 |
| Watery | 3 |

Scoring: 0-7, mild symptoms of withdrawal; 8-11, moderate withdrawal; 12-15, severe withdrawal.
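The scoring rule above amounts to summing the item scores and mapping the total onto the three published bands. The following Python sketch shows that mapping. It is a minimal illustration, not a validated clinical instrument: the function names and the example observations are assumptions introduced here, and because the table does not define a category for totals above 15, the sketch reports such totals separately rather than guessing.

```python
# Minimal sketch of the scoring bands; names and example data are hypothetical.


def total_score(item_scores: dict[str, int]) -> int:
    """Sum the scores of the observed signs (one entry per observed sign)."""
    if any(score < 0 for score in item_scores.values()):
        raise ValueError("item scores must be non-negative")
    return sum(item_scores.values())


def classify_withdrawal(total: int) -> str:
    """Map a total score onto the bands given in the scoring note."""
    if total < 0:
        raise ValueError("total must be non-negative")
    if total <= 7:
        return "mild"
    if total <= 11:
        return "moderate"
    if total <= 15:
        return "severe"
    # The scoring note stops at 15; higher totals are flagged, not classified.
    return "above tabulated range"


if __name__ == "__main__":
    # Hypothetical observations, using item scores from the table.
    observed = {
        "Tremors: mild": 1,
        "Sneezing": 1,
        "Poor feeding": 2,
        "Stooling: loose": 2,
    }
    total = total_score(observed)
    print(total, classify_withdrawal(total))
```

For the example observations the total is 6, which falls in the mild band.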

When pharmacologic treatment is needed to treat withdrawal, the AAP recommends dilute tincture of opium for neonatal opiate withdrawal. For sedative-hypnotic withdrawal, phenobarbital is the agent of choice. Agthe et al. (2009) demonstrated that when tincture of opium is supplemented with oral clonidine (1 mcg/kg) every 4 hours, the duration of pharmacotherapy for neonatal abstinence syndrome is dramatically reduced. However, despite clear, evidence-based recommendations from the AAP, the management of the newborn with psychomotor behavior consistent with withdrawal varies widely. In a recently published survey of neonatal withdrawal treatment, Sarkar and Donn (2006) found inconsistent policies, scale utilization, and treatment regimens between institutions and individual physicians. These results reflect similar findings of earlier studies and reemphasize the disparity between the published evidence and recommendations supporting the use of withdrawal scoring and current clinical practice for neonatal withdrawal treatment.

Franck et al. (2004) investigated the use of an adapted neonatal assessment tool in older children. This 21-item checklist was initially used for opioid weaning and modified for the evaluation of opioid and benzodiazepine withdrawal symptoms (Franck et al., 2004). Their small study demonstrated good interrater reliability and content validity of the tool, as well as applicability to a wide range of ages (6 to 28 months). Thus, this withdrawal scale, named the Opioid and Benzodiazepine Withdrawal Score (OBWS), has a wide range of applicability. Adult withdrawal assessment tools often involve personal reporting of symptoms by the patient. Recently, a clinician-administered tool (the Clinical Opiate Withdrawal Scale [COWS]) was developed to provide a simplified 11-question score to assess withdrawal symptoms and to review its applicability in iatrogenic and abuse-related opioid withdrawal scenarios (Wesson and Ling, 2003). Although simple and promising, its applicability to other agents (such as benzodiazepines) and to the pediatric age group is unknown.

Weaning Strategies in Infants and Children

As previously discussed, tolerance and physical dependence develop as a result of the drug, dose, method of delivery, and duration of therapy. When the risk of withdrawal is high, weaning should be slow (Yaster et al., 1997, 1996). Unfortunately, abrupt withdrawal of opioids and sedatives to facilitate extubation and transfer of patients out of the PICU is commonplace. If sedative or opioid use has been of short duration (i.e., less than 72 hours), acute discontinuation is reasonable. If a patient has required infusions or repeated administration of an agent for more than 5 days, then an agent-specific weaning strategy should be employed.
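The two duration thresholds in this paragraph can be written as a simple decision rule. The Python sketch below is illustrative only. The function name and the returned labels are assumptions introduced here, and the text gives no guidance for exposures between 72 hours and 5 days, so the sketch marks that interval as unspecified rather than assigning it to either branch.

```python
# Illustrative decision rule; labels and function name are hypothetical.

SHORT_COURSE_HOURS = 72        # "less than 72 hours" in the text
LONG_COURSE_HOURS = 5 * 24     # "more than 5 days" in the text


def weaning_approach(exposure_hours: float) -> str:
    """Return the approach suggested by the text for a given exposure duration."""
    if exposure_hours < 0:
        raise ValueError("exposure_hours must be non-negative")
    if exposure_hours < SHORT_COURSE_HOURS:
        return "acute discontinuation is reasonable"
    if exposure_hours > LONG_COURSE_HOURS:
        return "agent-specific weaning strategy"
    # 72 h up to and including 5 days is not addressed by the text.
    return "not specified in the text"


if __name__ == "__main__":
    for hours in (48, 96, 168):  # hypothetical exposure durations
        print(hours, "h:", weaning_approach(hours))
```

Leaving the middle interval explicit keeps the sketch from implying a recommendation that the source does not make.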

One approach to the weaning process is to convert all of the patient’s analgesic and sedative medications to intermittent parenteral therapy whenever possible. All forms of the drugs being used therapeutically must be counted in this conversion, including PRN medications. Furthermore, because it is quite common for patients to receive multiple opioids and sedatives, all of the opioids should be converted to morphine equivalents and the benzodiazepines to diazepam equivalents (Yaster et al., 1996). How to proceed with weaning beyond this first step is not always clear, and there are no published evidence-based studies.