Update on Nutritional Recommendations for the Pediatric Patient

In the last several years, a number of reports have offered new nutritional recommendations for infants and children. These reports include new ideas on how to prevent the development of atopic disease, suggesting that the established practice of restricting the introduction of complementary foods to infants may promote atopic disease [1]. New recommendations for iron supplementation for infants and toddlers point out the importance of introducing iron-rich complementary foods much earlier in the diet of infants, particularly if they are exclusively breastfed [2]. There are also new recommendations for increased supplements of vitamin D for infants, children, and adolescents [3,4]. In addition, there are new recommended nutritional guidelines for children at risk for overweight and hypercholesterolemia [5], and a new American Academy of Pediatrics (AAP) statement that questions the benefits of adding probiotics and prebiotics to the diet of healthy children [6]. These new recommendations are reviewed in this article for pediatric health care providers.

Prevention of atopic disease with nutritional interventions early in life

In the past 30 years, the incidence of atopic diseases such as asthma, atopic dermatitis, and food allergies has increased dramatically [1]. The incidence of peanut allergy has increased threefold and the incidence of asthma has increased 160%. It has long been believed that diet in early childhood, including the diet of the mother during pregnancy and lactation, is important in the development of atopic disease. The AAP previously recommended that lactating mothers with infants at high risk for developing allergy avoid peanuts, cow milk, and fish in their diets while lactating [7].

However, studies have not supported a protective effect of a maternal exclusion diet during pregnancy or lactation on the development of atopic disease in infants [1]. Even though dietary food allergens can be detected in breast milk, it cannot be concluded from published studies to date that the exclusion of eggs, cow milk, fish, or peanuts from the diet of lactating women prevents atopic disease in the infant [1].

Breastfeeding has long been believed to prevent atopic disease in children. Although there is evidence that exclusive breastfeeding protects against wheezing in early life (generally believed to be related to infection and airway size), evidence that exclusive breastfeeding prevents asthma in children older than 6 years is unconvincing [1]. There is evidence that mothers with asthma who exclusively breastfeed increase the risk of asthma in their children [1]. For breastfeeding, the most convincing evidence to date is for the prevention of atopic dermatitis. For infants at high risk of developing atopic disease (parent or sibling with allergic disease) there is evidence that exclusive breastfeeding for at least 4 months, compared with feeding intact cow milk protein formula, decreases the incidence of atopic dermatitis and cow milk allergy during the first 2 years of life. However, there is not enough evidence to conclude that exclusive breastfeeding for at least 4 months prevents the development of food allergy (discussed later) [1].

The role of special infant formulas in preventing atopic disease has also been reviewed by the AAP and other groups [1]. There is no convincing evidence that the use of soy-based formula prevents allergy [1,8], and the use of amino acid–based formulas for the prevention of atopic disease has not been studied [1].

The role of hydrolyzed formula is less clear, and most of the studies have been done in infants at high risk of developing allergy. No studies have compared completely or partially hydrolyzed formula with exclusive breastfeeding, so there is no evidence that the use of these formulas is any better than human milk in the prevention of atopic disease [1]. One of the largest studies, the German Infant Nutritional Intervention Study, showed the complexity of comparing various hydrolysate formulas [9]. This study included 945 infants who were at high risk for allergy and who were initially breastfed. The study compared a cow milk formula with 1 of 3 other formulas: a partially hydrolyzed whey-based formula, an extensively hydrolyzed whey-based formula, and an extensively hydrolyzed casein-based formula. These formulas were started with weaning or when breast milk was not available. This study showed that different hydrolysates have different effects on atopic disease in infants who were initially breastfed and at high risk for the development of atopic disease. The best results for prevention of atopic disease (atopic dermatitis, urticaria, food allergy) were found for the extensively hydrolyzed casein-based formula compared with cow milk formula (odds ratio [OR] 0.51; 95% CI 0.28–0.92; P<.025). Lesser effects were seen with the partially hydrolyzed whey-based formula (OR 0.65; 95% CI 0.38–1.1) and the extensively hydrolyzed whey-based formula (OR 0.86; 95% CI 0.52–1.4), compared with cow milk formula [9]. The study did not explain why the extensively hydrolyzed whey-based formula was less effective than the partially hydrolyzed whey-based formula. Follow-up of these infants has shown that the preventive effect of hydrolyzed infant formulas persists to a lesser degree until 6 years of age [10]. However, the higher cost of the hydrolyzed formulas must be considered in any decision-making process for their use.
The most convincing data supporting their use are only for children at high risk for atopic disease who were initially receiving breast milk.
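The odds ratios quoted above can be read more easily by checking whether each 95% confidence interval excludes 1, the value that indicates no difference from cow milk formula. The following is only a reading aid, not part of the cited study: the class name `OddsRatio` and the method `excludes_null` are illustrative, and the numbers are copied from the text.

```python
from dataclasses import dataclass


@dataclass
class OddsRatio:
    """An odds ratio with its 95% confidence interval (illustrative helper)."""
    label: str
    estimate: float
    ci_low: float
    ci_high: float

    def excludes_null(self) -> bool:
        # The interval excludes the null value when it lies entirely
        # below or entirely above OR = 1.
        return self.ci_high < 1.0 or self.ci_low > 1.0


# Values as quoted in the text, each compared with cow milk formula [9].
results = [
    OddsRatio("extensively hydrolyzed casein", 0.51, 0.28, 0.92),
    OddsRatio("partially hydrolyzed whey", 0.65, 0.38, 1.1),
    OddsRatio("extensively hydrolyzed whey", 0.86, 0.52, 1.4),
]

for r in results:
    verdict = "CI excludes 1" if r.excludes_null() else "CI includes 1"
    print(f"{r.label}: OR {r.estimate} ({r.ci_low}-{r.ci_high}) -> {verdict}")
```

Only the interval for the extensively hydrolyzed casein-based formula lies entirely below 1, which is consistent with the text describing it as the best result and the two whey-based formulas as lesser effects.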

Although there have been many studies of the duration of exclusive breastfeeding and its effect on atopic disease, fewer studies have examined the timing of the introduction of complementary foods as an independent risk factor in breastfed or formula-fed infants. Most groups have recommended exclusive breastfeeding for between 4 and 6 months. However, there is currently no convincing evidence that delaying the introduction of complementary food beyond this period has a significant protective effect on the development of atopic disease, regardless of whether infants are fed cow milk protein formula or human milk [1]. Moreover, there is evidence that the early introduction of some complementary foods between 4 and 6 months may prevent food allergy [1,11], including highly allergenic foods such as wheat/gluten, fish, eggs, and foods containing peanut protein [1,11–19]. For instance, introducing cereal grains before 6 months of age (compared with later introduction) has been shown to be protective against wheat-specific immunoglobulin E [14,15]. For gluten, there is similar evidence that introduction between 4 and 6 months of age prevents celiac disease [11,17]. There is also evidence that introducing highly allergenic foods while still breastfeeding may be protective against food allergy. Thus, the risk of celiac disease in children less than 2 years of age is reduced if they are still breastfeeding at the time dietary gluten is introduced (OR 0.59; 95% CI 0.42–0.83), and is reduced further by continuing to breastfeed after introducing gluten (OR 0.36; 95% CI 0.26–0.51) [11]. Similarly, the timing of egg introduction in the first year of life affects allergic sensitization to eggs [12,13]. Compared with introduction at between 4 and 6 months, introducing egg at 7 to 9 months increases the risk for egg allergy (OR 1.4; 95% CI 1.2–3.0), and introducing egg between 10 and 12 months increases the risk even more (OR 6.5; 95% CI 3.6–11.6) [13].

A major concern remains the impact of early introduction of peanut-containing products and the effect on peanut allergy [16,18,19]. A recent epidemiology study from Israel found that the prevalence of peanut allergy was tenfold lower in Jewish people living in Israel, where peanut butter is used in the weaning food Bamba, compared with London-based Jewish families with much less exposure to peanut-containing products [18]. At present, there are 2 ongoing studies looking at the effect of peanut exposure early in life on the development of peanut allergy at 3 to 6 years, and the results of these are eagerly awaited [16].

Iron

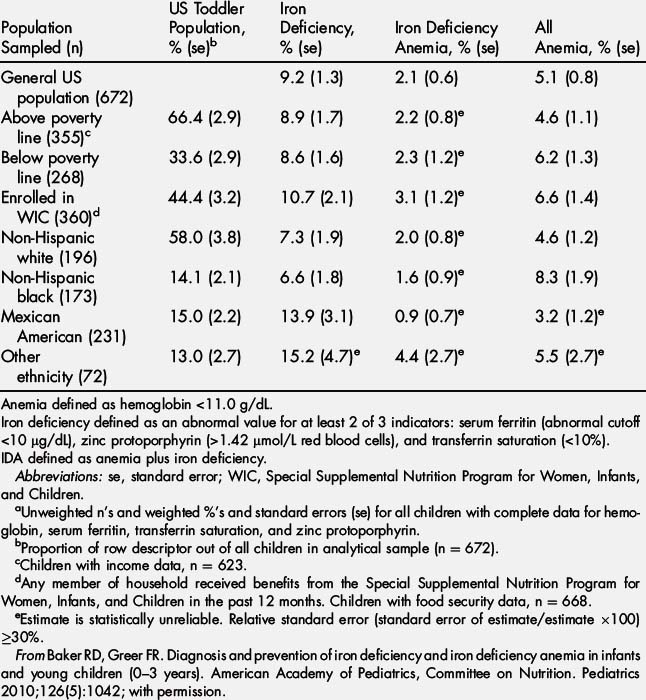

The AAP recently published its first official report on the diagnosis and prevention of iron deficiency (ID) and iron deficiency anemia (IDA) in infants and toddlers [2]. In the United States there has been a decline in prevalence of IDA, but it still accounts for about 20% of all anemias (hemoglobin [Hb]<11.0 g/dL) in young children between 12 and 35 months of age, with a prevalence of 2.1% [2] (Table 1). However, the prevalence of ID in young children between the ages of 12 and 35 months is much higher. It affects 9.2% of children between 12 and 35 months of age in the general US population and up to 15% of Mexican Americans of the same age group (see Table 1) [2].

IDA represents the severe form of iron deficiency and is associated with irreversible neurodevelopmental delay [2]. Given the likelihood that ID also has some effect on long-term neurodevelopment and behavior that may be irreversible, ID also remains a serious concern [2]. Because iron is prioritized to red cells more than other body tissues, its role in the transport of oxygen in the red blood cells is clearly its most critical function. This prioritization of iron for red cells, even more than the brain, would account for the adverse neurodevelopmental effects of iron deficiency with or without anemia in the infant. Iron is essential for neuronal proliferation, myelination, energy metabolism, neurotransmission, and various enzyme systems in the central nervous system [20].

The iron supplied in breast milk (averaging 0.27 mg/d assuming a breast milk intake of 0.78 L/d) coupled with the iron stores at birth in the healthy term infant, meets the iron needs only until the infant doubles its birth weight and thus doubles its blood volume, usually by 4 months of age. Between 4 and 6 months of age, there is a dramatic increase in the iron requirement, to 11 mg/d, for the remainder of the first year of life [2]. Thus, because of concerns about ID, the AAP has recommended that breastfed infants be supplemented with 1 mg/kg/d of oral iron beginning at 4 months of age until appropriate iron-containing complementary foods (including iron-fortified cereals) are introduced into the diet [2]. The best source of iron is heme iron found in red meat. Because breast milk contains no heme iron, the early introduction of complementary foods containing meat is advised. It is acknowledged that this would require a significant paradigm shift in the way complementary foods are generally introduced in the diets of infants by pediatric health care providers in the United States. For formula-fed infants, the iron needs for the first 12 months of life can be met by a standard infant formula (iron content 10–12 mg/L) and with the introduction of iron-rich complementary foods after 4 to 6 months of age [2]. Whole milk should not be used before 12 completed months of age.
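The arithmetic behind these figures can be sketched briefly. The snippet below derives the breast milk iron concentration implied by the quoted intake figures and applies the 1 mg/kg/d supplement to a given body weight. The function names and the 6.5 kg example weight are hypothetical, chosen only for illustration; this is not a dosing tool.

```python
# Figures quoted in the text [2].
BREAST_MILK_IRON_MG_PER_DAY = 0.27    # average iron supplied by breast milk
BREAST_MILK_INTAKE_L_PER_DAY = 0.78   # assumed daily breast milk intake
SUPPLEMENT_MG_PER_KG_PER_DAY = 1.0    # AAP supplement for breastfed infants


def breast_milk_iron_mg_per_l() -> float:
    """Iron concentration implied by the quoted daily supply and intake."""
    return BREAST_MILK_IRON_MG_PER_DAY / BREAST_MILK_INTAKE_L_PER_DAY


def daily_supplement_mg(weight_kg: float,
                        mg_per_kg: float = SUPPLEMENT_MG_PER_KG_PER_DAY) -> float:
    """Weight-based daily oral iron amount (illustrative arithmetic only)."""
    if weight_kg <= 0:
        raise ValueError("weight_kg must be positive")
    return weight_kg * mg_per_kg


print(f"Implied breast milk iron: {breast_milk_iron_mg_per_l():.2f} mg/L")

# Hypothetical 6.5 kg infant at 4 months of age.
print(f"Supplement at 1 mg/kg/d: {daily_supplement_mg(6.5):.1f} mg/d")
```

The implied concentration of roughly 0.35 mg/L is far below the 10 to 12 mg/L quoted for standard infant formula, which helps explain why supplementation is recommended for breastfed infants in particular.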

For toddlers from 1 to 3 years of age, the AAP recommends an iron intake of 7 mg/d [2]. Again, this is best delivered by eating red meats, whole-grain cereals fortified with iron, vegetables that contain iron (ie, legumes), and fruits with vitamin C, which augments iron absorption. An alternative source of iron is liquid iron supplements up to 36 months of age, after which time chewable multivitamins can be used.

Because 80% of iron stores are accumulated during the last trimester of pregnancy, preterm infants need an iron intake of 2 mg/kg/d through 12 months of age, which can be supplied by iron-fortified formulas [2,21]. If fed human milk, these infants should receive an iron supplement of 2 mg/kg/d by 1 month of age and this should be continued until the infant is weaned to iron-fortified formula or eats enough complementary foods to supply 2 mg/kg/d of iron. An exception to this practice would be the preterm infant who has received an iron load from multiple packed red blood cell transfusions.

The AAP has also recommended universal screening for IDA and ID at 12 months of age, with a determination of Hb concentration and an assessment of risk factors associated with ID/IDA [2]. These risk factors include low socioeconomic status (especially in infants of Mexican American descent), a history of prematurity or low birth weight, exposure to lead, exclusive breastfeeding beyond 4 months of age without supplemental iron, and weaning to whole milk or complementary foods that do not include iron-fortified cereals or food naturally rich in iron. Other risk factors are feeding problems, poor growth, and the inadequate nutrition typically seen in infants with special health care needs. If, as a result of this screen, the Hb is less than 11.0 g/dL, then further work-up is required to establish the cause of anemia [2]. Regardless of the Hb, if there is a high risk of dietary ID, then further testing should be performed, given the potential adverse effects of ID on neurodevelopmental outcomes.

The AAP recognized that it was desirable to use the fewest tests that will accurately reflect iron status, and that, at the present time, there is no ideal test to screen for ID/IDA in the pediatric age group. The gold standard for determination of iron stores has been a measurement of bone marrow iron. However, serum ferritin (SF) is recognized as a sensitive parameter for the assessment of iron stores in healthy children. An SF of 1 μg/L corresponds to 8 to 10 mg of available storage iron, and a cutoff of 10 μg/L has been recommended in children [2]. However, SF is an acute-phase reactant, and its concentration is increased in the presence of inflammation/infection, malignancy, and liver disease. A simultaneous C-reactive protein (CRP) measurement is therefore always required to rule out inflammation or infection. In contrast, reticulocyte hemoglobin concentration (CHr), a test that is not familiar to most pediatric health care providers, is not affected by inflammation/infection or malignancy, and is a much more desirable biomarker for iron deficiency [22,23]. The CHr assay makes use of flow cytometry, and 2 of the 4 automated hematology analyzers commonly used in the United States have the capability to measure CHr [23]. It provides a measure of the iron available to cells recently released from the bone marrow. A low CHr concentration has been shown to be the strongest predictor of ID in children [22,23]. Therefore, the AAP has recommended the following screening tests for ID/IDA, along with a screen for Hb, when there is a risk for ID: SF together with CRP, or CHr.

In the AAP report, serum transferrin receptor 1 (TfR1) concentration was acknowledged as a potential marker for the iron status of infants and children, and it has been promoted by the World Health Organization as a screening test for ID [24]. It is a measure of iron status and detects ID at the cellular level. TfR1 is found on cell membranes and facilitates transfer of iron into the cell. When iron supply is inadequate, TfR1 is upregulated to enable the cell to compete more effectively for iron, and subsequently more circulating TfR1 is found in serum. However, the TfR1 assay is not widely available and standard values for infants and children have not been established, so it cannot be recommended as a screening test at this time [2].
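The Hb and SF thresholds discussed above can be collected into a small sketch. It assumes only the figures stated in the text (Hb less than 11.0 g/dL, an SF cutoff of 10 μg/L, and 8 to 10 mg of storage iron per 1 μg/L of SF). The text gives no numeric CRP threshold, so the caller supplies a `crp_elevated` flag. The function names and message strings are hypothetical, and the code illustrates the reasoning rather than serving as a clinical algorithm.

```python
HB_ANEMIA_CUTOFF_G_DL = 11.0            # Hb below this prompts further work-up
SF_CUTOFF_UG_L = 10.0                   # recommended SF cutoff in children
STORAGE_IRON_MG_PER_UG_L = (8.0, 10.0)  # storage iron per 1 ug/L of SF


def storage_iron_range_mg(sf_ug_l: float) -> tuple[float, float]:
    """Approximate storage iron (low, high) in mg implied by an SF value."""
    low, high = STORAGE_IRON_MG_PER_UG_L
    return sf_ug_l * low, sf_ug_l * high


def interpret_screen(hb_g_dl: float, sf_ug_l: float, crp_elevated: bool) -> list[str]:
    """Return plain-language flags for one set of screening results."""
    findings = []
    if hb_g_dl < HB_ANEMIA_CUTOFF_G_DL:
        findings.append("Hb below 11.0 g/dL: further work-up for anemia")
    if sf_ug_l < SF_CUTOFF_UG_L:
        findings.append("SF below cutoff: low iron stores")
    elif crp_elevated:
        # SF is an acute-phase reactant, so a value at or above the
        # cutoff cannot rule out ID when inflammation is present.
        findings.append("SF not reliable: may be raised by inflammation")
    return findings


print(storage_iron_range_mg(10.0))
print(interpret_screen(hb_g_dl=10.5, sf_ug_l=8.0, crp_elevated=False))
print(interpret_screen(hb_g_dl=11.8, sf_ug_l=25.0, crp_elevated=True))
```

At the 10 μg/L cutoff, the implied storage iron is 80 to 100 mg. The third call shows why SF needs a simultaneous CRP: an apparently adequate SF is flagged as unreliable when inflammation is present.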

There have been some concerns about harmful effects of iron supplements on infants. In general, no ill effects have been reported in infants given formula with up to 12 mg/L of iron during the first year of life; such formulas have been used for more than 30 years in the United States [2]. Evidence is insufficient to associate iron-containing formulas with gastrointestinal symptoms. No studies have convincingly documented decreased linear growth in iron-replete infants given medicinal iron [2]. In addition, data on the effect of iron on infection have been contradictory, and a systematic review has concluded that iron supplementation has no impact on infections in children [25].

In summary, many studies provide evidence that both IDA and ID have adverse effects on cognitive and behavioral development [26–32]. Iron has been shown to be essential for normal neurodevelopment in several animal models. Therefore, it is important to minimize IDA and ID in infants and toddlers even if unequivocal evidence is not yet available [33,34].

Vitamin D

Vitamin D continues to be in the forefront of human nutrition. Both the AAP and the Institute of Medicine have recently recommended increased intakes of vitamin D for children [3,4]. For infants in the first 12 months of life, the recommended vitamin D intake has been increased from 200 to 400 IU/d. For children and adolescents 1 to 18 years of age, the recommendation has been increased from 200 to 600 IU/d. These recommendations are based on the intake of vitamin D needed to maintain serum levels of at least 50 nmol/L (20 ng/mL), a level that covers the needs of 97.5% of the US population based on the present evidence [4].
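Because the recommendations mix units (IU for intake, nmol/L and ng/mL for serum levels), a short conversion sketch may help. It relies on two standard conversion factors that are not stated in the text and are therefore flagged as assumptions: 1 ng/mL of 25-hydroxy vitamin D is about 2.496 nmol/L, and 40 IU of vitamin D is 1 μg. The function names are illustrative.

```python
# Assumed standard conversion factors (not given in the article itself).
NMOL_L_PER_NG_ML = 2.496   # 25-hydroxy vitamin D: 1 ng/mL is about 2.496 nmol/L
IU_PER_MICROGRAM = 40.0    # vitamin D: 40 IU equals 1 microgram


def nmol_l_to_ng_ml(nmol_l: float) -> float:
    """Convert a serum 25-hydroxy vitamin D level from nmol/L to ng/mL."""
    return nmol_l / NMOL_L_PER_NG_ML


def iu_to_micrograms(iu: float) -> float:
    """Convert a vitamin D intake from IU to micrograms."""
    return iu / IU_PER_MICROGRAM


print(f"50 nmol/L = {nmol_l_to_ng_ml(50):.1f} ng/mL")
for intake_iu in (200, 400, 600):
    print(f"{intake_iu} IU/d = {iu_to_micrograms(intake_iu):.0f} ug/d")
```

Under these factors the 50 nmol/L target matches the 20 ng/mL given in the text, and the 400 and 600 IU/d intakes correspond to 10 and 15 μg/d.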

Cases of rickets in infants secondary to inadequate vitamin D intake and decreased exposure to sunlight continue to be reported in the United States [35–38]; rickets represents an extreme degree of vitamin D deficiency. Most of these cases have been reported in infants who are exclusively breastfed without supplemental vitamin D, because the vitamin D content of human milk is minimal [39]. Infants with rickets often have increased skin pigmentation and a history of decreased sunshine exposure; moreover, in many areas of the United States, sunshine exposure during the winter months is not effective for the cutaneous synthesis of vitamin D [3,4,40]. Vitamin D deficiency can be prevented in breastfed infants with a vitamin D supplement of 400 IU/d, continued until they are receiving adequate amounts of vitamin D from vitamin D–fortified formula or dairy products [3,4].

In understanding the growing importance of vitamin D beyond rickets and skeletal health, it must be realized that vitamin D is not a vitamin but a hormone or a prohormone. Like all hormones in the steroid family, its synthesis begins with cholesterol. In the case of vitamin D, 7-dehydrocholesterol in the skin is converted to the parent compound vitamin D3 (cholecalciferol) with exposure to sunlight (ultraviolet-B radiation). The parent compound is then hydroxylated in the liver to form 25-hydroxy vitamin D3 (calcidiol) and hydroxylated again in the kidney to form 1,25-dihydroxy vitamin D3 (calcitriol). Though 1,25-(OH)2 vitamin D3 is the true hormone that affects gene transcription at the cellular level, the most plentiful form of the vitamin in circulation (by a factor of 10) is 25-OH vitamin D3. It is believed to be the best marker for vitamin D status, again with an ideal level greater than or equal to 50 nmol/L (20 ng/mL) set by the Institute of Medicine (IOM) [4]. However, for the classic endocrine function of vitamin D, it is 1,25-(OH)2 vitamin D3 that acts in concert with parathyroid hormone to tightly regulate calcium metabolism and maintain the skeletal system [41,42].

The hormone 1,25-(OH)2 vitamin D3 promotes gene transcription through its interaction with genomic (nuclear) vitamin D receptors (VDRs) present in many types of cells [42]. It is these genomic VDRs, found in many different cells in the body, that have led to the association of vitamin D deficiency with many chronic diseases including hypertension, atherosclerosis/myocardial infarction, type 1 and 2 diabetes, obesity, colorectal cancer, prostate cancer, breast cancer, ovarian cancer, multiple sclerosis, rheumatoid arthritis, inflammatory bowel disease, and even wheezing in early childhood [3,4,41,43]. There is some plausibility for these nonendocrine functions of vitamin D, given new in vitro evidence that 25-OH vitamin D can be taken up by cells other than those in the kidney and hydroxylated at the 1 position to synthesize 1,25-dihydroxy vitamin D locally, where it has autocrine (within the cell) or paracrine (nearby cells) effects [42]. This process has been best shown in the immune system, where VDRs are found in the nuclei of monocytes, macrophages, dendritic cells, natural killer cells, T cells, and B cells [44]. In cell culture, 1,25-dihydroxy vitamin D can be shown to alter cytokine secretion patterns, suppress T-cell activation, affect maturation and migration of dendritic cells, enhance phagocytic activity of macrophages, and increase the activity of natural killer cells. However, these potential nonendocrine, nonskeletal outcomes of vitamin D deficiency have not been supported by adequate clinical trials showing that vitamin D is causally related to the disease process [3,4].

One of the most frequently cited studies in pediatrics is a Finnish study showing a relationship between the incidence of type 1 diabetes and vitamin D intake during childhood [45]. This study was done in Lapland, whose population has the highest known incidence of type 1 diabetes. A role for vitamin D is supported by the observation that type 1 diabetes is far more common in northern latitudes, where sunshine exposure is decreased and serum 25-OH vitamin D levels are low in winter. In animal and cell culture models, vitamin D also seems to stimulate insulin release and insulin receptor expression [46]. As noted earlier, vitamin D may play a role in immune function, and type 1 diabetes is known to be an autoimmune process directed against the β cells of the pancreas [46]. However, the Finnish study was a retrospective birth cohort study in children born in 1966, during a time period when the recommended vitamin D intake for children in this part of Finland was 2000 IU per day [45]. Though serum 25-OH vitamin D levels are known to be low in northern Finland, no 25-OH vitamin D levels were measured in these subjects, and the vitamin D intake was estimated from retrospective reviews of medical records. Thus, although the epidemiologic association seems to be strong, this paper does not shed light on vitamin D and the causality of type 1 diabetes, which also has a strong genetic component.

The recent IOM report has concluded that the data do not provide compelling evidence that vitamin D is related to extraskeletal health outcomes and advises against the large intakes of vitamin D recommended by some vitamin D advocates [4]. In addition, though the measurement of 25-OH vitamin D level in children and adolescents indicates vitamin D exposure and status, the information available is not adequate to use 25-hydroxy vitamin D as a biomarker for an intermediate health endpoint (eg, blood pressure) or disease (eg, rickets, or osteoporosis) [4]. It is not recommended at this time to measure serum 25-OH vitamin D concentrations in children as a routine screening tool for vitamin D deficiency [3,4].

Lipid screening and the use of low-fat dairy products

The AAP recommended new guidelines for lipid screening and cardiovascular health in children in 2008 [5]. It recommended a population approach to a healthful diet for all children older than 2 years, in accordance with the Dietary Guidelines for Americans [47]. This recommendation included the use of low-fat dairy products for all children more than 2 years of age. However, a little-noticed recommendation from the AAP report was that the use of reduced-fat milk would be appropriate for children between the ages of 12 months and 2 years for whom overweight (body mass index [BMI] ≥85th percentile but <95th percentile) or obesity (BMI ≥95th percentile) is a concern, or who have a family history of obesity, dyslipidemia, or cardiovascular disease (CVD) [5]. Previously, the AAP had recommended the use of whole milk until 24 completed months of age [48]. The new recommendation was largely based on the increasing risk for overweight in young children and results of the ongoing Special Turku Risk Intervention Program [49]. This program was a randomized dietary intervention study beginning at approximately 7 months of age with weaning from breast milk. Children in the intervention group were maintained on a diet with total fat less than 30% of calories, saturated fat less than 10% of calories, and cholesterol intake of less than 200 mg/d, using 1.5% cow milk after 12 months of age. Outcomes in the study included both growth and neurologic function. No adverse effects of the intervention diet were observed on growth or neurologic and cognitive outcomes through 5 years of age [50]. Other significant observations included lower low-density lipoprotein concentrations in boys and a decreased prevalence of obesity in girls in the intervention group, compared with the controls. The study concluded that there was no adverse effect of introducing reduced-fat milk in children beginning at 12 months of age.
However, the new Special Supplemental Nutrition Program for Women, Infants, and Children (WIC) food programs instituted in the United States in October of 2009 have specifically excluded any low-fat or reduced-fat milk for children before 24 months completed age. Before this time, some WIC programs had dispensed low-fat milk after the first year of life to toddlers who were overweight or at risk for overweight (Frank R. Greer, MD, personal observations, 2009).
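The dietary targets used in the intervention group can be checked with simple arithmetic. The sketch below converts grams of fat to a percentage of calories using the standard factor of 9 kcal per gram of fat (an assumption not stated in the text) and compares a day's intake with the three quoted limits. The function names and the example intake are hypothetical.

```python
KCAL_PER_G_FAT = 9.0            # standard energy factor for fat (assumed)
MAX_TOTAL_FAT_PCT = 30.0        # total fat: less than 30% of calories
MAX_SATURATED_FAT_PCT = 10.0    # saturated fat: less than 10% of calories
MAX_CHOLESTEROL_MG = 200.0      # cholesterol: less than 200 mg/d


def percent_kcal_from_fat(fat_g: float, total_kcal: float) -> float:
    """Percentage of total energy supplied by a given mass of fat."""
    if total_kcal <= 0:
        raise ValueError("total_kcal must be positive")
    return 100.0 * fat_g * KCAL_PER_G_FAT / total_kcal


def meets_intervention_targets(total_kcal: float, total_fat_g: float,
                               saturated_fat_g: float, cholesterol_mg: float) -> bool:
    """True when a day's intake is under all three quoted limits."""
    return (percent_kcal_from_fat(total_fat_g, total_kcal) < MAX_TOTAL_FAT_PCT
            and percent_kcal_from_fat(saturated_fat_g, total_kcal) < MAX_SATURATED_FAT_PCT
            and cholesterol_mg < MAX_CHOLESTEROL_MG)


# Hypothetical toddler intake: 1000 kcal, 30 g fat, 10 g saturated fat, 150 mg cholesterol.
print(percent_kcal_from_fat(30, 1000))
print(meets_intervention_targets(1000, 30, 10, 150))
```

In this made-up example, 30 g of fat supplies 27% of 1000 kcal and 10 g of saturated fat supplies 9%, so all three limits are met.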

The AAP report also recommended targeted lipid screening for children sometime after 2 years of age but no later than 10 years of age [5]. Children and adolescents who are targeted for a fasting lipid profile are those with a positive family history of dyslipidemia or premature CVD (≤55 years of age for men and ≤65 years of age for women). Screening with a fasting lipid profile is also recommended for pediatric patients for whom family history is unknown, or for those with CVD risk factors such as overweight, obesity, hypertension (blood pressure ≥95th percentile), cigarette smoking, or diabetes mellitus [5].
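The targeting criteria in this paragraph amount to a simple rule: a fasting lipid profile is indicated when family history is positive or unknown, or when any listed CVD risk factor is present. A minimal sketch of that rule follows. The function name and string labels are hypothetical, the age window for screening is deliberately left out, and the code illustrates the logic only.

```python
# Risk factors named in the text; the string labels are illustrative.
CVD_RISK_FACTORS = frozenset({
    "overweight", "obesity", "hypertension",
    "cigarette smoking", "diabetes mellitus",
})
FAMILY_HISTORY_VALUES = frozenset({"positive", "negative", "unknown"})


def targeted_for_fasting_lipid_profile(family_history: str, risk_factors=()) -> bool:
    """Apply the targeting rule described in the text (age window not modeled)."""
    if family_history not in FAMILY_HISTORY_VALUES:
        raise ValueError(f"unexpected family history: {family_history!r}")
    present = set(risk_factors)
    unrecognized = present - CVD_RISK_FACTORS
    if unrecognized:
        raise ValueError(f"unrecognized risk factors: {sorted(unrecognized)}")
    # Positive or unknown family history, or any listed risk factor.
    return family_history in {"positive", "unknown"} or bool(present)


print(targeted_for_fasting_lipid_profile("negative"))
print(targeted_for_fasting_lipid_profile("unknown"))
print(targeted_for_fasting_lipid_profile("negative", ["hypertension"]))
```

Treating an unknown family history the same as a positive one mirrors the text, which recommends screening when family history cannot be established.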

New guidelines for lipid screening and cardiovascular health from the National Heart, Lung, and Blood Institute are expected late in 2011, and some changes for the pediatric population are anticipated.

Probiotics and prebiotics

The AAP recently published a clinical report on the use of probiotics and prebiotics in pediatrics [6]. Probiotics are dietary supplements or foods (including infant formula) that contain viable microorganisms that alter the microflora of the host. Typically these microorganisms are members of the genera Lactobacillus, Bifidobacterium, and Streptococcus. These organisms are fermentative, obligate or facultative anaerobes typically found in the digestive tract, where they predominate and prevail over potential pathogenic microorganisms. Probiotic bacteria are believed to exert positive effects on the development of the mucosal immune system [51,53].

Prebiotics are usually in the form of oligosaccharides that may occur naturally but are now added as dietary supplements to foods, beverages, and infant formula. Although indigestible by humans, their presence in the digestive system selectively enhances proliferation of certain probiotic bacteria in the colon, especially Bifidobacterium species. Commercially available prebiotics include fructooligosaccharides (FOSs), galactooligosaccharides (GOSs), soybean oligosaccharides, and inulin. Inulin is a composite oligosaccharide that contains several FOS molecules. Human milk contains hundreds of identifiable prebiotic oligosaccharides and the identification of the most significant forms is the goal of ongoing research. These prebiotics have a significant influence on the preponderance of naturally occurring probiotic bacteria in the digestive system of breastfed infants [6].

Probiotics and prebiotics are added to many food products marketed to children and adolescents, including infant formula. When marketed as dietary supplements (not for treatment or prevention of a disease), they do not require premarket review and approval by the US Food and Drug Administration (FDA) [6]. Probiotics and prebiotics are also available as over-the-counter dietary supplements.

The AAP concluded in its report that the benefits of probiotics or prebiotics to healthy children and adolescents are largely unproven, including the benefits of those added to food products marketed to children. However, there is no evidence that they cause any harm to healthy children and adolescents, although long-term effects, including those on the gut microflora, are unknown. Probiotics should not be given to children who are seriously or chronically ill until the safety of their administration to this population has been established [6].

The AAP has also noted that the addition of probiotics to powdered infant formula (they cannot be added to liquid formula, which is sterile) has not been shown to be harmful to healthy term infants. However, evidence of clinical efficacy for their addition is insufficient to recommend the routine use of these formulas at this time [6]. Furthermore, no randomized trials have directly compared the health benefits of feeding human milk versus infant formulas supplemented with probiotics. It also noted that, although prebiotics may prove to be beneficial in reducing common infections and atopy in otherwise healthy infants, confirmatory evidence in infants fed formula that is not also partially hydrolyzed is needed before any recommendations can be made [6].

The prebiotics and probiotics that are now being added to commercially available infant formulas are classified as generally recognized as safe (GRAS) as long as they are used in accordance with the food-additive regulations of the FDA. However, there is no cost-benefit information on their addition, particularly to infant formula. As dietary supplements in food products marketed for infants and children, they are not known to be harmful to healthy subjects. However, probiotics should not be given to children who are seriously or chronically ill until the safety of administration has been established [6].

References

[1] F.R. Greer, S.H. Sicherer, A.W. Burks. Effects of early nutritional interventions on the development of atopic disease in infants and children: the role of maternal dietary restriction, breastfeeding, timing of introduction of complementary foods, and hydrolyzed formulas. American Academy of Pediatrics, Committee on Nutrition and Section on Allergy and Immunology. Pediatrics. 2008;121:183-199.

[2] R.D. Baker, F.R. Greer. Diagnosis and prevention of iron deficiency and iron deficiency anemia in infants and young children (0–3 years). American Academy of Pediatrics, Committee on Nutrition. Pediatrics. 2010;126(5):1040-1050.

[3] C.L. Wagner, F.R. Greer. Prevention of rickets and vitamin D deficiency in infants, children, and adolescents. American Academy of Pediatrics, Committee on Nutrition, Section on Breastfeeding. Pediatrics. 2008;122:1142-1152.

[4] A.C. Ross, C.L. Taylor, A.L. Yaktine, et al. Dietary reference intakes for calcium and vitamin D. Committee to Review Dietary Reference Intakes for Vitamin D and Calcium, Food and Nutrition Board, Institute of Medicine. Washington, DC: The National Academies Press; 2011.

[5] S.R. Daniels, F.R. Greer. Lipid screening and cardiovascular health in childhood. Pediatrics. 2008;122:198-208.

[6] D.W. Thomas, F.R. Greer. Probiotics and prebiotics in pediatrics. Clinical report. American Academy of Pediatrics, Committee on Nutrition, and Section on Gastroenterology and Nutrition. Pediatrics. 2010;126:1217-1231.

[7] American Academy of Pediatrics. Committee on Nutrition. Hypoallergenic infant formulas. Pediatrics. 2000;106:346-349.

[8] J. Bhatia, F.R. Greer. The use of soy protein-based formulas in infant feeding. American Academy of Pediatrics, Committee on Nutrition, clinical report. Pediatrics. 2008;121:1062-1068.

[9] A. Von Berg, S. Koletzko, A. Gurbl, et al. The effect of hydrolyzed cow’s milk formula for allergy prevention in the first year of life: the German Infant Nutritional Intervention Study, a randomized double-blind trial. J Allergy Clin Immunol. 2003;111:533-540.

[10] A. Von Berg, B. Filipiak-Pittroff, U. Kramer, et al. Preventative effect of hydrolyzed infant formulas persists until age 6 years: long-term results from the German Infant Nutritional Intervention Study (GINI). J Allergy Clin Immunol. 2008;121:1442-1447.

[11] C. Agostoni, T. Decsi, M. Fewtrell, et al. Complementary feeding: a commentary by the ESPGHAN Committee on Nutrition. J Pediatr Gastroenterol Nutr. 2008;46:99-110.

[12] B.I. Nwaru, M. Erkkola, S. Ahonen, et al. Age at the introduction of solid foods during the first year and allergic sensitization at age 5 years. Pediatrics. 2010;125:50-59.

[13] J.J. Koplin, N.J. Osborne, M. Wake, et al. Can early introduction of egg prevent egg allergy in infants? A population-based study. J Allergy Clin Immunol. 2010;126:807-813.

[14] J.A. Poole, K. Barriga, M. Hoffman, et al. Timing of initial exposure to cereal grains and the risk of wheat allergy. Pediatrics. 2006;117:2175-2182.

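[15] A. Zutavern, I. Brockow, B. Schaaf, et al. Timing of solid food introduction in relation to eczema, asthma, allergic rhinitis, and food and inhalant sensitization at the age of 6 years: results from the prospective birth cohort study LISA. Pediatrics. 2008;121:e44-e52.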

[16] S. McLean, A. Sheikh. Does avoidance of peanuts in early life reduce the risk of peanut allergy? BMJ. 2010;340:c424.

[17] A. Carlsson, D. Agardh, S. Borulf, et al. Prevalence of celiac disease: before and after a national change in feeding recommendations. Scand J Gastroenterol. 2006;41:553-558.

[18] G. Du Toit, Y. Katz, P. Sasieni, et al. Early consumption of peanuts in infancy is associated with a low prevalence of peanut allergy. J Allergy Clin Immunol. 2008;122:984-991.

[19] R.L. Thompson, L.M. Miles, J. Lunn, et al. Peanut sensitization and allergy: influence of early life exposure to peanuts. Br J Nutr. 2010;103:1278-1286.

[20] N.C. Andrews. Iron deficiency and related disorders. In: J.P. Greer, J. Foerster, J.N. Lukens, editors. Wintrobe’s clinical hematology. 11th edition. Philadelphia: Lippincott Williams & Wilkins; 2004:979-1009.

[21] R. Raghavendra, M. Georgieff. Microminerals. In: R.C. Tsang, R. Uauy, B. Koletzko, et al, editors. Nutrition of the preterm infant: scientific basis and practical guidelines. Cincinnati (OH): Digital Educational Publishing; 2005:277-310.

[22] C. Ulrich, A. Wu, C. Armsby, et al. Screening healthy infants for iron deficiency using reticulocyte hemoglobin content. JAMA. 2005;294(8):924-930.

[23] C. Brugnara, B. Schiller, J. Moran. Reticulocyte hemoglobin equivalent (Ret He) and assessment of iron-deficient states. Clin Lab Haematol. 2006;28(5):303-308.

[24] World Health Organization. Assessing the iron status of populations: report of a joint World Health Organization/Centers for Disease Control and Prevention technical consultation on the assessment of iron status at the population level. Geneva (Switzerland): World Health Organization; April 6–8, 2004. Available at: http://whqlibdoc.who.int/publications/2004/9241593156_eng.pdf. Accessed April 13, 2011.

[25] T. Gera, H.P. Sachdev. Effect of iron supplementation on incidence of infectious illness in children: a systematic review. BMJ. 2002;325(7373):1142.

[26] J.K. Friel, W.L. Andrews, K. Aziz, et al. A randomized trial of two levels of iron supplementation and developmental outcome in low birth weight infants. J Pediatr. 2001;139:254-260.

[27] B. Lozoff, E. Jimenez, A.W. Wolf. Long-term developmental outcome of infants with iron deficiency. N Engl J Med. 1991;325(10):687-694.

[28] P. Idjradinata, E. Pollitt. Reversal of developmental delays in iron-deficient anaemic infants treated with iron. Lancet. 1993;341:1-4.

[29] R. Armony-Sivan, A.I. Eidelman, A. Lanir, et al. Iron status and neurobehavioral development of premature infants. J Perinatol. 2004;24(12):757-762.

[30] B. Lozoff, E. Jimenez, J. Hagen, et al. Poorer behavioral and developmental outcome more than 10 years after treatment for iron deficiency in infancy. Pediatrics. 2000;105:E51.

[31] J.C. McCann, B.N. Ames. An overview of evidence for a causal relation between iron deficiency during development and deficits in cognitive or behavioral function. Am J Clin Nutr. 2007;85(4):931-945.

[32] S. Martins, S. Logan, R. Gilbert. Iron therapy for improving psychomotor development and cognitive function in children under the age of three with iron deficiency anaemia. Cochrane Database Syst Rev. 2001;(2):CD001444.

[33] E.S. Carlson, I. Tkac, R. Magid, et al. Iron is essential for neuron development and memory function in mouse hippocampus. J Nutr. 2009;139(4):672-679.

[34] P.V. Tran, S.J. Fretham, E.S. Carlson, et al. Long-term reduction of hippocampal brain-derived neurotrophic factor activity after fetal-neonatal iron deficiency in adult rats. Pediatr Res. 2009;65(5):493-498.

[35] S.R. Kreiter, R.P. Schwartz, H.N. Kirkman Jr, et al. Nutritional rickets in African American breast-fed infants. J Pediatr. 2000;137:153-157.

[36] M.T. Pugliese, D.L. Blumberg, J. Hludzinski, et al. Nutritional rickets in suburbia. J Am Coll Nutr. 1998;17(6):637-641.

[37] L.M. Ward. Vitamin D deficiency in the 21st century: a persistent problem among Canadian infants and mothers. CMAJ. 2005;172:769-770.

[38] P. Weisberg, K.S. Scanlon, R. Li, et al. Nutritional rickets among children in the United States: review of cases reported between 1986–2003. Am J Clin Nutr. 2004;80:1697S-1705S.

[39] C.J. Lammi-Keefe. Vitamins D and E in human milk. In: R.G. Jensen, editor. Handbook of milk composition. San Diego (CA): Academic Press; 1995:706-717.

[40] A.R. Webb, L. Kline, M.F. Holick. Influence of season and latitude on the cutaneous synthesis of vitamin D3: exposure to winter sunlight in Boston and Edmonton will not promote vitamin D3 synthesis in human skin. J Clin Endocrinol Metab. 1988;67(2):373-378.

[41] M.F. Holick, T.C. Chen. Vitamin D deficiency: a worldwide problem with health consequences. Am J Clin Nutr. 2008;87(Suppl):1080S-1086S.

[42] A.W. Norman. From vitamin D to hormone D: fundamentals of the vitamin D endocrine system for good health. Am J Clin Nutr. 2008;88(Suppl):491S-499S.

[43] M. Misra, D. Pacaud, A. Petryk, et al. Vitamin D deficiency in children and its management: review of current knowledge and recommendations. Pediatrics. 2008;122:398-417.

[44] M. Hewison. Vitamin D and the immune system: new perspectives on an old theme. Endocrinol Metab Clin North Am. 2010;39(2):365-379.

[45] E. Hypponen, E. Laara, A. Reunanen, et al. Intake of vitamin D and risk of type 1 diabetes: a birth-cohort study. Lancet. 2001;358(9292):1500-1503.

[46] C. Mathieu, K. Badenhoop. Vitamin D and type 1 diabetes mellitus: state of the art. Trends Endocrinol Metab. 2005;16(6):261-266.

[47] US Department of Health and Human Services. Dietary Guidelines for Americans 2010. Available at: http://www.health.gov/dietaryguidelines/. Accessed February 15, 2011.

[48] American Academy of Pediatrics. National Cholesterol Education Program: report of the expert panel on blood cholesterol levels in children and adolescents. Pediatrics. 1992;89:525-584.

[49] P. Salo, J. Viikari, M. Hamalainen, et al. Serum cholesterol ester fatty acids in 7- and 13-month children in prospective randomized trial of a low-saturated fat, low-cholesterol diet: the STRIP baby project. Special Turku Coronary risk factor intervention project for children. Acta Paediatr. 1999;88:505-512.

[50] L. Rask-Nissila, E. Jokinen, T. Ronnemaa, et al. Prospective, randomized, infancy-onset trial of the effects of a low-saturated-fat, low-cholesterol diet on serum lipids and lipoproteins before school age: the Special Turku coronary risk factor intervention project (STRIP). Circulation. 2000;102:1477-1483.

[51] B. Corthesy, H.R. Gaskins, A. Mercenier. Cross-talk between probiotic bacteria and the host immune system. J Nutr. 2007;137(3 Suppl 2):781S-790S.

[52] T. Matsuzaki, A. Takagi, H. Ikemura, et al. Intestinal microflora: probiotics and autoimmunity. J Nutr. 2007;137(3 Suppl 2):798S-802S.

[53] Q. Yuan, W.A. Walker. Innate immunity of the gut: mucosal defense in health and disease. J Pediatr Gastroenterol Nutr. 2004;38(5):463-473.