Part 9: Terrorism and Clinical Medicine

261e Microbial Bioterrorism

Descriptions of the use of microbial pathogens as potential weapons of war or terrorism date from ancient times. Among the most frequently cited of such episodes are the poisoning of water supplies in the sixth century B.C. with the fungus Claviceps purpurea (rye ergot) by the Assyrians, the hurling of the dead bodies of plague victims over the walls of the city of Kaffa by the Tartar army in 1346, and the efforts by the British to spread smallpox to the Native American population loyal to the French via contaminated blankets in 1763. The tragic attacks on the World Trade Center and the Pentagon on September 11, 2001, followed closely by the mailing of letters containing anthrax spores to media and congressional offices through the U.S. Postal Service, dramatically changed the mindset of the American public regarding both our vulnerability to microbial bioterrorist attacks and the seriousness and intent of the federal government to protect its citizens against future attacks. Modern science has revealed methods of deliberately spreading or enhancing disease in ways not appreciated by our ancestors. The combination of basic research, good medical practice, and constant vigilance will be needed to defend against such attacks.

Although the potential impact of a bioterrorist attack could be enormous, leading to thousands of deaths and high morbidity rates, acts of bioterrorism would be expected to produce their greatest impact through the fear and terror they generate. In contrast to biowarfare, where the primary goal is destruction of the enemy through mass casualties, an important goal of bioterrorism is to destroy the morale of a society through fear and uncertainty. Although the actual biologic impact of a single act may be small, the degree of disruption created by the realization that such an attack is possible may be enormous. This was readily apparent with the impact on the U.S. Postal Service and the functional interruption of the activities of the legislative branch of the U.S. government following the anthrax attacks noted above. Thus, the key to the defense against these attacks is a highly functioning system of public health surveillance and education so that attacks can be quickly recognized and effectively contained. This is complemented by the availability of appropriate countermeasures in the form of diagnostics, therapeutics, and vaccines, both in response to and in anticipation of bioterrorist attacks.

The Working Group for Civilian Biodefense created a list of key features that characterize the elements of biologic agents that make them particularly effective as weapons (Table 261e-1). Included among these are the ease of spread and transmission of the agent and the presence of an adequate database to allow newcomers to the field to quickly apply the good science of others to bad intentions of their own. Agents of bioterrorism may be used in their naturally occurring forms, or they can be deliberately modified to deliver greater impact. Among the approaches to maximizing the deleterious effects of biologic agents are the genetic modification of microbes for the purposes of antimicrobial resistance or evasion by the immune system, creation of fine-particle aerosols, chemical treatment to stabilize and prolong infectivity, and alteration of host range through changes in surface proteins. Certain of these approaches fall under the category of weaponization, which is a term generally used to describe the processing of microbes or toxins in a manner that would ensure a devastating effect following release. For example, weaponization of anthrax by the Soviets involved the production of vast numbers of spores of appropriate size to reach the lower respiratory tract easily in a form that maintained aerosolization for prolonged periods of time and that could be delivered in a massive release, such as via widely dispersed bomblets.

TABLE 261e-1 KEY FEATURES OF BIOLOGIC AGENTS USED AS BIOWEAPONS

Source: From L Borio et al: JAMA 287:2391, 2002; with permission.

The U.S. Centers for Disease Control and Prevention (CDC) classifies potential biologic threats into three categories: A, B, and C (Table 261e-2). Category A agents are the highest-priority pathogens. They pose the greatest risk to national security because they (1) can be easily disseminated or transmitted from person to person, (2) result in high mortality rates and have the potential for major public health impact, (3) might cause public panic and social disruption, and (4) require special action for public health preparedness. Category B agents are the second-highest-priority pathogens and include those that are moderately easy to disseminate, result in moderate morbidity rates and low mortality rates, and require specifically enhanced diagnostic capacity. Category C agents are the third-highest priority. These include certain emerging pathogens to which the general population lacks immunity; that could be engineered for mass dissemination in the future because of availability, ease of production, and ease of dissemination; and that have a major public health impact and the potential for high morbidity and mortality rates. These A, B, and C designations are empirical, however, and the priority rating of any given microbe or toxin could change with evolving circumstances such as intelligence-based threat assessments. The CDC classification system also largely reflects the severity of illness produced by a given agent, rather than its accessibility to potential terrorists.

TABLE 261e-2 CDC CATEGORY A, B, AND C AGENTS

Abbreviations: MERS, Middle East respiratory syndrome; SARS, severe acute respiratory syndrome.

Source: Centers for Disease Control and Prevention and the National Institute of Allergy and Infectious Diseases.

CATEGORY A AGENTS

ANTHRAX

See also Chap. 175.

Bacillus anthracis as a Bioweapon Anthrax may be the prototypic disease of bioterrorism. Although rarely, if ever, spread from person to person, the illness embodies the other major features of a disease introduced through terrorism, as outlined in Table 261e-1. U.S. and British government scientists studied anthrax as a potential biologic weapon beginning approximately at the time of World War II (WWII). Offensive bioweapons activity including bioweapons research on microbes and toxins in the United States ceased in 1969 as a result of two executive orders by President Richard M. Nixon. Although the 1972 Biological and Toxin Weapons Convention Treaty outlawed research of this type worldwide, the Soviet Union produced and stored tons of anthrax spores for potential use as a bioweapon until at least the late 1980s. At present, there is suspicion that research on anthrax as an agent of bioterrorism is ongoing by several nations and extremist groups. One example of this was the release of anthrax spores by the Aum Shinrikyo cult in Tokyo in 1993. Fortunately, there were no casualties associated with that episode because of the inadvertent use of a nonpathogenic strain of anthrax by the terrorists.

The potential impact of anthrax spores as a bioweapon was clearly demonstrated in 1979 following the accidental release of spores into the atmosphere from a Soviet Union bioweapons facility in Sverdlovsk, Russia. Although total figures are not known, at least 77 cases of anthrax were diagnosed with certainty, of which 66 were fatal. These victims were exposed in an area within 4 km downwind of the facility, and deaths due to anthrax were also noted in livestock up to 50 km further downwind. Based on recorded wind patterns, the interval between the time of exposure and development of clinical illness ranged from 2 to 43 days. The majority of cases were within the first 2 weeks. Death typically occurred within 1–4 days following the onset of symptoms. It is likely that the widespread use of postexposure penicillin prophylaxis limited the total number of cases. The extended period of time between exposure and disease in some individuals supports the data from nonhuman primate studies, suggesting that anthrax spores can lie dormant in the respiratory tract for at least 4–6 weeks without evoking an immune response. This extended period of microbiologic latency following exposure poses a significant challenge for management of victims in the postexposure period.

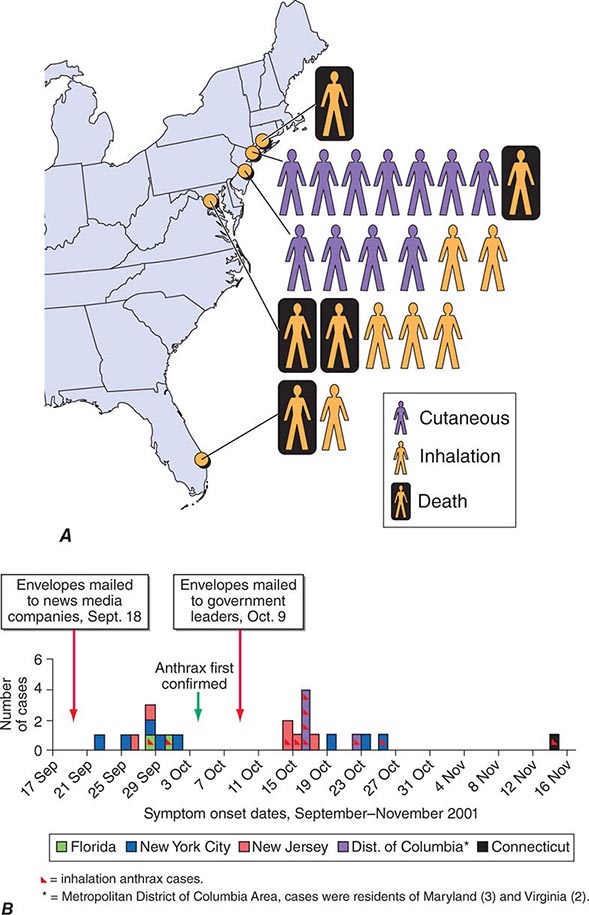

In September 2001, the American public was exposed to anthrax spores as a bioweapon delivered through the U.S. Postal Service by an employee of the United States Army Medical Research Institute of Infectious Diseases (USAMRIID) who had access to such materials and who committed suicide prior to being indicted for this crime. The CDC identified 22 confirmed or suspected cases of anthrax as a consequence of this attack. These included 11 patients with inhalational anthrax, of whom 5 died, and 11 patients with cutaneous anthrax (7 confirmed), all of whom survived (Fig. 261e-1). Cases occurred in individuals who opened contaminated letters as well as in postal workers involved in the processing of mail. A minimum of five letters mailed from Trenton, NJ, served as the vehicles for these attacks. One of these letters was reported to contain 2 g of material, equivalent to 100 billion to 1 trillion weapon-grade spores. Studies performed in the 1950s in monkeys exposed to aerosolized anthrax demonstrated that ~10,000 spores were required to produce lethal disease in 50% of exposed animals (the LD50). On that basis, the contents of one letter had the theoretical potential, under optimal conditions, of causing illness or death in up to 50 million individuals. The strain used in this attack was the Ames strain. Although it was noted to have an inducible β-lactamase and to constitutively express a cephalosporinase, it was susceptible to all antibiotics standard for B. anthracis.
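The 50 million figure can be reproduced with simple arithmetic. The sketch below is one plausible reading of the estimate, not a calculation given in the source: it assumes every spore is delivered in exact LD50-sized doses and that half of the people receiving such a dose die. The function name and the 0.5 fraction are illustrative assumptions.

```python
def theoretical_deaths(spores: float, ld50_spores: float = 10_000) -> float:
    """Upper-bound deaths if all spores were split into exact LD50 doses.

    Assumption (not from the source): each person inhales exactly one
    LD50 dose, and by definition half of those exposed die.
    """
    ld50_doses = spores / ld50_spores  # number of people who could receive one LD50 dose
    return ld50_doses * 0.5            # LD50: lethal to 50% of those exposed


# Spore range reported for one letter: 100 billion to 1 trillion
low = theoretical_deaths(1e11)
high = theoretical_deaths(1e12)
print(f"{low:,.0f} to {high:,.0f}")  # 5,000,000 to 50,000,000
```

Under these idealized assumptions the upper end of the spore range yields 50 million, which matches the figure quoted in the text. Real-world dispersal would fall far short of this bound.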

FIGURE 261e-1 Confirmed anthrax cases associated with bioterrorism: United States, 2001. A. Geographic location, clinical manifestation, and outcome of the 11 cases of confirmed inhalational and 11 cases of confirmed cutaneous anthrax. B. Epidemic curve for 22 cases of anthrax. (From DB Jernigan et al: Emerg Infect Dis 8:1019, 2002; with permission.)

Microbiology and Clinical Features Anthrax is caused by B. anthracis, a gram-positive, nonmotile, spore-forming rod that is found in soil and predominantly causes disease in herbivores such as cattle, goats, and sheep. Anthrax spores can remain viable for decades. The remarkable stability of these spores makes them an ideal bioweapon, and their destruction in decontamination activities can be a challenge. Naturally occurring human infection is generally the result of contact with anthrax-infected animals or animal products such as goat hair in textile mills or animal skins used in making drums. While an LD50 of 10,000 spores is a generally accepted number, it has also been suggested that as few as one to three spores may be adequate to cause disease in some settings. Advanced technology is likely to be necessary to generate a bioweapon containing spores of the optimal size (1–5 μm) to travel to the alveolar spaces.

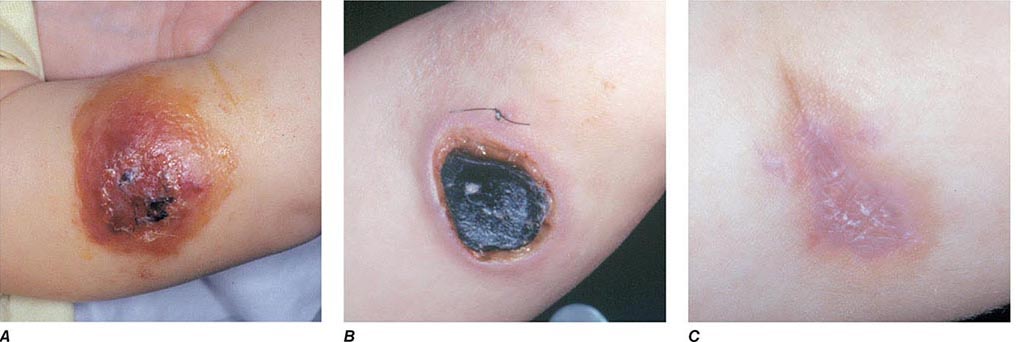

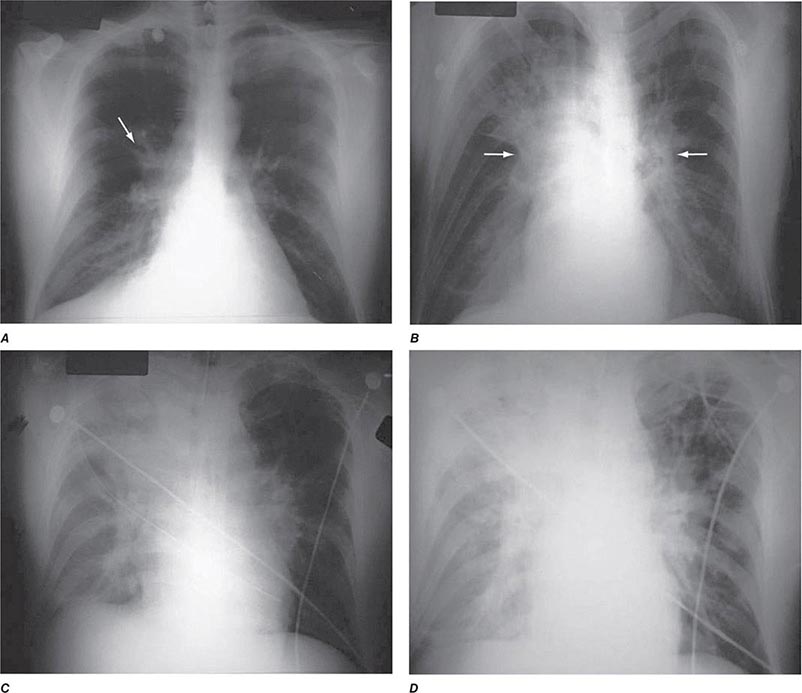

The three major clinical forms of anthrax are gastrointestinal, cutaneous, and inhalational. Gastrointestinal anthrax typically results from the ingestion of contaminated meat; the condition is rarely seen and is unlikely to be the result of a bioterrorism event. The lesion of cutaneous anthrax typically begins as a papule following the introduction of spores through an opening in the skin. This papule then evolves to a painless vesicle followed by the development of a coal-black, necrotic eschar (Fig. 261e-2). It is the Greek word for coal (anthrax) that gives the organism and the disease its name. Cutaneous anthrax was ~20% fatal prior to the availability of antibiotics. Inhalational anthrax is the form most likely to be responsible for death in the setting of a bioterrorist attack. It occurs following the inhalation of spores that become deposited in the alveolar spaces. These spores are phagocytized by macrophages and transported to the mediastinal and peribronchial lymph nodes where they germinate, leading to active bacterial growth and elaboration of the bacterial products edema toxin and lethal toxin. Subsequent hematogenous spread of bacteria is accompanied by cardiovascular collapse and death. The earliest symptoms are typically a viral-like prodrome with fever, malaise, and abdominal and/or chest symptoms that progress over the course of a few days to a moribund state. Characteristic findings on chest x-ray include mediastinal widening and pleural effusions (Fig. 261e-3). Although initially thought to be 100% fatal, the experiences at Sverdlovsk in 1979 and in the United States in 2001 (see below) indicate that with prompt initiation of antibiotic therapy, survival is possible. 
The characteristics of the 11 cases of inhalational anthrax diagnosed in the United States in 2001 following exposure to contaminated letters postmarked September 18 or October 9, 2001, followed the classic pattern established for this illness, with patients presenting with a rapidly progressive course characterized by fever, fatigue or malaise, nausea or vomiting, cough, and shortness of breath. At presentation, the total white blood cell counts were ~10,000 cells/μL; transaminases tended to be elevated, and all 11 had abnormal findings on chest x-ray and computed tomography (CT). Radiologic findings included infiltrates, mediastinal widening, and hemorrhagic pleural effusions. For cases in which the dates of exposure were known, symptoms appeared within 4–6 days. Death occurred within 7 days of diagnosis in the five fatal cases (overall mortality rate 45%). Rapid diagnosis and prompt initiation of antibiotic therapy were key to survival.

FIGURE 261e-2 Clinical manifestations of a pediatric case of cutaneous anthrax associated with the bioterrorism attack of 2001. The lesion progresses from vesicular on day 5 (A) to necrotic with the classic black eschar on day 12 (B) to a healed scar 2 months later (C). (Photographs provided by Dr. Mary Wu Chang. Part A reproduced with permission from KJ Roche et al: N Engl J Med 345:1611, 2001 and Parts B and C reproduced with permission from A Freedman et al: JAMA 287:869, 2002.)

FIGURE 261e-3 Progression of chest x-ray findings in a patient with inhalational anthrax. Findings evolved from subtle hilar prominence and right perihilar infiltrate to a progressively widened mediastinum, marked perihilar infiltrates, peribronchial cuffing, and air bronchograms. (From L Borio et al: JAMA 286:2554, 2001; with permission.)

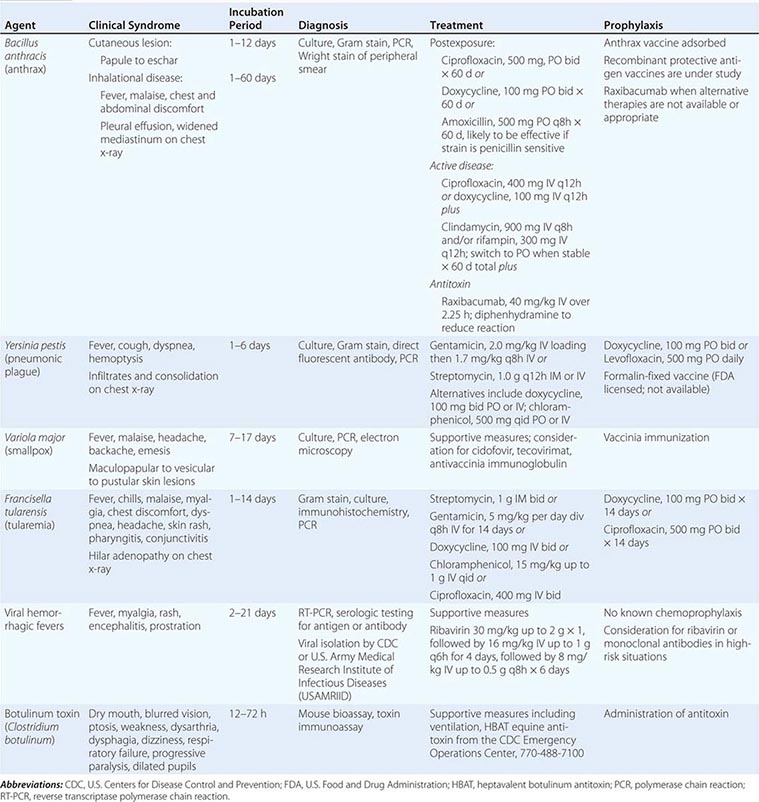

TABLE 261e-3 CLINICAL SYNDROMES, PREVENTION, AND TREATMENT STRATEGIES FOR DISEASES CAUSED BY CATEGORY A AGENTS

Vaccination and Prevention The first successful vaccine for anthrax was developed for animals by Louis Pasteur in 1881. At present, the single vaccine licensed for human use is prepared from the cell-free culture supernatant of an attenuated, nonencapsulated strain of B. anthracis (Sterne strain) and is referred to as anthrax vaccine adsorbed (AVA). Clinical trials for safety in humans and efficacy in animals are currently under way to evaluate the role of recombinant protective antigen as an alternative to AVA. In a postexposure setting in nonhuman primates, a 2-week course of AVA plus ciprofloxacin was found to be superior to ciprofloxacin alone in preventing the development of clinical disease and death. Although the current recommendation for postexposure prophylaxis is 60 days of antibiotics, it would seem prudent to include immunization with anthrax vaccine if available. Given the potential for B. anthracis to be engineered to express penicillin resistance, the empirical regimen of choice in this setting is either ciprofloxacin or doxycycline. In settings where these approaches are not available or appropriate, one can administer the antitoxin monoclonal antibody raxibacumab.

PLAGUE

See also Chap. 196.

Yersinia pestis as a Bioweapon Although plague lacks the environmental stability of anthrax, its highly contagious nature and high mortality rate make it a close-to-ideal agent of bioterrorism, particularly if delivered in a weaponized form. Occupying a unique place in history, plague has been alleged to have been used as a biologic weapon for centuries. The catapulting of plague-infected corpses into besieged fortresses is a practice that was first noted in 1346 during the assault of the Crimean city of Kaffa by the Mongolian Tartars. Although this practice is unlikely to have resulted in disease transmission, some believe that the event may have played a role in the start of the Black Death pandemic of the fourteenth and fifteenth centuries in Europe. Given that plague was already moving across Asia toward Europe at this time, it is unclear whether such an allegation is accurate. During WWII, the infamous Unit 731 of the Japanese army was reported to have repeatedly dropped plague-infested fleas over parts of China, including Manchuria. These drops were associated with subsequent outbreaks of plague in the targeted areas. Following WWII, the United States and the Soviet Union conducted programs of research on how to create aerosolized Y. pestis that could be used as a bioweapon to cause primary pneumonic plague. As mentioned above, plague was thought to be an excellent bioweapon because, in addition to causing infection in those inhaling the aerosol, it would likely produce significant numbers of secondary cases of primary pneumonic plague owing to the contagious nature of the disease and person-to-person transmission via respiratory aerosol. Secondary reports of research conducted during that time suggest that organisms remain viable for up to 1 h and can be dispersed for distances up to 10 km. 
Although the offensive bioweapons program in the United States was terminated prior to production of sufficient quantities of plague organisms for use as a weapon, it is believed that Soviet scientists did manufacture quantities sufficient for such a purpose. It has also been reported that more than 10 Soviet institutes and >1000 scientists were working with plague as a biologic weapon. Of concern is the fact that in 1995 a microbiologist in Ohio was arrested for having obtained Y. pestis in the mail from the American Type Culture Collection, using a credit card and a false letterhead. In the wake of this incident, the U.S. Congress passed a law in 1997 requiring that anyone intending to send or receive any of 42 different agents that could potentially be used as bioweapons first register with the CDC.

Microbiology and Clinical Features Plague is caused by Y. pestis, a nonmotile, gram-negative bacillus that exhibits bipolar, or “safety pin,” staining with Wright, Giemsa, or Wayson stains. It has had a major impact on the course of history, thus adding to the element of fear evoked by its mention. The earliest reported plague epidemic was in 224 B.C. in China. The most infamous pandemic began in Europe in the fourteenth century, during which time one-third to one-half of the entire population of Europe was killed. During a plague outbreak in India in 1994, even though the number of confirmed cases was relatively small, it is estimated that 500,000 individuals fled their homes in fear of this disease. In the first decade of the twenty-first century, 21,725 cases of plague were reported to the World Health Organization (WHO). Over 90% of these cases were from Africa, and the overall case fatality rate was 7.4%.

The clinical syndromes of plague generally reflect the mode of infection. Bubonic plague is the consequence of an insect bite; primary pneumonic plague arises through the inhalation of bacteria. Most of the plague seen in the world today is bubonic plague and is the result of a bite by a plague-infected flea. In part as a consequence of past pandemics, plague infection of rodents exists widely in nature, including in the southwestern United States, and each year thousands of cases of plague occur worldwide through contact with infected animals or fleas. Following inoculation of regurgitated bacteria into the skin by a flea bite, organisms travel through the lymphatics to regional lymph nodes, where they are phagocytized but not destroyed. Inside the cell, they multiply rapidly leading to inflammation, painful lymphadenopathy with necrosis, fever, bacteremia, septicemia, and death. The characteristic enlarged, inflamed lymph nodes, or buboes, give this form of plague its name. In some instances, patients may develop bacteremia without lymphadenopathy following infection, a condition referred to as primary septicemic plague. Extensive ecchymoses may develop due to disseminated intravascular coagulation, and gangrene of the digits and/or nose may develop in patients with advanced septicemic plague. It is thought that this appearance of some patients gave rise to the term Black Death in reference to the plague epidemic of the fourteenth and fifteenth centuries. Some patients may develop pneumonia (secondary pneumonic plague) as a complication of bubonic or septicemic plague. These patients may then transmit the agent to others via the respiratory route, causing cases of primary pneumonic plague. Primary pneumonic plague is the manifestation most likely to occur as the result of a bioterrorist attack, with an aerosol of bacteria spread over a wide area or a particular environment that is densely populated. 
In this setting, patients would be expected to develop fever, cough with hemoptysis, dyspnea, and gastrointestinal symptoms 1–6 days following exposure. Clinical features of pneumonia would be accompanied by pulmonary infiltrates and consolidation on chest x-ray. In the absence of antibiotics, the mortality rate of this form of plague is on the order of 85%, and death usually occurs within 2–6 days.

Vaccination and Prevention A formalin-fixed, whole-organism vaccine was licensed by the FDA for the prevention of plague. That vaccine is no longer being manufactured, but its potential value as a current countermeasure against bioterrorism would likely have been modest at best as it was ineffective against animal models of primary pneumonic plague. Efforts are under way to develop a second generation of vaccines that will protect against aerosol challenge. Among the candidates being tested are recombinant forms of the fraction 1 capsular (F1) antigen and the virulence component of the type III secretion apparatus (V) antigen of Y. pestis. It is likely that doxycycline or levofloxacin would provide coverage in a chemoprophylaxis setting. Unlike the case with anthrax, in which one has to be concerned about the persistence of ungerminated spores in the respiratory tract, the duration of prophylaxis against plague need only extend to 7 days following exposure.

SMALLPOX

See also Chap. 220e.

Variola Virus as a Bioweapon Given that most of the world’s population was once vaccinated against smallpox, variola virus would not have been considered a good candidate as a bioweapon 30 years ago. However, with the cessation of immunization programs in the United States in 1972 and throughout the world in 1980 due to the successful global eradication of smallpox, close to 50% of the U.S. population is fully susceptible to smallpox today. Given its infectious nature and the 10–30% mortality rate in unimmunized individuals, the deliberate spread of this virus could have a devastating effect on our society and unleash a previously conquered deadly disease. It is estimated that an initial infection of 50–100 persons in a first generation of cases could expand by a factor of 10–20 with each succeeding generation in the absence of any effective containment measures. Although the likely implementation of an effective public health response makes this scenario unlikely, it does illustrate the potential damage and disruption that can result from a smallpox outbreak.
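The generational expansion described above is a geometric progression and can be sketched in a few lines. The sketch assumes a fixed multiplication factor per generation and no containment; the function name and the choice of four generations are illustrative assumptions, not figures from the source.

```python
def cases_by_generation(initial_cases: int, factor: int, generations: int) -> list[int]:
    """New cases in each generation, assuming uncontained geometric spread.

    Generation 0 is the initially infected group; each later generation
    is `factor` times the size of the one before it.
    """
    return [initial_cases * factor ** g for g in range(generations)]


# Low end of the stated ranges: 50 initial cases, 10-fold expansion
print(cases_by_generation(50, 10, 4))    # [50, 500, 5000, 50000]
# High end of the stated ranges: 100 initial cases, 20-fold expansion
print(cases_by_generation(100, 20, 4))   # [100, 2000, 40000, 800000]
```

Even at the low end of the stated ranges, uncontained spread reaches tens of thousands of new cases by the fourth generation, which is why early recognition and containment dominate the public health response.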

In 1980, the WHO recommended that all immunization programs be terminated; that representative samples of variola virus be transferred to two locations, one at the CDC in Atlanta, GA, in the United States and the other at the Institute of Virus Preparations in the Soviet Union; and that all other stocks of smallpox be destroyed. Several years later, it was recommended that these two authorized collections be destroyed. However, these latter recommendations were placed on hold in the wake of increased concerns on the use of variola virus as a biologic weapon and thus the need to maintain an active program of defensive research. Many of these concerns were based on allegations made by former Soviet officials that extensive programs had been in place in that country for the production and weaponization of large quantities of smallpox virus. The dismantling of these programs with the fall of the Soviet Union and the subsequent weakening of security measures led to fears that stocks of Variola major may have made their way to other countries or terrorist organizations. In addition, accounts that efforts had been taken to produce recombinant strains of Variola that would be more virulent and more contagious than the wild-type virus have led to an increase in the need to be vigilant for the reemergence of this often fatal infectious disease.

Microbiology and Clinical Features Smallpox is caused by one of two variants of variola virus, V. major and V. minor. Variola is a double-strand DNA virus and member of the Orthopoxvirus genus of the Poxviridae family. Infections with V. minor are generally less severe than those of V. major, with milder constitutional symptoms and lower mortality rates; thus V. major is the only one considered to be a viable bioweapon. Infection with V. major typically occurs following contact with an infected person. Patients are infectious from the time that a maculopapular rash appears on the skin and oropharynx through the resolution and scabbing of the pustular lesions. Infection occurs principally during close contact, through the inhalation of saliva droplets containing virus from the oropharyngeal exanthem. Aerosolized material from contaminated clothing or linen can also spread infection. Several days after exposure, a primary viremia is believed to occur that results in dissemination of virus to lymphoid tissues. A secondary viremia occurs ~4 days later that leads to localization of infection in the dermis. Approximately 12–14 days following the initial exposure, the patient develops high fever, malaise, vomiting, headache, backache, and a maculopapular rash that begins on the face and extremities and spreads to the trunk (centripetal) with lesions in the same developmental stage in any given location. This is in contrast to the rash of varicella (chickenpox) that begins on the trunk and face and spreads to the extremities (centrifugal) with lesions at all stages of development. The lesions are initially maculopapular and evolve to vesicles that eventually become pustules and then scabs. The oral mucosa also develops maculopapular lesions that evolve to ulcers. The lesions appear over a period of 1–2 days and evolve at the same rate. 
Although virus can be isolated from the scabs on the skin, the conventional thinking is that once the scabs have formed the patient is no longer contagious. Smallpox is associated with 10–30% mortality rates, with patients typically dying of severe systemic illness during the second week of symptoms. Historically, ~5–10% of naturally occurring smallpox cases take either of two highly virulent atypical forms, classified as hemorrhagic and malignant. These are difficult to diagnose because of their atypical presentations. The hemorrhagic form is uniformly fatal and begins with the relatively abrupt onset of a severely prostrating illness characterized by high fevers and severe headache and back and abdominal pain. This form of the illness resembles a severe systemic inflammatory syndrome, in which patients have a high viremia but die without developing the characteristic rash. Cutaneous erythema develops accompanied by petechiae and hemorrhages into the skin and mucous membranes. Death usually occurs within 5–6 days. The malignant, or “flat,” form of smallpox is frequently fatal and has an onset similar to the hemorrhagic form, but with confluent skin lesions developing more slowly and never progressing to the pustular stage.

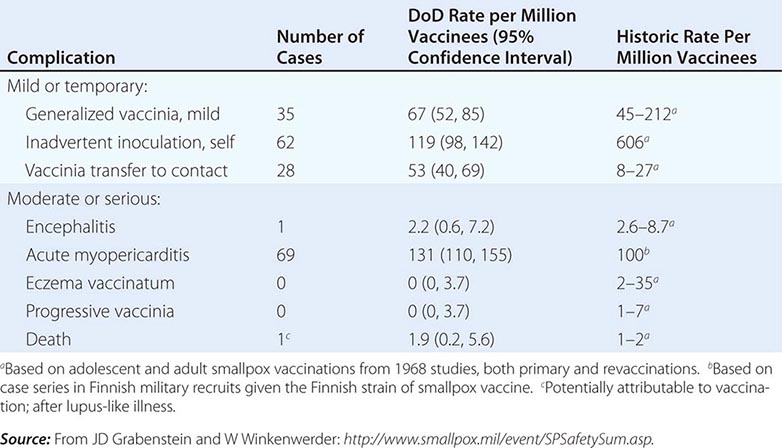

Vaccination and Prevention In 1796, Edward Jenner demonstrated that deliberate infection with cowpox virus could prevent illness on subsequent exposure to smallpox. Today, smallpox is a preventable disease following immunization with vaccinia. The current dilemma facing our society regarding assessment of the risk and benefit of smallpox vaccination is that the degree of risk that someone will deliberately and effectively release smallpox into our society is unknown. Given that there are well-described risks associated with vaccination, the degree of risk/benefit for the general population does not favor immunization. As a prudent first step in preparedness for a smallpox attack, however, members of the U.S. armed services received primary or booster immunizations with vaccinia before 1990 and after 2002. In addition, a number of civilian health care workers who comprise smallpox-response teams at the state and local public health level have been vaccinated.

Initial fears about immunizing a segment of the American population with vaccinia, at a time when more individuals than ever before are receiving immunosuppressive drugs or are otherwise immunocompromised, were dispelled by the data generated from the military and civilian immunization campaigns of 2002–2004. Adverse event rates for the first 450,000 immunizations were similar to and, in certain categories of adverse events, even lower than those from prior historic data, in which most severe sequelae of vaccination occurred in young infants (Table 261e-4). In addition, 11 patients with early-stage HIV infection were inadvertently immunized without problem. One significant concern during that immunization campaign, however, was the description of a syndrome of myopericarditis, which had not been appreciated during prior immunization campaigns with vaccinia. In an effort to provide a safer vaccine to protect against smallpox, ACAM 2000, a cloned virus propagated in tissue culture, was developed and became the first second-generation smallpox vaccine to be licensed. This vaccine is now the only vaccinia product licensed in the United States and has been used by the U.S. military since 2008. It is part of the U.S. government stockpile. Research continues on attenuated forms of vaccinia such as modified vaccinia Ankara (MVA). Vaccinia immune globulin is available to treat those who experience a severe reaction to immunization with vaccinia.

TABLE 261e-4 COMPLICATIONS FROM 438,134 ADMINISTRATIONS OF VACCINIA DURING THE U.S. DEPARTMENT OF DEFENSE (DOD) SMALLPOX IMMUNIZATION CAMPAIGN INITIATED IN DECEMBER 2002

TULAREMIA

See also Chap. 195.

Francisella tularensis as a Bioweapon Tularemia has been studied as an agent of bioterrorism since the mid-twentieth century. It has been speculated by some that the outbreak of tularemia among German and Soviet soldiers during fighting on the Eastern Front during WWII was the consequence of a deliberate release. Unit 731 of the Japanese army studied the use of tularemia as a bioweapon during WWII. Large preparations were made for mass production of F. tularensis by the United States, but no stockpiling of any agent took place. Stocks of F. tularensis were reportedly generated by the Soviet Union in the mid-1950s. It has also been suggested that the Soviet program extended into the era of molecular biology and that some strains were engineered to be resistant to common antibiotics. F. tularensis is an extremely infectious organism, and human infections have occurred from merely examining an uncovered petri dish streaked with colonies. Given these facts, it is reasonable to conclude that this organism might be used as a bioweapon through either an aerosol or contamination of food or drinking water.

Microbiology and Clinical Features Although similar in many ways to anthrax and plague, tularemia, also referred to as rabbit fever or deer fly fever, is neither as lethal nor as fulminant as either of these other two category A bacterial infections. It is, however, extremely infectious, and as few as 10 organisms can lead to establishment of infection. Despite this fact, it is not spread from person to person. Tularemia is caused by F. tularensis, a small, nonmotile, gram-negative coccobacillus. Although it is not a spore-forming organism, it is a hardy bacterium that can survive for weeks in the environment. Infection typically comes from insect bites or contact with organisms in the environment. Infections have occurred in laboratory workers studying the agent. Large waterborne outbreaks have been recorded. It is most likely that the outbreak among German and Russian soldiers and Russian civilians noted above during WWII represented a large waterborne tularemia outbreak in a tularemia-enzootic area devastated by warfare.

Humans can become infected through a variety of environmental sources. Infection is most common in rural areas where a variety of small mammals may serve as reservoirs. Human infections in the summer are often the result of insect bites from ticks, flies, or mosquitoes that have bitten infected animals. In colder months, infections are most likely the result of direct contact with infected mammals and are most common in hunters. In these settings, infection typically presents as a systemic illness with an area of inflammation and necrosis at the site of tissue entry. Drinking of contaminated water may lead to an oropharyngeal form of tularemia characterized by pharyngitis with cervical and/or retropharyngeal lymphadenopathy (Chap. 195). The most likely mode of dissemination of tularemia as a biologic weapon would be as an aerosol, as has occurred in a number of natural outbreaks in rural areas, including Martha’s Vineyard in the United States. Approximately 1–14 days following exposure by this route, one would expect to see inflammation of the airways with pharyngitis, pleuritis, and bronchopneumonia. Typical symptoms would include the abrupt onset of fever, fatigue, chills, headache, and malaise (Table 261e-3). Some patients might experience conjunctivitis with ulceration, pharyngitis, and/or cutaneous exanthems. A pulse-temperature dissociation might be present. Approximately 50% of patients would show a pulmonary infiltrate on chest x-ray. Hilar adenopathy might also be present, and a small percentage of patients could have adenopathy without infiltrates. The highly variable presentation makes acute recognition of aerosol-disseminated tularemia very difficult. The diagnosis would likely be made by immunohistochemistry, molecular techniques, or culture of infected tissues or blood. Untreated, mortality rates range from 5 to 15% for cutaneous routes of infection and from 30 to 60% for infection by inhalation. 
Since the advent of antibiotic therapy, these rates have dropped to <2%.

Vaccination and Prevention There are no vaccines currently licensed for the prevention of tularemia. Although a live, attenuated strain of the organism has been used in the past with some reported success, there are inadequate data to support its widespread use at this time. Development of a vaccine for this agent is an important part of the current biodefense research agenda. In the absence of an effective vaccine, postexposure chemoprophylaxis with either doxycycline or ciprofloxacin appears to be a reasonable approach (Table 261e-3).

VIRAL HEMORRHAGIC FEVERS

See also Chaps. 233 and 234.

Hemorrhagic Fever Viruses as Bioweapons Several of the hemorrhagic fever viruses have been reported to have been weaponized by the Soviet Union and the United States. Nonhuman primate studies indicate that infection can be established with very few virions and that infectious aerosol preparations can be produced. Under the guise of wanting to aid victims of an Ebola outbreak, members of the Aum Shinrikyo cult in Japan were reported to have traveled to central Africa in 1992 in an attempt to obtain Ebola virus for use in a bioterrorist attack. Thus, although there has been no evidence that these agents have ever been used in a biologic attack, there is clear interest in their potential for this purpose.

Microbiology and Clinical Features The viral hemorrhagic fevers are a group of illnesses caused by any one of a number of similar viruses (Table 261e-2). These viruses are all enveloped, single-strand RNA viruses that are thought to depend on a host reservoir for long-term survival. Although rodents or insects have been identified as the hosts for some of these viruses, for others the hosts are unknown. These viruses tend to be geographically restricted according to the migration patterns of their hosts. Great apes are not a natural reservoir for Ebola virus, but large numbers of these animals in sub-Saharan Africa have died from Ebola infection over the past decade. Humans can become infected with hemorrhagic fever viruses if they come into contact with an infected host or other infected animals. Person-to-person transmission, largely through direct contact with virus-containing body fluids, has been documented for Ebola, Marburg, and Lassa viruses and rarely for the New World arenaviruses. Although there is no clear evidence of respiratory spread among humans, these viruses have been shown in animal models to be highly infectious by the aerosol route. This, coupled with mortality rates as high as 90%, makes them excellent candidate agents of bioterrorism.

The clinical features of the viral hemorrhagic fevers vary depending on the particular agent (Table 261e-3). Initial signs and symptoms typically include fever, myalgia, prostration, and disseminated intravascular coagulation with thrombocytopenia and capillary hemorrhage. These findings are consistent with a cytokine-mediated systemic inflammatory syndrome. A variety of different maculopapular or erythematous rashes may be seen. Leukopenia, temperature-pulse dissociation, renal failure, and seizures may also be part of the clinical presentation.

Outbreaks of most of these diseases are sporadic and unpredictable. As a consequence, most studies of pathogenesis have been performed using laboratory animals. The diagnosis should be suspected in anyone with temperature >38.3°C for <3 weeks who also exhibits at least two of the following: hemorrhagic or purpuric rash, epistaxis, hematemesis, hemoptysis, or hematochezia in the absence of any other identifiable cause. In this setting, samples of blood should be sent after consultation to the CDC or the USAMRIID for serologic testing for antigen and antibody as well as reverse transcriptase polymerase chain reaction (RT-PCR) testing for hemorrhagic fever viruses. All samples should be handled with double-bagging. Given how little is known regarding the human-to-human transmission of these viruses, appropriate isolation measures would include full barrier precautions with negative-pressure rooms and use of powered air-purifying respirators (PAPRs). Unprotected skin contact with cadavers has been implicated in the transmission of certain hemorrhagic fever viruses such as Ebola, so it is recommended that autopsies of suspected cases be performed using the strictest measures for protection and that burial or cremation be performed promptly without embalming.
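
The suspicion criteria above can be restated as a short screening check. The following is only an illustrative sketch in Python: the names `Presentation`, `HEMORRHAGIC_SIGNS`, and `meets_vhf_suspicion_criteria` are hypothetical, "<3 weeks" is assumed to mean fewer than 21 days, and the function is not a validated clinical tool or a substitute for consultation with public health authorities.

```python
from dataclasses import dataclass, field

# Hemorrhagic manifestations listed in the text; at least two are required.
HEMORRHAGIC_SIGNS = frozenset({
    "hemorrhagic_or_purpuric_rash",
    "epistaxis",
    "hematemesis",
    "hemoptysis",
    "hematochezia",
})


@dataclass
class Presentation:
    """Hypothetical container for the findings named in the text."""
    max_temp_c: float
    fever_duration_days: float
    signs: frozenset = field(default_factory=frozenset)
    other_cause_identified: bool = False


def meets_vhf_suspicion_criteria(p: Presentation) -> bool:
    """Return True when the presentation matches the criteria in the text."""
    # Temperature >38.3 C for <3 weeks (assumed here to mean <21 days).
    fever_ok = p.max_temp_c > 38.3 and p.fever_duration_days < 21
    # Count only the manifestations that appear in the listed set.
    sign_count = len(HEMORRHAGIC_SIGNS & set(p.signs))
    return fever_ok and sign_count >= 2 and not p.other_cause_identified


if __name__ == "__main__":
    case = Presentation(39.1, 4, frozenset({"epistaxis", "hematemesis"}))
    print(meets_vhf_suspicion_criteria(case))
```

A positive result in this sketch corresponds only to the point at which the text recommends consultation and testing; it does not establish a diagnosis.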

Vaccination and Prevention There are no licensed and effective vaccines for these agents. Studies are currently under way examining the potential role of DNA, recombinant viruses, and attenuated viruses as vaccines for several of these infections. Among the most promising at present are vaccines for Argentine, Ebola, Rift Valley, and Kyasanur Forest viruses. A series of monoclonal antibodies directed against the envelope glycoproteins of Ebola have demonstrated protection against infection in a postexposure setting in nonhuman primates and are being further developed for human use.

BOTULINUM TOXIN (CLOSTRIDIUM BOTULINUM)

See also Chap. 178.

Botulinum Toxin as a Bioweapon In a bioterrorist attack, botulinum toxin would likely be dispersed as an aerosol or as contamination of a food supply. Although contamination of a water supply is possible, it is likely that any toxin would be rapidly inactivated by the chlorine used to purify drinking water. Similarly, toxin can be inactivated by heating any food to >85°C for >5 min. Without external facilitation, the environmental decay rate is estimated at 1% per minute, and thus the time interval between weapon release and ingestion or inhalation needs to be rather short. The Japanese biologic warfare group, Unit 731, is reported to have conducted experiments on botulism poisoning in prisoners in the 1930s. The United States and the Soviet Union both acknowledged producing botulinum toxin, and there is some evidence that the Soviet Union attempted to create recombinant bacteria containing the gene for botulinum toxin. In records submitted to the United Nations, Iraq admitted to having produced 19,000 L of concentrated toxin—enough toxin to kill the entire population of the world three times over. By many accounts, botulinum toxin was the primary focus of the pre-1991 Iraqi bioweapons program. In addition to these examples of state-supported research into the use of botulinum toxin as a bioweapon, the Aum Shinrikyo cult unsuccessfully attempted on at least three occasions to disperse botulinum toxin into the civilian population of Tokyo.
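
To make the decay figure concrete, the arithmetic can be sketched as follows. This is a rough illustration in Python that assumes the quoted 1% per minute compounds each minute and stays constant over time; the text gives only the rate, so the compounding model and the function names `fraction_remaining` and `minutes_until_fraction` are assumptions.

```python
import math

# From the text: environmental decay estimated at 1% per minute.
DECAY_PER_MINUTE = 0.01


def fraction_remaining(minutes: float, rate: float = DECAY_PER_MINUTE) -> float:
    """Fraction of active toxin left after `minutes`, assuming compounding decay."""
    if minutes < 0:
        raise ValueError("minutes must be non-negative")
    return (1.0 - rate) ** minutes


def minutes_until_fraction(target: float, rate: float = DECAY_PER_MINUTE) -> float:
    """Minutes needed for the active fraction to fall to `target` (0 < target <= 1)."""
    if not 0.0 < target <= 1.0:
        raise ValueError("target must be in (0, 1]")
    return math.log(target) / math.log(1.0 - rate)


if __name__ == "__main__":
    print(f"Remaining after 60 min: {fraction_remaining(60):.3f}")
    print(f"Minutes to 50% remaining: {minutes_until_fraction(0.5):.1f}")
```

Under these assumptions, a little over half of the toxin remains active after an hour and about half is lost in roughly 69 min, which is consistent with the statement that the interval between release and exposure needs to be short.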

Microbiology and Clinical Features Unique among the category A agents for not being a live microorganism, botulinum toxin is one of the most potent toxins ever described and is thought by some to be the most poisonous substance in existence. It is estimated that 1 g of botulinum toxin would be sufficient to kill 1 million individuals if adequately dispersed. Botulinum toxin is produced by the gram-positive, spore-forming anaerobe C. botulinum (Chap. 178). Its natural habitat is soil. There are seven antigenically distinct forms of botulinum toxin, designated A–G. The majority of naturally occurring human cases are of types A, B, and E. Antitoxin directed toward one of these will have little to no activity against the others. The toxin is a 150-kDa zinc-containing protease that prevents the intracellular fusion of acetylcholine vesicles with the motor neuron membrane, thus preventing the release of acetylcholine. In the absence of acetylcholine-dependent triggering of muscle fibers, a flaccid paralysis develops. Although botulism does not spread from person to person, the ease of production of the toxin coupled with its high morbidity and 60–100% mortality make it a close to ideal bioweapon.

Botulism can result from the growth of C. botulinum in a wound or the intestine, the ingestion of contaminated food, or the inhalation of aerosolized toxin. The latter two forms are the most likely modes of transmission for bioterrorism. Once toxin is absorbed into the bloodstream, it binds to the neuronal cell membrane, enters the cell, and cleaves one of the proteins required for the intracellular binding of the synaptic vesicle to the cell membrane, thus preventing release of the neurotransmitter to the membrane of the adjacent muscle cell. Patients initially develop multiple cranial nerve palsies that are followed by a descending flaccid paralysis. The extent of the neuromuscular compromise is dependent on the level of toxemia. The majority of patients experience diplopia, dysphagia, dysarthria, dry mouth, ptosis, dilated pupils, fatigue, and extremity weakness. There are minimal true central nervous system effects, and patients rarely show significant alterations in mental status. Severe cases can involve complete muscular collapse, loss of the gag reflex, and respiratory failure. Recovery requires the regeneration of new motor neuron synapses with the muscle cell, a process that can take weeks to months. In the absence of secondary infections, which may be common during the protracted recovery phase of this illness, patients remain afebrile. The diagnosis is suspected on clinical grounds and confirmed by a mouse bioassay or toxin immunoassay.

Vaccination and Prevention A botulinum toxoid preparation has been used as a vaccine for laboratory workers at high risk of exposure and in certain military situations; however, it is not currently available in quantities that could be used for the general population. At present, early recognition of the clinical syndrome and use of appropriate equine antitoxin is the mainstay of prevention of full-blown disease in exposed individuals. The development of human monoclonal antibodies as a replacement for equine antitoxin antibodies is an area of active research interest.

CATEGORY B AND C AGENTS

The category B agents include those that are easy or moderately easy to disseminate and result in moderate morbidity and low mortality rates. A listing of the current category B agents is provided in Table 261e-2. As can be seen, it includes a wide array of microorganisms and products of microorganisms. Several of these agents have been used in bioterrorist attacks, although never with the impact of the category A agents described above. Among the more notorious of these was the contamination of salad bars in Oregon in 1984 with Salmonella typhimurium by the religious cult Rajneeshee. In this outbreak, which many consider to be the first bioterrorist attack against U.S. citizens, >750 individuals were poisoned and 40 were hospitalized in an effort to influence a local election. The intentional nature of this outbreak went unrecognized for more than a decade.

Category C agents are the third highest priority agents in the biodefense agenda. These agents include emerging pathogens to which little or no immunity exists in the general population, such as the severe acute respiratory syndrome (SARS) or Middle East respiratory syndrome (MERS) coronavirus or pandemic-potential strains of influenza that could be obtained from nature and deliberately disseminated. These agents are characterized as being relatively easy to produce and disseminate, having high morbidity and mortality rates, and having a significant public health impact. There is no running list of category C agents at the present time.

PREVENTION AND PREPAREDNESS

As noted above, a large number and diverse array of agents have the potential to be used in a bioterrorist attack. In contrast to the military situation with biowarfare, where the primary objective is to inflict mass casualties on a healthy and prepared militia, the objectives of bioterrorism are to harm civilians as well as to create fear and disruption among the civilian population. Although the military need only to prepare their troops to deal with the limited number of agents that pose a legitimate threat of biowarfare, the public health system needs to prepare the entire civilian population to deal with the multitude of agents and settings that could be used in a bioterrorism attack. This includes anticipating issues specific to the very young and the very old, the pregnant patient, and the immunocompromised individual. The challenges in this regard are enormous and immediate. Whereas military preparedness emphasizes vaccines toward a limited number of agents, civilian preparedness needs to rely on rapid diagnosis and treatment of a wide array of conditions.

The medical profession must maintain a high index of suspicion that unusual clinical presentations or the clustering of cases of a rare disease may not be a chance occurrence but rather the first sign of a bioterrorist event. This is particularly true when such diseases occur in traditionally healthy populations, when surprisingly large numbers of rare conditions occur, and when diseases commonly seen in rural settings appear in urban populations. Given the importance of rapid diagnosis and early treatment for many of these conditions, it is essential that the medical care team report any suspected cases of bioterrorism immediately to local and state health authorities and/or to the CDC (888-246-2675). Enhancements made to the U.S. public health surveillance network after the anthrax attacks of 2001 have greatly facilitated the rapid sharing of information among public health agencies.

A series of efforts are in place to ensure the biomedical security of the civilian population of the United States. The Public Health Service has a more highly trained, fully deployable force. The Strategic National Stockpile (SNS) maintained by the CDC provides rapid access to quantities of pharmaceuticals, antidotes, vaccines, and other medical supplies that may be of value in the event of biologic or chemical terrorism. The SNS has two basic components. The first of these consists of “push packages” that can be deployed anywhere in the United States within 12 h. These push packages are a preassembled set of supplies, pharmaceuticals, and medical equipment ready for immediate delivery to the field. Given that an actual threat may not have been precisely identified at the time of stockpile deployment, they provide treatment for a variety of conditions. The contents of the push packs are constantly updated to ensure that they reflect current needs as determined by national security risk assessments; they include antibiotics for treatment of anthrax, plague, and tularemia as well as a cache of vaccine to deal with a smallpox threat. The second component of the SNS comprises inventories managed by specific vendors and consists of the provision of additional pharmaceuticals, supplies, and/or products tailored to the specific attack.

The number of FDA-approved and licensed drugs and vaccines for category A and B agents is currently limited and not reflective of the pharmacy of today. In an effort to speed the licensure of additional drugs and vaccines for these diseases, the FDA has a rule for the licensure of such countermeasures against agents of bioterrorism when adequate and well-controlled clinical efficacy studies cannot be ethically conducted in humans. This is commonly referred to as the “Animal Rule.” Thus, for indications in which field trials of prophylaxis or therapy for a naturally occurring disease are not feasible, the FDA will rely on evidence solely from laboratory animal studies. For this rule to apply, it must be shown that (1) there are reasonably well-understood pathophysiologic mechanisms for the condition and its treatment; (2) the effect of the intervention is independently substantiated in at least two animal species, including species expected to react with a response predictive for humans; (3) the animal study endpoint is clearly related to the desired benefit in humans; and (4) the data in animals allow selection of an effective dose in humans. As noted above, levofloxacin for treatment of plague and raxibacumab for treatment of inhalational anthrax have been licensed via this mechanism.
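
The four conditions listed above can be read as a checklist. The sketch below, in Python, simply encodes them as stated in this chapter; the class `AnimalRuleEvidence` and the function `unmet_criteria` are hypothetical names introduced for illustration and do not represent any FDA procedure or software.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class AnimalRuleEvidence:
    """Hypothetical summary of an evidence package, one field per listed condition."""
    mechanism_well_understood: bool          # condition (1)
    species_with_substantiated_effect: int   # condition (2): number of species
    includes_predictive_species: bool        # condition (2): response predictive for humans
    endpoint_related_to_human_benefit: bool  # condition (3)
    supports_human_dose_selection: bool      # condition (4)


def unmet_criteria(e: AnimalRuleEvidence) -> List[int]:
    """Return the numbers (1-4) of the conditions that are not satisfied."""
    unmet = []
    if not e.mechanism_well_understood:
        unmet.append(1)
    # The text requires at least two species, including a predictive one.
    if e.species_with_substantiated_effect < 2 or not e.includes_predictive_species:
        unmet.append(2)
    if not e.endpoint_related_to_human_benefit:
        unmet.append(3)
    if not e.supports_human_dose_selection:
        unmet.append(4)
    return unmet


if __name__ == "__main__":
    package = AnimalRuleEvidence(True, 1, True, True, True)
    print(unmet_criteria(package))
```

An empty list means that all four conditions, as summarized here, are met; in the usage example only one species is available, so the second condition is reported as unmet.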

Finally, the Biomedical Advanced Research and Development Authority (BARDA) was established in 2006 within the U.S. Department of Health and Human Services to provide an integrated, systematic approach to the development and purchase of the necessary vaccines, drugs, therapies, and diagnostic tools for public health medical emergencies. As authorized by the All-Hazards Preparedness Reauthorization Act of 2013 in conjunction with the Project BioShield Act of 2004 and the Pandemic and All Hazards Act of 2006, BARDA manages a series of initiatives designed to facilitate biodefense research within the federal government, create a stable source of funding for the purchase of countermeasures against agents of bioterrorism, and create a category of “emergency use authorization” to allow the FDA to approve the use of unlicensed countermeasures during times of extraordinary unmet needs, as might be present in the context of a bioterrorist attack.

Although the prospect of a deliberate attack on civilians with disease-producing agents may seem to be an act of incomprehensible evil, history shows us that it is something that has been done in the past and will likely be done again in the future. It is the responsibility of health care providers to be aware of this possibility, to be able to recognize early signs of a potential bioterrorist attack and alert the public health system, and to respond quickly to provide care to the individual patient. Among the websites with current information on microbial bioterrorism are www.bt.cdc.gov, www.niaid.nih.gov, and www.cidrap.umn.edu.

262e |

Chemical Terrorism |

The use of chemical warfare agents (CWAs) in modern warfare dates back to World War I (WWI). Sulfur mustard and nerve agents were used by Iraq against the Iranian military and Kurdish civilians. Most recently, the nerve agent sarin (GB) was used by the Syrian military against its civilian population. Since the Japanese sarin attacks in 1994–1995 and the terrorist strikes of September 11, 2001, the all-too-real possibility of chemical or biological terrorism against civilian populations anywhere in the world has attracted increased attention.

Military planners consider the WWI blistering agent sulfur mustard and the organophosphorus nerve agents as the most likely agents to be used on the battlefield. In a civilian or terrorist scenario, the choice widens considerably. For example, many of the CWAs of WWI, including chlorine, phosgene, and cyanide, are used today in large amounts in industry. They are produced in chemical plants, are stockpiled in large tanks, and travel up and down highways and railways in large tanker cars. The rupture of any of these stores by accident or on purpose could cause many injuries and deaths. In three attacks in February 2007, for example, insurgents in Iraq used chlorine gas released from tankers after explosions as a crude form of chemical weaponry; these attacks killed 12 people and intoxicated more than 140 others. Countless hazardous materials (HAZMATs) that are not used on the battlefield can be used as terrorist weapons. Some of them, including insecticides and ammonia, could wreak as much damage and injury as the weaponized chemical agents.

Many mistakenly believe that chemical attacks will always be so severe that little can be done except to bury the dead. History proves the opposite. Even in WWI, when IV fluids, endotracheal tubes, and antibiotics were unavailable, the mortality rate among U.S. forces on the battlefield from CWAs—chiefly sulfur mustard and the pulmonary intoxicants—was only 1.9%. That figure was far lower than the 7% mortality rate from conventional wounds. In the 1995 Tokyo subway sarin incident, among the 5500 patients who sought medical attention at hospitals, 80% were not actually symptomatic and only 12 died. Recent events should prompt not a fatalistic attitude but a realistic wish to understand the pathophysiology of the syndromes these agents cause, with a view to treating expeditiously all patients who present for care and an expectation of saving the vast majority. As we prepare to defend our civilian population from the effects of chemical terrorism, we also must consider the fact that terrorism itself can produce sequelae such as physiologic or neurologic effects that may resemble the effects of nonlethal exposures to CWAs. These effects are due to a general fear of chemicals, fear of decontamination, fear of protective ensembles, or other phobic reactions. The increased difficulty in differentiating between stress reactions and nerve agent–induced organic brain syndromes has been pointed out. Knowledge of the behavioral effects of CWAs and their medical countermeasures is imperative to ensure that military and civilian medical and mental health organizations can deal with possible incidents involving weapons of mass destruction.

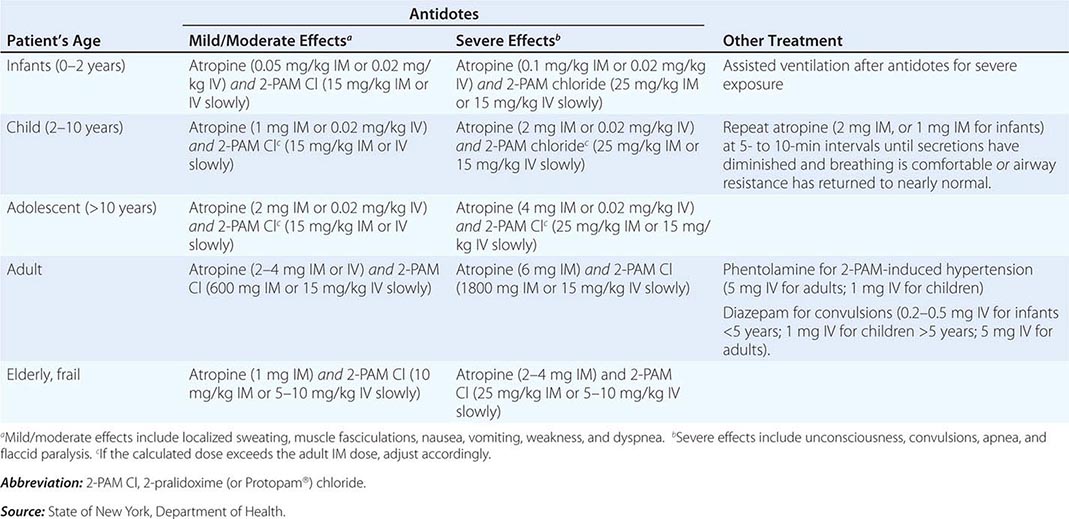

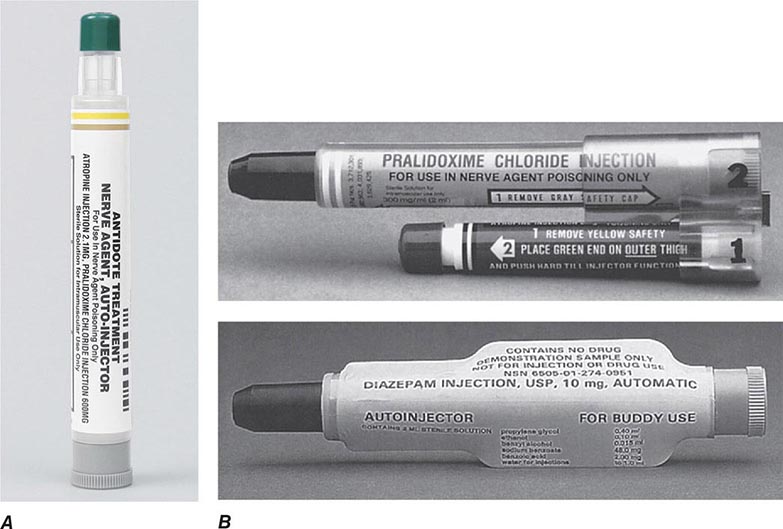

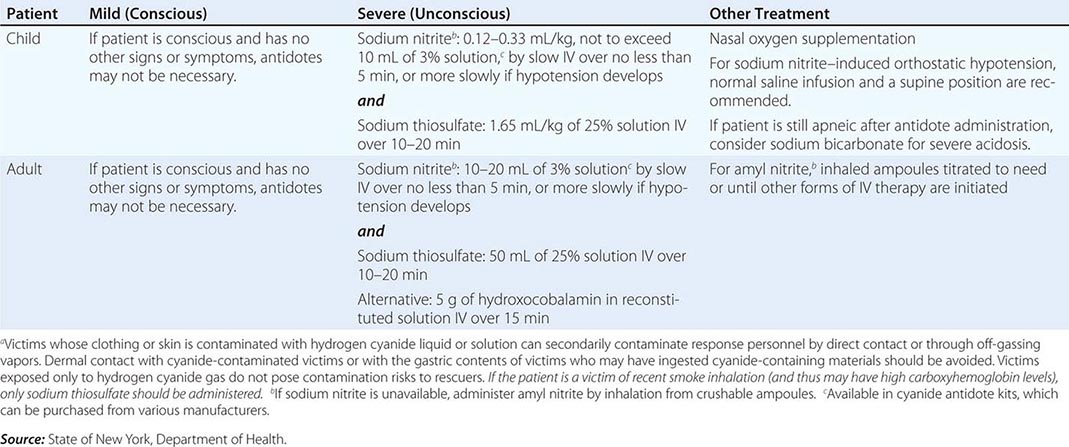

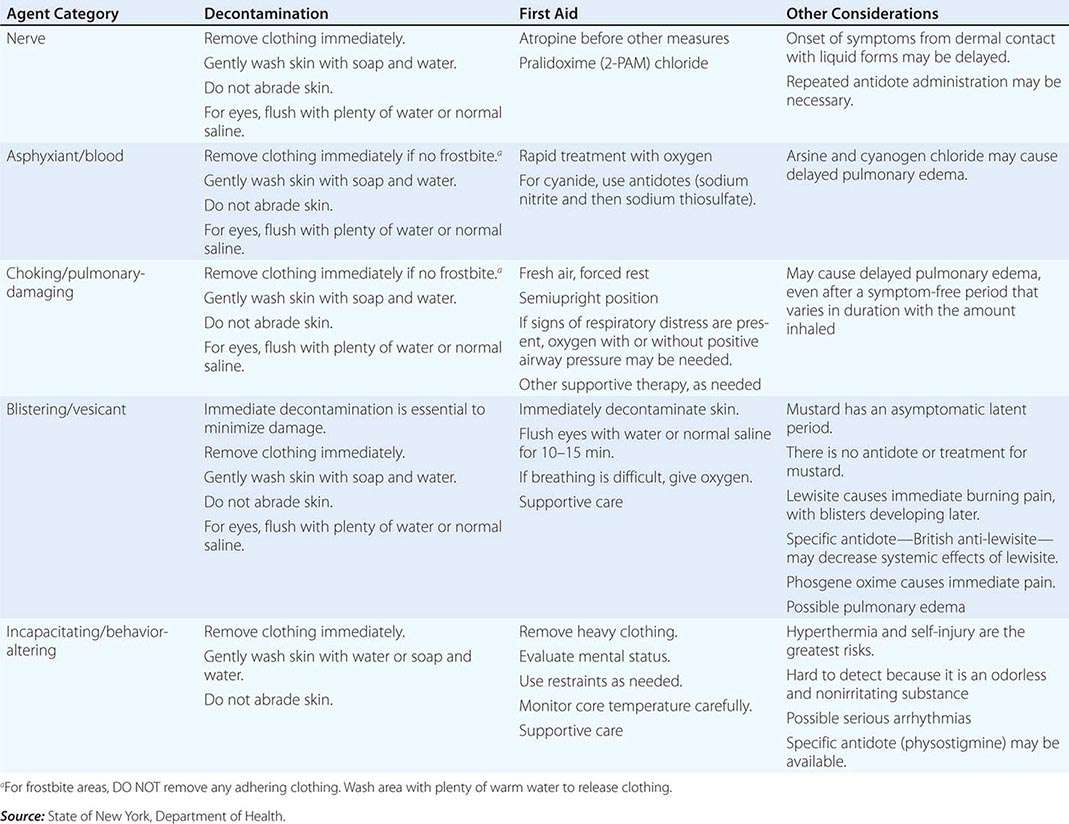

For the reader’s benefit, the CWAs, their two-letter North Atlantic Treaty Organization (NATO) codes (which were established by a NATO international convention and convey no clinical implications), their unique physical features, and their initial effects are listed in Table 262e-1. Table 262e-2 provides guidelines for immediate treatment. The focus of this chapter is on the blister and nerve CWAs, which have been employed in battle and against civilians and have had a significant public health impact.

RECOGNIZING AND DIAGNOSING HEALTH EFFECTS OF CHEMICAL TERRORISM |

DECONTAMINATION AND TREATMENT OF CHEMICAL TERRORISM |

VESICANTS: SULFUR MUSTARD

Sulfur mustard has been a military threat since it first appeared on the battlefield in Belgium during WWI. In modern times, it remains a threat on the battlefield as well as a potential chemical terrorism weapon because of the simplicity of its manufacture and its extreme effectiveness. Sulfur mustard accounted for 70% of the 1.3 million chemical casualties in WWI. Occasional cases of sulfur mustard intoxication continue to occur in the United States among people exposed to WWI- and WWII-era munitions.

Mechanism Sulfur mustard constitutes both a vapor and a liquid threat to all exposed epithelial surfaces. The effects are delayed, appearing hours after exposure. The organs most commonly affected are the skin (with erythema and vesicles), the eyes (with manifestations ranging from mild conjunctivitis to severe eye damage), and the airways (with effects ranging from mild upper airway irritation to severe bronchiolar damage). After exposure to large quantities of mustard, precursor cells of the bone marrow are damaged, with consequent pancytopenia and secondary infection. The gastrointestinal mucosa may be damaged, and there are sometimes central nervous system (CNS) signs of unknown mechanism. No specific antidotes exist; management is entirely supportive. Immediate decontamination of the liquid is the only way to reduce damage. Complete decontamination in 2 min stops clinical injury; decontamination at 5 min reduces skin injury by ~50%. Table 262e-2 lists approaches to decontamination after exposure to mustard and other CWAs.

Mustard dissolves slowly in aqueous media such as sweat, but, once dissolved, it rapidly forms cyclic ethylene sulfonium ions that are extremely reactive with cell proteins, cell membranes, and especially DNA in rapidly dividing cells. The ability of mustard to react with and alkylate DNA gives rise to the effects by which it has been characterized as “radiomimetic,” i.e., similar to radiation injury. Mustard has many biologic actions, but its actual mechanism of action is largely unknown. Much of the biologic damage from mustard results from DNA alkylation and cross-linking in rapidly dividing cells: corneal epithelium, basal keratinocytes, bronchial mucosal epithelium, gastrointestinal mucosal epithelium, and bone marrow precursor cells. This damage may lead to cellular death and inflammatory reactions. In the skin, proteolytic digestion of anchoring filaments at the epidermal-dermal junction may be the major mechanism of action resulting in blister formation. Mustard also has mild cholinergic activity, which may be responsible for effects such as early gastrointestinal and CNS symptoms. Mustard reacts with tissue within minutes of entering the body. Its circulating half-life in unaltered form is extremely brief.

Clinical Features Topical effects of mustard occur in the skin, airways, and eyes; the eyes are most sensitive and the airways next most sensitive. Absorbed mustard may produce effects in the bone marrow, gastrointestinal tract, and CNS. Direct injury to the gastrointestinal tract also may occur after ingestion of the compound through contamination of water or food.

Erythema is the mildest and earliest form of mustard skin injury. It resembles sunburn and is associated with pruritus, burning, or stinging pain. Erythema begins to appear within 2 h to 2 days after vapor exposure. Time of onset depends on severity of exposure, ambient temperature and humidity, and type of skin. The most sensitive sites are warm moist locations and areas of thin delicate skin, such as the perineum, external genitalia, axillae, antecubital fossae, and neck.

Within the erythematous areas, small vesicles can develop, which may later coalesce to form bullae (Fig. 262e-1). The typical bulla is large, dome-shaped, flaccid, thin-walled, translucent, and surrounded by erythema. The blister fluid, a transudate, is clear to straw-colored and becomes yellow, tending to coagulate. The fluid does not contain mustard and is not itself a vesicant. Lesions from high-dose liquid exposure may develop a central zone of coagulation necrosis with blister formation at the periphery. These lesions take longer to heal and are more prone to secondary infection than are the uncomplicated lesions seen at lower exposure levels. Severe lesions may require skin grafting.

FIGURE 262e-1 Large bulla formation from mustard burn in a patient. Although the blisters in this case involved only 7% of the body surface area, the patient still required hospitalization in a burn intensive care unit.

The primary airway lesion is necrosis of the mucosa with possible damage to underlying smooth muscle. The damage begins in the upper airways and descends to the lower airways in a dose-dependent manner. Usually the terminal airways and alveoli are affected only as a terminal event. Pulmonary edema is not usually present unless the damage is very severe, and then it becomes hemorrhagic.

The earliest effects of mustard—and perhaps the only effects of a low concentration—involve the nose, sinuses, and pharynx. There may be irritation or burning of the nares, epistaxis, sinus pain, and pharyngeal pain. As the concentration increases, laryngitis, voice changes, and nonproductive cough develop. Damage to the trachea and upper bronchi leads to a productive cough. Lower airway involvement causes dyspnea, severe cough, and increasing quantities of sputum. Terminally, there may be necrosis of the smaller airways with hemorrhagic edema into surrounding alveoli. Hemorrhagic pulmonary edema is rare.

Necrosis of airway mucosa causes “pseudomembrane” formation. These membranes may obstruct the bronchi. During WWI, high-dose mustard exposure caused acute death via this mechanism in a small minority of cases (Fig. 262e-2).

FIGURE 262e-2 Schematic diagram of pseudomembrane formation as is seen in high-dose sulfur mustard vapor inhalation exposure. In World War I, severe inhalation exposure often caused death via obstruction of large airways.

The eyes are the organs most sensitive to mustard vapor injury. The latent period is shorter for eye injury than for skin injury and is also dependent on exposure concentration. After low-dose vapor exposure, irritation evidenced by reddening of the eyes may be the only effect. As the dose increases, the injury includes progressively severe conjunctivitis, photophobia, blepharospasm, pain, and corneal damage (Fig. 262e-3). About 90% of eye injuries related to mustard heal in 2 weeks to 2 months without sequelae. Scarring between the iris and the lens may follow severe effects; this scarring may restrict pupillary movements and predispose victims to glaucoma. The most severe damage is caused by liquid mustard. Extensive eye exposure can be followed by severe corneal damage with possible perforation of the cornea and loss of the eye. In some individuals, latent chronic keratitis, sometimes associated with corneal ulcerations, has been described as early as 8 months and as late as 20 years after initial exposure.

FIGURE 262e-3 World War I photograph of troops exposed to sulfur mustard vapor. The vast majority of these troops survived with no long-term damage to the eyes; however, they were rendered effectively blind for days or weeks.

The mucosa of the gastrointestinal tract is susceptible to mustard damage from either systemic absorption or ingestion of the agent. Mustard exposure in small amounts will cause nausea and vomiting lasting up to 24 h. The mechanism of the nausea and vomiting is not understood, but mustard does have a cholinergic-like effect. The CNS effects of mustard also remain poorly defined. Exposure to large amounts can cause seizures in animals. Reports from WWI and from the Iran–Iraq war described people exposed to small amounts of mustard acting sluggish, apathetic, and lethargic. These reports suggest that minor psychological problems could linger for ≥1 year.

The cause of death in the majority of mustard poisoning cases is sepsis and respiratory failure. Mechanical obstruction via pseudomembrane formation and agent-induced laryngospasm is important in the first 24 h, but only in cases of severe exposure. From the third through the fifth day after exposure, secondary pneumonia due to bacterial invasion of denuded necrotic mucosa can be expected. The third wave of death is caused by agent-induced bone marrow suppression, which peaks 7–21 days after exposure and causes death via sepsis.

NERVE AGENTS

The organophosphorus nerve agents are the deadliest of the CWAs. They work by inhibition of tissue synaptic acetylcholinesterase, creating an acute cholinergic crisis. Death ensues because of respiratory depression and can occur within seconds to minutes.

The nerve agents tabun and sarin were first used on the battlefield by Iraq against Iran during the first Persian Gulf War (1984–1987). Estimates of casualties from these agents range from 20,000 to 100,000. In 1994 and 1995, the Japanese cult Aum Shinrikyo used sarin in two terrorist attacks in Matsumoto and Tokyo. Two U.S. soldiers were exposed to sarin while rendering safe an improvised explosive device in Iraq in 2004.

The “classic” nerve agents include tabun (GA), sarin (GB), soman (GD), cyclosarin (GF), and VX; VR, similar to VX, was manufactured in the former Soviet Union (Table 262e-1). All the nerve agents are organophosphorus compounds, which are liquid at standard temperature and pressure. The “G” agents, except for cyclosarin, which is oily, evaporate at about the rate of water and probably will have evaporated within 24 h after deposition on the ground. Their high volatility thus makes a spill of any amount a serious vapor hazard. In the Tokyo subway attack in which sarin was used, 100% of the symptomatic patients inhaled vapor from sarin that had spilled onto the floor of the subway cars. The low vapor pressure of VX, an oily liquid, makes it much less of a vapor hazard but potentially a greater environmental hazard because it persists in the environment far longer.

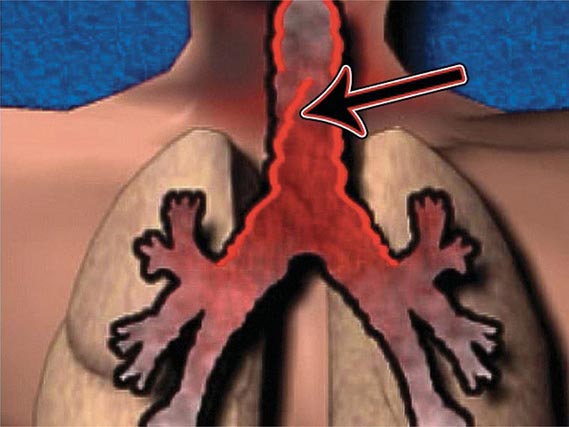

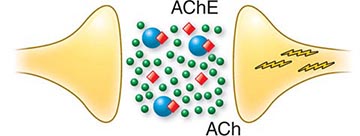

Mechanism Acetylcholinesterase inhibition accounts for the major life-threatening effects of nerve agent poisoning. The efficacy of antidotal therapy in the reversal of this inhibition proves that this is the primary toxic action of these poisons. At cholinergic synapses, acetylcholinesterase, bound to the postsynaptic membrane, functions as a turn-off switch to regulate cholinergic transmission. Inhibition of acetylcholinesterases causes the released neurotransmitter, acetylcholine, to accumulate abnormally. End-organ overstimulation, which is recognized by clinicians as a cholinergic crisis, ensues (Fig. 262e-4).

FIGURE 262e-4 Schematic diagram of the pathophysiology of nerve agent exposure. Nerve agent binds to the active site of acetylcholinesterase (AChE), which is shown as floating free in space but is in reality a postsynaptic membrane-bound enzyme. As a result, acetylcholine, which normally is released from presynaptic membrane but then is degraded, accumulates, and this leads to organ overstimulation and cholinergic crisis.

Clinical Features Clinical effects of nerve agent exposure are identical for vapor and liquid exposure routes if the dose is sufficiently large. The speed and order of symptom onset will differ (Table 262e-2).

Exposure of a patient to nerve agent vapor, by far the more likely route of exposure in both battlefield and terrorist scenarios, will cause cholinergic symptoms in the order in which the toxin encounters cholinergic synapses. The most exposed synapses on the human integument are in the pupillary muscles. Nerve agent vapor easily crosses the cornea, interacts with these synapses, and produces miosis, described by Tokyo subway victims as “the world going black.” Rarely, this vapor also can cause eye pain and nausea. Exocrine glands in the nose, mouth, and pharynx are next exposed to the vapor, and cholinergic overload here causes increased secretions, rhinorrhea, excess salivation, and drooling. Toxin then interacts with exocrine glands in the upper airway, causing bronchorrhea, and with bronchial smooth muscle, causing bronchospasm. This combination of events can result in hypoxia.

Once the victim has inhaled, vapor can passively cross the alveolar-capillary membrane, enter the bloodstream, and incidentally and asymptomatically inhibit circulating cholinesterases, particularly free butyrylcholinesterase and erythrocyte acetylcholinesterase, both of which can be assayed. Unfortunately, the results of this assay may not be easily interpretable without a baseline, since cholinesterase levels vary enormously between individuals and over time in an individual, healthy patient.

Usually the first organ system to become symptomatic from bloodborne nerve agent exposure is the gastrointestinal tract, where cholinergic overload causes abdominal cramping and pain, nausea, vomiting, and diarrhea. After the gastrointestinal tract becomes involved, nerve agents will affect the heart, distant exocrine glands, muscles, and brain. Because there are cholinergic synapses on both the vagal (parasympathetic) and sympathetic sides of the autonomic input to the heart, one cannot predict how heart rate and blood pressure will change once intoxication has occurred. Remote exocrine activity will include oversecretion in the salivary, nasal, respiratory, and sweat glands—the patient will be “wet all over.” Bloodborne nerve agents will overstimulate neuromuscular junctions in skeletal muscles, causing fasciculations followed by frank twitching. If the process goes on long enough, ATP in muscles will eventually be depleted and flaccid paralysis will ensue.

In the brain, since the cholinergic system is so widely distributed, bloodborne nerve agents will, in sufficient doses, cause rapid loss of consciousness, seizures, and central apnea leading to death within minutes. If respiration is supported, status epilepticus that does not respond to usual anticonvulsants may ensue (Chap. 445). If status epilepticus persists, neuronal death and permanent brain dysfunction may occur. Even in mild nerve-agent intoxication, patients may recover but may experience weeks of irritability, sleep disturbance, and nonspecific neurobehavioral manifestations.

The time from exposure to development of the full-blown cholinergic crisis after nerve agent vapor inhalation can be minutes or even seconds, yet there is no depot effect. Since nerve agents have a short circulating half-life, if the patient is supported and, ideally, treated with antidotes, improvement should be rapid, without subsequent deterioration.

Liquid exposure to nerve agents results in different speeds and orders of symptom onset. A nerve agent on intact skin will partially evaporate and partially begin to travel through the skin, causing localized sweating and then, when it encounters neuromuscular junctions, localized fasciculations. Once in muscle, the agent will cross into the circulation and cause gastrointestinal discomfort, respiratory distress, heart rate changes, generalized fasciculations and twitching, loss of consciousness, seizures, and central apnea. The time course will be much longer than with vapor inhalation; even a large, lethal droplet can take up to 30 min to exert an effect, and a small, sublethal dose could progressively take effect over 18 h. Clinical worsening that occurs hours after treatment has started is far more likely with liquid than with vapor exposure. In addition, miosis, which is practically unavoidable with vapor exposure, is not always present with liquid exposure and may be the last manifestation to develop in this situation; such a delay is due to the relative insulation of the pupillary muscle from the systemic circulation.

Unless the cholinesterase is reactivated by specific therapy (oximes), the binding of the nerve agent to the enzyme is essentially irreversible. Erythrocyte acetylcholinesterase activity recovers at ~1% per day. Plasma butyrylcholinesterase recovers more quickly and is a better guide to recovery of tissue enzyme activity.
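As a rough worked illustration of the ~1% per day figure for erythrocyte acetylcholinesterase, the sketch below estimates how long activity might take to return to baseline. It assumes, as a simplification not stated in the text, that recovery is linear at a fixed percentage of baseline per day and that no oxime reactivation occurs. The function name and parameters are hypothetical and are not a clinical tool.

```python
def days_to_baseline(current_pct: float, rate_pct_per_day: float = 1.0) -> float:
    """Estimate days for erythrocyte AChE activity to return to 100% of baseline.

    Assumes linear recovery at a fixed percentage of baseline per day
    (an illustrative simplification; ~1% per day is the figure quoted in the text).
    """
    if not 0.0 <= current_pct <= 100.0:
        raise ValueError("current_pct must be between 0 and 100")
    if rate_pct_per_day <= 0.0:
        raise ValueError("rate_pct_per_day must be positive")
    return (100.0 - current_pct) / rate_pct_per_day


if __name__ == "__main__":
    # Activity measured at 30% of the patient's own baseline
    print(days_to_baseline(30.0))  # 70.0
```

Under these assumptions, a patient whose erythrocyte enzyme activity has fallen to 30% of baseline would need roughly 10 weeks to recover fully, which is consistent with the slow recovery described above.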

CYANIDE

Cyanide (CN–) has become an agent of particular interest in terrorist scenarios because of its applicability to indoor targets. In recent years, for example, attacks with this agent have targeted the water supply of the U.S. Embassy in Italy. The 1993 World Trade Center bombing in New York may have been intended as a cyanide release as well.

Hydrogen cyanide and cyanogen chloride, the major forms of cyanide, are either true gases or liquids very close to their boiling points at standard room temperature. Hydrogen cyanide gas is lighter than air and does not remain concentrated outdoors for long; thus it is a poor military weapon but an effective weapon in an indoor space such as a train station or a sports arena. Cyanide is also water-soluble and poses a threat to the food and water supply from either accidents or malign intent. It is well absorbed from the gastrointestinal tract, through the skin, or via inhalation—the preferred terrorist route. Cyanide smells like bitter almonds, but 50% of persons lack the ability to smell it.

Unique among CWAs, cyanide is a normal constituent of the environment and actually is a required cofactor in many compounds important in metabolism, including vitamin B12. Cyanide is present in many plants, including tobacco; therefore, smokers, for instance, chronically carry cyanide at three times the usual level. Humans have evolved a detoxification mechanism for cyanide. Cyanide poisoning results if a large challenge of CN– overwhelms this mechanism, while treatment of cyanide poisoning exploits it.

Mechanism Cyanide directly poisons the last step in the mitochondrial electron transport chain, cytochrome a3, which results in a shutdown of cellular energy production. Tissues are poisoned in direct proportion to their metabolic rate, with the carotid baroreceptors and the brain—the most metabolically active tissues in the body—affected fastest and most severely. This poisoning results from cyanide’s high affinity for certain metals, notably Co and Fe+++. Cytochrome a3 contains Fe+++, to which CN– binds. Cyanide-poisoned tissues cannot extract oxygen from the blood; even though pulmonary oxygen exchange and cardiac function are preserved, cells die of hypoxia—i.e., of histotoxic rather than cardiopulmonary cause.

Clinical Features Hyperpnea occurs ~15 s after inhalation of a high concentration of cyanide and is followed within 15–30 s by the onset of convulsions and electrical status epilepticus. Respiratory activity stops 2–3 min later, and cardiac activity ceases several minutes after that. Exposure, especially via inhalation, to a large challenge of CN– can cause death in as little as 8 min. Smaller challenges will cause symptoms to develop over a longer period; very low doses may produce no effects at all because of the body’s ability to detoxify small amounts. Cyanogen chloride additionally produces mucous membrane irritation. Many but not all patients have a cherry-red appearance because their venous blood remains oxygenated.
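The intervals quoted above for high-concentration inhalation can be summed to give an approximate time window for each event, measured from the moment of inhalation. The sketch below uses only the figures in the preceding paragraph. The variable and function names are hypothetical, and the interval to cardiac arrest ("several minutes") is left out because the text does not quantify it.

```python
# Intervals in seconds, as quoted in the text for high-concentration inhalation.
# Each pair is (earliest, latest) time elapsed since the previous event.
INTERVALS = [
    ("hyperpnea", (15, 15)),
    ("convulsions", (15, 30)),
    ("respiratory arrest", (120, 180)),
]


def cumulative_timeline(intervals):
    """Return [(event, earliest_s, latest_s)] measured from inhalation."""
    timeline = []
    earliest = latest = 0
    for event, (step_min, step_max) in intervals:
        earliest += step_min
        latest += step_max
        timeline.append((event, earliest, latest))
    return timeline


if __name__ == "__main__":
    for event, earliest, latest in cumulative_timeline(INTERVALS):
        print(f"{event}: {earliest}-{latest} s after inhalation")
```

On these figures, respiratory arrest falls roughly 2.5 to 3.75 min after inhalation, which leaves several minutes before cardiac arrest and fits the stated minimum of about 8 min to death.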

Differential Diagnosis In a mass casualty incident caused by a chemical agent, the primary differential diagnosis of cyanide poisoning will be nerve agent poisoning. Cyanide-poisoned patients lack the prominent cholinergic signs seen in nerve agent poisoning, such as miosis and increased secretions. The cherry-red appearance often seen in cyanide poisoning is never seen in nerve agent poisoning. Cyanosis, confusingly, is not a prominent early sign in cyanide poisoning.

Prognosis Cyanide casualties tend to recover much more quickly than casualties exposed to other chemical agents. Many patients with industrial cases have returned to work within the same shift. If a patient receives a large challenge and dies, death usually takes place within minutes of exposure.

263e |

Radiation Terrorism |

The threat of a terror attack employing nuclear or radiation-related devices is unequivocal in the twenty-first century. Such an attack would certainly have the potential to cause unique and devastating medical and psychological effects that would require prompt action by members of the medical community. This chapter outlines the most probable scenarios for an attack involving radiation as well as the medical principles for handling such threats.

Potential terrorist incidents with radiologic consequences may be considered in two major categories. The first is the use of radiologic dispersal devices. Such devices disseminate radioactive material purposefully and without nuclear detonation. An attack with a goal of radiologic dispersal could take place through use of conventional explosives with incorporated radionuclides (“dirty bombs”), one or more fixed nuclear facilities, or nuclear-powered surface vessels or submarines. Other means could include detonation of malfunctioning nuclear weapons with no nuclear yield (nuclear “duds”) and installation of radionuclides in food or water. The second and less probable scenario is the actual use of nuclear weapons. Each scenario poses its own specific medical threats, including “conventional” blast or thermal injury, introduction to a radiation field, and exposure to either external or internal contamination from a radioactive explosion.

TYPES OF RADIOISOTOPIC RADIATION