Carbon dioxide absorption

CO2 absorbers

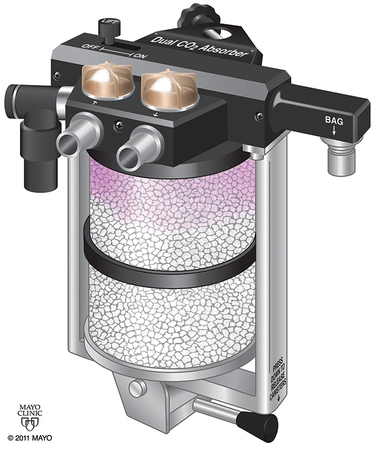

The amount of CO2 that can be absorbed varies with the type of absorbent. In practice, this maximum is rarely achieved because of factors such as channeling of gas flow around the absorbent granules. Channeling is the preferential passage of exhaled gas through the canister along the pathways of least resistance. Excessive channeling bypasses much of the granule bulk and reduces absorption efficiency. Proper canister design, with screens and baffles, together with proper packing of the granules helps reduce channeling (Figure 8-1).
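The effect of channeling on efficiency can be illustrated with a deliberately simplified toy model. This is a hypothetical sketch, not a clinical calculation. It assumes the following: the canister behaves as a few parallel gas pathways (the `Pathway` class and `utilization_at_breakthrough` function are illustrative names introduced here); flow divides among them in inverse proportion to resistance; each pathway absorbs all CO2 sent through it until its granules are exhausted; and CO2 first reaches the outlet ("breakthrough") when any one pathway is exhausted. All resistances and capacities are in arbitrary units.

```python
from dataclasses import dataclass


@dataclass
class Pathway:
    """One hypothetical gas pathway through the absorber canister."""
    resistance: float  # relative resistance to gas flow (arbitrary units)
    capacity: float    # CO2 this pathway's granules can absorb (arbitrary units)


def utilization_at_breakthrough(pathways):
    """Fraction of total absorbent capacity used when the first pathway is exhausted.

    Toy-model assumptions: flow splits among parallel pathways in proportion to
    1/resistance, and breakthrough occurs as soon as any single pathway is spent.
    """
    conductances = [1.0 / p.resistance for p in pathways]
    total_conductance = sum(conductances)
    # Share of the exhaled CO2 load carried by each pathway.
    shares = [g / total_conductance for g in conductances]
    # Total CO2 delivered to the canister before each pathway is exhausted.
    exhaustion = [p.capacity / s for p, s in zip(pathways, shares)]
    delivered_at_breakthrough = min(exhaustion)
    total_capacity = sum(p.capacity for p in pathways)
    return delivered_at_breakthrough / total_capacity


if __name__ == "__main__":
    # Evenly packed canister: all pathways have equal resistance.
    uniform = [Pathway(resistance=1.0, capacity=1.0) for _ in range(4)]
    # One low-resistance channel (e.g. a loosely packed gap) among four pathways.
    channeled = [Pathway(resistance=0.25, capacity=1.0)] + [
        Pathway(resistance=1.0, capacity=1.0) for _ in range(3)
    ]
    print(f"uniform:   {utilization_at_breakthrough(uniform):.2f}")    # uniform:   1.00
    print(f"channeled: {utilization_at_breakthrough(channeled):.2f}")  # channeled: 0.44
```

In this toy model, the low-resistance channel carries 4/7 of the flow. Its granules are therefore exhausted first, and CO2 breaks through while less than half of the total absorbent has been used. This mirrors the qualitative point that channeling bypasses much of the granule bulk. Real canisters are not this simple, so the numbers themselves carry no clinical meaning.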