Chapter 212 Art and Science of Guideline Formation

Clinical practice guidelines have become an integral part of the practice of medicine. They are meant to be used by physicians as resources to consider when making treatment decisions for individual patients. They are also frequently used by various organizations for policy and payment decisions. As of February 2009, 2408 sets of clinical guidelines were listed on the National Guidelines Clearinghouse (NGC), with 479 additional guideline sets registered as “in progress” (http://www.guideline.gov). One hundred and seventy-four guideline sets in this one database focus on disorders of the spine. Only 25 of these were produced by organized spine surgery, sponsored by either the AANS/CNS Section on Disorders of the Spine or the North American Spine Society. These sets do not include myriad “technology assessments” commissioned by third-party payers, nor do they include a multitude of guidelines, evidence-based reviews, evidence-informed consensus statements, or other similarly titled systematic literature reviews published and distributed outside of the NGC system. Clinical practice guidelines are here to stay and have proven to be important for the assessment of current best practices, guidance for future research, and defense of unpopular yet effective treatment strategies. The purpose of this chapter is to describe how guidelines are created in both the ideal situation and in the real world.

Author Group

One of the most useful tools for learning about evidence-based medicine, guidelines, and the application of guidelines to the real world is a small text by David Sackett and the McMaster University group called Evidence Based Medicine.1 We refer to this text several times in this chapter when discussing how to rate evidence and how to apply evidence to clinical situations. In the chapter devoted to a discussion of the creation of clinical practice guidelines, Dr. Sackett offers the reader the following advice:

We hope, …, that you see how doubly dumb it is for one or a small group of local clinicians to try and create the evidence component of a guideline all by themselves. Not only are we ill equipped and inadequately resourced for the task, but by taking it on we steal energy away from …our real expertise… This chapter closes with the admonition to frontline clinicians: when it comes to lending a hand with guideline development, work as a “B-keeper*” not a meta-analyst.1

Despite this warning, it is absolutely critical that physicians with clinical expertise participate in the formation of clinical practice guidelines. Although epidemiologic support is necessary for the analysis of study design, clinical data cannot be accurately interpreted and the translation of data to recommendation cannot be made without an understanding of the clinical significance of the data. This understanding does not come from textbooks. A more reasonable interpretation of Sackett’s statement is that it is not efficient or desirable to have individual groups spend the resources to develop practice guidelines at a local level. It makes more sense to have guidelines produced at a national level and leave the interpretation of those guidelines to the local experts. A series of review articles published in the Journal of the American Medical Association by the same author group offers detailed explanations of many of the concepts to be discussed in this chapter. The level of detail is inappropriate for this particular review, but the reader is encouraged to use these as references for further inquiry.2–20

Conflict of interest is an important issue in the formation of a guidelines author group. Disclosure of such conflicts is the first step in managing conflicts, and the organizing body, be it a medical society, university work group, or insurance carrier, must decide how to manage or resolve the conflict. In some situations, compromises are necessary in order to garner sufficient topical expertise. In most situations, however, author groups can be constructed and organized to mitigate the possibility of industry-related conflicts. It is our opinion that industry-sponsored “study groups” are an inappropriate source for clinical practice guidelines because the membership and strategic direction of these panels may be easily influenced by the sponsoring body. Similarly, technology assessments produced by centers that are funded largely by third-party payers cannot be considered practice guidelines since they are paid for by entities primarily desiring to limit economic exposure as opposed to evaluating clinical efficacy. Furthermore, these panels notoriously lack relevant physician input and tend to place a higher value on study design and author interpretation of data than on common sense and clinical fact. For example, go to www.ecri.org and review their assessment of “decompressive procedures for lumbosacral pain.” You will note that the author group contained only one physician, an Emergency Care Research Institute (ECRI) employee who practices internal medicine. No spine surgeon, physical therapist, rehabilitation physician, or other specialist input was solicited, and the topic is clearly ridiculous to anyone who regularly cares for these patients: decompression is not done as a treatment for low back pain; it is done for radiculopathy or stenosis.

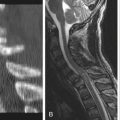

Those in the field of organized spine surgery, including the American Association of Neurological Surgeons and Congress of Neurological Surgeons Joint Section on Disorders of the Spine (Spine Section) and the North American Spine Society (NASS), have been active in guidelines development. The first significant product developed using modern evidence-based review techniques was the set of clinical practice guidelines dealing with cervical spine and spinal cord injury.21 The author group was recruited by Mark Hadley and consisted exclusively of neurosurgeons, both because of the funding agency (the Spine Section) and because of relative inexperience in guidelines formation. The group included general neurosurgeons, pediatric neurosurgeons, and neurosurgical spine specialists. Beverly Walters, a neurosurgeon who had trained in clinical epidemiology at McMaster University, served as the epidemiologist. Each of the authors was employed at an academic center and had the support of local expertise in library science and statistics if necessary. The authors were tutored in evidence-based medicine techniques during 4-week-long sessions in order to solidify their ability to interpret the medical literature.

These guidelines were unique in the spine world and were qualitatively different from the various consensus-based guidelines that had been published previously (e.g., the NASS Low Back Pain Treatment Guidelines published in 1999). Because they applied to a relatively small patient population and because they were originally published as a supplement to Neurosurgery, a journal with virtually no penetration into emergency medicine or orthopaedics, they did not receive immediate widespread attention. With the exception of chapters dealing with the administration of steroids and the safety of traction reduction without MRI, very few recommendations were considered controversial.21

Question Formation

Once an author group is formed, a set of questions is developed. The questions asked are a very important determinant of the utility of the ultimate guideline document. Questions need to be both relevant and answerable. A question such as, “What is the best treatment for low back pain?” is unanswerable. Patients with low back pain are a heterogeneous population. Back pain may be caused by muscular strain, traumatic injury, degeneration of the intervertebral disc, or spinal tumors. It may be a symptom of renal calculi, dissecting aortic aneurysm, or a somatization disorder. There is, therefore, no one best treatment for back pain, and attempting to answer such a question is a frustrating and fruitless endeavor. A better question would be “In a patient with recalcitrant low back pain and neurogenic claudication due to spondylolisthesis and stenosis, does surgical intervention improve outcomes compared with the natural history of the disease?” Here, the patient population is well defined and the treatment modalities are well described, allowing a meaningful review of the medical literature. During the literature search, it may become apparent that multiple surgical interventions are employed, resulting in the parsing of the question into subcomponents related to individual surgical techniques.

Literature Search

The availability of computerized search engines has greatly simplified the identification of potentially useful references. Most guidelines groups use two different search engines and databases to ensure a thorough search. Familiarity with MeSH headings or consultation with a librarian is very useful in creating an effective search that will not be overly inclusive. Unfortunately, the era of electronic publishing has greatly increased the number of potentially useful references (when just the title and abstract are available for initial screening), and it is not uncommon to obtain several hundred or even several thousand references that require individual review. Several strategies can be used to speed this process. First of all, if sufficient high-quality evidence, such as several concordant randomized trials, exists, lower-quality evidence may be ignored except as background information. For example, about 7 billion papers deal with the use of microdiscectomy for lumbar radiculopathy (OK, an exaggeration). Of these papers, 99.9% are case reports, small case series, technical notes, or historic anecdotes. There are a few large cohort studies with admittedly fatal flaws. Fortunately, several attempted randomized studies have been published within the last few years22,23 that provide higher-quality evidence than all of the other papers. Instead of spending months describing each case series, we can focus our review on a detailed analysis of the higher-quality papers and simply summarize the findings of the various case series. If the primary references are flawed, however, then we must incorporate the lower-quality evidence into the analysis.
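The first screening strategy can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Reference` class, the design labels, and the threshold of two sound randomized trials are hypothetical choices made for this example, and the sketch does not check whether the trials are concordant, which remains a judgment for the author group.

```python
from dataclasses import dataclass


@dataclass
class Reference:
    title: str
    design: str           # hypothetical labels, e.g. "rct", "cohort", "case_series"
    flawed: bool = False  # reviewer judgment that the study has serious flaws


def select_for_detailed_review(refs, min_sound_rcts=2):
    """Split references into (detailed_review, summary_only).

    Assumption: if at least `min_sound_rcts` unflawed randomized trials
    exist, only those receive detailed analysis and the remaining papers
    are summarized as background. Otherwise every reference is kept for
    detailed analysis, because the lower-quality evidence must be used.
    """
    sound_rcts = [r for r in refs if r.design == "rct" and not r.flawed]
    if len(sound_rcts) >= min_sound_rcts:
        background = [r for r in refs if r not in sound_rcts]
        return sound_rcts, background
    return list(refs), []


if __name__ == "__main__":
    library = [
        Reference("Trial A", "rct"),
        Reference("Trial B", "rct"),
        Reference("Series C", "case_series"),
    ]
    detailed, background = select_for_detailed_review(library)
    print([r.title for r in detailed], [r.title for r in background])
```

The threshold is deliberately a parameter: how many trials count as "sufficient" is a decision for the guideline group, not something the code can settle.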

Another way to speed up the literature search and review is to set minimum acceptable criteria for inclusion in the database. This is the strategy used by the Cochrane group, which considers only randomized clinical trials (RCTs) as evidence worthy of review.24 Although this strategy certainly speeds up the review process, many relevant questions in the surgical realm are not particularly amenable to study via RCT. If the Cochrane criteria were applied to the surgical management of symptomatic intracranial extradural hematomas, the conclusion would necessarily be that there is no evidence to support the evacuation of such hematomas. No RCT has ever been performed on this patient population. Although academically pure, the adherence to such high standards breaks down in the trenches. A very humorous article in the British Medical Journal pointed out that, because skydiving with a parachute is associated with occasional fatality and survival after falling out of a plane with no parachute or a malfunctioning one has been described, one must conclude, in the absence of a randomized trial, that there is no evidence to support the use of a parachute to increase survival when jumping out of an airplane.25

Evidence Grading

Once a dataset of relevant papers has been created, the papers must be read by several members of the author group and graded in terms of the quality of evidence provided. Several grading schemes are commonly used; however, most current guidelines use either a three- or five-point scale, with appropriately designed and performed RCTs being considered the highest level of evidence and expert opinion holding the lowest spot in the rankings. Criteria of quality do differ, however, according to the type of question asked (see Sackett, p. 173, for a useful summary table).1 For example, in evaluating a diagnostic test, if a “gold standard” exists, then a simple comparison between the new test and the gold standard in a single patient population with adequate reporting of results (true and false positives, true and false negatives) is considered high-quality evidence. In the therapeutic realm, where most of our questions exist (what is the best treatment for a patient with a known diagnosis), RCTs are king, with cohort studies (in which two groups of patients are treated for the same disease process with two different strategies) and case-control studies (in which characteristics of a group of interest are compared with characteristics of the general population) providing intermediate levels of evidence above that provided by case series (in which there is nothing to compare the results to) or case reports.1
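For the diagnostic case, the reporting of true and false positives and negatives against a gold standard reduces to a two-by-two table. The following Python sketch shows the standard summary measures that can be derived from such a table. The function name and the counts in the usage example are invented for illustration and do not come from any study cited in this chapter.

```python
def diagnostic_accuracy(tp, fp, fn, tn):
    """Summarize a new test against a gold standard from a 2x2 table.

    tp / fn: gold-standard-positive patients the new test called
             positive / negative.
    tn / fp: gold-standard-negative patients the new test called
             negative / positive.
    Assumes every denominator is nonzero.
    """
    return {
        "sensitivity": tp / (tp + fn),  # diseased patients correctly detected
        "specificity": tn / (tn + fp),  # healthy patients correctly cleared
        "ppv": tp / (tp + fp),          # chance a positive result is correct
        "npv": tn / (tn + fn),          # chance a negative result is correct
    }


if __name__ == "__main__":
    # Illustrative counts only.
    for name, value in diagnostic_accuracy(tp=40, fp=10, fn=20, tn=30).items():
        print(f"{name}: {value:.2f}")
```

A paper that reports all four cells allows the reader to recompute these measures; a paper that reports only an overall "accuracy" does not.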

Identifying the type of study used can be tricky sometimes, and even when studies appear to be well designed, they often have flaws that result in downgrading of the evidence to a lower class. The most common reasons for downgrading evidence derived from clinical studies include flaws in study design, the selection of the study sample, and the nature and quality of outcomes measures. The Spine Patient Outcomes Research Trial (SPORT) is an example of how problems with study design, whether planned or not, can decrease the quality of evidence derived from randomized studies. The SPORT investigators set out to perform a randomized controlled clinical trial to establish the efficacy of surgery for one of three disorders: lumbar disc herniation, spondylolisthesis, or lumbar stenosis.23,26–28 Patients were screened for eligibility and then offered the opportunity to participate in the clinical trials. The first methodologic concern relates to the fact that only about a quarter of eligible patients consented to participate in the study. The fact that most of the eligible patients declined participation immediately raises the concern that the patients who did consent were different from the general population: perhaps these patients had less severe symptoms, perhaps they were already improving, or perhaps they had a genetic predisposition toward risk taking. To their credit, the investigators did keep track of a group of patients who declined randomization to try to address this concern.

Once randomized, patient results were analyzed on an “intent to treat” basis. This means that patient results stayed in the group that the patient was assigned to, regardless of what happened to the patient. Therefore, if a patient was assigned to nonoperative management, failed, then had surgery, and had a great result from the surgery, the great result was credited to the nonoperative management. Patients who fail in the surgical arm cannot cross back over to the nonoperative arm. This type of analysis creates a significant bias against any nonreversible intervention such as surgery. In fact, if crossover is high enough, then the analysis must be abandoned, which is what happened. A tremendous amount of crossover took place in both directions, creating comparison groups that did not differ in terms of the treatment received. About half of the patients who were randomized to nonsurgical treatment had surgery, as did about half of the patients randomized to surgery in all three arms of the study.23,26–28 No matter how effective any treatment is, it would be impossible to detect a difference between groups if the same number of patients in each group received the treatment. The authors had to resort to an “as treated” analysis, that is, a reporting of what actually happened to the patients. In all three studies, patients treated surgically enjoyed significant benefit in every outcome measure and at every time point. This is great news for proponents of surgery; however, the study is no longer a randomized study. Because the patients were able to pretty much choose their treatment regardless of randomization, the study is actually a cohort study and would be considered to provide lower-quality evidence than a randomized trial.
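The dilution produced by crossover can be shown with simple arithmetic. The Python sketch below is a toy model, not a reanalysis of SPORT: the success probabilities are invented, and the model assumes that outcome depends only on the treatment actually received and that crossover is unrelated to prognosis, which is unlikely to hold in a real trial.

```python
def itt_success_rates(p_surgery, p_nonop, crossover_to_surgery, crossover_to_nonop):
    """Expected success rate credited to each arm under intent-to-treat.

    p_surgery, p_nonop:    hypothetical success probabilities for patients
                           who actually receive each treatment.
    crossover_to_surgery:  fraction of the nonoperative arm that has surgery.
    crossover_to_nonop:    fraction of the surgical arm that never has surgery.
    Returns (surgical_arm_rate, nonoperative_arm_rate).
    """
    surgical_arm = (1 - crossover_to_nonop) * p_surgery + crossover_to_nonop * p_nonop
    nonop_arm = crossover_to_surgery * p_surgery + (1 - crossover_to_surgery) * p_nonop
    return surgical_arm, nonop_arm


if __name__ == "__main__":
    # Invented effect sizes: surgery helps 80% of patients, nonoperative care 50%.
    for crossover in (0.0, 0.25, 0.5):
        surg, nonop = itt_success_rates(0.8, 0.5, crossover, crossover)
        print(f"crossover {crossover:.0%}: ITT difference = {surg - nonop:.3f}")
```

With no crossover the arms differ by the full treatment effect. When half of each arm ends up having surgery, both arms contain the same mix of treated and untreated patients, and the intent-to-treat difference vanishes regardless of how effective the operation is.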

Patient selection can also be manipulated on purpose for specific reasons. When organizing a clinical trial, investigators, particularly those who are motivated to achieve a positive result, try to select a patient population most likely to benefit from the intervention being studied. For example, recently published studies looking at lumbar disc arthroplasty included a very select group of patients without significant spondylosis, facet arthropathy, spondylolisthesis, or stenosis.29,30 Although the arthroplasty group results were equivalent or, in some cases, marginally better than the comparison fusion groups, many authors have pointed out that the population operated on was not representative of the usual lumbar fusion patient population. For example, Wong et al. reported that none of 100 consecutive patients offered lumbar fusion in their practice would have been a candidate for arthroplasty had the study criteria been applied.31 Others have pointed out that the study population did not represent patients to whom many surgeons would offer a lumbar fusion in the first place32 and have questioned the relevance of the arthroplasty studies’ data to the broader fusion population. Therefore, although the trials were well organized and used valid outcomes instruments, any evidence drawn from the data presented must be interpreted in light of the fact that the data are based on a highly select and perhaps irrelevant patient population. For this reason, the data derived from such studies would not be considered to provide high-quality evidence for the general fusion population.

Outcomes measures must be used to report any sort of results. Outcomes measures may be patient reported, such as satisfaction scores, pain scales, or disability indexes. Some measures may be investigator reported, such as the absence or presence of neurologic deficits or other surgical complications. Other measures, such as radiographic measures, laboratory values, and survival statistics, may be reported independently of investigator or patient interpretation. Choice of an outcome measure is important and can influence the quality of information derived from a study. For example, many authors have reported different strategies for enhancing the success rate of lumbar fusion. Comparison of plain radiographs to more definitive assessments of fusion (e.g., operative exploration) has revealed that plain radiographs are a relatively poor diagnostic tool for detecting nonunion.33 Therefore, studies that rely on plain radiographs as an outcome measure for healed fusion would be considered to provide only low-quality data. Similarly, if the objective of a procedure is to provide good patient outcomes, and fusion rates do not necessarily correlate with good outcomes, then measuring fusion rates would not provide useful data about patient outcomes no matter what method was used to assess bone growth.
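The grading logic described in this section can be summarized in a short Python sketch. It assumes a three-point scale and a rule of one class lost per category of flaw; both are simplifications chosen for illustration, and the design labels are hypothetical. Real author groups weigh flaws by judgment rather than by counting them.

```python
# Starting class for each study design on an assumed three-point scale
# (1 = highest-quality evidence, 3 = lowest).
BASE_CLASS = {
    "rct": 1,
    "cohort": 2,
    "case_control": 2,
    "case_series": 3,
    "case_report": 3,
    "expert_opinion": 3,
}
LOWEST_CLASS = 3


def grade_evidence(design, design_flaw=False, selection_flaw=False, outcome_flaw=False):
    """Return the evidence class after downgrading for identified flaws.

    Assumption: each flagged category (study design, sample selection,
    outcome measures) costs one class, and a paper cannot fall below the
    lowest class.
    """
    downgrades = sum([design_flaw, selection_flaw, outcome_flaw])
    return min(BASE_CLASS[design] + downgrades, LOWEST_CLASS)


if __name__ == "__main__":
    # A randomized trial with heavy crossover is graded like a cohort study.
    print(grade_evidence("rct", design_flaw=True))
    # A trial relying on a poor outcome measure in a highly select population.
    print(grade_evidence("rct", selection_flaw=True, outcome_flaw=True))
```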

Creation of Recommendations

A common misconception is that once the literature review is completed, recommendations naturally follow. Although this is the case in some circumstances, it is usually the exception rather than the rule. Value judgments must be made, and here is where clinical expertise, broad representation, and appreciation of patient-centered outcomes are crucial. Sometimes two equally weighted studies have conflicting results—consider the Fritzell et al. and Brox et al. studies comparing surgical to nonsurgical treatment for low back pain.34,35 Both of these studies were randomized studies performed in a roughly similar patient population. The Fritzell et al. group found that surgery was more effective than nonsurgical care for improving patient outcomes, yet the Brox et al. group found that no significant differences occurred in the outcomes measures in which they were interested.34,35 Different guidelines groups have made different recommendations regarding the performance of lumbar fusion after reviewing the same literature. A surgical group, focusing on the results reported for back and leg pain, strongly recommended fusion as a treatment strategy in selected patients, whereas a largely medical group, focusing on fear avoidance behavior and work history, offered a much less enthusiastic recommendation.33,36 In other situations, there is simply a mishmash of low-quality data from which the author group needs to draw some conclusion. This would be the situation illustrated by the guidelines dedicated to the surgical management of head injury—a field in which it is ethically impossible (at least in North America and most of the developed world) to perform randomized studies.

Authors of guidelines documents use various means to achieve and describe the degree of consensus regarding a particular recommendation. Some formalized processes are based primarily on voting, in which a recommendation is provided along with an indication of the degree of consensus among the author group. In some more informal processes, the verbiage of the recommendation is altered to convey the degree of uncertainty of the author panel. Consider the 2002 recommendation for the use of steroids following cervical spinal cord injury: “Options: Treatment with methylprednisolone for either 24 or 48 hours is recommended as an option in the treatment of patients with acute spinal cord injuries that should be undertaken only with the knowledge that the evidence suggesting harmful side effects is more consistent than any suggestion of clinical benefit.”21

First of all, despite the fact that the data source for the recommendation was an RCT, a low-level (option) recommendation was made, reflecting the author group’s concerns regarding the study design and in particular the post hoc analysis of data.37 Second, the recommendation, although positive, is riddled with caveats specifically designed to cause the reader to carefully consider the enthusiasm that the author group had for the recommendation. Clearly, although these investigators wanted to preserve the use of steroids as an option for physicians managing spinal cord injury, they felt it important to emphasize that steroids were not necessarily required for optimal treatment of such patients.

The creation of recommendations requires clinical judgment. Therefore, those who do not have experience treating patients with the topical disorders are not well equipped to make such a judgment. Multiple “technology assessments” and other literature-based reviews created by freestanding centers for hire include recommendations that may not reflect reality. For example, the ECRI was hired by the Washington State Worker’s Compensation Board to review evidence about the performance of lumbar fusion. The firm created recommendations based on the previously discussed Brox et al. studies,35 encouraging the use of the nonsurgical treatment described by Brox et al. as an alternative to lumbar fusion. In response to this, the board issued a coverage decision essentially eliminating the performance of lumbar fusion in the worker’s compensation population. It was not until a group from organized spinal surgery, again through a coalition between the AANS/CNS spine section and NASS, pointed out that the population of patients treated in the Brox et al. studies did not match the vast majority of patients treated in Washington State and that the “Brox protocol” did not exist in North America that the decision was reconsidered.

1. Sackett D.L., Straus S.E., Richardson S., et al. Evidence based medicine, ed 2. Edinburgh: Churchill Livingstone; 2001.

2. Barratt A., Irwig L., Glasziou P., et al. Users’ guides to the medical literature: XVII. How to use guidelines and recommendations about screening. Evidence-Based Medicine Working Group. JAMA. 1999;281(21):2029-2034.

3. Bucher H.C., Guyatt G.H., Cook D.J., et al. Users’ guides to the medical literature: XIX. Applying clinical trial results. A. How to use an article measuring the effect of an intervention on surrogate end points. Evidence-Based Medicine Working Group. JAMA. 1999;282(8):771-778.

4. Dans A.L., Dans L.F., Guyatt G.H., Richardson S. Users’ guides to the medical literature: XIV. How to decide on the applicability of clinical trial results to your patient. Evidence-Based Medicine Working Group. JAMA. 1998;279(7):545-549.

5. Guyatt G.H., Rennie D. Users’ guides to the medical literature. JAMA. 1993;270(17):2096-2097.

6. Guyatt G.H., Sackett D.L., Cook D.J. Users’ guides to the medical literature. II. How to use an article about therapy or prevention. A. Are the results of the study valid? Evidence-Based Medicine Working Group. JAMA. 1993;270(21):2598-2601.

7. Guyatt G.H., Sackett D.L., Cook D.J. Users’ guides to the medical literature. II. How to use an article about therapy or prevention. B. What were the results and will they help me in caring for my patients? Evidence-Based Medicine Working Group. JAMA. 1994;271(1):59-63.

8. Guyatt G.H., Sackett D.L., Sinclair J.C., et al. Users’ guides to the medical literature. IX. A method for grading health care recommendations. Evidence-Based Medicine Working Group. JAMA. 1995;274(22):1800-1804.

9. Guyatt G.H., Sinclair J., Cook D.J., Glasziou P. Users’ guides to the medical literature: XVI. How to use a treatment recommendation. Evidence-Based Medicine Working Group and the Cochrane Applicability Methods Working Group. JAMA. 1999;281(19):1836-1843.

10. Hayward R.S., Wilson M.C., Tunis S.R., et al. Users’ guides to the medical literature. VIII. How to use clinical practice guidelines. A. Are the recommendations valid? The Evidence-Based Medicine Working Group. JAMA. 1995;274(7):570-574.

11. Jaeschke R., Guyatt G., Sackett D.L. Users’ guides to the medical literature. III. How to use an article about a diagnostic test. A. Are the results of the study valid? Evidence-Based Medicine Working Group. JAMA. 1994;271(5):389-391.

12. Jaeschke R., Guyatt G.H., Sackett D.L. Users’ guides to the medical literature. III. How to use an article about a diagnostic test. B. What are the results and will they help me in caring for my patients? The Evidence-Based Medicine Working Group. JAMA. 1994;271(9):703-707.

13. McAlister F.A., Straus S.E., Guyatt G.H., Haynes R.B. Users’ guides to the medical literature: XX. Integrating research evidence with the care of the individual patient. Evidence-Based Medicine Working Group. JAMA. 2000;283(21):2829-2836.

14. McGinn T.G., Guyatt G.H., Wyer P.C., et al. Users’ guides to the medical literature: XXII: how to use articles about clinical decision rules. Evidence-Based Medicine Working Group. JAMA. 2000;284(1):79-84.

15. Naylor C.D., Guyatt G.H. Users’ guides to the medical literature. XI. How to use an article about a clinical utilization review. Evidence-Based Medicine Working Group. JAMA. 1996;275(18):1435-1439.

16. Naylor C.D., Guyatt G.H. Users’ guides to the medical literature. X. How to use an article reporting variations in the outcomes of health services. The Evidence-Based Medicine Working Group. JAMA. 1996;275(7):554-558.

17. Oxman A.D., Cook D.J., Guyatt G.H. Users’ guides to the medical literature. VI. How to use an overview. Evidence-Based Medicine Working Group. JAMA. 1994;272(17):1367-1371.

18. Oxman A.D., Sackett D.L., Guyatt G.H. Users’ guides to the medical literature. I. How to get started. The Evidence-Based Medicine Working Group. JAMA. 1993;270(17):2093-2095.

19. Randolph A.G., Haynes R.B., Wyatt J.C., et al. Users’ Guides to the Medical Literature: XVIII. How to use an article evaluating the clinical impact of a computer-based clinical decision support system. JAMA. 1999;282(1):67-74.

20. Richardson W.S., Wilson M.C., Guyatt G.H., et al. Users’ guides to the medical literature: XV. How to use an article about disease probability for differential diagnosis. Evidence-Based Medicine Working Group. JAMA. 1999;281(13):1214-1219.

21. Hadley M., Walters B., Resnick D., et al. Guidelines for the management of acute cervical spine and spinal cord injuries. Clin Neurosurg. 2002;49:407-498.

22. Peul W.C., van den Hout W.B., Brand R., et al. Prolonged conservative care versus early surgery in patients with sciatica caused by lumbar disc herniation: two year results of a randomised controlled trial. BMJ. 2008;336(7657):1355-1358.

23. Weinstein J.N., Lurie J.D., Tosteson T.D., et al. Surgical versus nonoperative treatment for lumbar disc herniation: four-year results for the Spine Patient Outcomes Research Trial (SPORT). Spine (Phila Pa 1976). 2008;33(25):2789-2800.

24. Gibson J.N., Waddell G. Surgery for degenerative lumbar spondylosis: updated Cochrane Review. Spine (Phila Pa 1976). 2005;30(20):2312-2320.

25. Smith G.C., Pell J.P. Parachute use to prevent death and major trauma related to gravitational challenge: systematic review of randomised controlled trials. BMJ. 2003;327(7429):1459-1461.

26. Weinstein J.N., Lurie J.D., Tosteson T.D., et al. Surgical versus nonsurgical treatment for lumbar degenerative spondylolisthesis. N Engl J Med. 2007;356(22):2257-2270.

27. Weinstein J.N., Lurie J.D., Tosteson T.D., et al. Surgical compared with nonoperative treatment for lumbar degenerative spondylolisthesis: four-year results in the Spine Patient Outcomes Research Trial (SPORT) randomized and observational cohorts. J Bone Joint Surg [Am]. 2009;91(6):1295-1304.

28. Weinstein J.N., Tosteson T.D., Lurie J.D., et al. Surgical versus nonsurgical therapy for lumbar spinal stenosis. N Engl J Med. 2008;358(8):794-810.

29. Blumenthal S., McAfee P.C., Guyer R.D., et al. A prospective, randomized, multicenter Food and Drug Administration investigational device exemptions study of lumbar total disc replacement with the CHARITE artificial disc versus lumbar fusion: part I: evaluation of clinical outcomes. Spine (Phila Pa 1976). 2005;30(14):1565-1575. discussion E1387–E1591

30. Delamarter R.B., Fribourg D.M., Kanim L.E., Bae H. ProDisc artificial total lumbar disc replacement: introduction and early results from the United States clinical trial. Spine (Phila Pa 1976). 2003;28(20):S167-S175.

31. Wong D.A., Annesser B., Birney T., et al. Incidence of contraindications to total disc arthroplasty: a retrospective review of 100 consecutive fusion patients with a specific analysis of facet arthrosis. Spine J. 2007;7(1):5-11.

32. Resnick D.K., Watters W.C. Lumbar disc arthroplasty: a critical review. Clin Neurosurg. 2007;54:83-87.

33. Resnick D.K., Choudhri T.F., Dailey A.T., et al. Guidelines for the performance of fusion procedures for degenerative disease of the lumbar spine. Part 7: intractable low-back pain without stenosis or spondylolisthesis. J Neurosurg Spine. 2005;2(6):670-672.

34. Fritzell P., Hagg O., Wessberg P., Nordwall A. 2001 Volvo Award Winner in Clinical Studies: Lumbar fusion versus nonsurgical treatment for chronic low back pain: a multicenter randomized controlled trial from the Swedish Lumbar Spine Study Group. Spine (Phila Pa 1976). 2001;26(23):2521-2532. discussion 2532–2524

35. Brox J.I., Sorensen R., Friis A., et al. Randomized clinical trial of lumbar instrumented fusion and cognitive intervention and exercises in patients with chronic low back pain and disc degeneration. Spine (Phila Pa 1976). 2003;28(17):1913-1921.

36. Chou R., Baisden J., Carragee E.J., et al. Surgery for low back pain: a review of the evidence for an American Pain Society Clinical Practice Guideline. Spine (Phila Pa 1976). 2009;34(10):1094-1109.

37. Bracken M.B., Shepard M.J., Holford T.R., et al. Administration of methylprednisolone for 24 or 48 hours or tirilazad mesylate for 48 hours in the treatment of acute spinal cord injury. Results of the Third National Acute Spinal Cord Injury Randomized Controlled Trial. National Acute Spinal Cord Injury Study. JAMA. 1997;277(20):1597-1604.