CHAPTER 5 Radiation-Induced Meningiomas

HISTORICAL PERSPECTIVE

One evening in November 1895, Wilhelm Conrad Roentgen was surprised to notice an unexplained glow on a fluorescent screen in his laboratory during cathode-ray tube experiments. For weeks, Roentgen worked intently to explain the mystery, and on December 28 he submitted his report of a new form of energy,1 which he named x-rays after the mathematical symbol for an unknown quantity. During 1896, a tremendous number of articles about the new “Roentgen rays” appeared in newspapers and books, as well as in leading scientific and medical journals such as The Lancet, the British Medical Journal, Nature, and Science.2 Roentgen’s announcement, including the famous x-ray image of his wife’s hand, heralded one of the defining scientific discoveries of the modern era and earned him the first Nobel Prize in Physics in 1901. His was the first of more than 20 Nobel Prizes awarded in the 20th century for research related to radiation and radioactivity.

Marie Curie and her husband, Pierre Curie, isolated small quantities of the new elements polonium and radium from 100 kg of waste pitchblende and characterized their atomic properties. They refused suggestions to patent their isolation process, believing that research should be carried out for its own sake, with no profit motive, even though radium was soon being produced at the very high price of $100,000 per gram.3 For their work, the Curies shared the 1903 Nobel Prize in Physics with Henri Becquerel, who had discovered radioactivity in uranium salts in 1896.4 After her husband’s death, Marie Curie earned a second Nobel Prize, in Chemistry, in 1911 for her work in radioactivity; she conducted active research until her death in 1934 (Fig. 5-1).

Potential diagnostic and therapeutic applications for radiation were quickly proposed. Roentgen’s first images so excited physicians around the world with the new ray’s ability to reveal the inside of the human body that, within weeks of his discovery, x-rays were being used to diagnose bone fractures and locate embedded bullets.5 During a 1903 lecture and demonstration at the Royal Institution in London, Pierre Curie suggested that radium might be used to treat cancer and described a burn-like skin injury on his forearm resulting from 10 hours of exposure to a sample of radium. While he was presenting, his hands trembling from significant cumulative exposure to radioactive materials, Curie spilled a small amount of radium on the podium; 50 years later, some surfaces in the hall required cleaning after radioactivity was detected.4 Radiation was indeed in routine therapeutic use by the 1920s.

Adverse effects of x-rays in the form of slow-healing skin lesions on the hands of radiologists and technicians were noticed early, but the full extent of dangers from exposure to x-rays was poorly understood for decades. Patients, technicians, physicians, and researchers were repeatedly exposed to large doses of ionizing radiation with no shielding. Fluoroscopists calibrated their equipment by placing their hands directly in the x-ray beam; many lost fingers as a result (Fig. 5-2). There were also more serious problems. American inventor Thomas Edison, who designed the first commercially available fluoroscope, suffered damage to his eyesight, while his assistant, Clarence Dally, succumbed to metastatic radiation-induced skin cancer. Edison halted work with x-rays in his laboratory because of their ill effects.6

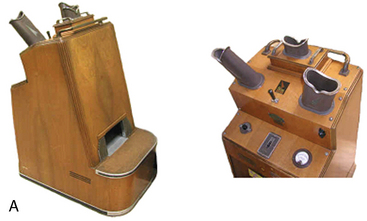

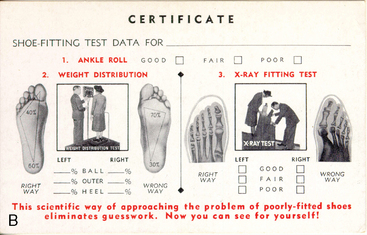

Radiation also captured the public imagination. Radium was widely thought to have curative powers: a radium potion that “bathed the stomach in sunshine” was thought to cure stomach cancer, and Radithor, a radium-containing medical drink, was sold over the counter until 1931. Belts to be strapped onto limbs, hearing aids, toothpaste, face cream, and hair tonic, all containing radium, were sold into the 1930s,7 and shoe-fitting fluoroscopy was offered as a customer service in many shoe stores until after 1950 (Fig. 5-3).8,9

Broader public awareness of the dangers of radiation exposure began to develop late in 1927, when journalist Walter Lippmann, then editor of the New York World newspaper, exposed the fate of young women employed by U.S. Radium Co. to paint watch dials with radioactive materials. The women, who had worked in large rooms with no shielding and were instructed to point their brushes with their lips, lost their teeth and developed serious bone decay in their mouths, necks, and backs. As the young women were dying, Lippmann railed against court delays that blocked settlements with the U.S. Radium company, which had known of the danger from chronic exposure but provided no protection for its workers.10 The case is now a classic, used to train journalists in the role of investigative reporting in societal change. Scholarly articles describing dangers from radiation also appeared before World War II, particularly in German and French medical journals.11,12 X-ray exposure guidelines were established in Germany in 1913,13 in the United Kingdom in 1921,14 and in the United States during the 1930s.13 Protection standards have become steadily more rigorous in the decades since these early limits were established.15

Important additional evidence of risk appeared in 1927, when Hermann Joseph Muller, a founding figure in genetic research, published evidence of a 150-fold increase in the natural mutation rate of fruit flies (Drosophila melanogaster) exposed to x-rays. Muller showed that x-rays broke genes apart and rearranged them.16 He was awarded the Nobel Prize in Physiology or Medicine for this work, but only in 1946, after concern over the genetic consequences of exposure to low levels of radiation became widespread following the devastation wrought by the atomic bombings of Hiroshima and Nagasaki in 1945.17

Research to define the optimal parameters of radiation therapy for brain tumors and to assess treatment risk began in the years after World War I. In 1938, Davidoff and colleagues11 documented profound histologic and morphologic changes, especially marked in glial and nerve tissues, in the brains and spinal cords of monkeys after exposure to 10 to 50 grays (Gy) in a single fraction or 48 to 72 Gy in two fractions. Davidoff concluded that the intensity of change was determined primarily by x-ray dose, with time from radiation exposure to autopsy contributing to a lesser extent. Wachowski12 and others18,19 also showed that exposure to ionizing radiation had degenerative effects on neural tissue.

In spite of this work, and the mounting evidence of wide-ranging risks from exposure to ionizing radiation, neural tissue was long considered resistant to direct damage. The authors of a case report published in 1953, describing a patient who had received superficial x-ray therapy for a basal cell carcinoma, stated, “Brain and neural tissue are usually resistant to direct damage by x-ray radiation.”20 The patient had sustained a skin dose of approximately 25 Gy, and a dose to the temporal lobe of 12.87 Gy at a depth of 2 cm. The authors considered her development of an “underlying, expanding intracranial nontumorous mass” to be a rare event, and not the result of a radiation exposure.

Radiation is now known to induce a wide range of changes in the nervous system and surrounding structures, including visual deterioration, hearing loss, hormonal disturbances, vasculopathy, brain and bone necrosis, atrophy, demyelination, calcification, fatty replacement of bone marrow, and induction of central nervous system (CNS) neoplasms.21 Many of these changes have been shown to be dose dependent.14,22–26

In the more than 60 years since the atomic bombings, extensive research among survivors and other populations exposed to ionizing radiation has produced evidence supporting the hypothesis of a linear dose–response relationship between exposure to ionizing radiation and the development of solid cancer in humans. Excess lifetime risks of disease and death for all solid cancers and leukemia have been estimated across a wide range of doses, from 0.005 to more than 2 sieverts (Sv). A statistically significant dose–response relationship has also been shown for heart disease, stroke, and diseases of the digestive, respiratory, and hematopoietic systems, although noncancer risks at very low doses remain uncertain.17

Although the mechanisms of carcinogenesis after exposure to radiation are not yet fully understood, research has elucidated some of the diverse responses in complex biologic systems. Ionizing radiation overcomes the binding energy of electrons orbiting atoms and molecules, resulting in a variety of directly and indirectly induced DNA lesions, including DNA base alterations, DNA–DNA and DNA–protein crosslinks, and single- and double-strand DNA breaks.27 Cellular mechanisms can repair some radiation-induced damage; however, some damage may overwhelm cells’ intrinsic capacity for repair. Genetic factors may also modify cellular repair mechanisms and increase susceptibility to tumor development after radiation in some individuals.28 Occasional misrepair can result in point mutations, chromosomal translocations, and gene fusions, all with the potential to induce neoplasms. Radiation may also produce more subtle modifications that alter gene expression, affect the intracellular oxidative state, lead to the formation of free radicals, influence signal transduction systems and transcription factor networks, and directly or indirectly affect metabolic pathways.29

RADIATION-INDUCED MENINGIOMAS

After publication of small case series describing a suspected link between meningioma and exposure to ionizing radiation,30–32 the causal association between irradiation and meningioma was recognized in a 1974 analytical epidemiologic study by Modan and colleagues.33 This cohort study showed elevated incidence of meningiomas and other tumors of the head and neck in individuals irradiated as children for tinea capitis, compared with matched nonirradiated controls drawn from the general population and from siblings. Radiation-induced meningioma (RIM) is now considered the most common brain neoplasm known to be caused by exposure to ionizing radiation.34–36

In 1991, Harrison and colleagues35 categorized RIM according to level of exposure. Doses of less than 10 Gy, such as those used for treatment of tinea capitis between 1909 and 1960, were defined as low; doses of 10 to 20 Gy, typical of irradiation for head and neck tumors or vascular nevi, as intermediate; and doses greater than 20 Gy, used for treatment of primary or secondary brain tumors, as high. Harrison’s categorization is frequently cited in the neurosurgical literature, although others have considered exposure levels greater than 10 Gy to be high.37,38 By contrast, in its seventh report on the Biological Effects of Ionizing Radiation (BEIR VII), the National Academy of Sciences defined exposure of 0.1 Gy or lower as low dose, greater than 0.1 to 1 Gy as medium dose, and 1 Gy or higher as high dose.17
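Because the same dose can fall into different categories depending on which scheme is used, the two sets of cut points can be compared side by side. The following is a minimal Python sketch, not a published tool; the function names are hypothetical, and the handling of doses that fall exactly on a boundary (10 Gy, 20 Gy, 0.1 Gy, 1 Gy) is an assumption, since the definitions summarized above overlap at 1 Gy and do not always state how edge values are assigned.

```python
# Hypothetical helpers that label a dose (in Gy) under the two schemes described above.
# Assumption: boundary values are assigned as noted in each docstring.


def harrison_category(dose_gy: float) -> str:
    """Harrison et al. (1991): low < 10 Gy, intermediate 10-20 Gy, high > 20 Gy."""
    if dose_gy < 0:
        raise ValueError("dose must be non-negative")
    if dose_gy < 10:
        return "low"
    if dose_gy <= 20:
        return "intermediate"
    return "high"


def beir_vii_category(dose_gy: float) -> str:
    """BEIR VII as summarized above: low <= 0.1 Gy, medium > 0.1 to < 1 Gy, high >= 1 Gy."""
    if dose_gy < 0:
        raise ValueError("dose must be non-negative")
    if dose_gy <= 0.1:
        return "low"
    if dose_gy < 1:
        return "medium"
    return "high"


# Compare a few doses mentioned in this chapter under both schemes
for dose in (0.05, 1.5, 15.0, 65.0):
    print(f"{dose:>5} Gy  Harrison: {harrison_category(dose):<12}  BEIR VII: {beir_vii_category(dose)}")
```

Under these assumptions, a brain dose of 1.5 Gy, similar to the mean dose reported for the Israeli tinea capitis cohort, is "low" by Harrison's criteria but "high" by BEIR VII criteria, which is worth keeping in mind when dose categories are compared across studies.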

RIM After Exposure to High Doses of Therapeutic Radiation

Mann and colleagues,32 writing in 1953, are generally credited with the first report of a meningioma ascribed to previous irradiation. The patient was a 4-year-old girl who had been treated as an infant with 65 Gy for an optic nerve glioma.

Numerous case reports and small patient series describing meningiomas that developed after high-dose radiation therapy for primary brain tumors have been published since that initial report. Several authors have summarized their own experience together with reviews of up to 126 cases reported in the literature.35–41 Radiation doses in these series ranged from 22 to 87 Gy. The majority of high-dose RIM patients were irradiated as children, adolescents, or young adults; however, meningiomas secondary to irradiation for primary brain neoplasms in middle-aged and older adults have also been described.37,39,41

Latency periods from irradiation to meningioma detection ranging from 2 years36,42 to 59 years39 and even 63 years43 have been reported, with average latency between 10 and 20 years in most series. Latency is shorter with increasing radiation dose and younger age at irradiation.35,37,40,44 Descriptive case series such as those cited above may understate the true mean latency, because they include only tumors diagnosed within the period of observation. A more accurate estimate would require a cohort study of a large population of patients irradiated for primary brain tumors, followed for the longest possible period.

In 2006, Neglia and colleagues23 published a multicenter nested case-control study of new primary CNS neoplasms among 14,361 survivors of childhood cancer in the Childhood Cancer Survivor Study (CCSS) cohort. Individuals were eligible for the cohort if they had been diagnosed and treated between January 1, 1970, and December 31, 1986; had received a primary diagnosis of leukemia, CNS cancer, Hodgkin lymphoma, non-Hodgkin lymphoma, kidney tumor, neuroblastoma, soft tissue sarcoma, or bone sarcoma; were younger than 21 years at diagnosis; and had survived for at least 5 years. Data analysis for second primary CNS neoplasms closed on December 31, 2001. The study design included analysis of exposure to 28 specific chemotherapeutic agents, surgical procedures, imaging reports, and site-specific radiotherapy dosimetry. Second primary CNS neoplasms, including 40 gliomas and 66 meningiomas, were diagnosed in 116 case patients of the CCSS cohort; three meningiomas were malignant at first diagnosis. Each case patient was matched with four cohort members who had not developed a CNS neoplasm, by age at primary cancer, gender, and time since original cancer diagnosis. New primary CNS tumors were diagnosed 5 to 28 years after the original primary tumor diagnosis. Gliomas were diagnosed at a median of 9 years after diagnosis of the primary cancer, with 52.5% diagnosed within 5 years from first cancer diagnosis. Meningiomas showed a much longer latency than gliomas, with a median of 17 years from first cancer diagnosis and 71.2% diagnosed 15 years or more later.23 Follow-up ranged from 15 to 31 years; therefore, no data are available for latency beyond 31 years.

Exposure to therapeutic radiation delivered for treatment of the original cancer was the most important risk factor for occurrence of a secondary CNS neoplasm. Any exposure to radiation therapy was associated with increased risk of glioma (odds ratio [OR]: 6.78; 95% confidence interval [CI]: 1.54–29.7) and meningioma (OR: 9.94; 95% CI: 2.17–45.6).23 The CCSS analysis is unique among assessments of high-dose RIM because of the large number of case patients, the detailed review of medical records for chemotherapy and radiotherapy dosimetry, the length of follow-up (15–31 years), and the size and design of the study. Taken together, the findings provide compelling evidence of increased risk of secondary CNS neoplasms, including meningioma, after exposure to therapeutic radiation for a primary cancer during childhood.

The incidence of high-dose RIM is expected to increase as a larger proportion of patients who receive radiation therapy for primary tumors survive for extended periods.37,44,45 Continued close follow-up is warranted after high-dose cranial irradiation, particularly when it is administered to children.23,37,46,47

RIM After Exposure to Low to Moderate Doses of Radiation

Increased risk of meningioma has been reported in individuals who were irradiated for tinea capitis during childhood,30,31,33,48–54 irradiated for the treatment of skin hemangioma during infancy,22 exposed to radiation after the explosions of atomic bombs in Hiroshima and Nagasaki,24,25,55–57 and exposed to a series of dental radiographic studies.58–61

RIM After Irradiation for Tinea Capitis

From 1909 to 1960, the international standard for treatment of tinea capitis was scalp irradiation using the Kienböck–Adamson technique, which was designed to irradiate the entire scalp uniformly through five overlapping treatment fields.62 In phantom dosimetry studies, careful use of the technique resulted in radiation doses of 5 to 8 Gy to the scalp, 1.4 to 1.5 Gy to the surface of the brain, and 0.7 Gy to the skull base.63,64 Tinea capitis was epidemic in some areas (Fig. 5-4), and irradiation was considered the standard of care in such situations until the introduction of griseofulvin circa 1960.65–68

The first evidence of negative consequences after treatment using this protocol appeared in 1929 with a report of somnolence lasting 4 to 14 days in 30 of approximately 1100 children (ages 5–12 years) treated for tinea capitis.69 In 1932 and 1935, new reports describing children irradiated for tinea capitis added atrophic and telangiectatic changes in the scalp, epilepsy, hemiparesis, emotional changes, and dilatation of the ventricles to the list of symptoms and complaints.70,71

Evidence of long-term side effects grew in 1966 with a report from New York University Medical Center comparing 1,908 tinea capitis patients treated with irradiation between 1940 and 1958 with 1,801 patients who were not irradiated. The irradiated population had nine neoplasms (three cases of leukemia and six solid tumors, including two brain tumors), compared with one case of Hodgkin lymphoma in the nonirradiated group.63 A later report on these patients described additional effects, including increased rates of psychiatric hospitalization, long-term electroencephalographic changes, and permanent functional changes in the nervous system.64

Additional reports of RIM from Israel, the United States, Europe, and the former Soviet Union followed.30,31,35,48,49,54,72 In 1983, Soffer and colleagues54 described distinctive histopathological characteristics in a series of 42 Israeli RIM patients; these findings are discussed in detail below. From 1936 to 1960, about 20,000 Jewish children aged 1 to 15 years (mean 7 years) and an unknown number of non-Jewish children were irradiated in Israel for treatment of tinea capitis, and an estimated 50,000 additional children were irradiated abroad before immigration (personal communication from S. Sadetzki, April 15, 2008).

In 1974, Modan and colleagues33 published the first results of the Israeli tinea capitis cohort study, showing that irradiation was associated with significantly increased risk of meningioma and other benign and malignant head and neck tumors in this population. The cohort includes 10,834 irradiated individuals and two nonirradiated control groups, one drawn from the general population (n = 10,834) and the second from untreated siblings (n = 5392). The nonexposed groups were matched to the irradiated group by age, gender, country of origin, and immigration period.

Research using the Israeli cohort continues. In 1988, Ron and colleagues52 showed a 9.5-fold increase in meningioma incidence following a mean radiation dose to the brain of 1.5 Gy (range: 1.0–6.0 Gy). More recently, after a median follow-up of 40 years, Sadetzki and colleagues26 reported an excess relative risk per Gy (ERR/Gy) of 4.63 (95% CI: 2.43–9.12) for benign meningiomas and 1.98 (95% CI: 0.73–4.69) for malignant brain tumors. The risk of both benign and malignant tumors was positively associated with dose. For meningioma, a linear-quadratic model fitted the data better than a linear model, but the two models were very similar up to 2.6 Gy, a dose range that encompasses 95% of observations. The ERR of meningioma reached 18.82 (95% CI: 5.45–32.19) at exposures greater than 2.6 Gy. While the ERR/Gy for malignant brain tumors decreased with increasing age at irradiation, no trend with age was observed for benign meningiomas.
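An ERR reported per unit dose can be turned into an approximate relative risk at a given dose under a simple linear model, in which ERR(D) = β × D and the relative risk is RR(D) = 1 + ERR(D). The sketch below is illustrative arithmetic only: it assumes a purely linear dose response using the benign meningioma point estimate of 4.63 per Gy quoted above, ignores the confidence interval and the better-fitting linear-quadratic model, and restricts itself to doses up to 2.6 Gy, where the two models were reported to be similar. Its outputs are not results reported by the study, and the function names are hypothetical.

```python
# Illustrative only: assumes a purely linear excess relative risk model, ERR(D) = beta * D.
BETA_MENINGIOMA_PER_GY = 4.63  # benign meningioma ERR/Gy point estimate quoted in the text
LINEAR_RANGE_LIMIT_GY = 2.6    # linear and linear-quadratic fits were reported similar up to here


def excess_relative_risk(dose_gy: float, err_per_gy: float) -> float:
    """Excess relative risk at dose D under a linear model."""
    if not 0 <= dose_gy <= LINEAR_RANGE_LIMIT_GY:
        raise ValueError("dose outside the range where a linear model was reported adequate")
    return err_per_gy * dose_gy


def relative_risk(dose_gy: float, err_per_gy: float) -> float:
    """Risk relative to unexposed persons: RR = 1 + ERR."""
    return 1.0 + excess_relative_risk(dose_gy, err_per_gy)


for dose in (0.5, 1.0, 1.5, 2.5):
    err = excess_relative_risk(dose, BETA_MENINGIOMA_PER_GY)
    rr = relative_risk(dose, BETA_MENINGIOMA_PER_GY)
    print(f"{dose:.1f} Gy: ERR = {err:.2f}, RR = {rr:.2f}")
```

At 1.0 Gy, for example, the sketch gives an ERR of 4.63 and an RR of 5.63. The 9.5-fold increase reported by Ron and colleagues came from a different analysis and would not be expected to match these figures.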

For both malignant and benign brain tumors, risk remained elevated 30 or more years after exposure. The great majority (74.6%) of benign meningiomas were diagnosed 30 years or more after exposure, compared with only 54.8% of malignant brain tumors.26 In a separate descriptive study comparing demographic and clinical characteristics of 253 RIM and 41 non-RIM cases, Sadetzki and colleagues53 found that RIM patients had a lower age at diagnosis (P = .0001), a higher prevalence of calvarial tumors (P = .011), higher rates of tumor multiplicity (P < .05), and higher rates of recurrence (not statistically significant in this study).

RIM After Irradiation for Skin Hemangioma in Infancy

Karlsson and colleagues22 performed a pooled analysis of the incidence of intracranial tumors in two Swedish cohorts (the Gothenburg and Stockholm cohorts) comprising 26,949 individuals irradiated for hemangioma during infancy for whom follow-up data were available. Treatment was administered between 1920 and 1965, with some differences in treatment dates between the two cohorts. In individuals who developed meningiomas, the mean dose to the brain was 0.031 Gy (range 0–2.26 Gy) and the mean age at exposure was 7 months (range 2–30 months). In individuals who developed gliomas, the mean dose to the brain was 1.02 Gy (range 0–10.0 Gy) and the mean age at exposure was 4 months (range 1–15 months). Both cohorts were followed for intracranial tumors reported in the Swedish Tumor Registry from 1958 to 1993; when the study closed, the mean time since exposure was 33 years.

RIM in Survivors of the 1945 Atomic Bombing of Hiroshima and Nagasaki

An estimated 120,000 individuals survived atomic bombings in Hiroshima and Nagasaki in 1945, and slightly less than half of this population was still alive in 2000.17

The Life Span Study (LSS),73 an ongoing cohort study, includes data for approximately 86,500 survivors who were within 2.5 km of a hypocenter, as well as a sample of survivors who were 3 to 10 km from ground zero and thus had only negligible exposure to radiation. The LSS continues to serve as a major source of information for experts evaluating health risks from exposure to ionizing radiation. The population is large; was not selected on the basis of disease or occupation; has been followed for a long period (from 1950 onward); and includes both sexes and all ages at exposure, allowing many comparisons of risk by these factors. Extensive data on illness and mortality are available for survivors who remained in Japan. Survivors received whole-body exposure, and individual doses have been reasonably well characterized, taking into account differences in the biological effectiveness of radiation from the two bombs, distance from the hypocenter, and specific location at the time of the explosion. For example, exposure for individuals who were inside a typical Japanese home was reduced by nearly 50%.17

Within 10 years of the bombings, an excess incidence of leukemia was found in survivors and linked to radiation exposure; however, elevated risk of solid tumors, and a direct relationship between solid tumor incidence and proximity to the hypocenter, were not shown until 1960.74 A Japanese review of postmortem studies of primary brain tumors in Nagasaki survivors for the years 1946–1977 found only five cases of meningioma, and the authors concluded that there was no evidence of increased meningioma incidence in this population 32 years after the bombings.75 The first report demonstrating excess risk of meningioma in atomic bomb survivors was not published until 1994, when Shibata and colleagues24 reported a significantly higher meningioma incidence among Nagasaki survivors beginning in 1981, 36 years after the bombings, and increasing annually.

This report was confirmed by further research in Nagasaki55 and in Hiroshima.56,57 In 1996, Sadamori and colleagues55 reported meningioma latency ranging from 36 to 47 years (mean 42.5 years) in Nagasaki survivors. Shintani and colleagues56 reported a meningioma incidence of 3.0 per 100,000 persons per year in a population that was distant from the hypocenter and thus received very low levels of exposure, versus 6.3, 7.6, and 20.0 meningiomas per 100,000 persons per year in survivors who had been 1.5 to 2.0, 1.0 to 1.5, and less than 1.0 km from the hypocenter, respectively. Overall incidence in survivors, as well as incidence in each of the three groups, differed significantly from incidence in the population with very low exposure. Because dose increased with proximity to the hypocenter, the authors interpreted this gradient as evidence of dose dependence.
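The incidence figures reported by Shintani and colleagues can be compared directly as rate ratios (incidence in each distance band divided by incidence in the very-low-exposure population) and as excess rates (the difference between the two). The sketch below simply performs that arithmetic on the published rates; it does not reproduce the authors' statistical testing, and the variable and function names are illustrative.

```python
# Meningioma incidence per 100,000 persons per year (Shintani and colleagues, as quoted in the text)
REFERENCE_RATE = 3.0  # population distant from the hypocenter (very low exposure)

RATES_BY_DISTANCE = {
    "1.5-2.0 km": 6.3,
    "1.0-1.5 km": 7.6,
    "<1.0 km": 20.0,
}


def rate_ratio(rate: float, reference: float) -> float:
    """Incidence in an exposed group relative to the reference group."""
    return rate / reference


def excess_rate(rate: float, reference: float) -> float:
    """Additional cases per 100,000 persons per year above the reference rate."""
    return rate - reference


for band, rate in RATES_BY_DISTANCE.items():
    print(
        f"{band:>10}: rate ratio {rate_ratio(rate, REFERENCE_RATE):.1f}, "
        f"excess {excess_rate(rate, REFERENCE_RATE):.1f} per 100,000 per year"
    )
```

The ratios rise from about 2 in the most distant band to nearly 7 within 1.0 km of the hypocenter, which is the gradient the authors interpreted as dose dependence.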

Preston and colleagues25 studied the incidence of CNS tumors between 1958 and 1995 among survivors from both cities in relation to radiation dose measured in sieverts (Sv). The authors found a statistically significant, linear dose–response relationship for all CNS tumors combined (ERR/Sv: 1.2; 95% CI: 0.6 to 2.1) and concluded that the incidence of tumors of the nervous system increases with exposure to even moderate doses (<1 Sv) of ionizing radiation. Risk of glioma was increased, although not to a statistically significant level (ERR/Sv: 0.6; 95% CI: –0.2 to 2.0). A statistically nonsignificant increase in risk was also seen for meningioma (ERR/Sv: 0.06; 95% CI: –0.01 to 1.8). When risk of meningioma was evaluated separately for children (defined as those younger than 20 years at exposure) and adults, the authors found higher risk for children (ERR/Sv: 1.3; 95% CI: –0.05 to 4.3) than for adults (ERR/Sv: 0.4; 95% CI: not smaller than –0.1 to 1.7), although neither relationship reached statistical significance. Children represented a relatively small part of the survivor population, and the aggregation of all survivors exposed before age 20 into a single group limits understanding of risk for exposure at younger ages (e.g., 10 or 12 years and younger), when the brain is thought to be more susceptible to damage from ionizing radiation. Survivors in the two cities were exposed to differing combinations of gamma rays and neutrons, and the biological effectiveness of ionizing radiation depends on the type of radiation. For this reason, whereas most RIM studies report dose in grays (Gy), a measure of absorbed dose, doses in the LSS are expressed in sieverts (Sv), calculated as the absorbed dose multiplied by a weighting factor that depends on the type of radiation.
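The relationship between the two units can be written as a weighted sum over radiation types. In the notation below, which is standard in radiation protection but is not taken from the LSS reports, D_R is the absorbed dose (in Gy) from radiation type R, w_R is the corresponding radiation weighting factor (1 for gamma rays and x-rays), and H is the weighted dose in Sv. The numbers in the worked example are hypothetical, and the neutron weighting factor of 10 is an assumed value chosen for arithmetic convenience; actual neutron weighting depends on neutron energy and on the dosimetry system used.

$$
H = \sum_{R} w_R \, D_R
$$

$$
H = (1 \times 0.90\ \text{Gy}) + (10 \times 0.01\ \text{Gy}) = 0.90 + 0.10 = 1.00\ \text{Sv}
$$

In this hypothetical case, a neutron component contributing only about 1% of the absorbed dose accounts for 10% of the weighted dose, which illustrates why doses in the two cities, with their differing mixtures of gamma rays and neutrons, are compared in sieverts rather than grays.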

RIM Due to Dental X-ray Examination

In 1953, Nolan and Patterson76,77 published evidence that a single full-mouth dental x-ray series delivered doses in excess of 75 rads (0.75 Gy) to the lateral surfaces of the face and neck. Nolan also observed that lines of radiation converged at the meninges, producing points of high ionization, and reported blood changes in nine patients exposed to 65 to 315 rads (0.65–3.15 Gy). Nolan’s work anticipated a 1980 case-control study of women in Los Angeles County diagnosed with meningioma, led by Preston-Martin and colleagues,59 which showed an association between early exposure (age <20 years) to dental x-rays and meningioma. Tumors were located in the tentorial or infratentorial region in 68% of patients with a history of more than 10 full-mouth exposures. Risk was elevated in those radiographed as children or teenagers and in those imaged before 1945, when dental x-ray doses were higher.

Case-control studies in Sweden61 and the United States,58 published in 1998 and 2004, respectively, showed that dental radiographs performed after age 25 are also associated with increased risk of meningioma. However, the extent to which dental x-ray exposure is associated with increased incidence of meningioma remains controversial.

Before 1960, the dose from a full-mouth series was often nearly 2 Gy. Today the dose from dental studies has been drastically reduced. The brain is exposed to about 37 to 55 µGy in a panoramic study performed on standard equipment78 and approximately 10 to 86 µGy with digital equipment,79 although doses vary widely depending on equipment and imaging protocol. Panoramic studies deliver the highest dose of any radiographic study of the mouth. The dose to the frontal lobe in children who undergo digital cephalometric radiography for orthodontic work can range from 2.9 to 30.4 µSv.80 Doses this low (86 µGy = 0.000086 Gy) are comparable to exposure from naturally occurring background radiation in day-to-day life.
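The unit conversions used in this section (rads to grays and micrograys to grays) and the scale of the reduction in dental doses can be checked with simple arithmetic. The sketch below is illustrative: it compares the pre-1960 full-mouth figure of nearly 2 Gy with the modern panoramic brain-dose ranges quoted above, even though the older figure describes a full-mouth series rather than brain dose from a single panoramic study, so the fold differences are rough orders of magnitude rather than like-for-like comparisons. The names are hypothetical.

```python
RAD_PER_GY = 100              # 1 Gy = 100 rad
MICROGRAY_PER_GY = 1_000_000  # 1 Gy = 1,000,000 µGy


def rad_to_gy(rad: float) -> float:
    """Convert a dose in rads to grays."""
    return rad / RAD_PER_GY


def microgray_to_gy(ugy: float) -> float:
    """Convert a dose in micrograys to grays."""
    return ugy / MICROGRAY_PER_GY


PRE_1960_FULL_MOUTH_GY = 2.0  # "often nearly 2 Gy" (approximate figure from the text)
MODERN_PANORAMIC_BRAIN_UGY = {  # ranges quoted in the text
    "standard equipment": (37, 55),
    "digital equipment": (10, 86),
}

print(f"75 rad = {rad_to_gy(75)} Gy; 315 rad = {rad_to_gy(315)} Gy")
for equipment, (low, high) in MODERN_PANORAMIC_BRAIN_UGY.items():
    low_gy, high_gy = microgray_to_gy(low), microgray_to_gy(high)
    fold = PRE_1960_FULL_MOUTH_GY / high_gy
    print(f"{equipment}: {low_gy:.6f} to {high_gy:.6f} Gy; "
          f"2 Gy is roughly {fold:,.0f} times the upper value")
```

Even against the highest modern value quoted (86 µGy), the older figure is more than 20,000 times larger.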

Clinical Presentation

As with sporadic meningioma (SM), RIM is characterized by a strong female preponderance, with a female:male incidence ratio approaching 2:1 in most studies.25,36,37,44,53,61 Clinical hallmarks of previous radiation treatment include scanty hair or alopecia and scalp atrophy.35,50,72,81 Based on their experience treating a large number of RIM patients, the authors also have the impression that microcephaly occurs with some frequency in these patients, most likely as a result of premature closure of the skull sutures after irradiation during early childhood.

Meningiomas secondary to irradiation typically arise within the irradiated field. In patients treated for tinea capitis, the majority of tumors are calvarial.35,53,54,72 In patients treated with high-dose irradiation for primary brain tumors, 4% to 19% of RIMs are found in the cranial base; however, Ghim and colleagues40 found a high proportion of calvarial tumors in pediatric high-dose RIM patients.

SMs generally arise in the fifth or sixth decade of life. In comparison, mean age at presentation is 45 to 58 years in patients treated with low-dose irradiation35,53–55,72,82 and typically 29 to 40 years in those exposed to high-dose treatments,35,36,38,39,42,45,83 although both older23,37,43 and younger23,40 high-dose RIM patients have been reported. Latency varies with radiation dose and age at treatment. The longest average latency for low-dose RIM, 42.5 years, was reported among survivors in Nagasaki; in patients with RIM after treatment for tinea capitis, average latency was reported as 36.3 years.53 Mean latency was shorter in a series of 15 pediatric RIM patients who had received high-dose irradiation for primary brain tumors at an average age of 2.5 years.40 In these patients, meningiomas were diagnosed an average of 10.3 years after initial treatment (range 5–15.5 years), at a mean age of 13 years. Strojan and colleagues38 reported a mean latency of 18.7 years in high-dose RIM patients in a case series and review of the literature, and a latency of only 14 months was reported in a 14-year-old boy with a history of irradiation for a tumor in the posterior fossa.84 Mack and colleagues44 found a significant correlation between age at irradiation, dose, and latency, and others have also reported that younger age at initial treatment is associated with shorter latency for RIM.37,38

Multiplicity was reported in 15.8% of RIM patients versus 2.4% of those with SM in the Israeli tinea capitis cohort (Fig. 5-5).53 More aggressive clinical behavior, with high rates of recurrence after surgery and radiation, is characteristic of RIM.35,36,39,49,53,54,72,85 Soffer and colleagues54 found an 18.7% recurrence rate in RIM patients, many with multiple recurrences, compared with a 3% rate in a control group of 84 SM patients. Recurrence after complete removal occurred in a higher proportion of RIM patients than of controls, and RIM patients also tended to have earlier recurrences.

Histologic subtypes in RIM resemble those in SM, with meningotheliomatous, syncytial, transitional, and fibroblastic being the most common;53,54,85 however, certain histologic features are distinctive. Soffer and colleagues54 found more frequent hypercellularity, nuclear pleomorphism, increased mitotic rate, focal necrosis, bone invasion, and tumor infiltration of the brain in a series of 42 low-dose RIM patients, compared with histological findings in 84 SMs. Rubinstein and colleagues72 similarly reported a high degree of cellularity; pleomorphic nuclei with great variation in nuclear size, shape, and chromatin density; numerous multinucleated and giant cells; and nuclei with vacuolated inclusions. They also noted frequent mitoses, psammoma bodies, foam cells, and thickened blood vessels that did not stain for amyloid. Other authors have reported higher proliferation indices86 and higher rates of atypia or malignancy.35–38,40,44,45,54,85,86

Management of Radiation-Induced Meningiomas

Surgery

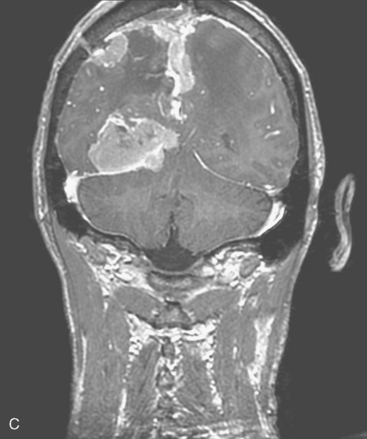

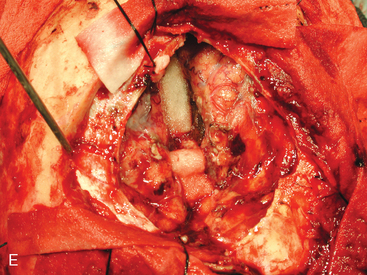

In many RIM patients, scalp atrophy is the first consideration in planning the surgical approach; tumor multiplicity and high recurrence rates must also be taken into account (Fig. 5-6 and Case Report). Depending on the radiation dose received, the scalp may be slightly or severely atrophic and poorly vascularized. In some patients the galeal/skin flap may be only 1.5 to 2 mm thick, so careful planning and precise technique are needed to avoid cerebrospinal fluid (CSF) leak and skin flap dehiscence. The risk of these complications increases in patients with an atrophic scalp who require multiple craniotomies, as a large proportion of RIM patients do. When scalp atrophy is significant, it may be appropriate to include a plastic surgeon on the team.

Wide resection margins, always important in meningioma surgery,87–90 are especially vital given the aggressive nature and higher recurrence rates of RIM.39 Bone invasion has been linked to higher recurrence rates, so involved bone should be removed radically.35,54,72,91,92 When we suspect or find clear evidence of bone flap invasion, we either autoclave the flap or replace it with an acrylic cranioplasty. When tumors involve the skull base or a major dural venous sinus, wide resection margins may be impossible to achieve; recurrence rates are higher in these patients,39,90,93 and adjunct radiosurgery should be considered.82,94–97 Wound closure also often requires special attention because of the fragile skin. Multilayer closure may not be feasible, especially over convexity meningiomas, where the atrophic scalp may not tolerate additional sutures. In patients with a very thin scalp, the authors close the skin and galea aponeurotica in a single layer of 3-0 nylon sutures, with or without locking, and avoid Vicryl. Meticulous suturing is required to achieve a single watertight layer that prevents CSF leak; however, stitches placed too closely can cause skin necrosis, with potentially serious sequelae. Staples are not suitable in these patients because they give poor approximation without a watertight closure and can easily damage a fragile atrophic scalp.

CASE REPORT

Two weeks after radiosurgery, the patient presented with wound dehiscence in the irradiated right frontal scalp, exposing the skull and the Craniofix device (Fig. 5-6A). The CSF leak was managed with lumbar drainage, and the Craniofix was removed; however, the scalp wound became chronically infected and failed to heal. In July 2006, the plastic surgery department performed a delayed rotational flap to cover the skin defect (Fig. 5-6B). The underlying bacterial infection was not controlled despite local and intravenous antibiotic treatment, and the rotational flap developed necrotic foci and was removed. In September 2006, a large right latissimus dorsi muscle free flap was transferred to the surgical site. The underlying infection resolved, and the free flap remained well vascularized and viable (Fig. 5-6C).

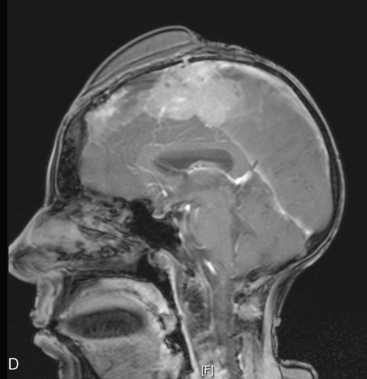

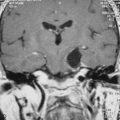

In late 2007, the patient presented with neurologic deterioration due to recurrence of the previously operated and irradiated bifrontal parasagittal meningioma (Fig. 5-6D). Small bilateral frontal convexity meningiomas were stable, and a new small left anterior clinoid meningioma was noted. In January 2008, gross total resection of the parasagittal meningioma was again performed, and three additional small convexity tumors within the exposure were removed (Fig. 5-6E). Histopathology of the primary lesion showed a WHO grade II meningioma with focal brain invasion and an MIB-1 labeling index of 15%. The postoperative course was uneventful, and the free flap healed well (Fig. 5-6F).

The patient remains under close follow-up.

Stereotactic Radiosurgery and Fractionated Stereotactic Radiotherapy

Adjuvant or primary stereotactic radiosurgery (SRS) or fractionated stereotactic radiotherapy (FSR) may be necessary in RIM patients when radical excision is not possible, which is not uncommon, and in elderly or infirm patients and others who are not candidates for surgery. When records of the original treatment are available, treatment planning, dose prescription, and delivery should take careful account of the original doses and treatment fields. Adjuvant conventional conformal irradiation is generally recommended after surgery and/or radiosurgery to address tumor cells that may remain viable in the brain parenchyma and along the dura, especially in patients with WHO grade II or grade III meningiomas.87,88,92 Conventional radiotherapy, however, is not an option for some RIM patients, who may already have received the maximum tolerable dose of ionizing radiation. In these patients, FSR or SRS may be advised.98,99

A recent study of gamma knife radiosurgery in 16 patients with 20 radiation-induced tumors (17 typical meningiomas, 1 atypical meningioma, and 1 schwannoma), with a median follow-up of 40 months,100 found outcomes similar to those in previously published studies of patients with SM.98,99 Using the m3® high-resolution micro-multileaf collimator (BrainLAB, Heimstetten, Germany) mounted on a linear accelerator for stereotactic radiosurgery, we have found tumor control rates in RIM patients with WHO grade I meningiomas comparable to reported outcomes for WHO grade I SM (unpublished data). Evidence-based data in this regard remain limited.

Unfortunately, response to stereotactic radiosurgery is reduced in both RIM and SM with WHO grade II or grade III pathology (50% and 17%, respectively).99 These higher-grade tumors are associated with poor outcome.99,101,102

Systemic Therapy

In patients with recurrent, nonresectable SM, systemic adjuvant treatment with tamoxifen103 or hydroxyurea104–106 has been reported. Both agents have been used in our department for selected RIM patients with recurrent meningioma that was not controlled with surgery and radiotherapy, but with no measurable response.

GENETICS OF RADIATION-INDUCED MENINGIOMA

Genetic Predisposition

The tinea capitis cohort provided an ideal basis for investigating a possible genetic predisposition to RIM.26,33 To enlarge the sample size, additional irradiated and nonirradiated cases were ascertained through the Israel Cancer Registry and from compensation claims filed under the Israeli tinea capitis compensation law. This law, enacted by the Israeli Parliament in 1994, was designed to compensate irradiated individuals for specific diseases proven to be causally associated with irradiation.107

Based on this nested case-control study, Sadetzki and colleagues108 assessed the possible role in RIM and SM formation of 12 candidate genes involved in DNA repair and cell cycle control, as well as the NF2 gene, which is known to be responsible for familial meningioma. Intragenic single nucleotide polymorphisms (SNPs) in the Ki-ras and ERCC2 genes were associated with increased risk of meningioma (OR: 1.76; 95% CI: 1.07–2.92 and OR: 1.68; 95% CI: 1.00–2.84, respectively), and interactions were found between radiation and SNPs in cyclin D1 and p16 (P for interaction: .005 and .057, respectively), suggesting that these variants have opposite effects in RIM and SM.
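Odds ratios and their 95% confidence intervals are usually computed on the log scale, where a Wald-type interval is symmetric. Assuming (the source does not state this) that the intervals above are of that type, the minimal Python sketch below back-calculates the implied point estimate, the standard error of the log odds ratio, and a two-sided P value from the published limits alone. The function names are hypothetical helpers written for this illustration.

```python
import math

# Two-sided 95% standard normal quantile.
Z95 = 1.959963984540054


def or_from_ci(lower: float, upper: float) -> tuple[float, float]:
    """Return (odds ratio, SE of log OR) implied by a Wald 95% CI.

    Assumes the interval was computed as exp(log OR +/- Z95 * SE), so the
    point estimate is the geometric mean of the two limits.
    """
    log_lo, log_hi = math.log(lower), math.log(upper)
    odds_ratio = math.exp((log_lo + log_hi) / 2)
    se_log_or = (log_hi - log_lo) / (2 * Z95)
    return odds_ratio, se_log_or


def wald_p_value(odds_ratio: float, se_log_or: float) -> float:
    """Two-sided P value for H0: OR = 1, from the Wald z statistic."""
    z = math.log(odds_ratio) / se_log_or
    return math.erfc(abs(z) / math.sqrt(2))


# Intervals as reported for the Ki-ras and ERCC2 SNPs.
reported = {"Ki-ras": (1.07, 2.92), "ERCC2": (1.00, 2.84)}
for gene, (lo, hi) in reported.items():
    or_hat, se = or_from_ci(lo, hi)
    print(f"{gene}: OR ~ {or_hat:.2f}, SE(log OR) ~ {se:.3f}, "
          f"P ~ {wald_p_value(or_hat, se):.3f}")
```

Under this assumption the recovered point estimates agree with the published odds ratios to within rounding, and a lower confidence limit of exactly 1.00, as for ERCC2, corresponds to P of about .05, the conventional significance threshold.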

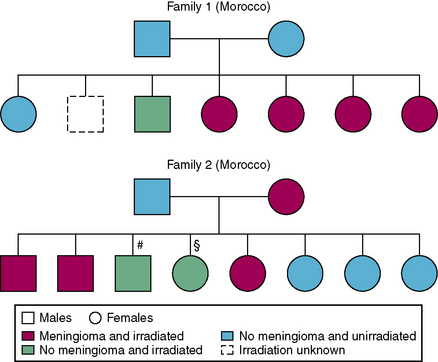

Variation in radiosensitivity across the human population is an accepted concept.17,109 Direct evidence of such variation was recently demonstrated in the tinea capitis study, based on a data set of families that included irradiated and nonirradiated members, and members with and without RIM.28 Because tinea capitis is contagious, and because the cohort included a high proportion of individuals from North Africa, who generally had large families (a mean of five to six children), several members of a family were often irradiated while others were not. This created a natural experiment that allowed the effects of exposure and inheritance to be assessed simultaneously, with inheritance reflected in familial aggregation of the disease among irradiated members of the same family (Fig. 5-7).

Given the increased risk of meningioma after exposure to ionizing radiation, and the evidence of genetic susceptibility to meningioma, the contribution of variants in DNA repair genes to disease susceptibility was recently explored.110 The analysis was based on data from five case-control series, comprising 631 cases and 637 controls, that contributed to the Interphone Study, a set of multinational studies assessing whether mobile telephone use increases the risk of cancer.111 Genotyping covered 136 DNA repair genes, as well as 388 putative functional SNPs. Statistically significant associations with meningioma were found for 12 SNPs; of these, three mapped to the breast cancer susceptibility gene 1 (BRCA1)-interacting protein 1 gene (BRIP1) and four to the ataxia telangiectasia mutated (ATM) gene. The strongest association was observed for SNP rs4968451 in BRIP1, which remained statistically significant after adjustment for multiple testing (P = .009) and showed no evidence of heterogeneity in risk across the five case-control data sets. Because approximately 28% of the European population carries at-risk genotypes for this variant, it has been suggested that this SNP may make a substantial contribution to meningioma development.
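The source does not specify how the adjustment for multiple testing was carried out, and the original analysis may well have used a different approach (for example, a permutation-based one). Purely as a generic illustration of why an adjustment is needed when hundreds of SNPs are tested, the minimal Python sketch below applies the Bonferroni correction. The function names, the raw P values, and the use of 388 as the number of tests are hypothetical choices for the example.

```python
def bonferroni_adjust(p_values: list[float], n_tests: int) -> list[float]:
    """Bonferroni-adjusted P values: each raw P is multiplied by the number
    of tests performed and capped at 1."""
    if n_tests < 1:
        raise ValueError("n_tests must be at least 1")
    return [min(1.0, p * n_tests) for p in p_values]


def bonferroni_threshold(alpha: float, n_tests: int) -> float:
    """Per-test significance threshold that keeps the family-wise error
    rate at or below alpha."""
    return alpha / n_tests


# Hypothetical example: 388 tests and three raw P values.
raw = [2e-5, 0.001, 0.04]
print(bonferroni_threshold(0.05, 388))
print(bonferroni_adjust(raw, 388))
```

In this example a raw P value of .04, which looks significant in isolation, is capped at 1.0 after adjustment across 388 tests; only very small raw P values survive.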

Somatic Alterations of RIM

Sporadic meningioma was one of the first solid tumors studied for somatic alterations. Early studies focused on chromosomal aberrations, using cytogenetic techniques that included Giemsa staining, spectral karyotyping (SKY), and comparative genomic hybridization (CGH). Of the many cytogenetic alterations reported, the most frequent, seen in 54% to 78% of SM, involve chromosome 22: monosomy 22 or deletion of 22q. Another common aberration, loss of 1p, was found to correlate with tumor behavior. Other aberrations reported in atypical and anaplastic meningioma involve 3p, 6q, 10p, 10q, and 14q, suggesting candidate regions for tumor suppressor genes.112,113

Loss of heterozygosity (LOH) studies allowed researchers to narrow down the regions deleted in meningioma, and further studies have tried to identify specific genes and proteins involved in meningioma formation. The NF2 gene, which causes neurofibromatosis type 2 and has been mapped to chromosome 22q12.2,114,115 was deleted in up to 50% of SM.116,117 Several observations led to the hypothesis that other tumor suppressor genes located on 22q (e.g., BAM22, LARGE, and MN1) may also be involved in meningioma formation.112

Recent studies have identified additional genes involved in early and advanced meningioma. Inactivation of the 4.1B gene was found in 60% of meningiomas regardless of histological grade, suggesting that it is an early event in tumorigenesis.118 In atypical and malignant meningioma, alterations have been found in several genes, including hTERT and INK4A/INK4B.119

Only a few investigators have assessed the somatic characteristics of RIM, and even fewer have compared genetic alterations in RIM with those in non-RIM. The first three publications on RIM genetics were case reports, each describing one patient who developed meningioma after therapeutic irradiation for a first tumor (skin carcinoma, pituitary adenoma, and glioma). The karyotypes of all three meningiomas showed deletions of chromosome 22, and two also showed aberrations in chromosomes 1, 6, 8, and 9.120–122

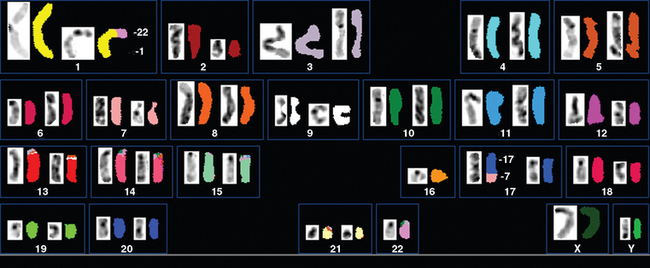

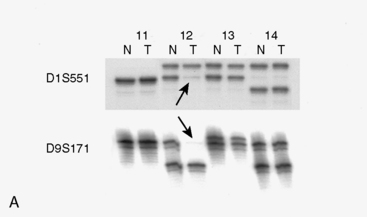

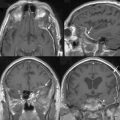

Zattara-Cannoni and colleagues123 used cytogenetic techniques to study six meningiomas (four benign and two atypical) from patients previously treated with radiation for a first cancer (Fig. 5-8); all showed the same chromosomal abnormality, a rearrangement between chromosomes 1 and 22. Because this rearrangement involved the 1q11 region, they hypothesized that a tumor suppressor gene involved in RIM formation might be located there.

Al-Mefty and colleagues39 studied serial tumor samples from 16 RIM cases and found deletions, losses, or additions involving chromosome 1p in 89% of cases, and loss or deletion of 6q in 67%.

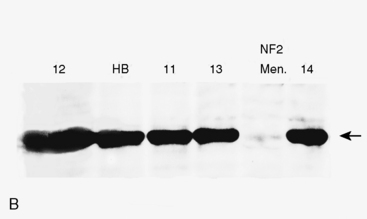

Studies comparing molecular genetic changes in RIM and SM are few, and most are limited by sample sizes too small to yield statistically significant results. In the first study to analyze a group of radiation-induced solid tumors with molecular genetic tools,124 investigators studied seven RIM (five grade I, two grade II) and eight SM cases (seven grade I, one grade III), assessing chromosomal changes, LOH, NF2 mutations, and levels of the NF2 gene product (schwannomin/merlin). Allelic loss of 1p was found in 57% of RIM samples and loss of 22q in 29%, whereas these changes were found in 30% and 60% to 70%, respectively, of sporadic cases (Fig. 5-9A). No NF2 mutations were detected in RIM, whereas 50% of SM carried mutations in this gene. NF2 protein levels were normal in all four RIM cases tested, whereas the non-RIM tumors showed levels of 50% or less (Fig. 5-9B). The authors concluded that NF2 inactivation does not play a role in the pathogenesis of RIM and that 22q deletion is less frequent in RIM than in non-RIM, and they suggested that other regions, such as 1p, may be important for RIM formation.
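The effect of such small samples can be made concrete with a confidence interval for each proportion. Assuming that the reported 57% and 29% correspond to 4 and 2 of the 7 RIM samples (the only counts consistent with n = 7), the minimal Python sketch below computes Wilson score 95% intervals, a standard choice for small binomial samples. The helper name is hypothetical.

```python
import math

# Two-sided 95% standard normal quantile.
Z95 = 1.959963984540054


def wilson_interval(successes: int, n: int, z: float = Z95) -> tuple[float, float]:
    """Wilson score confidence interval for a binomial proportion."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return max(0.0, centre - half_width), min(1.0, centre + half_width)


# Assumed counts: 4/7 RIM samples with 1p loss, 2/7 with 22q loss.
for label, k in [("1p loss", 4), ("22q loss", 2)]:
    lo, hi = wilson_interval(k, 7)
    print(f"{label}: {k}/7 = {k / 7:.0%}, 95% CI {lo:.0%} to {hi:.0%}")
```

The resulting intervals (roughly 25% to 84% for 1p loss and 8% to 64% for 22q loss) overlap widely, which is why differences of this size between series this small cannot be regarded as established.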

Rienstein and colleagues125 made cytogenetic comparisons between 16 RIM (14 benign, 2 atypical) and 17 SM (16 benign, 1 atypical) and found that the most common losses were of chromosomes 22 and 1 (56.2% and 37.5% in RIM, compared with 47% and 35.3% in SM, respectively). Gains in DNA copy number of chromosomes 8 and 12 were detected in 2 of the 16 RIM cases. The authors concluded that RIM and SM share the same tumorigenic pathway.
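Whether loss frequencies like these differ between groups can be checked with Fisher's exact test, which suits small 2 x 2 tables. Assuming the reported percentages correspond to 9/16 and 6/16 RIM cases and 8/17 and 6/17 SM cases (the counts consistent with the stated group sizes), the minimal Python sketch below computes two-sided exact P values from the hypergeometric distribution. The source does not say which test, if any, the authors applied, and the helper name is hypothetical.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact P value for the 2 x 2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more probable than the observed one.
    """
    row1, col1, n = a + b, a + c, a + b + c + d

    def prob(x: int) -> float:
        # Probability that the top-left cell equals x, given fixed margins.
        return comb(row1, x) * comb(n - row1, col1 - x) / comb(n, col1)

    observed = prob(a)
    x_min, x_max = max(0, col1 - (n - row1)), min(row1, col1)
    total = 0.0
    for x in range(x_min, x_max + 1):
        p = prob(x)
        if p <= observed * (1 + 1e-7):  # small tolerance for float ties
            total += p
    return min(1.0, total)


# Rows: RIM (n = 16), SM (n = 17); columns: loss present, loss absent.
for label, rim_loss, sm_loss in [("chromosome 22", 9, 8), ("chromosome 1", 6, 6)]:
    p = fisher_exact_two_sided(rim_loss, 16 - rim_loss, sm_loss, 17 - sm_loss)
    print(f"{label}: RIM {rim_loss}/16 vs SM {sm_loss}/17, P = {p:.2f}")
```

Both P values are far above .05, which is consistent with the authors' conclusion, although with groups this small the test has little power to detect a real difference.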

Joachim and colleagues126 analyzed 25 RIM (9 WHO grade I, 5 WHO grade II, 11 WHO grade III) and 36 SM (21 WHO grade II, 15 WHO grade III) for mutations in six genes (NF2, p53, PTEN, K-ras, N-ras, and H-ras). Mutation rates did not differ between groups for the ras genes (no mutations were found in either group), p53, or PTEN, but NF2 mutations were less frequent in RIM than in SM (23% vs. 56%, P < .02). The authors concluded that, with the exception of NF2, the mutation spectra of RIM and SM overlap to some extent.

Rajcan-Separovic and colleagues127 analyzed six RIM cases (two benign and four malignant) and one atypical SM. They found loss of 1p and 7p in four of the five RIMs with an abnormal karyotype and loss of 6q in three of five RIM cases, whereas the SM case showed losses at 1p, 6q, 14q, 18q, and 22q.

[1] Roentgen W.C. Ueber eine neue Art von Strahlen. S B Phys Med Ges Wurzburg. 1895:132-141.

[2] Morgan R.H. The emergence of radiology as a major influence in American medicine. Caldwell Lecture, 1970. Am J Roentgenol Radium Ther Nucl Med. 1971;111(3):449-462.

[3] Thomas A. The invisible light. British Society for the History of Radiology, 2001. http://www.bshr.org.uk/html/developemnt.html. (Accessed March 9, 2008)

[4] Fröman N. Marie and Pierre Curie and the discovery of polonium and radium. Nobel Foundation (Nobelprize.org), 1996. Marshall-Lundén N, trans. http://nobelprize.org/cgi-bin/print?from=%2Fnobel_prizes%2Fphysics%2Farticles%2Fcurie%2Findex.html (Accessed February 17, 2008)

[5] Kent E. Radiology in 1896. The British Society for the History of Radiology, 1998. http://www.rhhct.org.uk/news/10.html. (Accessed March 31, 2006)

[6] Edison fears the hidden perils of the x-rays. New York World, Aug 3, 1903. In: Edison, Clarence Dally, and the hidden perils of the x-rays. Durham, NC: Rare Book, Manuscript, and Special Collections Library, Duke University. http://home.gwi.net/~dnb/read/edison/edison_xrays.htm (Accessed January 8, 2008)

[7] Caufield C. Multiple Exposures: Chronicles of the Radiation Age. New York: Harper & Row, 1989.

[8] Hempelmann L.H. Potential dangers in the uncontrolled use of shoe-fitting fluoroscopes. N Engl J Med. 1949;241(9):335.

[9] Williams C.R. Radiation exposures from the use of shoe-fitting fluoroscopes. N Engl J Med. 1949;241(9):333-335.

[10] Kovarik W. Mainstream media: the radium girls. In: Neuvil M., Kovarik W., editors. Mass Media and Environmental Conflict. Thousand Oaks, CA: Sage Publications; 1996:33-52.

[11] Davidoff L., Cornelius G., Elsberg C., Tarlov I. The effect of radiation applied directly to the brain and spinal cord. Radiology. 1938;31:451-463.

[12] Wachowski T.J., Chenault H. Degenerative effects of large doses of roentgen rays on the human brain. Radiology. 1945;45:227-246.

[13] Committee for Review and Evaluation of the Medical Use Program of the Nuclear Regulatory Commission, National Resource Council of the National Academies of Science. Radiation in medicine: a need for regulatory reform. Gottfried K.D., Penn G., editors. National Academies Press; 1996. http://books.nap.edu/openbook.php?record_id=5154&page=R1 (Accessed January 8, 2008)

[14] Margulis A.R. The lessons of radiobiology for diagnostic radiology. Caldwell Lecture, 1972. Am J Roentgenol Radium Ther Nucl Med. 1973;117(4):741-756.

[15] Hendee W.R. History, current status, and trends of radiation protection standards. Med Phys. 1993;20(5):1303-1314.

[16] Muller H.J. Artificial transmutation of the gene. Science. 1927;66:84-87.

[17] Committee to Assess Biological Effects of Ionizing Radiation (BEIR), National Research Council of the National Academy of Sciences. Health risks from exposure to low levels of ionizing radiation: BEIR VII-Phase 2. National Academy of Science http://books.nap.edu/catalog.php?record_id=11340#toc, 2006. (Accessed March 13, 2008)

[18] Lyman R.S., Kupalov P., Scholz W. Effect of Roentgen rays on the central nervous system: results of large doses on brains of adult dogs. Arch Neurol Psychiatry. 1933;29:56-87.

[19] Nemenow M.I. Effect of Roentgen rays on the brain: experimental investigation by means of the conditioned reflex method. Radiology. 1934;23:94-96.

[20] Foltz E.L., Holyoke J.B., Heyl H.L. Brain necrosis following x-ray therapy. J Neurosurg. 1953;10(4):423-429.

[21] Rabin B.M., Meyer J.R., Berlin J.W., et al. Radiation-induced changes in the central nervous system and head and neck. Radiographics. 1996;16(5):1055-1072.

[22] Karlsson P., Holmberg E., Lundell M., et al. Intracranial tumors after exposure to ionizing radiation during infancy: a pooled analysis of two Swedish cohorts of 28,008 infants with skin hemangioma. Radiat Res. 1998;150(3):357-364.

[23] Neglia J.P., Robison L.L., Stovall M., et al. New primary neoplasms of the central nervous system in survivors of childhood cancer: a report from the Childhood Cancer Survivor Study. J Natl Cancer Inst. 2006;98(21):1528-1537.

[24] Shibata S., Sadamori N., Mine M., Sekine I. Intracranial meningiomas among Nagasaki atomic bomb survivors. Lancet. 1994;344(8939–8940):1770.

[25] Preston D.L., Ron E., Yonehara S., et al. Tumors of the nervous system and pituitary gland associated with atomic bomb radiation exposure. J Natl Cancer Inst. 2002;94(20):1555-1563.

[26] Sadetzki S., Chetrit A., Freedman L., et al. Long-term follow-up for brain tumor development after childhood exposure to ionizing radiation for tinea capitis. Radiat Res. 2005;163(4):424-432.

[27] Morgan W.F., Day J.P., Kaplan M.I., et al. Genomic instability induced by ionizing radiation. Radiat Res. 1996;146(3):247-258.

[28] Flint-Richter P., Sadetzki S. Genetic predisposition for the development of radiation-associated meningioma: an epidemiological study. Lancet Oncol. 2007;8(5):403-410.

[29] Feinendegen L., Hahnfeldt P., Schadt E.E., et al. Systems biology and its potential role in radiobiology. Radiat Environ Biophys. 2008;47(1):5-23.

[30] Beller A.J., Feinsod M., Sahar A. The possible relationship between small dose irradiation to the scalp and intracranial meningiomas. Neurochirurgia (Stuttg). 1972;15(4):135-143.

[31] Munk J., Peyser E., Gruszkiewicz J. Radiation induced head and intracranial meningiomas. Clin Radiol. 1969;20:90-94.

[32] Mann I., Yates P.C., Ainslie J.P. Unusual case of double primary orbital tumour. Br J Ophthalmol. 1953;37(12):758-762.

[33] Modan B., Baidatz D., Mart H., et al. Radiation-induced head and neck tumours. Lancet. 1974;1(7852):277-279.

[34] Al-Mefty O., Kersh J.E., Routh A., Smith R.R. The long-term side effects of radiation therapy for benign brain tumors in adults. J Neurosurg. 1990;73(4):502-512.

[35] Harrison M.J., Wolfe D.E., Lau T.S., et al. Radiation-induced meningiomas: experience at the Mount Sinai Hospital and review of the literature. J Neurosurg. 1991;75(4):564-574.

[36] Salvati M., Cervoni L., Puzzilli F., et al. High-dose radiation-induced meningiomas. Surg Neurol. 1997;47(5):435-441. discussion 441–442

[37] Musa B.S., Pople I.K., Cummins B.H. Intracranial meningiomas following irradiation—a growing problem?. Br J Neurosurg. 1995;9(5):629-637.

[38] Strojan P., Popovic M., Jereb B. Secondary intracranial meningiomas after high-dose cranial irradiation: report of five cases and review of the literature. Int J Radiat Oncol Biol Phys. 2000;48(1):65-73.

[39] Al-Mefty O., Topsakal C., Pravdenkova S., et al. Radiation-induced meningiomas: clinical, pathological, cytokinetic, and cytogenetic characteristics. J Neurosurg. 2004;100(6):1002-1013.

[40] Ghim T.T., Seo J.J., O’Brien M., et al. Childhood intracranial meningiomas after high-dose irradiation. Cancer. 1993;71(12):4091-4095.

[41] Yousaf I., Byrnes D.P., Choudhari K.A. Meningiomas induced by high dose cranial irradiation. Br J Neurosurg. 2003;17(3):219-225.

[42] Soffer D., Gomori J.M., Siegal T., Shalit M.N. Intracranial meningiomas after high-dose irradiation. Cancer. 1989;63(8):1514-1519.

[43] Kleinschmidt-DeMasters B.K., Lillehei K.O. Radiation-induced meningioma with a 63-year latency period. Case report. J Neurosurg. 1995;82(3):487-488.

[44] Mack E.E., Wilson C.B. Meningiomas induced by high-dose cranial irradiation. J Neurosurg. 1993;79(1):28-31.

[45] Salvati M., Cervoni L., Artico M. High-dose radiation-induced meningiomas following acute lymphoblastic leukemia in children. Childs Nerv Syst. 1996;12(5):266-269.

[46] Gold D.G., Neglia J.P., Dusenbery K.E. Second neoplasms after megavoltage radiation for pediatric tumors. Cancer. 2003;97(10):2588-2596.

[47] Ware M.L., Cha S., Gupta N., Perry V.L. Radiation-induced atypical meningioma with rapid growth in a 13–year-old girl. Case report. J Neurosurg. 2004;100(5 Suppl. Pediatrics):488-491.

[48] Gabivov G., Kuklina A., Martynov V., et al. [Radiation-induced meningiomas of the brain]. Zh Vopr Neirokhir. 1983;6:13-18. [Russian]

[49] Gomori J.M., Shaked A. Radiation induced meningiomas. Neuroradiology. 1982;23(4):211-212.

[50] Gosztonyi G., Slowik F., Pasztor E. Intracranial meningiomas developing at long intervals following low-dose X-ray irradiation of the head. J Neurooncol. 2004;70(1):59-65.

[51] Kandel E.I. Development of meningioma after x-ray irradiation of the head. Zh Vopr Neirokhir. 1978;1:51-53. [Russian]

[52] Ron E., Modan B., Boice J.D.Jr, et al. Tumors of the brain and nervous system after radiotherapy in childhood. N Engl J Med. 1988;319(16):1033-1039.

[53] Sadetzki S., Flint-Richter P., Ben-Tal T., Nass D. Radiation-induced meningioma: a descriptive study of 253 cases. J Neurosurg. 2002;97(5):1078-1082.

[54] Soffer D., Pittaluga S., Feiner M., Beller A.J. Intracranial meningiomas following low-dose irradiation to the head. J Neurosurg. 1983;59(6):1048-1053.

[55] Sadamori N., Shibata S., Mine M., et al. Incidence of intracranial meningiomas in Nagasaki atomic-bomb survivors. Int J Cancer. 1996;67(3):318-322.

[56] Shintani T., Hayakawa N., Hoshi M., et al. High incidence of meningioma among Hiroshima atomic bomb survivors. J Radiat Res (Tokyo). 1999;40(1):49-57.

[57] Shintani T., Hayakawa N., Kamada N. High incidence of meningioma in survivors of Hiroshima. Lancet. 1997;349(9062):1369.

[58] Longstreth W.T.Jr, Phillips L.E., Drangsholt M., et al. Dental x-rays and the risk of intracranial meningioma: a population-based case-control study. Cancer. 2004;100(5):1026-1034.

[59] Preston-Martin S., Paganini-Hill A., Henderson B.E., et al. Case-control study of intracranial meningiomas in women in Los Angeles County, California. J Natl Cancer Inst. 1980;65(1):67-73.

[60] Preston-Martin S., Yu M.C., Henderson B.E., Roberts C. Risk factors for meningiomas in men in Los Angeles County. J Natl Cancer Inst. 1983;70(5):863-866.

[61] Rodvall Y., Ahlbom A., Pershagen G., et al. Dental radiography after age 25 years, amalgam fillings and tumours of the central nervous system. Oral Oncol. 1998;34(4):265-269.

[62] Adamson H.A. Simplified method of x-ray application for the cure of ringworm of the scalp: Keinbock’s method. Lancet. 1909;1:1378-1380.

[63] Albert R.E., Omran A.R., Brauer E.W., et al. Followup study of patients treated by x-ray for tinea capitis. Am J Pub Health. 1966;56:2114-2120.

[64] Omran A.R., Shore R.E., Markoff R.A., et al. Follow-up study of patients treated by X-ray epilation for tinea capitis: psychiatric and psychometric evaluation. Am J Pub Health. 1978;68(6):561-567.

[65] Goldman L., Schwartz J., Preston R.H., et al. Current status of Griseofulvin. JAMA. 1960;172:532-538.

[66] Russell B., Frain-Bell W., Stevenson C.J., et al. Chronic ringworm infection of the skin and nails treated with griseofulvin. Report of a therapeutic trial. Lancet. 1960;1:1141-1147.

[67] Ziprkowski L., Krakowski A., Schewach-Millet M., Btesh S. Griseofulvin in the mass treatment of tinea capitis. Bull WHO. 1960;23:803-810.

[68] Katzenellenbogen I., Sandbank M. [The treatment of tinea capitis and dermatomycosis with griseofulvin. Follow-up of 65 cases]. Harefuah. 1961;60:111-115.

[69] Druckman A. Schlafsucht als Folge der Roentgenbestrahlung. Beitrag zur Strahlenempfindlichkeit des Gehirns. Strahlentherapie. 1929;33:382-384. Seen in Wachowski T.J., Chenault H. Degenerative effects of large doses of roentgen rays on the human brain. Radiology. 1945;45:227-246.

[70] Lorey A., Schaltenbrand G. Pachymeningitis nach Rontgenbestrahlung. Strahlentherapie. 1932;44:747-758. Seen in Wachowski T.J., Chenault H. Degenerative effects of large doses of roentgen rays on the human brain. Radiology. 1945;45:227-246.

[71] Schaltenbrand G. Epilepsie nach Rontgenbestrahlung des Kopfes im Kindesalter. Nervenarzt. 1935;8:62-66. Seen in Wachowski T.J., Chenault H. Degenerative effects of large doses of roentgen rays on the human brain. Radiology. 1945;45:227-246.

[72] Rubinstein A.B., Shalit M.N., Cohen M.L., et al. Radiation-induced cerebral meningioma: a recognizable entity. J Neurosurg. 1984;61(5):966-971.

[73] Health effects of radiation: findings of the radiation effects research foundation (RERF), National Academy of Sciences http://dels.nas.edu/dels/rpt_briefs/rerf_final.pdf, 2003. (Accessed May 12, 2008)

[74] Harada T., Ishida M.I. Neoplasms among A-bomb survivors in Hiroshima: first report of the Research Committee on Tumor Statistics, Hiroshima City Medical Association, Hiroshima, Japan. J Natl Cancer Inst. 1960;25:1253-1264.

[75] Kishikawa M., Yushita Y., Toda T., et al. Pathological study of brain tumors of the A-bomb survivors in Nagasaki [in Japanese]. Hiroshima Igaku. 1982;35:428-430. Seen in Shibata S., Sadamori N., Mine M., Sekine I. Intracranial meningiomas among Nagasaki atomic bomb survivors. Lancet. 1994;344(8939–8940):1770.

[76] Nolan W.E. Radiation hazards to the patient from oral roentgenography. J Am Dent Assoc. 1953;47(6):681-684.

[77] Nolan W.E., Patterson H.W. Radiation hazards from dental x-ray units. Radiology. 1953;61(4):625-629.

[78] Preece J.W. Biological effects of panoramic radiography. In: Langland O.E., Langlais R.P., McDavid W.D., DelBalso A.M., editors. Panoramic Radiology. 2nd ed. Philadelphia: Lea & Febiger; 1989.

[79] Gijbels F., Jacobs R., Bogaerts R., et al. Dosimetry of digital panoramic imaging. Part I: Patient exposure. Dentomaxillofac Radiol. 2005;34(3):145-149.

[80] Gijbels F., Sanderink G., Wyatt J., et al. Radiation doses of indirect and direct digital cephalometric radiography. Br Dent J. 2004;197(3):149-152. discussion 140

[81] Umansky F., Shoshan Y., Rosenthal G., et al. Radiation-induced meningioma. Neurosurg Focus. 2008;24(5):E7.

[82] Pollak L., Walach N., Gur R., Schiffer J. Meningiomas after radiotherapy for tinea capitis—still no history. Tumori. 1998;84(1):65-68.

[83] Domenicucci M., Artico M., Nucci F., et al. Meningioma following high-dose radiation therapy. Case report and review of the literature. Clin Neurol Neurosurg. 1990;92(4):349-352.

[84] Choudhary A., Pradhan S., Huda M.F., et al. Radiation induced meningioma with a short latent period following high dose cranial irradiation: case report and literature review. J Neurooncol. 2006;77:73-77.

[85] Al-Mefty O., Kadri P.A., Pravdenkova S., et al. Malignant progression in meningioma: documentation of a series and analysis of cytogenetic findings. J Neurosurg. 2004;101(2):210-218.

[86] Louis D.N., Scheithauer B.W., Budka H., et al. Meningiomas. In: Kleihues P., Cavenee W.K., editors. World Health Organization Classification of Tumours. Pathology and Genetics: Tumors of the Nervous System. Lyon, France: International Agency for Research on Cancer (IARC) Press; 2000:176-184.

[87] Borovich B., Doron Y. Recurrence of intracranial meningiomas: the role played by regional multicentricity. J Neurosurg. 1986;64(1):58-63.

[88] Borovich B., Doron Y., Braun J., et al. Recurrence of intracranial meningiomas: the role played by regional multicentricity. Part 2: Clinical and radiological aspects. J Neurosurg. 1986;65(2):168-171.

[89] Jaaskelainen J. Seemingly complete removal of histologically benign intracranial meningioma: late recurrence rate and factors predicting recurrence in 657 patients. A multivariate analysis. Surg Neurol. 1986;26(5):461-469.

[90] Simpson D. The recurrence of intracranial meningiomas after surgical treatment. J Neurol Neurosurg Psychiatry. 1957;20(1):22-39.

[91] Stechison M.T., Burkhart L.E. Radiation-induced meningiomas. J Neurosurg. 1994;80(1):177-178.

[92] Wilson C.B. Meningiomas: genetics, malignancy, and the role of radiation in induction and treatment. The Richard C. Schneider Lecture. J Neurosurg. 1994;81(5):666-675.

[93] Mirimanoff R.O., Dosoretz D.E., Linggood R.M., et al. Meningioma: analysis of recurrence and progression following neurosurgical resection. J Neurosurg. 1985;62(1):18-24.

[94] Aichholzer M., Bertalanffy A., Dietrich W., et al. Gamma knife radiosurgery of skull base meningiomas. Acta Neurochir (Wien). 2000;142(6):647-652. discussion 652–653

[95] Debus J., Wuendrich M., Pirzkall A., et al. High efficacy of fractionated stereotactic radiotherapy of large base-of-skull meningiomas: long-term results. J Clin Oncol. 2001;19(15):3547-3553.

[96] Dufour H., Muracciole X., Metellus P., et al. Long-term tumor control and functional outcome in patients with cavernous sinus meningiomas treated by radiotherapy with or without previous surgery: is there an alternative to aggressive tumor removal? Neurosurgery. 2001;48(2):285-294. discussion 294–296

[97] Pirzkall A., Debus J., Haering P., et al. Intensity modulated radiotherapy (IMRT) for recurrent, residual, or untreated skull-base meningiomas: preliminary clinical experience. Int J Radiat Oncol Biol Phys. 2003;55(2):362-372.

[98] Henzel M., Gross M.W., Hamm K., et al. Significant tumor volume reduction of meningiomas after stereotactic radiotherapy: results of a prospective multicenter study. Neurosurgery. 2006;59(6):1188-1194; discussion 1194.

[99] Kondziolka D., Mathieu D., Lunsford L.D., et al. Radiosurgery as definitive management of intracranial meningiomas. Neurosurgery. 2008;62(1):53-58; discussion 58-60.

[100] Jensen A.W., Brown P.D., Pollock B.E., et al. Gamma knife radiosurgery of radiation-induced intracranial tumors: local control, outcomes, and complications. Int J Radiat Oncol Biol Phys. 2005;62(1):32-37.

[101] Kano H., Takahashi J.A., Katsuki T., et al. Stereotactic radiosurgery for atypical and anaplastic meningiomas. J Neurooncol. 2007;84(1):41-47.

[102] Mattozo C.A., De Salles A.A., Klement I.A., et al. Stereotactic radiation treatment for recurrent nonbenign meningiomas. J Neurosurg. 2007;106(5):846-854.

[103] Goodwin J.W., Crowley J., Eyre H.J., et al. A phase II evaluation of tamoxifen in unresectable or refractory meningiomas: a Southwest Oncology Group study. J Neurooncol. 1993;15(1):75-77.

[104] Mason W.P., Gentili F., Macdonald D.R., et al. Stabilization of disease progression by hydroxyurea in patients with recurrent or unresectable meningioma. J Neurosurg. 2002;97(2):341-346.

[105] Newton H.B. Hydroxyurea chemotherapy in the treatment of meningiomas. Neurosurg Focus. 2007;23(4):E11.

[106] Newton H.B., Scott S.R., Volpi C. Hydroxyurea chemotherapy for meningiomas: enlarged cohort with extended follow-up. Br J Neurosurg. 2004;18(5):495-499.

[107] Sadetzki S., Modan B. Epidemiology as a basis for legislation: how far should epidemiology go? Lancet. 1999;353(9171):2238-2239.

[108] Sadetzki S., Flint-Richter P., Starinsky S., et al. Genotyping of patients with sporadic and radiation-associated meningiomas. Cancer Epidemiol Biomarkers Prev. 2005;14(4):969-976.

[109] International Commission on Radiological Protection. Genetic susceptibility to cancer. ICRP publication 79. Approved by the Commission in May 1997. Ann ICRP. 1998;28(1–2):1-157.

[110] Bethke L., Murray A., Webb E., et al. Comprehensive analysis of DNA repair gene variants and risk of meningioma. J Natl Cancer Inst. 2008;100(4):270-276.

[111] Cardis E., Richardson L., Deltour I., et al. The INTERPHONE study: design, epidemiological methods, and description of the study population. Eur J Epidemiol. 2007;22(9):647-664.

[112] Ragel B.T., Jensen R.L. Molecular genetics of meningiomas. Neurosurg Focus. 2005;19(5):E9.

[113] Claus E.B., Bondy M.L., Schildkraut J.M., et al. Epidemiology of intracranial meningioma. Neurosurgery. 2005;57(6):1088-1095; discussion 1088-1095.

[114] Rouleau G.A., Seizinger B.R., Wertelecki W., et al. Flanking markers bracket the neurofibromatosis type 2 (NF2) gene on chromosome 22. Am J Hum Genet. 1990;46(2):323-328.

[115] Wolff R.K., Frazer K.A., Jackler R.K., et al. Analysis of chromosome 22 deletions in neurofibromatosis type 2–related tumors. Am J Hum Genet. 1992;51(3):478-485.

[116] Lomas J., Bello J., Arjona D., et al. Genetic and epigenetic alteration of the NF2 gene in sporadic meningiomas. Genes Chromosomes Cancer. 2005;42:314-319.

[117] Perry A., Gutmann D.H., Reifenberger G. Molecular pathogenesis of meningiomas. J Neurooncol. 2004;70(2):183-202.

[118] Lusis E., Gutmann D.H. Meningioma: an update. Curr Opin Neurol. 2004;17(6):687-692.

[119] Simon M., Bostrom J.P., Hartmann C. Molecular genetics of meningiomas: from basic research to potential clinical applications. Neurosurgery. 2007;60(5):787-798; discussion 787-798.

[120] Chauveinc L., Dutrillaux A.M., Validire P., et al. Cytogenetic study of eight new cases of radiation-induced solid tumors. Cancer Genet Cytogenet. 1999;114(1):1-8.

[121] Chauveinc L., Ricoul M., Sabatier L., et al. Dosimetric and cytogenetic studies of multiple radiation-induced meningiomas for a single patient. Radiother Oncol. 1997;43(3):285-288.

[122] Pagni C.A., Canavero S., Fiocchi F., Ponzio G. Chromosome 22 monosomy in a radiation-induced meningioma. Ital J Neurol Sci. 1993;14(5):377-379.

[123] Zattara-Cannoni H., Roll P., Figarella-Branger D., et al. Cytogenetic study of six cases of radiation-induced meningiomas. Cancer Genet Cytogenet. 2001;126(2):81-84.

[124] Shoshan Y., Chernova O., Juen S.S., et al. Radiation-induced meningioma: a distinct molecular genetic pattern? J Neuropathol Exp Neurol. 2000;59(7):614-620.

[125] Rienstein S., Loven D., Israeli O., et al. Comparative genomic hybridization analysis of radiation-associated and sporadic meningiomas. Cancer Genet Cytogenet. 2001;131(2):135-140.

[126] Joachim T., Ram Z., Rappaport Z.H., et al. Comparative analysis of the NF2, TP53, PTEN, KRAS, NRAS and HRAS genes in sporadic and radiation-induced human meningiomas. Int J Cancer. 2001;94(2):218-221.

[127] Rajcan-Separovic E., Maguire J., Loukianova T., et al. Loss of 1p and 7p in radiation-induced meningiomas identified by comparative genomic hybridization. Cancer Genet Cytogenet. 2003;144(1):6-11.