Chapter 98B Living donor liver transplantation

Open and laparoscopic

Overview

Organ availability continues to be a major issue in contemporary transplantation. Living-donor renal transplantation is accepted globally as an important alternative to cadaveric renal transplantation for patients with end-stage renal failure. In 2008, living donors were the source of 36% of all kidney transplants in the United States (United Network for Organ Sharing [UNOS], 2008). This is largely because kidneys are paired organs, laparoscopic donor nephrectomy is widespread, and donor morbidity and mortality are low (Kocak et al, 2006).

Despite advances in hepatobiliary surgery, hepatic resection remains technically demanding, with higher complication rates than donor nephrectomy (Miller et al, 2004a; Pomfret, 2003). Liver transplantation, now standard treatment for many hepatic diseases (see Chapter 97A), has undergone the same evolution as renal transplantation with respect to live donation. Transplantologists embarked on living-donor liver transplantation (LDLT) in the late 1980s, principally as a means to reduce pediatric waiting-list mortality (Broelsch et al, 1991; Raia et al, 1989). The inception and refinement of LDLT and split-liver transplantation (see Chapter 98C) have significantly reduced pediatric waiting-list mortality (Testa et al, 2001), paving the way for the development of LDLT in adults.

As the indications for liver transplantation have broadened while the cadaveric organ supply has remained largely static, waiting-list mortality for adults has increased. In response, adult LDLT was initiated in the mid-1990s, as it had been in pediatric transplantation. In Japan, where death defined by neurologic criteria is not accepted, adult LDLT began with left-lobe grafts (Hashikura et al, 1994) and progressed to right-lobe grafts (Yamaoka et al, 1994), with substantial acceptance and growth of the practice in Asia (Chen et al, 2003); it was subsequently initiated and refined in the United States in the late 1990s (Boillot et al, 1999; Marcos et al, 1999; Miller et al, 2001; Wachs et al, 1998). Despite the inherent donor risks, including rare but high-impact donor deaths (Miller et al, 2004a; Fan et al, 2003), right-lobe LDLT has remained a strong option in the treatment of liver disease; as of 2008, LDLT accounted for 4% to 9% of all adult liver transplantations performed in the United States (UNOS, 2008). Donor safety remains the primary focus of LDLT, superseding the omnipresent clinical incentive of a static cadaveric donor pool and transplant waiting-list mortality (Salame et al, 2002; Surman, 2002).

Consistent with the tenet of donor safety, reducing donor morbidity is equally important. The great advances in minimally invasive liver resection have now been applied to liver donation in the hope of reducing surgical morbidity and enhancing donor recovery. As with the evolution of recipient procedures, minimally invasive liver donation began in the pediatric setting, with laparoscopic harvest of the left lateral section (Cherqui et al, 2002). As centers gained experience in minimally invasive hemihepatectomy (Koffron et al, 2007a; O’Rourke & Fielding, 2004), these methods were carefully applied to right-lobe donation (Koffron et al, 2006) to reduce donor morbidity, enhance recovery, and, secondarily, increase the willingness to consider donation (Baker et al, 2009). Initial results are promising, but greater experience and analysis of clinical outcomes are necessary to define the role of laparoscopy in liver donation and in transplantation as a whole.

Living Donor Liver Transplantation: Indications and Results

Many of the indications for LDLT are the same as those for liver transplantation in general (see Chapters 97A, 97C, 97D, and 97E). The reasons to consider LDLT rather than deceased-donor liver transplantation (DDLT) therefore center largely on timing with regard to hepatitis C virus (HCV) infection, hepatocellular carcinoma (HCC), and the likelihood that a cadaveric donor organ will become available promptly. These reasons must always be weighed against the tenet that it is unjustified to subject even a volunteer to the risks inherent in donor hepatectomy if the recipient has a reasonable chance of receiving a cadaveric liver graft before medical decompensation or disease progression.

Pediatric Living Donor Liver Transplantation

In pediatric LDLT (see Chapter 98C), results for all indications are equivalent to those achieved with whole-liver grafts, but cadaveric graft allocation remains the major concern in the setting of acute hepatic failure. This combination of outcome and availability has made acute liver failure (ALF) a well-accepted indication for pediatric LDLT. For other indications, split-liver transplantation, in which a cadaveric donor organ is divided to transplant two recipients, is an attractive and increasingly popular alternative to LDLT that avoids placing a healthy donor in harm’s way.

Adult Living Donor Liver Transplantation

Experience has shown that although retransplantation rates are higher after LDLT, patient survival is similar to that after DDLT (Abt et al, 2004; Freise et al, 2008). Many of the reasons for this phenomenon relate to implantation of a smaller allograft. It seems intuitive that the more advanced the liver disease (hepatic decompensation, severe portal hypertension), the sicker the patient and, consequently, the greater the hepatocellular mass required. In this light, many centers will not perform LDLT in patients with Child-Turcotte-Pugh (CTP) class C cirrhosis unless the donor can provide a graft of substantial size. Fortunately, in most circumstances the current U.S. organ allocation system can accommodate such gravely ill patients with DDLT.

Adult LDLT in the treatment of fulminant hepatic failure (FHF) follows a distinct geographic pattern, largely as a result of regional differences in organ donation. LDLT for acute hepatic failure has acceptable results (Sugawara et al, 2002a, 2003), but in Western countries, and particularly in the United States, where the organ allocation scheme gives patients with ALF the highest priority, a DDLT is likely to become available. In the United States, therefore, the concern is that LDLT for ALF may place live donors at unnecessary risk in a situation in which a potentially superior DDLT may be possible. The retrospective Adult-to-Adult Living Donor Liver Transplantation Cohort Study (A2ALL) reached a similar conclusion (Campsen et al, 2008). In the nine participating U.S. transplant centers, LDLT was rarely performed for ALF but was associated with acceptable recipient mortality and donor morbidity; however, the authors raised the concern that a partial graft might reduce survival in critically ill recipients and that the rapid course of ALF could lead to selection of inappropriate donors.

This contrasts sharply with the role of LDLT for ALF in Asia, where cadaveric donation is scarce and LDLT accounts for more than 90% of liver transplantations (de Villa & Lo, 2007; Kobayashi et al, 2003; Lubezky et al, 2004). FHF was the indication for transplantation in 5.7% of the adult LDLT series reported by the Asan group (Lee et al, 2007), in 12% of the series reported by the Hong Kong group (Lo et al, 2004), and in 14.6% of the series reported by the Kyoto group (Morioka et al, 2007). The Eastern consensus is reflected in the findings of a large study on this subject (Ikegami et al, 2008), which reviewed 42 LDLTs performed for ALF over 10 years and concluded that outcomes were acceptable even in severely ill recipients and that LDLT is the accepted treatment of choice for ALF.

Investigation of LDLT has provided clarity in other aspects of this form of liver therapy. Interestingly, results of the A2ALL retrospective cohort study did not show an immunologic advantage for LDLT versus DDLT (Shaked et al, 2009). Longer cold ischemia time was associated with a higher rate of acute cellular rejection in both groups despite much shorter median cold ischemia time in LDLT.

In terms of general recipient complications, the A2ALL study has provided additional data. Recipients of LDLT had more complications than recipients of DDLT (median, 3 vs. 2 complications per recipient), including biliary leak (32% vs. 10%), unplanned reexploration (26% vs. 17%), hepatic artery thrombosis (6.5% vs. 2.3%), portal vein thrombosis (2.9% vs. 0.0%), and complications leading to retransplantation or death (15.9% vs. 9.3%; P < .05) (Freise et al, 2008). Most notably, this analysis demonstrated that although complication rates were initially higher with LDLT, they declined with increasing center experience to levels comparable to DDLT.

Hepatitis C Virus

Considerable reservations surround LDLT for patients with HCV (see Chapters 64 and 97A). If a patient has detectable HCV RNA before transplantation, the concern is that recurrence after LDLT may progress rapidly to cirrhosis and graft loss. In the early 2000s, many centers reported that the course of recurrent HCV after LDLT was worse than in patients who received DDLT (Bozorgzadeh et al, 2004; Gaglio et al, 2003; Garcia-Retortillo et al, 2004; Shiffman et al, 2004; Zimmerman & Trotter, 2003), leading some centers to avoid LDLT for this indication (Garcia-Retortillo et al, 2004).

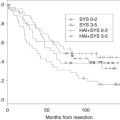

In addition, the degree of transplant center experience may influence outcome after LDLT for HCV. The A2ALL retrospective study found that graft and patient survival were significantly lower after LDLT at centers with limited experience (<20 cases), but that beyond this level of experience, 3-year graft and patient survival were not significantly different (Terrault et al, 2007). Center experience may therefore be a significant factor, particularly as more recent reports on LDLT in the treatment of HCV are encouraging.

Although the hepatocellular mechanisms remain unknown, Schmeding and colleagues (2007) studied 289 patients and found that the intensity of HCV recurrence was not greater in living-donor graft recipients than in recipients of full-size grafts. Similar findings have been reported by other groups (Takada et al, 2006). Furthermore, there is encouraging evidence that when HCV recurs after LDLT, combination therapy with interferon and ribavirin appears to improve outcome (Park et al, 2007).

One specific advantage of LDLT in the treatment of HCV is the ability to optimize the timing of transplantation. Patients who clear HCV RNA with interferon/ribavirin have a high likelihood of remaining HCV RNA negative after transplantation (Berenguer & Wright, 2003), and the availability of a living donor allows transplantation to proceed shortly after HCV RNA clearance has been achieved. Unfortunately, pretransplantation treatment intended to prevent disease progression or to minimize posttransplantation recurrence has highly variable effectiveness, tolerability, and outcome.

To improve patient tolerance and thereby achieve HCV RNA clearance before transplantation, the so-called low accelerating dose regimen (LADR) has been studied. Everson and colleagues (2005) treated 124 patients with LADR; although 63% experienced treatment-related complications, 46% were HCV RNA negative at the end of treatment and 24% remained negative during follow-up. More importantly, 12 (80%) of the 15 patients who were HCV RNA negative before transplantation remained negative for 6 months after transplantation. One of the secondary aims of the prospective A2ALL cohort study is to evaluate LADR combined with LDLT for reducing posttransplantation HCV recurrence.

Primary Hepatic Malignancy

Liver transplantation is now considered a treatment option for unresectable primary hepatic malignancy. The guidelines for LDLT in the treatment of malignancy largely parallel those for DDLT for both HCC and cholangiocarcinoma, as discussed in Chapters 97D and 97E, respectively. However, because of constraints in organ supply and allocation, and in the timing of transplantation, LDLT offers several strategic options not available with DDLT.

The growth of LDLT has been fostered in part by the chronologic advantage that scheduled LDLT provides in treating hepatic malignancy. This is particularly evident in the treatment of HCC. In cirrhotic patients with early-stage, unresectable HCC, transplantation is the favored treatment, and within the Milan criteria (see Chapter 97D), posttransplantation survival rates equal those of patients without HCC. Patients with HCC within the Milan criteria are given added priority in the organ allocation system, and this systematic design, combined with therapies that delay tumor progression such as ablation and arterial-based therapy, yields generally acceptable outcomes. Despite this validated process, some patients face prolonged waiting times, during which their tumors may progress (Yao et al, 2003) and exclude them as transplant candidates under the established guidelines.

In this circumstance, LDLT allows patients with HCC to proceed more rapidly to transplantation, potentially reducing the chance of tumor progression and/or posttransplantation recurrence. This has logically led many centers to offer LDLT for HCC, and, as with DDLT, the efficiency and efficacy of LDLT for HCC have been widely reported (Gondolesi et al, 2004c; Kaihara et al, 2003; Todo & Furukawa, 2004). However, as LDLT is increasingly used to treat patients with locally advanced HCC who are not prioritized under the organ allocation system, patients with large tumors predictably experience a higher rate of recurrence (Axelrod et al, 2005).

In an era of limited organ availability, guidelines such as the Milan criteria are necessary to reduce posttransplantation cancer recurrence and to use resources wisely. This is the setting in which LDLT can provide a therapeutic alternative: in carefully selected patients, whose estimated risk of HCC recurrence has been judged low enough to justify placing a healthy donor at risk, LDLT may provide benefit without taxing the organ pool. Numerous centers have proposed expanded, and controversial, HCC criteria designed to select such patients, with acceptable success rates (Bruix & Llovet, 2002; Gondolesi et al, 2004c; Lang et al, 2002).

Despite the sincere desire, and obvious ability, to treat patients with HCC, caution and practicality should take precedence. By shortening the waiting time, LDLT may limit disease progression, so the recurrence rate should intuitively be lower than for recipients awaiting DDLT. The reverse may also occur, however: rapid transplantation bypasses the waiting period during which patients with aggressive tumors would otherwise drop out, which can lead to higher posttransplantation recurrence. The Northwestern group reported one example of this phenomenon (Kulik & Abecassis, 2004): reviewing their institutional experience with LDLT for HCC, they found a higher recurrence rate, stage for stage, in recipients whose transplantations were accelerated (“fast tracked”) by LDLT, especially in the earlier era in which patients with HCC were disadvantaged by the allocation algorithm. Clearly, defining the role of LDLT in the management of HCC requires prospective, direct analysis of both recurrence and drop-out rates in comparable cohorts of patients with HCC undergoing deceased- or living-donor liver transplantation.

Donor Evaluation

The appropriate liver donor for LDLT is a legal adult who is healthy, without liver disease, not significantly overweight, has a blood type compatible with the recipient, and is able to provide a graft of adequate size. Donor evaluation is carried out by a physician who serves as the donor’s advocate (New York State Committee on Quality Improvement in Living Liver Donation, 2002). The comprehensive medical evaluation includes 1) a complete history and physical examination; 2) blood analysis to exclude viral and autoimmune liver disease, diabetes, hyperlipidemia, and hypercoagulable states; 3) cardiology clearance; and 4) extensive psychosocial evaluation, with psychiatry consultation in cases of even minor concern.

As in resection for other indications, obesity in the potential donor increases perioperative risk and the degree of hepatic steatosis. Magnetic resonance imaging (MRI) can predict the presence of significant steatosis (>15%) and simplify donor evaluation in overweight candidates (Rinella et al, 2003). Routine preoperative biopsy is not widely practiced (Ryan et al, 2002), but in many centers, potential donors who are significantly overweight (Schiano et al, 2001) or who have MRI findings suggestive of steatosis undergo liver biopsy to fully assess both donor and recipient short- and long-term risk (Hwang et al, 2004).

Our understanding of steatosis and its effect in liver transplantation has improved (Soejima et al, 2003b), and guidelines for DDLT have been established (Fishbein et al, 1997; Uchino et al, 2004). In LDLT, where liver mass is limited, hepatic steatosis must be considered in the calculation of true hepatocellular mass to ensure adequate engraftment (Limanond et al, 2004).
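The text does not specify how steatosis enters the calculation of hepatocellular mass. A minimal sketch, assuming a simple linear correction in which steatotic parenchyma is treated as contributing no functional mass, might look like the following. The function name and the correction itself are hypothetical illustrations, not a validated clinical formula and not the method of Limanond and colleagues.

```python
def effective_graft_mass(graft_weight_g: float, steatosis_fraction: float) -> float:
    """Hypothetical linear correction for steatosis.

    Assumption (illustrative only): the steatotic fraction of the graft
    contributes no functional hepatocellular mass, so the effective mass is
    the graft weight discounted by that fraction.
    """
    if graft_weight_g <= 0:
        raise ValueError("graft weight must be positive")
    if not 0.0 <= steatosis_fraction < 1.0:
        raise ValueError("steatosis fraction must be in [0, 1)")
    return graft_weight_g * (1.0 - steatosis_fraction)


if __name__ == "__main__":
    # Hypothetical 800 g graft with 15% steatosis on biopsy.
    print(effective_graft_mass(800, 0.15))  # 680.0
```

The linear discount is chosen only for simplicity; it shows why, for the same measured graft weight, a steatotic graft provides less usable hepatocellular mass.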

Estimation of graft volume (GV) is critical in LDLT to optimize donor safety and recipient outcome. Liver volume can be estimated by calculation or imaging, but there is no consensus on the most clinically effective method. To clarify this dilemma, one study compared the accuracy of formula-derived GV estimates with both radiologically derived estimates and actual measurements (Salvalaggio et al, 2005). For right-lobe donors, the formula-derived calculation showed only marginal concordance with actual GV, but its error ratio was lower than that of radiologic estimates; in contrast, for left lateral section grafts, MRI measurements had a lower error ratio than formula-derived estimation. The authors therefore concluded that formula-derived estimates of GV should be routinely used in the initial screening of potential living donors.
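The comparison above turns on an "error ratio" between estimated and actual graft volume. Its exact definition in the cited study is not given here, so the sketch below assumes error ratio = |estimate - actual| / actual and averages it over a donor series to compare two estimation methods. The function names and all input values are hypothetical.

```python
from statistics import mean
from typing import Sequence


def error_ratio(estimate: float, actual: float) -> float:
    """Assumed definition: absolute error relative to the measured graft volume."""
    if actual <= 0:
        raise ValueError("actual graft volume must be positive")
    return abs(estimate - actual) / actual


def mean_error_ratio(estimates: Sequence[float], actuals: Sequence[float]) -> float:
    """Mean error ratio of one estimation method across a series of donors."""
    if len(estimates) != len(actuals) or not estimates:
        raise ValueError("need paired, non-empty series")
    return mean(error_ratio(e, a) for e, a in zip(estimates, actuals))


if __name__ == "__main__":
    # Made-up values for two donors, for illustration only.
    actual = [800, 700]
    formula = [760, 735]
    imaging = [900, 630]
    print("formula:", mean_error_ratio(formula, actual))
    print("imaging:", mean_error_ratio(imaging, actual))
```

The method with the lower mean error ratio over the same donors is the more accurate one in this simplified sense.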

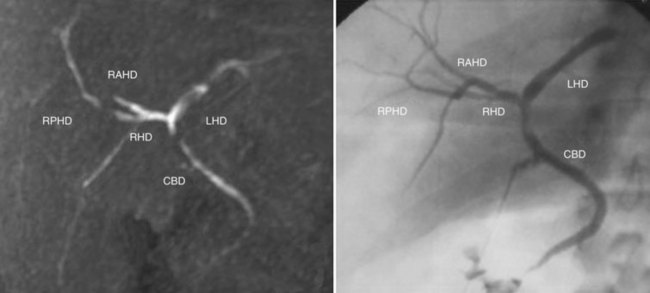

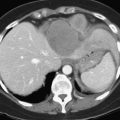

Following successful medical clearance, liver vasculobiliary anatomy and graft-remnant liver volumes are assessed radiologically. MRI that includes arteriography (MRA), venography (MRV), and cholangiography (MRC) is frequently chosen, as it simultaneously defines graft-remnant liver volume, vascular and biliary anatomy, and overall hepatic integrity. MRC with mangafodipir trisodium may be used to enhance visualization of small-order biliary structures (Fig. 98B.1; Cheng et al, 2001; Goldman et al, 2003; Yeh et al, 2004).

Anatomic Variations (See Chapter 1B)

The general segmental anatomy of the liver provides reliable external landmarks for living donation. Using the main hepatic scissura, anatomic resection of the left lateral section and hemilivers is commonplace for a wide variety of indications (see Chapters 90A and 92); however, LDLT depends not only on successful resection but also on preserving intrahepatic and extrahepatic vasculobiliary structures, first, for viability of the donor remnant liver and, second, to facilitate reconstruction and implantation in the recipient. Identification and management of the hepatic anatomy are therefore crucial throughout the donor and recipient procedures. The following sections focus on the anatomic variations that influence LDLT.

Hepatic Artery

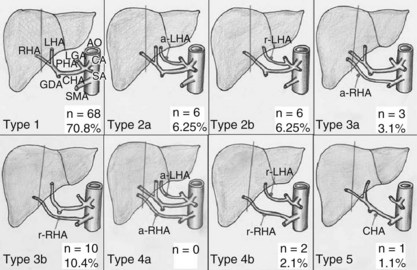

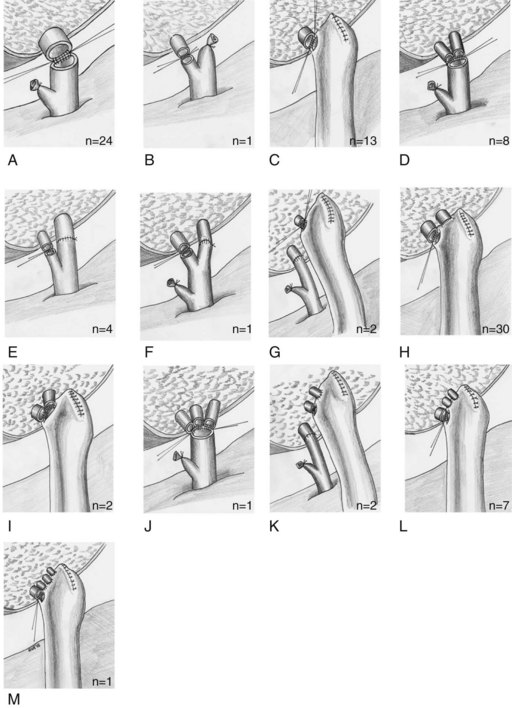

Liver transplantation has produced a thorough understanding of arterial variations and considerable comfort in managing them. It is imperative to delineate the arterial anatomy precisely, because “normal” anatomy is present in just over half of the population (Gruttadauria et al, 2001; Hardy & Jones, 1994; Hiatt et al, 1994; Kawarada et al, 2000). The described arterial anatomic variations and their frequency are depicted in Figure 98B.2 (Varotti et al, 2004).

Arterial anatomy has considerable impact on both donor candidacy and recipient surgical management. Experience has shown that a totally replaced right or left hepatic artery simplifies, rather than complicates, donor hepatectomy, because greater vessel length may be obtained. Rarely, imaging identifies two arteries supplying the graft. Although back-table reconstruction is possible (Marcos et al, 2001a, 2003), it may be prudent to evaluate other potential donors if the surgical team has concerns about the recipient procedure.

Portal Vein

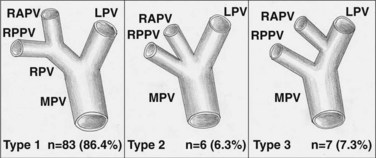

Clinically significant portal variations are less common than arterial variations (Nakamura et al, 2002) but have equal technical implications for both the donor and recipient procedures. These variations involve the branching of the right portal vein into sectorial branches. Instead of a bifurcation into left and right portal trunks, the right anterior and posterior sectorial branches may arise separately, effectively forming a portal trifurcation (Fig. 98B.3). When these sectorial branches arise immediately adjacent to each other, it is sometimes possible to leave a common wall between them to allow a single anastomosis in the recipient; however, sectorial branches that are widely separated must be taken individually to avoid compromising portal flow to the remnant left lobe. Using such a lobe for LDLT therefore requires extensive reconstruction with a bifurcated interposition graft, such as the recipient portal vein bifurcation (see Fig. 98B.3; Varotti et al, 2004). For this reason, many centers may choose to find a donor with less complex anatomy.

Biliary Anatomy

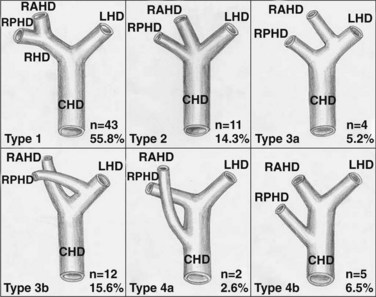

Biliary anatomic variations (Fig. 98B.4) tend to mirror those of the associated vascular structures, especially the portal vein (Lee et al, 2004), but they may have greater surgical impact, particularly in right-lobe LDLT.

The standard anatomy consists of a confluence of the left and right hepatic ducts to form the common hepatic duct. Usually, the segment II and III ducts join in the umbilical fissure, and dividing the hilar plate in this area provides a single duct for anastomosis (Renz et al, 2000). During left-lobe donation, care must be taken to avoid injury to a right posterior sectorial duct that crosses the Cantlie line to join the left hepatic duct at the base of segment IV (see Fig. 98B.4).

Right-lobe biliary anatomy may be quite complex. Only half of donors have a single right hepatic duct (Kawarada et al, 2000), and even a single duct may be short, particularly because it is divided proximally in the interest of donor safety. Logically, the incidence of biliary complications increases with more complex anatomy and anastomoses (Gondolesi et al, 2004a). Perhaps the most difficult variation is a right posterior sectorial duct draining into the left hepatic duct, which may be inadvertently transected because it lies cephalad and posterior to the right portal vein. Because of this location, the duct is particularly difficult to reconstruct once the portal vein has been anastomosed in the recipient.

Hepatic Veins

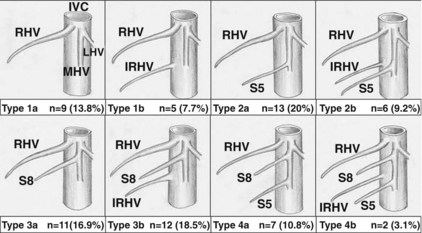

The venous drainage of the right lobe is more complex than that of the left. Typically, the right hepatic vein is the main venous outflow of the right hemiliver, but variant veins may drain individual liver segments (Fig. 98B.5). For this reason, surgical decision making in many centers has evolved from considering the right liver as a whole to considering segmental venous drainage.

Although still somewhat controversial, it is generally agreed that venous structures 5 to 10 mm in diameter constitute significant drainage of the graft; therefore identifying and characterizing these structures has significance in LDLT. As illustrated in Figure 98B.5, imaging frequently identifies significant veins draining the anterior and posterior sectors. Those veins draining the posterior sector directly into the cava (e.g., segment VI inferior vein) may be preserved and reimplanted (Hwang et al, 2004). Anterior sector (segment V and VIII) veins drain into the middle hepatic vein. These may require preservation and later reconstruction in cases of marginal graft-recipient weight ratio (GRWR) and/or significant recipient portal hypertension (Sugawara & Makuuchi, 2001).

Graft Size and Small-for-Size Syndrome

We are only beginning to understand the unique ability of the liver to regenerate after injury or resection (see Chapter 5). Since the genesis of LDLT, one persistent problem has been posttransplantation hepatic insufficiency resulting from small graft mass (Lo et al, 1999). This underscores the importance of accurate graft estimation in the donor, especially because total liver volume relative to body size and the configuration of the liver vary significantly (Gondolesi et al, 2004b).

Predonation imaging can accurately estimate the total donor liver volume and the volume of the proposed graft. Formulas to estimate expected liver volume have also been developed (Yoshizumi et al, 2003) and are slightly superior to imaging in donor screening (Salvalaggio et al, 2005). Many centers use a combination of techniques to screen and then confirm the graft weight, which is then compared with the weight of the potential recipient. The ratio of the estimated weight of the donor liver graft to the weight of the recipient, expressed as a percentage, is the GRWR.
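Written out, the definition above is a simple ratio. In the worked equation below, the symbols W_graft and W_recipient are introduced for illustration, both weights are expressed in the same unit (grams), and the 700 g graft and 70 kg recipient are hypothetical values.

$$
\mathrm{GRWR}\ (\%) = \frac{W_{\mathrm{graft}}}{W_{\mathrm{recipient}}} \times 100,
\qquad
\frac{700\ \mathrm{g}}{70\,000\ \mathrm{g}} \times 100 = 1.0\%
$$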

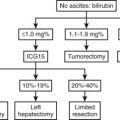

It is well established that when the GRWR is less than 0.8%, the recipient is at significant risk of small-for-size syndrome (SFSS) (Sugawara et al, 2001). SFSS is posttransplantation hepatic insufficiency presenting as prolonged cholestasis, coagulopathy, and ascites in the absence of hepatic vascular insufficiency (see Chapter 100). Because SFSS results in death or retransplantation in half of affected patients, the right lobe has over time become the preferred graft in adult LDLT. The exception is when a very large donor gives to a smaller recipient, in which case a left-lobe graft may be of adequate mass.
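The 0.8% threshold above lends itself to a simple screening check. The sketch below computes GRWR from a graft weight in grams and a recipient weight in kilograms and flags values below the threshold. The function names are hypothetical, and the adjustable `threshold` parameter only reflects the observation that sicker recipients may need a GRWR above 0.8%; no specific higher value is implied.

```python
SFSS_THRESHOLD_PERCENT = 0.8  # GRWR below this carries significant SFSS risk (Sugawara et al, 2001)


def grwr_percent(graft_weight_g: float, recipient_weight_kg: float) -> float:
    """Graft-to-recipient weight ratio, expressed as a percent."""
    if graft_weight_g <= 0 or recipient_weight_kg <= 0:
        raise ValueError("weights must be positive")
    recipient_weight_g = recipient_weight_kg * 1000.0  # convert to grams so units match
    return graft_weight_g / recipient_weight_g * 100.0


def at_risk_of_sfss(
    graft_weight_g: float,
    recipient_weight_kg: float,
    threshold: float = SFSS_THRESHOLD_PERCENT,
) -> bool:
    """True if the GRWR falls below the threshold associated with SFSS."""
    return grwr_percent(graft_weight_g, recipient_weight_kg) < threshold


if __name__ == "__main__":
    # Hypothetical pairings for illustration.
    print(at_risk_of_sfss(500, 80))  # GRWR 0.625% -> True
    print(at_risk_of_sfss(700, 70))  # GRWR 1.0% -> False
```

A screen like this only restates the arithmetic; the text makes clear that disease severity and portal hemodynamics also bear on whether a given GRWR is adequate.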

Additional factors contribute to recipient SFSS, including advanced cirrhosis, portal hypertension, and the associated hyperdynamic splanchnic circulation; such recipients require a GRWR greater than 0.8% (Ben-Haim et al, 2001). The splanchnic hemodynamic mechanisms responsible for SFSS are poorly understood (Asakura et al, 1998; Gondolesi et al, 2002b; Huang et al, 2000; Niemann et al, 2002; Piscaglia et al, 1999). To reduce the hyperdynamic portal circulation and abrogate graft injury, some centers have studied adjunctive splenectomy, portosystemic shunt, or octreotide infusion, with mixed results (Masetti et al, 2004; Troisi & de Hemptinne, 2003).

Optimizing graft outflow, although important for liver graft function in general, is also a possible way to reduce hyperdynamic liver injury. In right-lobe grafts, drainage of the anterior sector to the middle hepatic vein, via segment V and VIII veins, is variable. In patients with a low GRWR, surgical reconstruction of these middle hepatic vein tributaries may reduce hepatic congestion and potentially prevent SFSS (Sugawara et al, 2004). Venous reconstruction may take the form of including the main trunk of the middle hepatic vein with the graft, or interposition grafting can be used to recreate the intrahepatic middle hepatic vein.

Another proposed method of avoiding SFSS is increasing liver mass by using dual liver grafts. One center reported its preliminary experience with dual grafts, one right lobe without the middle hepatic vein and one left lateral section, in adult-to-adult LDLT (Chen et al, 2009) and concluded that dual grafts prevent SFSS in adult-to-adult LDLT while securing donor safety. Although this strategy reduces individual donor risk by avoiding extended resection, it places two individuals at risk to perform a safer transplantation in one recipient; further study will clarify the utility of this approach in LDLT.

Donor and Recipient Surgical Procedures (See Chapter 90D)

General Principles

Donor safety must be the paramount concern when contemplating liver donation for LDLT. Despite enormous advances in surgical conduct and patient care in hepatobiliary surgery, live donation places a person with no medical indication for surgery in a potentially life-threatening situation for the medical benefit of another. In addition, unlike resection for pathology, LDLT demands equal concern for the portion of the liver that remains in the donor (the remnant liver) and for the portion being donated; identifying and managing the hepatic vasculobiliary anatomy therefore has particular significance to ensure that 1) the remnant liver is fully viable and able to regenerate and continue normal function in the donor, and 2) the donated portion is anatomically amenable to successful transplantation in the recipient. Furthermore, to avoid ischemic injury to the liver graft, hepatic inflow occlusion is generally avoided during parenchymal transection, although some centers find it of no clinical consequence (Imamura et al, 2004; Miller et al, 2004b).

One clinical advantage of LDLT is the ability to choreograph not only the timing of transplantation within the recipient’s medical course but also the donor and recipient procedures themselves, reducing graft cold ischemia time. The procedures are performed by two separate surgical teams in adjacent rooms, so that donor safety is optimized and the liver graft is transferred efficiently. Typically, the donor operation takes longer than the recipient hepatectomy, so the donor procedure is begun first; however, when there is concern that the recipient operation could be aborted, as in recipients with hepatic malignancy, the sequence is reversed. When the recipient is being transplanted for hepatic malignancy, the recipient is first explored surgically to exclude disease undetected on preoperative studies, and the donor procedure is initiated only if no recipient contraindications are found (Gondolesi et al, 2002a).

The combination of ever-increasing experience in living-donor hepatectomy and minimally invasive liver surgery, and the universal desire to minimize donor morbidity, has led to the application of minimally invasive techniques in liver transplantation. Thus far, these advances have been applied only to liver donation surgery. Although experience with minimally invasive and robotic-assisted renal transplantation is expanding (Hoznek et al, 2002; Rosales, 2010), laparoscopic liver recipient procedures seem unlikely to be feasible because of graft size and operative domain requirements.

In the following sections, both the open and minimally invasive liver-donation procedures will be described. These sections will focus on laparoscopic variations, as the open procedures are reviewed in detail in Chapter 90D.

Left Lateral Section Transplantation

Open Donor Procedure

Once anatomic variations identified preoperatively are confirmed, the gastrohepatic omentum is divided. During this time, dissection proceeds carefully to preserve a replaced left hepatic artery arising from the left gastric artery to facilitate reconstruction during the recipient procedure (Kostelic et al, 1996). If present, the parenchymal bridge overlying the umbilical fissure between segments III and IV is divided, exposing the left portal structures within the umbilical fissure. Vascular dissection is approached at the origin of the left hepatic artery and proceeds distally. If there is more than one artery supplying segments II and III, all are carefully preserved in the donor for later reconstruction (Soin et al, 1996; Suzuki et al, 1971). The artery supplying segment IV is sacrificed, unless it originates proximal enough to be preserved and yet provide adequate arterial length to the graft, or if it arises from the right hepatic artery (Fig. 98B.6). The resultant ischemia of segment IV is tolerated well in the donor with expected radiographic postsurgical changes (Shoji et al, 2003).

The full length of the left portal vein is isolated by sacrificing the portal branches to segment IV medially and to the caudate lobe dorsally (see Fig. 98B.6; Broelsch et al, 1991). The left hepatic vein is isolated, including division of the ligamentum venosum, while the middle hepatic vein is carefully preserved, because the left and middle veins unite within, or just cranial to, their exit from the hepatic parenchyma. The segment II and III bile duct is divided sharply at the hilar plate at the base of the umbilical fissure, and the distal end is oversewn. The parenchyma between segment IV and segments II and III is divided with the dissecting device of the surgeon's choice, without hepatic inflow occlusion.

Once the graft is separated from the remnant right trisegment, the left hepatic artery is ligated proximally and divided, the left portal vein and left hepatic vein are divided (their cut ends are oversewn in the donor), and the graft is removed. The graft is taken promptly to the back table, where it is flushed with preservation solution and prepared for implantation (Tanaka et al, 1993; Yamaoka et al, 1995).

Laparoscopic Donor Procedure

Cherqui and colleagues (2002) first described the laparoscopic donor procedure, which consists of removing segments II and III together with the left arterial and portal inflow, the left bile duct, and the left hepatic vein, without hepatic inflow occlusion (see Chapter 90E). The operative strategy and extent of vascular dissection parallel those of the open procedure.

Recipient Procedure

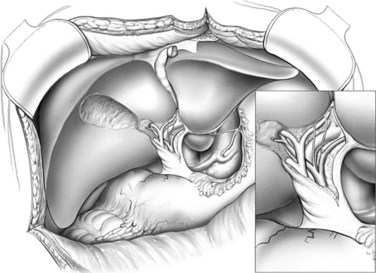

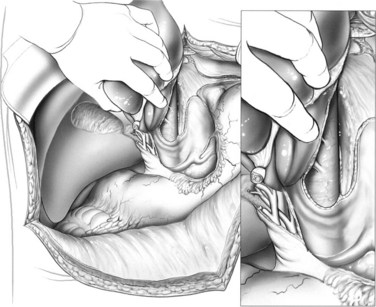

As in all varieties of LDLT, recipient hepatectomy is performed with both preservation of the recipient vena cava and meticulous dissection of the hepatic hilum to preserve maximum length of the hepatic artery and portal vein (Fig. 98B.7).

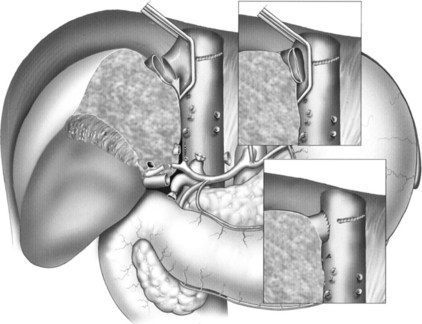

Once the diseased liver is removed, the recipient vena cava is occluded; this allows the orifices of the hepatic veins to be joined sharply to create a common orifice that matches the donor left hepatic vein. This maneuver is pivotal in creating the maximum achievable graft outflow tract. The venous anastomosis is created in a wide, triangular fashion (Fig. 98B.8).

The venous anastomosis is completed in a manner that allows later graft flushing before reperfusion. The graft left portal vein is anastomosed to the recipient portal bifurcation or, if indicated, to the level of the mesenteric-splenic confluence, using an autologous venous interposition graft. To prevent anastomotic coarctation, the portal anastomosis is performed with interrupted sutures (Emre, 2001) or with a running suture tied with a large growth factor. The hepatic arterial reconstruction is performed under high magnification, using surgical loupes or an operating microscope, typically by creating a branch patch at the recipient hepatic artery bifurcation to match the size of the graft hepatic artery (see Fig. 98B.8; Stevens et al, 1992; Wei et al, 2004). Biliary-enteric continuity is achieved with a Roux-en-Y hepaticojejunostomy (Fig. 98B.9).

Left Hepatic Transplantation

Open Donor Procedure

The approach for the donor procedure is similar to that for the left lateral section, although the left medial section is not devascularized. Following exposure and mobilization of the left hemiliver, cholecystectomy is performed, and the left hepatic artery and left portal vein are isolated at their origins. Aberrant arterial anatomy, including a large segment IV artery originating from the right hepatic artery, is identified and preserved for possible later reconstruction. The hilar plate is lowered at the biliary confluence, and a cholangiogram via the cystic duct confirms both the biliary anatomy and the site of safe left hepatic duct transection. The left hepatic duct is isolated and divided, and the remnant side is secured. In some donors the posterior right hepatic duct crosses the Cantlie line to enter the left hepatic duct; care must therefore be taken to divide the left hepatic duct to the left of this anomalous entry (Soejima et al, 2003a; Varotti et al, 2004).

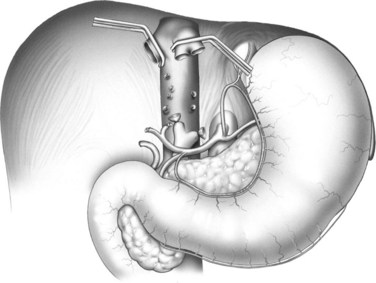

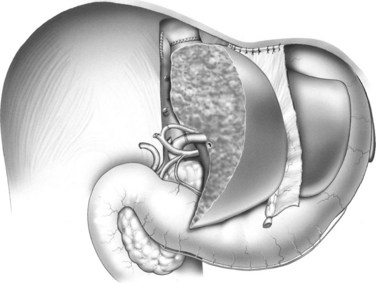

Many centers include the caudate lobe with a left-lobe graft, given its left portal inflow and biliary drainage and the additional hepatic mass it provides (Fig. 98B.10; Abdalla et al, 2002; Miyagawa et al, 1998; Takayama et al, 2000).

FIGURE 98B.10 Dissection of the caudate lobe off of the vena cava during preparation of a left-lobe graft.

The left lobe is elevated, the caudate lobe is dissected off the vena cava to the base of the Cantlie line, and the caudate vein proper is encircled. The left and middle hepatic veins are isolated above the liver, using an approach along the ligament of Arantius (ligamentum venosum) to simplify the dissection. Temporary inflow occlusion may be used to delineate the transection plane at the Cantlie line. The parenchyma is then transected, a step that may be facilitated by the so-called hanging maneuver (Belghiti et al, 2001; Broering et al, 1998). The intrahepatic middle hepatic vein is divided as it courses into segment IVa, allowing procurement of the left and middle hepatic veins with the graft.

Laparoscopic Donor Procedure

There is extensive global clinical experience with left-lobe LDLT. Although some centers perform laparoscopic donor hepatectomy for the left lateral section and the right lobe, only limited experience with minimally invasive left-lobe donation has been reported (Kurosaki et al, 2006). That report reviewed 10 left-lobe donor procedures (5 including the caudate lobe) and showed good donor and recipient outcomes. The technique used was video-assisted.

Laparoscopic left hepatectomy is established for other clinical indications (Koffron et al, 2007b). Its techniques are well defined, but their application to left-lobe donation is complicated by management of the caudate lobe. It therefore seems likely that the extent to which this donor procedure can be performed minimally invasively will fall between that of left lateral sectionectomy and that of right hemihepatectomy.

Recipient Procedure

As in all forms of LDLT, the recipient hepatectomy is performed with caval preservation, and the portal structures are dissected distally in the hepatic hilum. The left hepatic vein–middle hepatic vein confluence of the liver graft is typically anastomosed end-to-end to the same in the recipient. If there is a vessel size discrepancy or concern for torsion or kinking of the venous outflow, direct anastomosis to the vena cava may be performed (Egawa et al, 1997; Makuuchi & Sugawara, 2003; Sugawara et al, 2002b).

Right Hepatic Transplantation

Open Donor Procedure

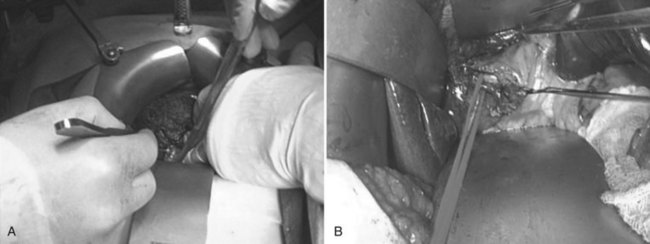

Access to the abdomen is gained through either a right subcostal incision or a bilateral subcostal incision with upper midline extension. Following exploration, the teres and falciform ligaments are divided close to the abdominal wall to facilitate their reconstruction after right-lobe donation and to prevent medial-posterior rotation and torsion of the remnant liver (Miller et al, 2001). Cholecystectomy is performed, and the cystic duct is cannulated for later cholangiography. With anteromedial retraction of the extrahepatic biliary system, the peritoneum and neurolymphatic tissue of the porta hepatis are approached posterolaterally. This allows identification and isolation of the right hepatic artery from the lateral border of the common hepatic duct to its entrance into the right lobe (Fig. 98B.11; Kawarada et al, 2000).

Dividing the right hepatic duct is a crucial step. If the right hepatic duct exists as a single structure, precise transection is necessary to obtain a single orifice, just proximal to its division into sectorial ducts, while avoiding encroachment on the biliary system of the remnant liver (Huang et al, 1996; Varotti et al, 2004). To ensure accurate and safe transection, fluoroscopic cholangiographic guidance allows identification, marking, and precise transection of the right hepatic duct. The duct is transected sharply, avoiding overdissection and ischemic or thermal injury, which may lead to biliary complications in the recipient (Gondolesi et al, 2004a).

The caudate process is divided over the IVC at the base of the Cantlie line using electrocautery; this allows passage of an umbilical tape from above the liver, between the middle hepatic vein and right hepatic vein, behind the liver, and up through the incised caudate process. The umbilical tape is lifted during hepatic parenchymal transection, guiding the division down the line of Cantlie, greatly facilitating the division of the most posterior parenchyma and simultaneously protecting the vena cava from injury (Belghiti et al, 2001; Broering et al, 1998).

In rapid sequence, the graft is devascularized and flushed. The right hepatic artery is ligated proximally and divided distal to the ligature to allow back-bleeding. The right portal vein is then clamped (or stapled) and divided in a manner that does not compromise portal inflow to the remnant left lobe. The right hepatic vein, middle hepatic vein, and any preserved short hepatic veins are clamped or stapled and then divided, and the graft is removed. While the graft is flushed with preservation solution and any necessary vascular reconstructions are performed (Nakamura et al, 2002), the donor procedure is completed. This includes suturing the clamped vessel stumps, ensuring there is no bile leakage, performing a completion cholangiogram, and confirming the vascularity of the remnant liver, followed by reconstruction of the falciform ligament and closure.

Laparoscopic Donor Procedure

Because of the right lobe’s large size, fragility, and the consequences of graft injury, minimally invasive donation of the right lobe is usually performed by using a hybrid technique of liver resection (Koffron et al, 2007a). This technique of laparoscopic-assisted liver resection uses laparoscopic techniques to mobilize the liver while avoiding the morbid right subcostal incision. The hilar dissection and parenchymal transection proceed laparoscopically to the limits of safety or surgeon comfort level, at which time standard open techniques are used via the hand-assisted/extraction incision. This technique of right-lobe donation was first described by Koffron and colleagues in 2006 and is briefly summarized.

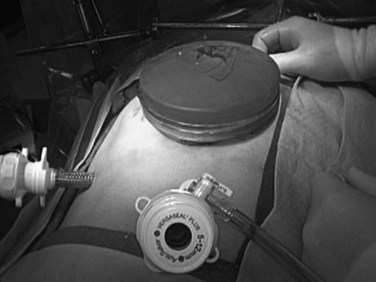

Initial maneuvers

The donor is placed under general anesthesia in the supine position with arms adducted. Pneumoperitoneum (CO2 at 12 mm Hg) is established through a 12-mm umbilical port, and the abdomen is explored with a 30-degree 10-mm laparoscope for optimal visualization of the retrohepatic structures. Once the liver is visualized, one additional 10-mm port is placed at the right midclavicular line, and a 5-cm subxiphoid midline incision is created for hand assistance during right-lobe manipulation and for graft extraction using a hand-port device (Fig. 98B.12). This configuration enables the surgeon, who stands on the left side of the patient, to use the hand port for graft manipulation while simultaneously using the midclavicular port for dissection.

Right-lobe mobilization

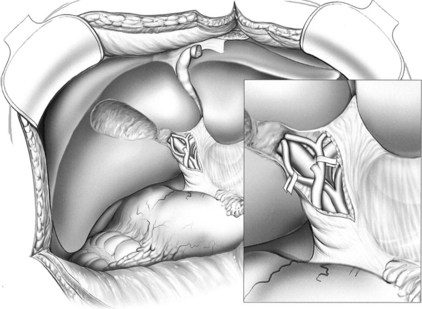

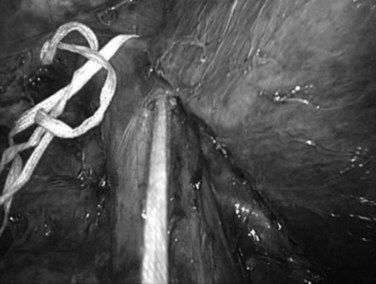

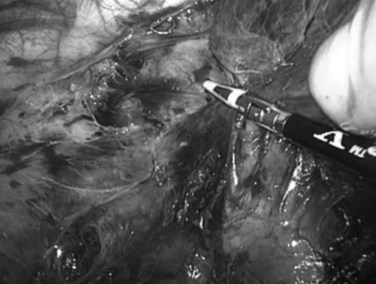

Next, the ligamentum teres and the falciform, coronary, and right triangular ligaments are divided with a coaptive laparoscopic tissue-sealing device. The hepatic bare area is completely mobilized, and the right lobe is elevated to expose the length of the retrohepatic IVC. The posterior vena cava ligament (Fig. 98B.13) and short hepatic veins are divided using a secure method, such as a sealing device, clips, or a stapler (Figs. 98B.14 and 98B.15), to separate the right lobe from the IVC and expose the right hepatic vein (RHV) as it enters the IVC. An umbilical tape is passed around the RHV (Fig. 98B.16), the right lobe is returned to its anatomic position, and a cholecystectomy is performed.

FIGURE 98B.13 Division of the posterior vena cava ligament. Right hemiliver is elevated anteromedially.

FIGURE 98B.14 Division of short hepatic and caudate veins following division of the posterior vena cava ligament.

Hilar dissection and liver resection

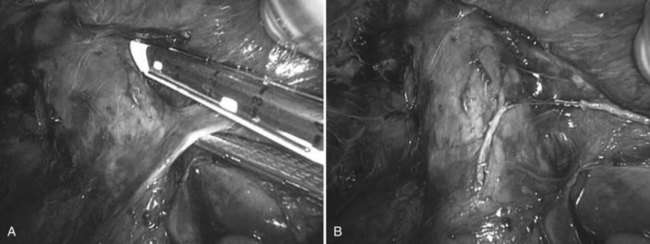

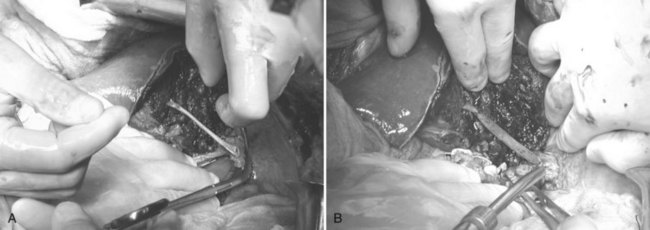

The right hepatic artery (RHA) and right portal vein (RPV) are isolated posterolaterally, with hand-assisted laparoscopy, as they enter the right lobe. Through the hand-assisted/extraction incision, under direct vision (Fig. 98B.17), the cystic duct is cannulated, a cholangiogram confirms the biliary anatomy, and the right hepatic duct is isolated under fluoroscopic cholangiographic guidance. The right hepatic duct is then transected, leaving a 4-mm stump on the donor hepatic confluence, which is oversewn. The RHA and RPV branches are further dissected under direct vision to optimize vessel length and enhance safety (see Fig. 98B.17B). Hepatic inflow occlusion is not used, and the central venous pressure is maintained at 2 to 4 mm Hg in preparation for parenchymal transection. Through the hand-assisted/extraction incision, the liver parenchyma is divided at the Cantlie line (Fig. 98B.18) in an inferior/anterior to superior/posterior direction using the transection method of choice. The middle hepatic vein tributaries from segments V and VIII are secured and divided using a method that allows later reconstruction, such as sutures or staples.

The retrohepatic umbilical tape is used toward the end of the parenchymal transection in the method of Belghiti (Belghiti et al, 2001; Ogata et al, 2007) to bring the transection plane closer to the abdominal wall. Once the right liver has been separated, the RHA is ligated just lateral to the common bile duct and transected sharply with scissors distal to the ligature through the hand-assisted incision, allowing back-bleeding from the graft side. Immediately, the RPV is stapled using an Endo GIA (gastrointestinal anastomosis) stapler (Covidien, Mansfield, MA) (Fig. 98B.19A), the right lobe is retracted laterally, and the RHV is stapled and divided at the IVC with the stapler (Fig. 98B.19B). The RPV is then transected sharply distal to the staple line, which decompresses the right lobe by allowing back-bleeding through the graft side of the RPV. The right lobe is gently extracted through the subxiphoid hand-assist incision (Fig. 98B.19C). A completion cholangiogram is performed, and a closed suction drain is placed and exteriorized through the right subcostal port site. The port sites and the extraction incision are closed in layers.

The initial reported series of four donors suggested equivalent donor and recipient outcomes with this technique compared with the standard open technique (Koffron et al, 2006). The same group later performed a retrospective comparative analysis of 33 laparoscopic donors and 33 open donors (Baker et al, 2009). This analysis showed that the laparoscopic approach can be performed as safely as the open technique, with reduced operative times, a trend toward reduced blood loss, and comparable donor complication rates, length of stay, and hospital and surgical costs. In addition, the laparoscopic procedure provided equivalent grafts, with equal recipient patient and graft survival and complication rates. Although greater experience and wider use of this technique are needed to confirm these findings, such an approach may benefit donors and could increase willingness to donate, as has been demonstrated in kidney transplantation.

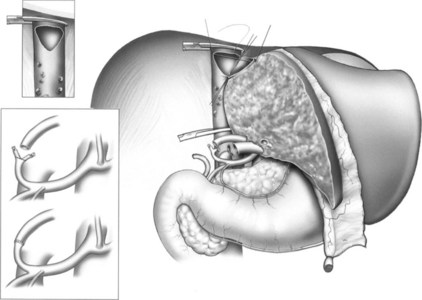

Recipient Procedure

As in left-lobe LDLT, total hepatectomy is performed with caval preservation (Fig. 98B.20; Tzakis et al, 1989), and the structures of the portal triad are dissected high in the hilum to preserve options for subsequent reconstruction. When a duct-to-duct biliary reconstruction is anticipated, overdissection of the recipient common bile duct should be avoided, because it may devascularize the proximal duct, the site of the potential anastomosis to the graft hepatic duct. In addition, preserving the distal portion of the recipient middle hepatic vein, dissected from the cranial parenchyma of segment IVa as the devascularized liver is removed, leaves it available for reconstruction of the graft middle hepatic vein. This final portion of the hepatectomy is facilitated by complete occlusion of the cava, which allows division and suture control of difficult retrohepatic structures.

Once the liver is removed, or in the case of recipient instability, partial caval occlusion may be chosen to maintain venous return during implantation. As in whole-liver transplantation, venovenous bypass may be used as an adjunct to maintain hemodynamic stability, reduce blood loss, or prevent splanchnic venous congestion during the anhepatic phase (Fan et al, 2003; Grewal et al, 2001). After the diseased liver is removed and hemostasis is ensured, the graft is placed in the hepatic fossa and the venous reconstruction is planned.

The right hepatic vein is reconstructed in a manner that allows maximal venous outflow. This is achieved by anastomosis either to the residual cuff of the recipient right hepatic vein or to a cavotomy created by extending the right hepatic vein orifice (see Fig. 98B.20; Liu et al, 2003; Sugawara & Makuuchi, 2001). This reconstruction should anticipate both the acute enlargement of the graft with reperfusion and engorgement and its delayed enlargement with hepatic regeneration.

Compensatory hepatic regeneration occurs within the space confined by the vena cava, diaphragm, and lateral thoracoabdominal wall. As the hepatic graft enlarges, this can change the geometry of the venous anastomosis over time and can potentially lead to outflow obstruction (Hata et al, 2004; Humar et al, 2004; Olthoff, 2003). Accessory inferior hepatic veins over 5 mm in diameter are anastomosed directly to the side of the vena cava (see Fig. 98B.20).

Once the dorsal-most hepatic veins have been addressed, reconstruction of segment V or VIII middle hepatic vein tributaries, if required, can be performed (Fig. 98B.21). Many reconstruction techniques are available, depending on the surgical anatomy and vessel availability (Kinkhabwala et al, 2003; Marcos et al, 2001b; Sugawara et al, 2004). Vascular interposition grafts, if needed, are most easily placed during back-table preparation of the graft; options include cadaveric iliac vein, recipient saphenous vein, preserved vascular allograft, and synthetic vascular conduit.

The donor right portal vein is anastomosed to the recipient main or right portal vein, accounting for size discrepancy and/or vessel length. As in whole-organ transplantation, recipient portal vein thrombosis is treated by thromboendovenectomy or portal vein resection and venous interposition graft (Dumortier et al, 2002; Kadry et al, 2002; Moon et al, 2004). The donor right hepatic artery usually is anastomosed to the recipient right or proper hepatic arteries in an attempt to prevent redundancy or kinking, which may occur as the arterial spasm subsides following reperfusion.

The method of biliary reconstruction depends on the size, number, and configuration of the donor and recipient ducts. Options include direct duct-to-duct anastomosis, Roux-en-Y hepaticojejunostomy, or a combination of these techniques in cases of biliary complexity (Fig. 98B.22; Azoulay et al, 2001; Testa et al, 2000).

Biliary Complications (See Chapter 100)

Biliary complications remain the largest contributor to morbidity following LDLT. Despite improved understanding of the anatomy and refinements in both donor and recipient surgical techniques, rates of biliary leak and stenosis range from 20% to 40% (Gondolesi et al, 2004a; Pomfret, 2003) and have been reported as high as 60% (Kawachi et al, 2002). The A2ALL cohort study reported a 31.8% biliary leak rate across the nine participating transplant centers (Freise et al, 2008). Studies comparing methods of biliary reconstruction found no difference in overall complications, but one advantage of duct-to-duct reconstruction is that it allows future endoscopic access for managing these complications. Leaks generally manifest early after transplantation (Popescu et al, 1994) and are associated with significant morbidity and mortality (Gondolesi et al, 2004a). Bile leak from the cut liver surface may be treated successfully with percutaneous drainage, but anastomotic leaks invariably require endoscopic and/or operative management.

As in DDLT, biliary strictures are generally suspected from a rise in cholestatic liver enzymes. Evaluation usually involves ultrasonography (US), magnetic resonance cholangiography (MRC), and in some cases direct cholangiography, either endoscopic or percutaneous transhepatic (PTC). The treatment strategy is similar to that for biliary stricture in general (see Chapter 42A), although operative management in LDLT recipients is technically difficult because of the adjacent vascular reconstructions.

Postoperative Care of Living Liver Donors

Despite meticulous surgical technique and postoperative care, right-lobe donor hepatectomy is associated with considerable morbidity, with up to 50% of donors experiencing complications in some series (Pomfret, 2003). The A2ALL retrospective cohort study found that of 393 donors, 82 (21%) had one complication and 66 (17%) had two or more complications. Although most complications were of low-grade severity, such as respiratory and wound-related complications, a significant proportion were severe (26%), life threatening (2%), or even fatal (0.8%; Ghobrial et al, 2008).

Some degree of transient hepatic insufficiency is clinically evident after surgery, presenting as a prolonged prothrombin time and nonobstructive cholestasis. Management of biliary leakage after donor right hepatectomy is similar to that after right hepatectomy for other indications, but the surgical team should be aware that the understandable reluctance to reoperate on a donor can delay a necessary reoperation. Vascular complications such as hemorrhage and portal vein thrombosis are rare but require rapid detection and surgical correction to avoid injury to the remnant liver. Fortunately, the uncommon instances of biliary stricture (Lee et al, 2004), incisional hernia, and small bowel obstruction have been treated by standard surgical means.

The psychiatric effects on liver donors are not insignificant. The A2ALL study group identified one or more psychiatric complications in 16 (4.1%) of 392 donors, including three severe complications: suicide, accidental drug overdose, and attempted suicide (Trotter et al, 2007). These findings stress the importance of both preoperative screening and ongoing monitoring of liver donors to prevent such tragic events.

Abdalla EK, et al. The caudate lobe of the liver: implications of embryology and anatomy for surgery. Surg Oncol Clin N Am. 2002;11:835-848.

Abt PL, et al. Allograft survival following adult-to-adult living donor liver transplantation. Am J Transplant. 2004;4:1302-1307.

Asakura T, et al. Doppler ultrasonography in living-related liver transplantation. Transplant Proc. 1998;30:3190-3194.

Axelrod D, et al. Living donor liver transplant for malignancy. Transplantation. 2005;79(3):363-366.

Azoulay D, et al. Duct-to-duct biliary anastomosis in living related liver transplantation: the Paul Brousse technique. Arch Surg. 2001;136:1197-1200.

Baker T, et al. Laparoscopy-assisted and open living donor right hepatectomy: a comparative study of outcomes. Surgery. 2009;146:817-825.

Belghiti J, et al. Liver hanging maneuver: a safe approach to right hepatectomy without liver mobilization. J Am Coll Surg. 2001;193(1):109-111.

Ben-Haim M, et al. Critical graft size in adult-to-adult living donor liver transplantation: impact of the recipient’s disease. Liver Transpl. 2001;7:948-953.

Berenguer M, Wright TL. Treatment strategies for hepatitis C: intervention prior to liver transplant, pre-emptively or after established disease. Clin Liver Dis. 2003;7:631-650.

Boillot O, et al. Orthotopic liver transplantation from a living adult donor to an adult using the right hepatic lobe. Chirurgie. 1999;124:122-131.

Bozorgzadeh A, et al. Impact of hepatitis C viral infection in primary cadaveric liver allograft versus primary living-donor allograft in 100 consecutive liver transplant recipients receiving tacrolimus. Transplantation. 2004;77:1066-1070.

Broelsch CE, et al. Liver transplantation in children from living related donors: surgical techniques and results. Ann Surg. 1991;214:428-437.

Broering DC, et al. Vessel loop-guided technique for parenchymal transection in living donor or in situ split-liver procurement. Liver Transpl. 1998;4:241.

Bruix J, Llovet JM. Prognostic prediction and treatment strategy in hepatocellular carcinoma. Hepatology. 2002;35:519-524.

Campsen J, et al. Outcomes of living donor liver transplantation for acute liver failure: the adult-to-adult living donor liver transplantation cohort study. Liver Transpl. 2008;14(9):1273-1280.

Chen CL, et al. Living-donor liver transplantation: 12 years of experience in Asia. Transplantation. 2003;75(3 Suppl):S6-S11.

Chen Z, et al. Prevent small-for-size syndrome using dual grafts in living donor liver transplantation. J Surg Res. 2009;155(2):261-267.

Cheng YF, et al. Single imaging modality evaluation of living donors in liver transplantation: magnetic resonance imaging. Transplantation. 2001;72:1527-1533.

Cherqui D, et al. Laparoscopic living donor hepatectomy for liver transplantation in children. Lancet. 2002;359(9304):392-396.

de Villa V, Lo CM. Liver transplantation for hepatocellular carcinoma in Asia. Oncologist. 2007;12:1321-1331.

Dumortier J, et al. Eversion thrombectomy for portal vein thrombosis during liver transplantation. Am J Transplant. 2002;2:934-938.

Egawa H, et al. Hepatic vein reconstruction in 152 living-related donor liver transplantation patients. Surgery. 1997;121:250-257.

Emre S. Living donor liver transplantation: a critical review. Transplant Proc. 2001;33:3456-3457.

Everson G, et al. Treatment of advanced hepatitis C with low accelerating dose regimen of antiviral therapy. Hepatology. 2005;42(2):255-262.

Fan ST, et al. Right lobe living donor liver transplantation with or without venovenous bypass. Br J Surg. 2003;90:48-56.

Fishbein T, et al. Use of livers with microvesicular fat safely expands the donor pool. Transplantation. 1997;64:248-251.

Freise CE, et al. Recipient morbidity after living and deceased donor liver transplantation: findings from the A2ALL Retrospective Cohort Study. Am J Transplant. 2008;8(12):2569-2579.

Gaglio PJ, et al. Increased risk of cholestatic hepatitis C in recipients of grafts from living versus cadaveric liver donors. Liver Transpl. 2003;9:1028-1035.

Garcia-Retortillo M, et al. Hepatitis C recurrence is more severe after living donor compared to cadaveric liver transplantation. Hepatology. 2004;40:699-707.

Ghobrial RM, et al. Donor morbidity after living donation for liver transplantation. Gastroenterology. 2008;135(2):468-476.

Goldman J, et al. Noninvasive preoperative evaluation of biliary anatomy in right-lobe living donors with mangafodipir trisodium-enhanced MR cholangiography. Transplant Proc. 2003;35:1421-1422.

Gondolesi G, et al. Hepatocellular carcinoma: a prime indication for living donor liver transplantation. J Gastrointest Surg. 2002;6:102-107.

Gondolesi GE, et al. Venous hemodynamics in living donor right lobe liver transplantation. Liver Transpl. 2002;8(9):809-813.

Gondolesi GE, et al. Biliary complications in 96 consecutive right lobe living donor transplant recipients. Transplantation. 2004;77:1842-1848.

Gondolesi GE, et al. Accurate method for clinical assessment of right lobe liver weight in adult living-related liver transplant. Transplant Proc. 2004;36:1429-1433.

Gondolesi GE, et al. Adult living donor liver transplantation for patients with hepatocellular carcinoma: extending UNOS priority criteria. Ann Surg. 2004;239:142-149.

Grewal HP, et al. Surgical technique for right lobe adult living donor liver transplantation without venovenous bypass or portocaval shunting and with duct-to-duct biliary reconstruction. Ann Surg. 2001;233:502-508.

Gruttadauria S, et al. The hepatic artery in liver transplantation and surgery: vascular anomalies in 701 cases. Clin Transplant. 2001;15:359-363.

Hardy KJ, Jones RM. Hepatic artery anatomy in relation to reconstruction in liver transplantation: some unusual variations. Aust N Z J Surg. 1994;64:437-440.

Hashikura Y, et al. Successful living-related partial liver transplantation to an adult patient. Lancet. 1994;343:1233-1234.

Hata S, et al. Volume regeneration after right liver donation. Liver Transpl. 2004;10:65-70.

Hiatt JR, et al. Surgical anatomy of the hepatic arteries in 1000 cases. Ann Surg. 1994;220:50-52.

Hoznek A, et al. Robotic assisted kidney transplantation: an initial experience. J Urol. 2002;167(4):1604-1606.

Huang TL, et al. Variants of the bile ducts: clinical application in the potential donor of living-related hepatic transplantation. Transplant Proc. 1996;28:1669-1670.

Huang TL, et al. Intraoperative Doppler ultrasound in living-related liver transplantation. Transplant Proc. 2000;32:2097-2098.

Humar A, et al. Liver regeneration after adult living donor and deceased donor split-liver transplants. Liver Transpl. 2004;10:374-378.

Hwang S, et al. The effect of donor weight reduction on hepatic steatosis for living donor liver transplantation. Liver Transpl. 2004;10:721-725.

Ikegami T, et al. Living donor liver transplantation for acute liver failure: a 10-year experience in a single center. J Am Coll Surg. 2008;206(3):412-418.

Imamura H, et al. Pringle’s maneuver and selective inflow occlusion in living donor liver hepatectomy. Liver Transpl. 2004;10:771-778.

Kadry Z, et al. Living donor liver transplantation in patients with portal vein thrombosis: a survey and review of technical issues. Transplantation. 2002;74:696-701.

Kaihara S, et al. Living-donor liver transplantation for hepatocellular carcinoma. Transplantation. 2003;75(3 Suppl):S37-S40.

Kawachi S, et al. Biliary complications in adult living donor liver transplantation with duct-to-duct hepaticocholedochostomy or Roux-en-Y hepaticojejunostomy biliary reconstruction. Surgery. 2002;132:48-56.

Kawarada Y, et al. Anatomy of the hepatic hilar area: the plate system. J Hepatobiliary Pancreat Surg. 2000;7:580-586.

Kinkhabwala MM, et al. Outflow reconstruction in right hepatic live donor liver transplantation. Surgery. 2003;133(3):243-250.

Kobayashi S, et al. Complete recovery from fulminant hepatic failure with severe coma by living donor liver transplantation. Hepatogastroenterology. 2003;50:515-518.

Kocak B, et al. Proposed classification of complications after live donor nephrectomy. Urology. 2006;67(5):927-931.

Koffron A, et al. Laparoscopic-assisted right lobe donor hepatectomy. Am J Transplant. 2006;6(10):2522-2525.

Koffron A, et al. Laparoscopic liver surgery for everyone: the hybrid method. Surgery. 2007;142(4):463-468.

Koffron AJ, et al. Evaluation of 300 minimally invasive liver resections at a single institution: less is more. Ann Surg. 2007;246(3):385-392.

Kostelic JK, et al. Angiographic selection criteria for living related liver transplant donors. AJR Am J Roentgenol. 1996;166:1103-1108.

Kulik L, Abecassis M. Living donor liver transplantation for hepatocellular carcinoma. Gastroenterology. 2004;127(5 Suppl 1):S277-S282.

Kurosaki I, et al. Video-assisted living donor hemihepatectomy through a 12-cm incision for adult-to-adult liver transplantation. Surgery. 2006;139(5):695-703.

Lang H, et al. Extended indications for liver transplantation in HCC with special reference to living donor liver donation. Kongressbd Dtsch Ges Chir Kongr. 2002;119:410-413.

Lee SG, et al. Which types of graft to use in patients with acute liver failure? (A) Auxiliary liver transplant (B) Living donor liver transplantation (C) The whole liver (D) I prefer living donor liver transplantation. J Hepatol. 2007;46:574-578.

Lee SY, et al. Living donor liver transplantation: complications in donors and interventional management. Radiology. 2004;230:443-449.

Lee VS, et al. Liver transplant donor candidates: associations between vascular and biliary anatomic variants. Liver Transpl. 2004;10(8):1049-1054.

Limanond P, et al. Macrovesicular hepatic steatosis in living related liver donors: correlation between CT and histologic findings. Radiology. 2004;230:276-280.

Liu CL, et al. Hepatic venoplasty in right lobe live donor liver transplantation. Liver Transpl. 2003;9:1265-1272.

Lo CM, et al. Minimum graft size for successful living donor liver transplantation. Transplantation. 1999;68:1112-1116.

Lo CM, et al. Lessons learned from one hundred right lobe living donor liver transplants. Ann Surg. 2004;240:151-158.

Lubezky N, et al. Initial experience with urgent adult-to-adult living donor liver transplantation in fulminant hepatic failure. Isr Med Assoc J. 2004;6:467-470.

Makuuchi M, Sugawara Y. Living-donor liver transplantation using the left liver, with special reference to vein reconstruction. Transplantation. 2003;75(3 Suppl):S23-S24.

Marcos A, et al. Right lobe living donor liver transplantation. Transplantation. 1999;68:798-803.

Marcos A, et al. Reconstruction of double hepatic arterial and portal venous branches for right-lobe living donor liver transplantation. Liver Transpl. 2001;7:673-679.

Marcos A, et al. Functional venous anatomy for right-lobe grafting and techniques to optimize outflow. Liver Transpl. 2001;7:845-852.

Marcos A, et al. Hepatic arterial reconstruction in 95 adult right lobe living donor liver transplants: evolution of anastomotic technique. Liver Transpl. 2003;9:570-574.

Masetti M, et al. Living donor liver transplantation with left liver graft. Am J Transplant. 2004;4:1713-1716.

Miller C, et al. Fulminant and fatal gas gangrene of the stomach in a healthy live liver donor. Liver Transpl. 2004;10:1315.

Miller CM, et al. One hundred nine living donor liver transplants in adults and children: a single-center experience. Ann Surg. 2001;234:301-311.

Miller CM, et al. Intermittent inflow occlusion in living liver donors: impact on safety and remnant function. Liver Transpl. 2004;10:244-247.

Miyagawa S, et al. Concomitant caudate lobe resection as an option for donor hepatectomy in adult living related liver transplantation. Transplantation. 1998;66:661-663.

Moon D, et al. Umbilical portion of recipient’s left portal vein: a useful vascular conduit in dual living donor liver transplantation for the thrombosed portal vein. Liver Transpl. 2004;10:802-806.

Morioka D, et al. Outcomes of adult-to-adult living donor liver transplantation: a single institution’s experience with 335 consecutive cases. Ann Surg. 2007;245:315-325.

Nakamura T, et al. Anatomical variations and surgical strategies in right lobe living donor liver transplantation: lessons from 120 cases. Transplantation. 2002;73:1896-1903.

New York State Committee on Quality Improvement in Living Liver Donation. Report to the New York Transplant Council and New York State Department of Health. Report No. 1. Albany, NY: New York State Department of Health; 2002.

Niemann CU, et al. Intraoperative hemodynamics and liver function in adult-to-adult living liver donors. Liver Transpl. 2002;8:1126-1132.

Ogata S, et al. Two hundred liver hanging maneuvers for major hepatectomy: a single-center experience. Ann Surg. 2007;245(1):31-35.

Olthoff KM. Hepatic regeneration in living donor liver transplantation. Liver Transpl. 2003;9(10 Suppl 2):S35-S41.

O’Rourke N, Fielding G. Laparoscopic right hepatectomy: surgical techniques. J Gastrointest Surg. 2004;8:213-216.

Park J, et al. Clinical outcome after living donor liver transplantation in patients with hepatitis C virus–associated cirrhosis. Korean J Hepatol. 2007;13(4):543-555.

Piscaglia F, et al. Systemic and splanchnic hemodynamic changes after liver transplantation for cirrhosis: a long-term prospective study. Hepatology. 1999;30:58-64.

Pomfret EA. Early and late complications in the right-lobe adult living donor. Liver Transpl. 2003;9(10 Suppl 2):S45-S49.

Popescu I, et al. Biliary complications in 400 cases of liver transplantation. Mt Sinai J Med. 1994;61:57-62.

Raia S, et al. Liver transplantation from live donors. Lancet. 1989;2:497.

Renz JF, et al. Biliary anatomy as applied to pediatric living donor and split-liver transplantation. Liver Transpl. 2000;6:801-804.

Rinella M, et al. Dual-echo, chemical shift gradient-echo magnetic resonance imaging to quantify hepatic steatosis: implications for living liver donation. Liver Transpl. 2003;9(8):851-856.

Rosales A, et al. Laparoscopic kidney transplantation. Eur Urol. 2010;57(1):164-167. Epub 2009 Jul 5.

Ryan CK, et al. One hundred consecutive hepatic biopsies in the workup of living donors for right lobe liver transplantation. Liver Transpl. 2002;8:1114-1122.

Salame E, et al. Analysis of donor risk in living-donor hepatectomy: the impact of resection type on clinical outcome. Am J Transplant. 2002;2:780-788.

Salvalaggio P, et al. Liver graft volume estimation in 100 living donors: measure twice, cut once. Transplantation. 2005;80(9):1181-1185.

Schiano TD, et al. Adult living donor liver transplantation: the hepatologist’s perspective. Hepatology. 2001;33:3-9.

Schmeding M, et al. Hepatitis C recurrence and fibrosis progression are not increased after living donor liver transplantation: a single-center study of 289 patients. Liver Transpl. 2007;13(5):687-692.

Shaked A, et al. Incidence and severity of acute cellular rejection in recipients undergoing adult living donor or deceased donor transplantation. Am J Transplant. 2009;9(2):301-308.

Shiffman M, et al. Histologic recurrence of chronic hepatitis C virus in patients after living donor and deceased donor liver transplantation. Liver Transpl. 2004;10(10):1248-1255.

Shoji M, et al. The safety of the donor operation in living-donor liver transplantation: an analysis of 45 donors. Transpl Int. 2003;16:461-464.

Soejima Y, et al. Feasibility of duct-to-duct biliary reconstruction in left-lobe adult-living-donor liver transplantation. Transplantation. 2003;75:557-559.

Soejima Y, et al. Use of steatotic graft in living-donor liver transplantation. Transplantation. 2003;76:344-348.

Soin AS, et al. Donor arterial variations in liver transplantation: management and outcome of 527 consecutive grafts. Br J Surg. 1996;83:637-641.

Stevens LH, et al. Hepatic artery thrombosis in infants: a comparison of whole livers, reduced-size grafts, and grafts from living-related donors. Transplantation. 1992;53:396-399.

Sugawara Y, Makuuchi M. Surgical technique for hepatic venous reconstruction in liver transplantation. Nippon Geka Gakkai Zasshi. 2001;102:794-797.

Sugawara Y, et al. Small-for-size grafts in living-related liver transplantation. J Am Coll Surg. 2001;192:510-513.

Sugawara Y, et al. Living donor liver transplantation for fulminant hepatic failure. Transplant Proc. 2002;34:3287-3288.

Sugawara Y, et al. New venoplasty technique for the left liver plus caudate lobe in living donor liver transplantation. Liver Transpl. 2002;8:76-77.

Sugawara Y, et al. Left liver grafts for patients with MELD score of less than 15. Transplant Proc. 2003;35:1433-1434.

Sugawara Y, et al. Refinement of venous reconstruction using cryopreserved veins in right liver grafts. Liver Transpl. 2004;10:541-547.

Surman OS. The ethics of partial-liver donation. N Engl J Med. 2002;346:1038.

Suzuki T, et al. Surgical significance of anatomic variations of the hepatic artery. Am J Surg. 1971;122:505-512.

Takada Y, et al. Clinical outcomes of living donor liver transplantation for hepatitis C virus (HCV)-positive patients. Transplantation. 2006;81(3):350-354.

Takayama T, et al. Living-related transplantation of left liver plus caudate lobe. J Am Coll Surg. 2000;190:635-638.

Tanaka K, et al. Surgical techniques and innovations in living related liver transplantation. Ann Surg. 1993;217:82-91.

Terrault NA, et al. Outcomes in hepatitis C virus–infected recipients of living donor and deceased donor liver transplantation. Liver Transpl. 2007;13(1):122-129.

Testa G, et al. Biliary anastomosis in living related liver transplantation using the right liver lobe: techniques and complications. Liver Transpl. 2000;6:710-714.

Testa G, et al. From living related to in-situ split liver transplantation: how to reduce waiting-list mortality. Pediatr Transplant. 2001;5:16-20.

Todo S, Furukawa H. Living donor liver transplantation for adult patients with hepatocellular carcinoma: experience in Japan. Japanese Study Group on Organ Transplantation. Ann Surg. 2004;240:451-461.

Troisi R, de Hemptinne B. Clinical relevance of adapting portal vein flow in living donor liver transplantation in adult patients. Liver Transpl. 2003;9:S36-S41.

Trotter JF, et al. Severe psychiatric problems in right hepatic lobe donors for live donor liver transplantation. Transplantation. 2007;83(11):1506-1508.

Tzakis A, et al. Orthotopic liver transplantation with preservation of the inferior vena cava. Ann Surg. 1989;210:649-652.

Uchino S, et al. Steatotic liver allografts up-regulate UCP-2 expression and suffer necrosis in rats. J Surg Res. 2004;120:73-82.

United Network for Organ Sharing (UNOS). The Organ Procurement and Transplantation Network database. 2008. Available at: http://www.unos.org. Accessed December 1, 2008.

Varotti G, et al. Anatomic variations in right liver living donors. J Am Coll Surg. 2004;198:577-582.

Wachs ME, et al. Adult living donor liver transplantation using a right hepatic lobe. Transplantation. 1998;66:1313-1316.

Wei WI, et al. Microvascular reconstruction of the hepatic artery in live donor liver transplantation: experience across a decade. Arch Surg. 2004;139:304-307.

Yamaoka Y, et al. Liver transplantation using a right lobe graft from a living related donor. Transplantation. 1994;57:1127-1130.

Yamaoka Y, et al. Safety of the donor in living-related liver transplantation: an analysis of 100 parental donors. Transplantation. 1995;59:224-226.

Yao FY, et al. A follow-up analysis of the pattern and predictors of dropout from the waiting list for liver transplantation in patients with hepatocellular carcinoma: implications for the current organ allocation policy. Liver Transpl. 2003;9:684-692.

Yeh BM, et al. Biliary tract depiction in living potential liver donors: comparison of conventional MR, mangafodipir trisodium–enhanced excretory MR, and multi-detector row CT cholangiography—initial experience. Radiology. 2004;230:645-651.

Yoshizumi T, et al. A simple new formula to assess liver weight. Transplant Proc. 2003;35:1415-1420.

Zimmerman MA, Trotter JF. Living donor liver transplantation in patients with hepatitis C. Liver Transpl. 2003;9:S52-S57.