Image Processing

History of retinal imaging

The optical properties of the eye that allow image formation prevent direct inspection of the retina. Though existence of the red reflex has been known for centuries, special techniques are needed to obtain a focused image of the retina. The first attempt to image the retina, in a cat, was completed by the French physician Jean Mery, who showed that if a live cat is immersed in water, its retinal vessels are visible from the outside.1 The impracticality of such an approach for humans led to the invention of the principles of the ophthalmoscope in 1823 by Czech scientist Jan Evangelista  (frequently spelled Purkinje) and its reinvention in 1845 by Charles Babbage.2,3 Finally, the ophthalmoscope was reinvented yet again and reported by von Helmholtz in 1851.4 Thus, inspection and evaluation of the retina became routine for ophthalmologists, and the first images of the retina (Fig. 6.1) were published by the Dutch ophthalmologist van Trigt in 1853.5 The first useful photographic images of the retina, showing blood vessels, were obtained in 1891 by the German ophthalmologist Gerloff.6 In 1910, Gullstrand developed the fundus camera, a concept still used to image the retina today7; he later received the Nobel Prize for this invention. Because of its safety and cost-effectiveness at documenting retinal abnormalities, fundus imaging has remained the primary method of retinal imaging.

(frequently spelled Purkinje) and its reinvention in 1845 by Charles Babbage.2,3 Finally, the ophthalmoscope was reinvented yet again and reported by von Helmholtz in 1851.4 Thus, inspection and evaluation of the retina became routine for ophthalmologists, and the first images of the retina (Fig. 6.1) were published by the Dutch ophthalmologist van Trigt in 1853.5 The first useful photographic images of the retina, showing blood vessels, were obtained in 1891 by the German ophthalmologist Gerloff.6 In 1910, Gullstrand developed the fundus camera, a concept still used to image the retina today7; he later received the Nobel Prize for this invention. Because of its safety and cost-effectiveness at documenting retinal abnormalities, fundus imaging has remained the primary method of retinal imaging.

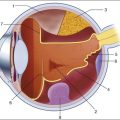

Fig. 6.1 First known image of human retina, as drawn by van Trigt in 1853. (Reproduced from Trigt AC. Dissertatio ophthalmologica inauguralis de speculo oculi. Utrecht: Universiteit van Utrecht, 1853.)

In 1961, Novotny and Alvis published their findings on fluorescein angiographic imaging.8 In this imaging modality, a fundus camera with additional narrow-band filters is used to image a fluorescent dye injected into the bloodstream that binds to leukocytes. It remains widely used, because it allows an understanding of the functional state of the retinal circulation.

The initial approach to depict the three-dimensional (3D) shape of the retina was stereo fundus photography, as first described by Allen in 1964, where multiangle images of the retina are combined by the human observer into a 3D shape.9 Subsequently, confocal scanning laser ophthalmoscopy (SLO) was developed, using the confocal aperture to obtain multiple images of the retina at different confocal depths, yielding estimates of 3D shape. However, the optics of the eye limit the depth resolution of confocal imaging to approximately 100 µm, which is poor when compared with the typical 300–500 µm thickness of the whole retina.10

OCT, first described in 1987 as a method for time-of-flight measurement of the depth of mechanical structures,11,12 was later extended to a tissue-imaging technique. This method of determining the position of structures in tissue, described by Huang et al. in 1991,13 was termed OCT. In 1993 in vivo retinal OCT was accomplished for the first time.14 Today, OCT has become a prominent biomedical tissue-imaging technique, especially in the eye, because it is particularly suited to ophthalmic applications and other tissue imaging requiring micrometer resolution.

History of retinal image processing

Matsui et al. were the first to publish a method for retinal image analysis, primarily focused on vessel segmentation.15 Their approach was based on mathematical morphology and they used digitized slides of fluorescein angiograms of the retina. In the following years, there were several attempts to segment other anatomical structures in the normal eye, all based on digitized slides. The first method to detect and segment abnormal structures was reported in 1984, when Baudoin et al. described an image analysis method for detecting microaneurysms, a characteristic lesion of diabetic retinopathy (DR).16 Their approach was also based on digitized angiographic images. They detected microaneurysms using a “top-hat” transform, a step-type digital image filter.17 This method employs a mathematical morphology technique that eliminates the vasculature from a fundus image yet leaves possible microaneurysm candidates untouched. The field dramatically changed in the 1990s with the development of digital retinal imaging and the expansion of digital filter-based image analysis techniques. These developments resulted in an exponential rise in the number of publications, which continues today.

Current status of retinal imaging

Retinal imaging has developed rapidly during the last 160 years and is a now a mainstay of the clinical care and management of patients with retinal as well as systemic diseases. Fundus photography is widely used for population-based, large-scale detection of DR, glaucoma, and age-related macular degeneration. OCT and fluorescein angiography are widely used in the daily management of patients in a retina clinic setting. OCT has also become an increasingly helpful adjunct in preoperative planning and postoperative evaluation of vitreoretinal surgical patients.18 The overview below is partially based on an earlier review paper.19

Fundus imaging

1. fundus photography (including so-called red-free photography): image intensities represent the amount of reflected light of a specific waveband

2. color fundus photography: image intensities represent the amount of reflected red (R), green (G), and blue (B) wavebands, as determined by the spectral sensitivity of the sensor

3. stereo fundus photography: image intensities represent the amount of reflected light from two or more different view angles for depth resolution

4. SLO: image intensities represent the amount of reflected single-wavelength laser light obtained in a time sequence

5. adaptive optics SLO: image intensities represent the amount of reflected laser light optically corrected by modeling the aberrations in its wavefront

6. fluorescein angiography and indocyanine angiography: image intensities represent the amounts of emitted photons from the fluorescein or indocyanine green fluorophore that was injected into the subject’s circulation.

There are several technical challenges in fundus imaging. Since the retina is normally not illuminated internally, both external illumination projected into the eye as well as the retinal image projected out of the eye must traverse the pupillary plane. Thus the size of the pupil, usually between 2 and 8 mm in diameter, has been the primary technical challenge in fundus imaging.7 Fundus imaging is complicated by the fact that the illumination and imaging beams cannot overlap because such overlap results in corneal and lenticular reflections diminishing or eliminating image contrast. Consequently, separate paths are used in the pupillary plane, resulting in optical apertures on the order of only a few millimeters. Because the resulting imaging setup is technically challenging, fundus imaging historically involved relatively expensive equipment and highly trained ophthalmic photographers. Over the last 10 years or so, there have been several important developments that have made fundus imaging more accessible, resulting in less dependence on such experience and expertise. There has been a shift from film-based to digital image acquisition, and as a consequence the importance of picture archiving and communication systems (PACS) has substantially increased in clinical ophthalmology, also allowing integration with electronic medical records. Requirements for population-based early detection of retinal diseases using fundus imaging have provided the incentive for effective and user-friendly imaging equipment. Operation of fundus cameras by nonophthalmic photographers has become possible due to nonmydriatic imaging, digital imaging with near-infrared focusing, and standardized imaging protocols to increase reproducibility.

Optical coherence tomography imaging

OCT is a noninvasive optical medical diagnostic imaging modality which enables in vivo cross-sectional tomographic visualization of the internal microstructure in biological systems. OCT is analogous to ultrasound B-mode imaging, except that it measures the echo time delay and magnitude of light rather than sound, therefore achieving unprecedented image resolutions (1–10 µm).20 OCT is an interferometric technique, typically employing near-infrared light. The use of relatively long-wavelength light with a very wide-spectrum range allows OCT to penetrate into the scattering medium and achieve micrometer resolution.

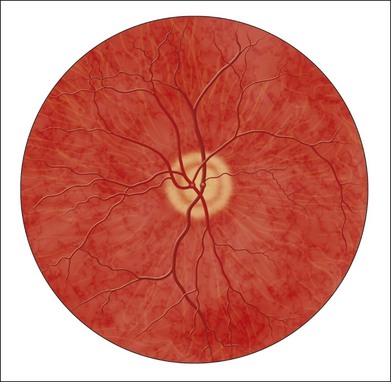

The principle of OCT is based upon low-coherence interferometry, where the backscatter from more outer retinal tissues can be differentiated from that of more inner tissues, because it takes longer for the light to reach the sensor. Because the differences between the most superficial and the deepest layers in the retina are around 300–400 µm, the difference in time of arrival is very small and requires interferometry to measure.21

We see the importance of the choice of a good low-coherence source – with either an incoherent or fully coherent source, interferometry is impossible. Such light can be generated by using superluminescent diodes (superbright light-emitting diodes) or lasers with extremely short pulses, femtosecond lasers. The optical setup typically consists of a Michelson interferometer with a low-coherence, broad-bandwidth light source (Fig. 6.2). By scanning the mirror in the reference arm, as in time domain OCT, modulating the light source, as in swept source OCT, or decomposing the signal from a broadband source into spectral components, as in spectral domain OCT (SD-OCT), a reflectivity profile of the sample can be obtained, as measured by the interferogram. The reflectivity profile, called an A-scan, contains information about the spatial dimensions and location of structures within the retina. A cross-sectional tomograph (B-scan) may be achieved by laterally combining a series of these axial depth scans (A-scan). En face imaging (C-scan) at an acquired depth is possible depending on the imaging engine used.

Fig. 6.2 Schematic diagram of the operation of an optical coherence tomography instrument, emphasizing splitting of the light in two arms, overlapping train of bursts “labeled” based on their autocorrelogram, and their interference after being reflected from retinal tissue as well as from the reference mirror (assuming the time delays of both paths are equal).

Time domain OCT

With time domain OCT, the reference mirror is moved mechanically to different positions, resulting in different flight time delays for the reference arm light. Because the speed at which the mirror can be moved is mechanically limited, only thousands of A-scans can be obtained per second. The envelope of the interferogram determines the intensity at each depth.13 The ability to image the retina two-dimensionally and three-dimensionally depends on the number of A-scans that can be acquired over time. Because of motion artifacts such as saccades, safety requirements limiting the amount of light that can be projected on to the retina, and patient comfort, 1–3 seconds per image or volume is essentially the limit of acceptance. Thus, the commercially available time domain OCT, which allowed collecting of up to 400 A-scans per second, has not yet been suitable for 3D imaging.

Frequency domain OCT

Spectral domain OCT

A broadband light source is used, broader than in time domain OCT, and the interferogram is decomposed spectrally using a diffraction grating and a complementary metal oxide semiconductor or charged couple device linear sensor. The Fourier transform is again applied to the spectral correlogram intensities to determine the depth of each scatter signal.22 With SD-OCT, tens of thousands of A-scans can be acquired each second, and thus true 3D imaging is routinely possible. Consequently, 3D OCT is now in wide clinical use, and has become the standard of care.

Swept source OCT

Instead of moving the reference arm, as with time domain OCT imaging, in swept source OCT the light source is rapidly modulated over its center wavelength, essentially attaching a second label to the light, its wavelength. A photo sensor is used to measure the correlogram for each center wavelength over time. A Fourier transform on the multiwavelength or spectral interferogram is performed to determine the depth of all tissue scatters at the imaged location.22 With swept source OCT, hundreds of thousands of A-scans can be obtained every second, with additional increase in scanning density when acquiring 3D image volumes.

Areas of active research in retinal imaging

Portable, cost-effective fundus imaging

For early detection and screening, the optimal place for positioning fundus cameras is at the point of care: primary care clinics, public venues (e.g., drug stores, shopping malls). Though the transition from film-based to digital fundus imaging has revolutionized the art of fundus imaging and made telemedicine applications feasible, the current cameras are still too bulky, expensive, and may be difficult to use for untrained staff in places lacking ophthalmic imaging expertise. Several groups are attempting to create more cost-effective and easier-to-use handheld fundus cameras, employing a variety of technical approaches.23,24

Functional imaging

For the patient as well as for the clinician, the outcome of disease management is mainly concerned with the resulting organ function, not its structure. In ophthalmology, current functional testing is mostly subjective and patient-dependent, such as assessing visual acuity and utilizing perimetry, which are all psychophysical metrics. Among more recently developed “objective” techniques, oxymetry is a hyperspectral imaging technique in which multispectral reflectance is used to estimate the concentration of oxygenated and deoxygenated hemoglobin in the retinal tissue.25 The principle allowing the detection of such differences is simple: deoxygenated hemoglobin reflects longer wavelengths better than does oxygenated hemoglobin. Nevertheless, measuring absolute oxygenation levels with reflected light is difficult because of the large variety in retinal reflection across individuals and the variability caused by the imaging process. The retinal reflectance can be modeled by a system of equations, and this system is typically underconstrained if this variability is not accounted for adequately. Increasingly sophisticated reflectance models have been developed to correct for the underlying variability, with some reported success.26 Near-infrared fundus reflectance in response to visual stimuli is another way to determine the retinal function in vivo and has been successful in cats. Initial progress has also been demonstrated in humans.27

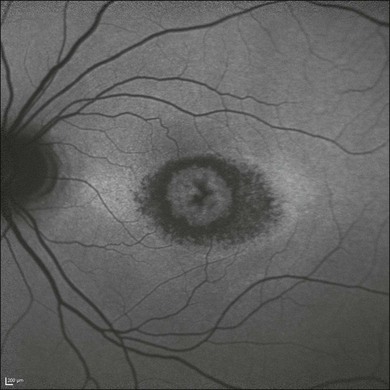

Adaptive optics

The optical properties of the normal eye result in a point spread function width approximately the size of a photoreceptor. It is therefore impossible to image individual cells or cell structure using standard fundus cameras because of aberrations in the human optical system. Adaptive optics uses mechanically activated mirrors to correct the wavefront aberrations of the light reflected from the retina, and thus has allowed individual photoreceptors to be imaged in vivo.28 Imaging other cells, especially the clinically highly important ganglion cells, has thus far been unsuccessful in humans.

Longer-wavelength OCT imaging

3D OCT imaging is now the clinical standard of care for several eye diseases. The wavelengths around 840 µm used in currently available devices are optimized for imaging of the retina. Deeper structures, such as the choroidal vessels, which are important for AMD and other choroidal diseases, and the lamina cribrosa, relevant for glaucomatous damage, are not as well depicted. Because longer wavelengths penetrate deeper into the tissue, a major research effort has been undertaken to develop low-coherence swept source lasers with center wavelengths of 1000–1300 µm. Prototypes of these devices are already able to resolve detail in the choroid and lamina cribrosa.29

Clinical applications of retinal imaging

The most obvious example of a retinal screening application is retinal disease detection, in which the patient’s retinas are imaged in a remote telemedicine approach. This scenario typically utilizes easy-to-use, relatively low-cost fundus cameras, automated analyses of the images, and focused reporting of the results. This screening application has spread rapidly over the last few years, and, with the exception of the automated analysis functionality, is one of the most successful examples of telemedicine.30 While screening programs exist for detection of glaucoma, age-related macular degeneration, and retinopathy of prematurity, the most important screening application focuses on early detection of DR.

Early detection of diabetic retinopathy

Early detection of DR via population screening associated with timely treatment has been shown to prevent visual loss and blindness in patients with retinal complications of diabetes.31,32 Almost 50% of people with diabetes in the USA currently do not undergo any form of regular documented dilated eye exam, in spite of guidelines published by the American Diabetes Association, the American Academy of Ophthalmology, and the American Optometric Association.33 In the UK, a smaller proportion or approximately 20% of diabetics are not regularly evaluated, as a result of an aggressive effort to increase screening for people with diabetes. Blindness and visual loss can be prevented through early detection and timely management. There is widespread consensus that regular early detection of DR via screening is necessary and cost-effective in patients with diabetes.34–37 Remote digital imaging and ophthalmologist expert reading have been shown to be comparable or superior to an office visit for assessing DR and have been suggested as an approach to make the dilated eye exam available to unserved and underserved populations that do not receive regular exams by eye care providers.38,39 If all of these underserved populations were to be provided with digital imaging, the annual number of retinal images requiring evaluation would exceed 32 million in the USA alone (approximately 40% of people with diabetes with at least two photographs per eye).39,40 In the next decade, projections for the USA are that the average age will increase, the number of people with diabetes in each age category will increase, and there will be an undersupply of qualified eye care providers, at least in the near term. Several European countries have successfully instigated in their healthcare systems early detection programs for DR using digital photography with reading of the images by human experts. In the UK, 1.7 million people with diabetes were screened for DR in 2007–2008. In the Netherlands, over 30 000 people with diabetes were screened since 2001 in the same period, through an early-detection project called EyeCheck.41 The US Department of Veterans Affairs has deployed a successful photo screening program through which more than 120 000 veterans were screened in 2008. While the remote imaging followed by human expert diagnosis approach was shown to be successful for a limited number of participants, the current challenge is to make the early detection more accessible by reducing the cost and staffing levels required, while maintaining or improving DR detection performance. This challenge can be met by utilizing computer-assisted or fully automated methods for detection of DR in retinal images.42–44

Early detection of systemic disease from fundus photography

In addition to detecting DR and age-related macular degeneration, it also deserves mention that fundus photography allows certain cardiovascular risk factors to be determined. Such metrics are primarily based on measurement of retinal vessel properties, such as the arterial to venous diameter ratio, and indicate the risk for stroke, hypertension, or myocardial infarct.45,46

Image-guided therapy for retinal diseases with 3D OCT

Another highly relevant example of a disease that will benefit from image-guided therapy is exudative age-related macular degeneration. With the advent of the anti-vascular endothelial growth factor (VEGF) agents ranibizumab and bevacizumab, it has become clear that outer retinal and subretinal fluid is the main indicator of a need for anti-VEGF retreatment.47–51 Several studies are under way to determine whether OCT-based quantification of fluid parameters and affected retinal tissue can help improve the management of patients with anti-VEGF agents.

Image analysis concepts for clinicians

Image analysis is a field that relies heavily on mathematics and physics. The goal of this section is to explain the major clinically relevant concepts and challenges in image analysis, with no use of mathematics or equations. For a detailed explanation of the underlying mathematics, the reader is referred to the appropriate textbooks.52

The retinal image

Different strategies for storing ophthalmic images

• Slides and computer printouts stored in the paper chart or photo archive

• Slides and paper printouts scanned and stored in a PACS

• Clinically relevant views stored in a PACS

• All raw data and clinically relevant views stored in a PACS

• Standard for storage and communication of ophthalmology images.

Digital exchange of retinal images and DICOM

DICOM stands for Digital Imaging and Communications in Medicine and is an organization founded in 1983 to create a standard method for the transmission of medical images and their associated information across all fields of medicine. For ophthalmology, Working Group 9 (WG-9) of DICOM is a formal part of the American Academy of Ophthalmology. Until recently, the work of WG-9 has focused on creating standards for fundus, anterior-segment, and external ophthalmic photography, resulting in DICOM Supplement 91 Ophthalmic Photography Image SOP Classes, and on OCT imaging in DICOM Supplement 110: Ophthalmic Tomography Image Storage SOP53,54 (http://medical.nema.org).

Retinal image analysis

Common image-processing steps

Preprocessing

There are many parallels between image preprocessing using computers and human retinal image processing in ganglion cells.19

Detection

There are many parallels between the features and the convolution process in digital image analysis, and the filters in the human visual cortex.55

Unsupervised and supervised image analysis

The design and development of a retinal image analysis system usually involves the combination of some of the steps as explained above, with specific sizes of features and specific operations used to map the input image into the desired interpretation output. The term “unsupervised” is used to indicate such systems. The term “supervised” is used when the algorithm is improved in stepwise fashion by testing whether additional steps or a choice of different parameters can improve performance. This procedure is also called training. The theoretical disadvantage of using a supervised system with a training set is that the provenance of the different settings may not be clear. However, because all retinal image analysis algorithms undergo some optimization of parameters, by the designer or programer, before clinical use, this is only a relative, not absolute, difference. Two distinct stages are required for a supervised learning/classification algorithm to function: a training stage, in which the algorithm “statistically learns” to classify correctly from known classifications, and a testing or classification stage in which the algorithm classifies previously unseen images. For proper assessment of supervised classification method functionality, training data and performance testing data sets must be completely separate.52

Pixel feature classification

Pixel feature classification is a machine learning technique that assigns one or more classes to the pixels in an image.55,57 Pixel classification uses multiple pixel features including numeric properties of a pixel and the surroundings of a pixel. Originally, pixel intensity was used as a single feature. More recently, n-dimensional multifeature vectors are utilized, including pixel contrast with the surrounding region and information regarding the pixel’s proximity to an edge. The image is transformed into an n-dimensional feature space and pixels are classified according to their position in space. The resulting hard (categorical) or soft (probabilistic) classification is then used either to assign labels to each pixel (for example “vessel” or “nonvessel” in the case of hard classification), or to construct class-specific likelihood maps (e.g., a vesselness map for soft classification). The number of potential features in the multifeature vector that can be associated with each pixel is essentially infinite. One or more subsets of this infinite set can be considered optimal for classifying the image according to some reference standard. Hundreds of features for a pixel can be calculated in the training stage to cast as wide a net as possible, with algorithmic feature selection steps used to determine the most distinguishing set of features. Extensions of this approach include different approaches to classifying groups of neighboring pixels subsequently by utilizing group properties in some manner, for example cluster feature classification, where the size, shape, and average intensity of the cluster may be used.

Measuring performance of image analysis algorithms

Sensitivity and specificity

The performance of a lesion detection system can be measured by its sensitivity, which is the number of true positives divided by the sum of the total number of (incorrectly missed) false negatives plus the number of (correctly identified) true positives.52 System specificity is determined as the number of true negatives divided by the sum of the total number of false positives (incorrectly identified as disease) and true negatives. Sensitivity and specificity assessment both require ground truth, which is represented by location-specific discrete values (0 or 1) of disease presence or absence for each subject in the evaluation set. The location-specific output of an algorithm can also be represented by a discrete number (0 or 1). However, the output of the assessment algorithm is often a continuous value determining the likelihood p of local disease presence, with an associated probability value between 0 and 1. Consequently, the algorithm can be made more specific or more sensitive by setting an operating threshold on this probability value, p.

Receiver operator characteristics

If an algorithm outputs a continuous value, as explained above, multiple sensitivity/specificity pairs for different operating thresholds can be calculated. These can be plotted in a graph, which yields a curve, the so-called receiver operator characteristics or ROC curve.52,56 The area under this ROC curve (AUC, represented by its value Az) is determined by setting a number of different thresholds for the likelihood p. Sensitivity and specificity pairs of the algorithm are then obtained at each of these thresholds. The ground truth is kept constant. The maximum AUC is 1, denoting a perfect diagnostic procedure, with some threshold at which both sensitivity and specificity are 1 (100%).

The reference standard or gold standard

Typically these performance measurements are made by comparing the output of the image analysis system to some standard, usually called the reference standard or gold standard. Because the performance of some image analysis systems, for example for detection of DR, is starting to exceed that of individual clinicians or groups of clinicians, creating the reference standard is an area of active research.42

Given that determining the true state of disease necessary to create the reference standard is so challenging, the following options have been developed and are in wide use42:

1. Using the modality under study. The images are read and adjudicated by multiple trained readers according to a standardized protocol. This is less biased and a better estimate than a single clinician, but has higher cost. This method is often used, but the true disease is not known this way.

2. Using a different modality. In the case of a microaneurysm, an angiogram would be a suitable modality. It requires expert interpretation, and preferably multiple experts. It is less biased towards the imaging modality and may therefore be a better estimate. Because of the added procedure it is less patient-friendly, and has higher cost associated with it.

3. Doing a biopsy. Often this may be ethically unacceptable. It also displaces the problem, because the biopsy would necessarily be interpreted by human expert(s), for example a pathologist, with intra- and interobserver variability. It is more unequivocal, but also more invasive and has higher cost.

4. Outcome-based. If the clinically relevant question is not so much whether a microaneurysm is present or absent, but instead whether the patient is at risk of going blind from proliferative disease, we can wait for that outcome to occur. However, the true state of disease at this moment would still not be known, only the true state at some time in the past. Clinical outcome is maximally unequivocal and minimally subjective.

5. True state of disease, which is an unknowable quantity, as explained above.

Clinical safety relevant performance measurement

Performance of a system that has been developed for screening should not be evaluated based solely on its sensitivity and specificity for detection of that disease. Such metrics do not accurately reflect the complete performance in a screening setup. Rare, irregular, or atypical lesions often do not occur frequently enough in standard data sets to affect sensitivity and specificity but can have dramatic health and safety implications. To maximize screening relevance, the system must therefore include a mechanism to detect rare, atypical, or irregular abnormalities, for example in DR detection algorithms.43 For proper performance assessment, the types of potential false negatives – lesions that can be expected or shown to be incorrectly missed by the automated system – must be determined. While detection of red lesions and bright lesions is widely covered in the literature, detection of rare or irregular lesions, such as hemorrhages, neovascularization, geographic atrophy, scars, and ocular neoplasms has received much less attention, despite the fact that they all can occur in combination with DR and other retinal diseases, as well as in isolation. For example, presence of such lesions in isolated forms and without any co-occurrence of small red lesions is rare in DR; thus missing these does not affect standard metrics of performance to a measurable degree.41 One suitable approach for detecting such lesions is to use a retinal atlas, where the image is routinely compared to a generic normal retina. After building a retinal atlas by registering the fundus images according to a disc, fovea, and a vessel-based coordinate system, image properties at each atlas location from a previously unseen image can be compared to the atlas-based image properties. Consequently, locations can be identified as abnormal if groups of pixels have values outside the normal atlas range.

Fundus image analysis

Detection of retinal vessels

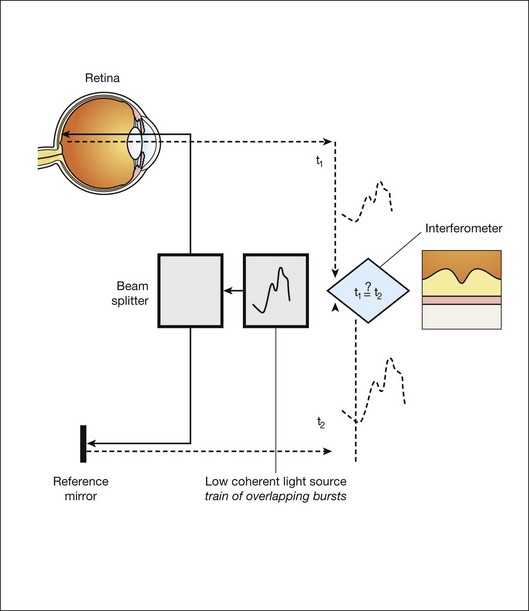

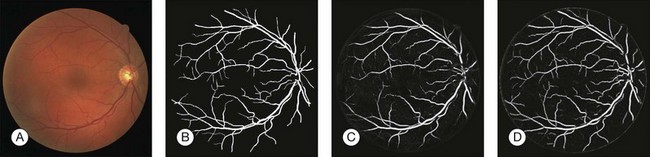

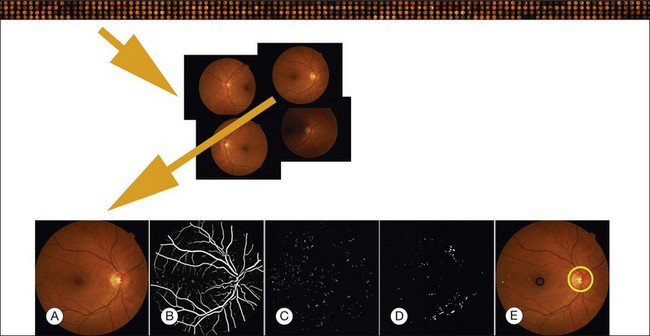

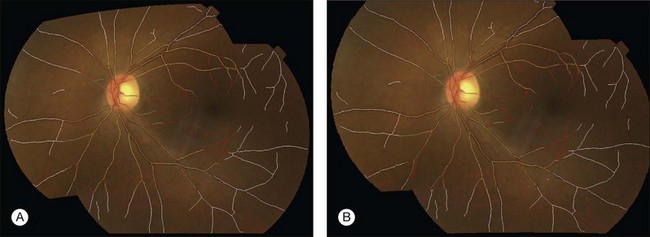

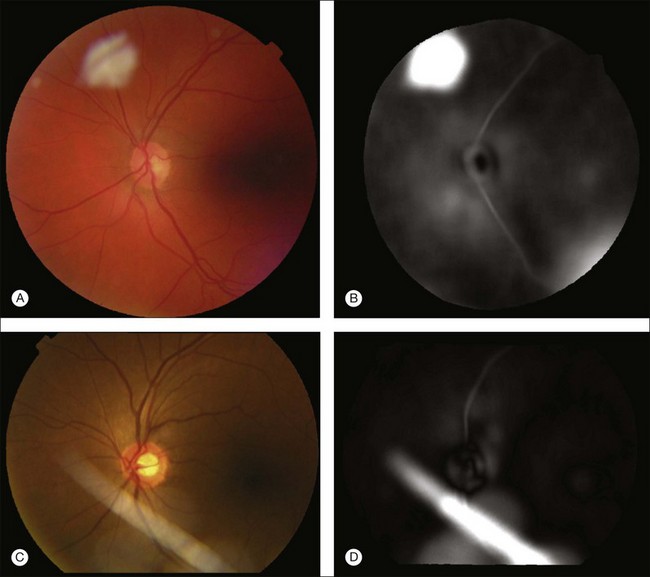

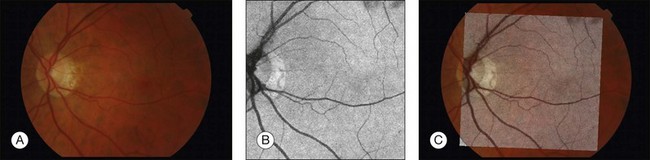

Automated segmentation of retinal vessels has been highly successful in the detection of large and medium vessels57–59 (Fig. 6.3). Because retinal vessel diameter and especially the relative diameters of arteries and veins are known to signal the risk of systemic disease, including stroke, accurate determination of retinal vessel diameters, as well as the ability to differentiate veins from arteries, has become more important. Several semiautomated and automated approaches to determining vessel diameter have now been published.60–62 Other active areas of research include separation of arteries and veins, detection of small vessels with diameters of less than a pixel, and analysis of complete vessel trees using graphs.

Fig. 6.3 Automated vessel analysis. (A) Fundus image; (B) retinal specialist annotation; (C) vesselness map from Staal algorithm (staal); (D) vesselness map from direct pixel classification. (Reproduced with permission from Niemeijer N, Staal JJ, van Ginneken B, et al. Comparative study of retinal vessel segmentation methods on a new publicly available database. In: Fitzpatrick JM, Sonka, editors. SPIE medical imaging, vol. 5370. SPIE, 2004, p. 648–56.)

Vessel detection approaches can be divided into region-based and edge-based approaches. Region-based segmentation methods label each pixel as either inside or outside a blood vessel. Niemeijer et al. proposed a pixel-based retinal vessel detection method using a gaussian derivative filter bank and k-nearest-neighbor classification.57,59 Staal et al.59 proposed a pixel feature-based method that additionally analyzed the vessels as elongated structures. Edge-based methods can be further classified into two categories: window-based methods and tracking-based methods. Window-based methods estimate a match at each pixel against the pixel’s surrounding window. The tracking approach exploits local image properties to trace the vessels from an initial point. A tracking approach can better maintain the connectivity of vessel structure. Lalonde et al.63 proposed a vessel-tracking method by following an edge line while monitoring the connectivity of its twin border on a vessel map computed using a Canny edge operator. Breaks in the connectivity will trigger the creation of seeds that serve as extra starting points for further tracking. Gang et al. proposed a retinal vessel detection using a second-order gaussian filter with adaptive filter width and adaptive threshold.64

Detection of fovea and optic disc

Location of the optic disc and fovea benefits retinal image analysis (Fig. 6.4). It is often necessary to mask out the normal anatomy before finding abnormal structures. For instance, the optic disc might be mistaken for a bright lesion if not detected. Secondly, the distribution of the abnormalities is not uniform on fundus photographs. Specific abnormalities occur more often in specific areas on the retina. Most optic disc detection methods are based on the fact that the optic disc is the convergence point of blood vessels and it is normally the brightest structure on a fundus image. Most fovea detection methods depend partially on the result of optic disc detection.

Fig. 6.4 Typical steps necessary for analysis of fundus images, in this case for early diabetic retinopathy. Top row: large sequence of image sets from multiple patients; second row: image set of four retinal images for a single patient; third row: (A) original image; (B) vesselness map; (C) automatically detected red lesions in white; (D) automatically detected bright lesions in white; and (E) detection of fovea (black) and optic disc (yellow) as well as automatically detected red lesions indicated in shades of green; bright lesions in shades of blue superimposed on original image.

Hoover et al. proposed a method for optic disc detection based on the combination of vessel structure and pixel brightness.65 If a strong vessel convergence point is found in the image, it is regarded as the optic disc. Otherwise the brightest region is detected. Foracchia et al. proposed an optic disc detection method based on vessel directions.66 A parabolic model of the main vascular arches is established and the model parameters are the directions associated with different locations on the parabolic model. The point with a minimum sum of square error is reported as the optic disc location. Lowell et al., by matching an optic disc model using the Pearson correlation, determined an initial optic disc location, and then traced the optic disc boundary using a deformable contour model.67

Most fovea detection methods use the fact that the fovea is a dark region in the image and that it normally lies in a fixed orientation and location relative to the optic disc and the main vascular arch. In a study by Fleming et al., approximate locations of the optic disc and fovea are obtained using the elliptical form of the main vascular arch.68 Then, the locations are refined based on the circular edge of the optic disc and the local darkness at the fovea. Li and Chutatape also proposed a method to select the brightest 1% pixels in a gray-level image.69 The pixels are clustered and principal component analysis based on a trained system is applied to extract a single point as the estimated location of the optic disc. A fovea candidate region is then selected based on the optic disc location and the main vascular arch shape. Within the candidate region, the centroid of the cluster with the lowest mean intensity and pixel number greater than one-sixth disc area is regarded as the foveal location. In a paper by Sinthanayothin et al., the optic disc was located as the area with the highest variation in intensity of adjacent pixels, while the fovea was extracted using intensity information and a relative distance to the optic disc.70 Tobin et al. proposed a method to detect the optic disc based on blood vessel features, such as density, average thickness, and orientation.71 Then the fovea location was determined based on the location of the optic disc and a geometry model of the main blood vessel. Niemeijer et al. proposed a method to localize automatically both the optic disc and fovea in 2006.72,73 For the optic disc detection, a set of features are extracted from the color fundus image. A k-nearest-neighbor classification is used to give a soft label to each pixel on the test image. The probability image is blurred and the pixel with the highest probability is detected as optic disc. Relative position information between the optic disc and the fovea is used to limit the search of fovea into a certain region. For each possible location of the optic disc, a possible location of the fovea is given. The possible locations for the fovea are stored in a separate image and the highest-probability location is detected as the fovea location.

Detection of retinal lesions

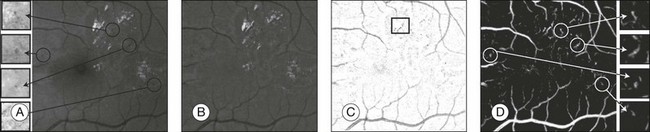

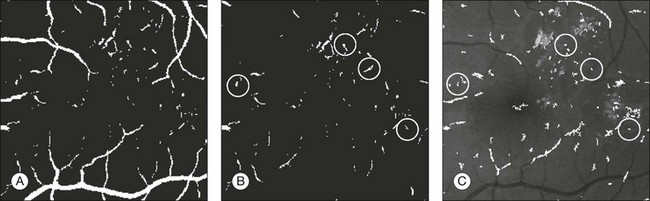

In this section we will primarily focus on detection of lesions in DR. DR has the longest history as a research subject in retinal image analysis. Figure 6.4 shows examples of a fundus photograph with the typical lesions automatically detected. After preprocessing, most approaches detect candidate lesions, after which a mathematical morphology template is utilized to segment and characterize the candidates (Fig. 6.5). This approach or a modification thereof is in use in many algorithms for detecting DR and age-related macular degeneration.74 Additional enhancements include the contributions of Spencer et al.75 and Frame et al.76 They added additional preprocessing steps, such as shade correction and matched filter postprocessing, to this basic framework to improve algorithm performance. Algorithms of this kind function by detecting candidate microaneurysms of various shapes, based on their response to specific image filters. A supervised classifier is typically developed to separate valid microaneurysms from spurious or false responses. However, these algorithms were originally developed to detect the high-contrast signatures of microaneurysms in fluorescein angiogram images. An important development was the addition of a more sophisticated filter, a modified version of the top-hat filter, so called because of its cross-section, to red-free fundus photographs rather than angiogram images, as was first described by Hipwell et al.77 They tested their algorithm on a large set of >3500 images and found a sensitivity/specificity operating point of 0.85/0.76. Once this filter-based approach had been established, development accelerated. The next step was broadening the candidate detection step, originally developed by Baudoin16 to detect candidate pixels, to a multifilter filter-bank approach.57,78 The responses of the filters are used to identify pixel candidates using a classification scheme. Mathematical morphology and additional classification steps are applied to these candidates to decide whether they indeed represent microaneurysms and hemorrhages (Fig. 6.6). A similar approach was also successful in detecting other types of DR lesions, including exudates and cotton-wool spots, as well as drusen in AMD.79

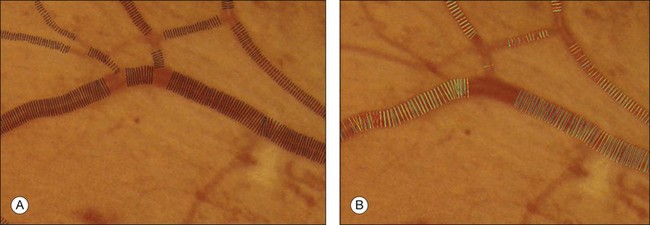

Fig. 6.5 Red lesion pixel feature classification. (A) Part of green color plane of a fundus image. Shown are pieces of vasculature and several red lesions. Circles mark the location of some of the red lesions in the image. (B) After subtracting median filtered version of the green plane, large background gradients are removed. (C) All pixels with a positive value are set to zero to eliminate bright lesions in the image. Note that exudates often partially occlude red lesions. Nonoccluded parts of red lesions show up clearly in this image. An example of this is marked with a rectangle. (D) Pixel classification result produced by contrast enhancement step. Nonoccluded parts of hemorrhages are visible together with the vasculature and a number of red lesions. (Reproduced with permission from Niemeijer M, van Ginneken B, Staal J, et al. Automatic detection of red lesions in digital color fundus photographs. IEEE Trans Med Imaging 2005;24:584–92.)

Fig. 6.6 Red lesion detection. (A) Thresholded probability map. (B) Remaining objects after connected component analysis and removal of large vasculature. (C) Shape and size of extracted objects in panel (B) do not correspond well with actual shape and size of objects in original image. Final region growing procedure is used to grow back actual objects in original image, which are shown here. In (B) and (C), the same red lesions as in Figure 6.6A are indicated with a circle. (Reproduced with permission from Niemeijer M, van Ginneken B, Staal J, et al. Automatic detection of red lesions in digital color fundus photographs. IEEE Trans MedImaging 2005;24:584–92.)

Initially, red lesions were detected in fluorescein angiograms because their contrast against the background is much higher than that of microaneurysms in color fundus photography images.75,76,80 Hemorrhages mask out fluorescence and present as dark spots in the angiograms. These methods employed a mathematical morphology technique that eliminated the vasculature from a fundus image but left possible microaneurysm candidates untouched, as first described in 1984.16 Later, this method was extended to high-resolution red-free fundus photographs by Hipwell et al.77 Instead of using morphology operations, a neural network was used, as demonstrated by Gardner et al.81 In their work, images are divided into 20 × 20 pixel grids and the grids are individually classified. Sinthanayothin et al. used a detection step to find blood-like regions and to segment both vessels and red lesions in a fundus image.82 A neural network was used to detect the vessels exclusively, and the remaining objects were labeled as microaneurysms. Niemeijer et al. presented a hybrid scheme that used a supervised pixel classification-based method to detect and segment the microaneurysm candidates in color fundus photographs.78 This method allowed for the detection of larger red lesions (i.e., hemorrhages) in addition to the microaneurysms using the same system. A large set of additional features, including color, was added to those that had been previously described.76,80 Using the features in a supervised classifier distinguished between real and spurious candidate lesions. These algorithms can usually distinguish between overlapping microaneurysms because they give multiple candidate responses.

Other recent algorithms only detect microaneurysms and forgo a phase of detecting normal retinal structures like the optic disc, fovea, and retinal vessels, which can act as confounders for abnormal lesions. Instead, the recent approaches find the microaneurysms directly using template matching in wavelet subbands.83 In this approach, the optimal adapted wavelet transform is found using a lifting scheme framework. By applying a threshold on the matching result of the wavelet template, the microaneurysms are labeled. This approach has meanwhile been extended explicitly to account for false negatives and false positives.42 Because it avoids detection of the normal structures, such algorithms can be very fast, on the order of less than a second per image.

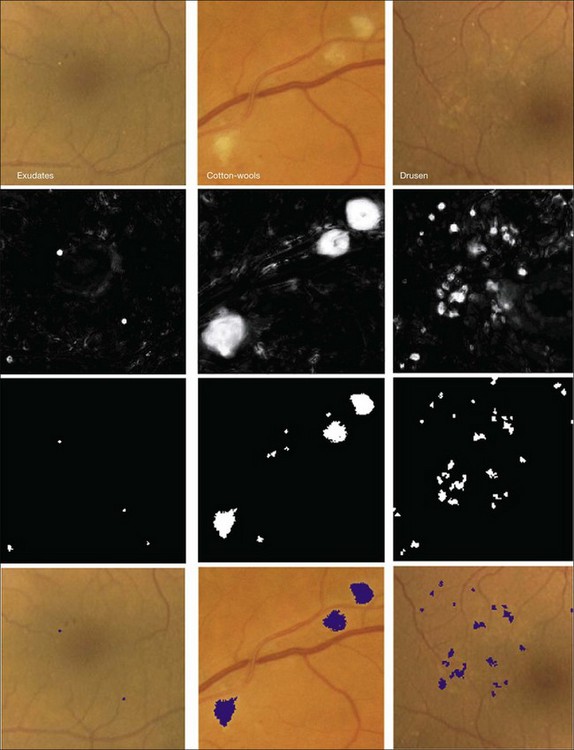

Bright lesions, defined as lesions brighter than the retinal background, can be found in the presence of retinal and systemic disease. Some examples of such bright lesions of clinical interest include drusen, cotton-wool spots, and lipoprotein exudates. To complicate the analysis, flash artifacts can be present as false positives for bright lesions. If the lipoprotein exudates only appear in combination with red lesions, they would only be useful for grading DR. The exudates can, however, in some cases appear as isolated signs of DR in the absence of any other lesion. Several computer-based systems to detect exudates have been proposed (Fig. 6.7).74,79,81,82,84

Fig. 6.7 Bright lesion detection algorithm steps performed to detect and differentiate “bright lesions.” From left to right: exudates, cotton-wool spots, and drusen. From top to bottom: relevant regions in the retinal color image (all at same scale); a posteriori probability maps after first classification step; pixel clusters labeled as probable bright lesions (potential lesions); bottom row shows final labeling of objects as true bright lesions, overlaid on original image. (Reproduced with permission from Niemeijer M, van Ginneken B, Russell SR, et al. Automated detection and differentiation of drusen, exudates, and cotton-wool spots in digital color fundus photographs for diabetic retinopathy diagnosis. Invest Ophthalmol Vis Sci 2007;48:2260–7.)

Because the different types of bright lesion have different diagnostic importance, algorithms should be capable not only of detecting bright lesions, but also of differentiating among the bright lesion types. One example algorithm capable of detection and differentiation of bright lesions was reported by Niemeijer et al. in 2007.79 This algorithm is based on an earlier red lesion algorithm presented by Hipwell et al. in 200077 and includes the following traditional steps, which are illustrated in Fig. 6.6:

1. lesion candidate cluster detection, where pixels are clustered into highly probable lesion regions

2. true bright lesion detection, where each candidate cluster is classified as a true lesion based on cluster features, including surface area, elongatedness, pixel intensity gradient, standard deviation of pixel values, pixel contrast, and local “vesselness” (as derived from a vessel segmentation map)

3. differentiation of lesions into drusen, exudates, and cotton-wool spots where a third classifier determines the likelihood for the true bright lesion to represent specific lesion types.

Vessel analysis

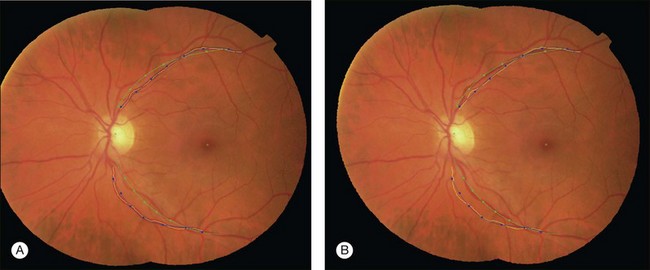

Vessel measures, such as the average width of arterioles and venules, the ratio of arteriolar to venular widths, and the branching ratio, have been established to be predictive of systemic diseases, especially hypertension, and also have potential value in degenerative retinal diseases such as retinitis pigmentosa. The methods in the section on detection of retinal vessels locate the vessels, but cannot determine vessel width. Additional techniques are needed to measure the vessel width accurately. Al-Diri et al. proposed an algorithm for segmentation and measurement of retinal blood vessels by growing a “ribbon of twins” active contour model. Their approach uses an extraction of segment profiles algorithm, which uses two pairs of contours to capture each vessel edge.85 The half-height full-width algorithm defines the width as the distance between the points on the intensity curve at which the function reaches half its maximum value to either side of the estimated center point.86–88 The Gregson algorithm fits a rectangle to the profile, setting the width so that the area under the rectangle is equal to the area under the profile.33 Xu et al. recently published a method based on graph search showing less variability than human experts (Fig. 6.8).89

Fig. 6.8 Automated vessel width measurement. (A) Automated measurement of vessel width (black lines); (B) three human experts marking the widths of the vessel manually (in green, blue, and yellow).

A fully automated method from the Abramoff group to measure the arteriovenous ratio in disc center retinal images was recently published.90 This method detects the location of the optic disc, determines an appropriate region of interest, classifies vessels as arteries or veins, estimates vessel widths, and calculates the arteriovenous ratio. The system eliminates all vessels outside the arteriovenous ratio measurement region of interest. A skeletonization operation is then applied to the remaining vessels after which vessel crossings and bifurcation points are removed, leaving a set of vessel segments consisting of only vessel centerline pixels. Features are extracted from each centerline pixel in order to assign these a soft label indicating the likelihood that the pixel is part of a vein. As all centerline pixels in a connected vessel segment should be the same type, the median soft label is assigned to each centerline pixel in the segment. Next, artery vein pairs are matched using an iterative algorithm, and finally, the widths of the vessels are used to calculate the average arteriovenous ratio.

Retinal atlas

The retina has a relatively small number of key anatomic structures (landmarks) visible using planar fundus camera imaging. Additionally, the expected shape, size, and color variations across a population are expected to be high. While there have been a few reports on estimating retinal anatomic structure using a single retinal image,71 we are not aware of any published work demonstrating the construction of a statistical retinal atlas using data from a large number of subjects. The choice of atlas landmarks in retinal images may vary depending on the view of interest. Regardless, the atlas should represent most retinal image properties in a concise and intuitive way. Three landmarks can be used as the retinal atlas key features: the optic disc center, the fovea, and the main vessel arch defined as the location of the largest vein/artery pairs. The disc and fovea provide landmark points, while the arch is a more complicated two-part curved structure that can be represented by its central axis. The atlas coordinate system then defines an intrinsic, anatomically meaningful framework within which anatomic size, shape, color, and other characteristics can be objectively measured and compared. Choosing either the disc center or fovea alone to define the atlas coordinate system would allow each image from the population to be translated so pinpoint alignment can be achieved. Choosing both disc and fovea allows corrections for translation, scale, and rotational differences across the population. However, nonlinear shape variations across the population would not be considered – this can be accomplished when the vascular arch information is utilized. The end of the arches can be defined as the first major bifurcations of the arch branches. The arch shape and orientation vary from individual to individual and influence the structure of the remaining vessel network. Establishing an atlas coordinate system that incorporates the disc, fovea, and arches allows for translation, rotation, scaling, and nonlinear shape variations to be accommodated across a population.

An isotropic coordinate system is a system in which the size of each imaged element is the same in all three dimensions. This is desirable for a retinal atlas so images can refer to the atlas independent of spatial pixel location by a linear one-to-one mapping. The radial distortion correction (RADIC) model attempts to register images in a distortion-free coordinate system using a planar-to-spherical transformation, so the registered image is isotropic under a perfect registration, and places the registered image in an isotropic coordinate system (Fig. 6.9).91 An isotropic atlas makes it independent of spatial location to map correspondences between the atlas and test image. The intensities in overlapping area are determined by a distance-weighted blending scheme.92

Fig. 6.9 Registration of fundus image pair using (A) quadratic model and (B) radial distortion correction model. Vessel center lines are overlaid for visual assessment of registration accuracy. This registration is performed to disc-centered and macula-centered images to provide an increased anatomic field of view. (Reproduced with permission from Lee S, Abramoff MD, Reinhardt JM. Retinal image mosaicing using the radial distortion correction model. In: Joseph MR, Josien PWP, eds. Proceedings of the SPIE. SPIE, 2008. p 691435.)

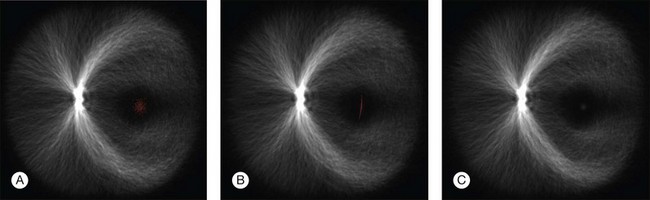

The atlas landmarks serve as the reference set so each color fundus image can be mapped to the coordinate system defined by the landmarks. As the last step of atlas generation, color fundus images are warped to the atlas coordinate system so that the arch of each image is aligned to the atlas vascular arch (Fig. 6.10).93 Rigid coordinate alignment is done for each fundus image to register the disc center and the fovea. The control points are determined by sampling points from equidistant locations in radial directions from the disc center. Usually, the sampling uses smoothed trace lines utilizing third-order polynomial curve fitting to eliminate locally high tortuosity of vascular tracings which may cause large geometric distortions (Fig. 6.11).

Fig. 6.10 Atlas coordinate mapping by thin plate spline (A) before and (B) after mapping. Naive main arch traces obtained by Dijkstra’s line detection algorithm are drawn as yellow lines that undergo polynomial curve fitting to result in blue lines. Atlas landmarks (disc center, fovea, and vascular arch) are drawn in green, and equidistant radial sampling points marked with dots. (Reproduced with permission from Abramoff MD, Garvin M, Sonka M. Retinal imaging and image analysis. IEEE Rev Biomed Eng 2010;3:169–208.)

Fig. 6.11 Registration of anatomic structures according to increasing complexity of registration transform – 500 retinal vessel images are overlaid and marked with one foveal point landmark each (red spots). Rigid coordinate alignment by (A) translation, (B) translation and scale, and (C) translation, scale, and rotation. (Reproduced with permission from Abramoff MD, Garvin M, Sonka M. Retinal imaging and image analysis. IEEE Rev Biomed Eng 2010;3:169–208.)

A retinal atlas can be used as a reference to quantitatively assess the level of deviation from normality. An analyzed image can be compared with the retinal atlas directly in the atlas coordinate space. The normality can thus be defined in several ways depending on the application purpose – using local or global chromatic distribution, degree of vessel tortuosity, presence of pathological features, or presence of artifacts (Fig. 6.12). Other uses for a retinal atlas include image quality detection and disease severity assessment. Retinal atlases can also be employed in content-based image retrieval leading to abnormality detection in retinal images.94

Fig. 6.12 Example application of employing retinal atlas to detect imaging artifacts. (A, C) Color fundus images with artifacts. (B, D) Euclidean distance maps in atlas space using atlas coordinate system. Note that distances are evaluated within atlas image. Consequently, field of view of distance map is not identical to that of fundus image. (Reproduced with permission from Abramoff MD, Garvin M, Sonka M. Retinal imaging and image analysis. IEEE Rev Biomed Eng 2010;3:169–208.)

Performance of DR detection algorithms

Several groups have studied the performance of detection algorithms in a real-world setting. The main goal of such a system is to decide whether the patient should be evaluated by a human expert or can return for routine follow-up, based solely on automated analysis of retinal images.43,44

DR detection algorithms appear to be mature and competitive algorithms and have now reached the human intrareader variability limit.41,42,95 Additional validation studies on larger, well-defined, but more diverse populations of patients with diabetes are urgently needed; anticipating cost-effective early detection of DR in millions of people with diabetes to triage those patients who need further care at a time when they have early rather than advanced DR. Validation trials are currently under way in the USA, UK, and the Netherlands.

To drive the development of progressively better fundus image analysis methods, research groups have established publicly available, annotated image databases in various fields. Fundus imaging examples are represented by the STARE,96 DRIVE,57 REVIEW,97 and MESSIDOR databases,98 with large numbers of annotated retinal fundus images, with expert annotations for vessel segmentation, vessel width measurements, and DR detection. Image analysis competitions such as the Retinopathy Online Challenge95 have also been initiated.

The Digital Retinal Images for Vessel Evaluation (DRIVE) database was established to enable comparative studies on segmentation of retinal blood vessels in retinal fundus images. It contains 40 fundus images from subjects with diabetes, both with and without retinopathy, as well as retinal vessel segmentations performed by two human observers. Starting in 2005, researchers have been invited to test their algorithms on this database and share their results with other researchers through the DRIVE website.89 At the same web location, results of various methods can be found and compared. Currently, retinal vessel segmentation research is primarily focusing on improved segmentation of small vessels, as well as on segmenting vessels in images with substantial abnormalities.

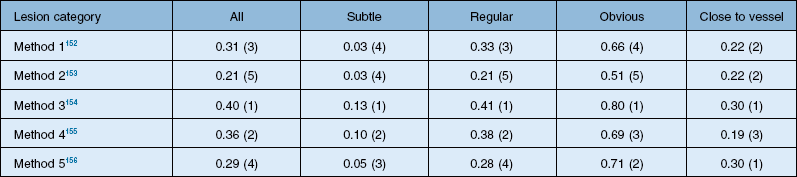

The DRIVE database was a great success, allowing comparisons of algorithms on a common data set. In retinal image analysis, it represented a substantial improvement over method evaluations on unknown data sets. However, different groups of researchers tend to use different metrics to compare the algorithm performance, making truly meaningful comparisons difficult or impossible. Additionally, even when using the same evaluation measures, implementation specifics of the performance metrics may influence final results. Consequently, until the advent of the Retinopathy Online Challenge competition in 2009, comparing the performance of retinal image analysis algorithms was difficult.95 This competition focused on detection of microaneurysms. Twenty-six groups participated in the competition, out of which six groups submitted their results on time. The results from each of the methods in this competition are summarized in Table 6.1.

Optical coherence tomography image analysis

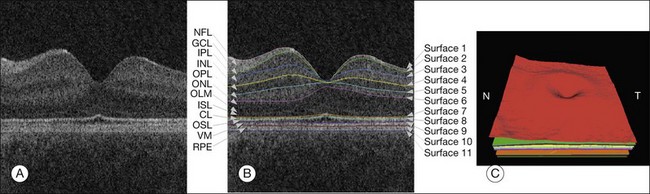

SD-OCT technology has enabled true 3D volumetric scans of the retina to be acquired. Thus, the importance of developing advanced image analysis techniques to maximize the extraction of clinically relevant information is especially important. Nevertheless, the development of such advanced techniques can be challenging as OCT images are inherently noisy, thus often requiring the utilization of 3D contextual information (Fig. 6.13). Furthermore, the structure of the retina can drastically change during disease. Here, we review some of the important image analysis steps for processing OCT images. We start with the segmentation of retinal layers, one of the earliest, yet still extremely important, OCT image analysis areas. We then discuss techniques for flattening OCT images in order to correct scanning artifacts. Building upon the ability to extract layers, we discuss use of thickness information and of texture information. This is followed by the segmentation of retinal vessels, which currently has its technical basis in many of the techniques used for segmenting vessels in fundus photography, but is beginning to take advantage of the 3D information only available in SD-OCT. The use of both layer-based and texture-based properties to detect the locations of retinal lesions is then described. The ability to segment layers in the presence of lesions is described. This section is based on a review paper. 19

Fig. 6.13 Segmentation results of 11 retinal surfaces (10 layers). (A) X-Z image of optical coherence tomography volume. (B) Segmentation results: nerve fiber layer (NFL), ganglion cell layer (GCL), inner plexiform layer (IPL), inner nuclear layer (INL), outer plexiform layer (OPL), outer nuclear layer (ONL), outer limiting membrane (OLM), plus inner-segment layer (ISL), outer-segment layer (OSL), and retinal pigment epithelium complex (RPE). CL, Inner–outer segment interface complex surface; VM, Verhoeff’s membrane. (C) Three-dimensional rendering of segmented surfaces. N, nasal; T, temporal. (Reproduced with permission from Abramoff MD, Garvin M, Sonka M. Retinal imaging and image analysis. IEEE Rev Biomed Eng 2010;3:169–208.)

Retinal layer analysis from 3D OCT

The segmentation of retinal layers in OCT scans has been an important goal, because thickness changes in the layers are one indication of disease status. Previous-generation time domain scanning systems (such as the Stratus OCT by Carl Zeiss Meditec) offered the ability to segment and provide thickness measurements for a single layer of the retina. In particular, the retinal nerve fiber layer thickness measurements of peripapillary circular scans and total retinal thickness measurements were available and used clinically. It can be assumed that commercialized methods utilized an inherently 2D approach (i.e., if multiple 2D slices are available in a particular scanning sequence they are segmented independently). Indeed, most of the early approaches that have been reported in the literature99–104 for the segmentation of time domain scans are also 2D in nature.

While variations to each of the early 2D approaches exist for the segmentation of retinal boundaries, a typical 2D approach proceeds as follows: preprocess the image,99–101,103 perform a 1D peak detection (detection) on each A-scan of the processed image to find points of interest, and (in some methods) correct for possible discontinuities in the 1D border detection.99 Other 2D time domain approaches include the use of 2D dynamic programing by Baroni et al.105

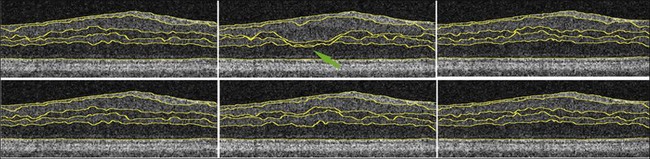

Haeker et al.106–108 and Garvin et al.109 reported the first true 3D segmentation approach for the segmentation of retinal layers on OCT scans, taking advantage of 3D contextual information. Their approach was unique in that the layers were segmented simultaneously.110 For time domain macular scans, they segmented 6–7 surfaces (5–6 layers), obtaining an accuracy and reproducibility similar to that of retinal specialists. By extending the approach to SD-OCT volumes,111 utilization of 3D contextual information had more of an advantage (Fig. 6.14). By employing a multiscale approach, the processing time was subsequently decreased from hours to a few minutes while enabling segmenting additional layers.112 A similar approach for segmenting the intraretinal layers in optic nerve head-centered SD-OCT volumes was reported with an accuracy similar to that of the interobserver variability of two human experts.113 A preliminary layer thickness atlas was built from a small set of normal subjects. Thickness loss of the macular ganglion cell layer in people with diabetes without retinopathy was thus demonstrated, showing that diabetes also leads to retinal neuropathy.114,115

Fig. 6.14 An illustration of why using the complete three-dimensional (3D) contextual information in the intraretinal layer segmentation process is superior. (Top) Sequence of two-dimensional (2D) results on three adjacent slices within a spectral domain volume obtained using a slice-by-slice 2D graph-based approach. Note the “jump” in the segmentation result for third and fourth surfaces in middle slice. (Bottom) Sequence of 3D results on same three adjacent slices using same graph-based approach, but with the addition of 3D contextual information. 3D contextual information prevented third and fourth surface segmentation from failing.

OCT image flattening

SD-OCT volumes frequently demonstrate motion artifacts and other artifacts may also be present, such as the tilting due to an off-axis placement of the pupil. Approaches for reducing these artifacts include 1D and 2D methods that use cross-correlation of either A-scans100 or B-scans.116,117 In some cases, a complete flattening of the volume is desired based on a surface segmentation to ensure a consistent shape for segmentation and visualization. Flattening the volumes makes it possible to truncate the image substantially in the axial direction (z-direction), thereby reducing the memory and time requirements of an intraretinal layer segmentation approach. Flattening an image involves first segmenting the retinal pigment epithelial surface in a lower resolution, fitting a thin-plate spline to this surface, and then vertically realigning the columns of the volume to make this surface completely flat.111

Retinal layer thickness analysis

After flattening and segmentation, the properties of the macular tissues in each layer can be extracted and analyzed. In addition to layer thickness, textural properties can also be quantified, as explained in the next paragraph. Measuring the thickening of specific layers is crucial in the management of diabetic macular edema and other retinal disorders.118 Typically, it is useful to compare the obtained thickness values to a normative database or atlas, as is available in commercial machines for the total macular thickness and the retinal nerve fiber layer thickness. However, a normative atlas for all the layers in 3D currently only exists within individual research groups.119 Nevertheless, work has been done to demonstrate previously unknown changes in the ganglion cell layer in patients with diabetes.114,115

Retinal texture analysis

Texture, defined as measures of the spatial distribution of image intensities, can be used to characterize tissue properties and tissue differences. Textural properties may be important for assessing changes in the structural or tissue composition of layers that cannot be measured by changes in thickness alone. Texture can be determined in each of the identified layers three-dimensionally.83,120,121 3D formulations of texture descriptors were developed for pulmonary parenchymal analysis122 and have been directly employed for OCT texture analysis.123 The wavelet transform, a form of feature detection, has been used in OCT analysis for de-noising and de-speckling124–126 as well as for texture analysis.127 Early work on 3D wavelet analysis of OCT images was based on a computationally efficient yet flexible nonseparable lifting scheme in arbitrary dimensions.123,128

Texture characteristics can be computed for each segmented layer, several adjacent layers, or in layer combinations (Fig. 6.12).

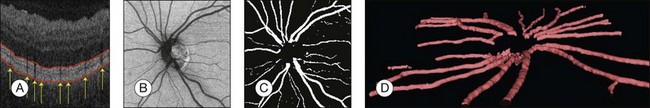

Detection of retinal vessels from 3D OCT

Segmenting the retinal vasculature in 3D SD-OCT volumes129,130 allows OCT-to-fundus and OCT-to-OCT image registration. The absorption of light by the blood vessel walls causes vessel silhouettes to appear below the position of vessels, which thus causes the projected vessel positions to appear dark on either a full projection image of the entire volume130 or a projection image from a segmented layer for which the contrast between the vascular silhouettes and background is highest, as proposed by Niemeijer et al.129,131 In particular, this approach used the layer near the retinal pigment epithelium to create the projection image. Vessels were segmented using feature detection with gaussian filter-banks and a k-NN pixel classification approach. The performance of the automated method was evaluated for both optic nerve head-centered as well as macula-centered scans. The retinal vessels were successfully identified in a set of 16 3D OCT volumes (8 optic nerve head and 8 macula-centered), with high sensitivity and specificity as determined using ROC analysis, AUC = 0.96. Xu et al. reported an approach for segmenting the projected locations of the vasculature by utilizing pixel classification of A-scans.132 The features used in the pixel classification were based on a projection image of the entire volume in combination with features of individual A-scans. Both of these reported prior approaches focused on segmenting the vessels in the region outside the optic disc region because of difficulties in the segmentation inside this region. The neural canal opening shares similar features with vessels, thus causing false positives.133 Hu et al.127 proposed a modified 2D pixel classification algorithm to segment the blood vessels in SD-OCT volumes centered at the optic nerve head, with a special focus on better identifying vessels near the neural canal opening. Given an initial 2D segmentation of the projected vasculature, Lee et al. presented an approach for segmenting the 3D vasculature in the volumetric scans134 by utilizing a graph-theoretic approach (Fig. 6.15). One of the current limitations of that approach is the inability to resolve the depth information of crossing vessels properly.

Fig. 6.15 Example of spectral three-dimensional (3D) optical coherence tomography (OCT) vessel segmentation. (A) Vessel silhouettes indicate position of vasculature. Also indicated in red are slice intersections of two surfaces that delineate the subvolume in which vessels are segmented (superficial retinal layers toward vitreous are at the bottom). (B) 2D projection image extracted from projected subvolume of spectral 3D OCT volume. (C) Automatic vessel segmentation. (D) 3D rendering of vessel around the optic nerve head. (Reproduced with permission from Lee K, Abràmoff M, Niemeijer MK, et al. 3D segmentation of retinal blood vessels in spectral-domain OCT volumes of the optic nerve head. Proc SPIE Med Imaging 2010;7626:76260 V.)

Detection of retinal lesions

Calculated texture and layer-based properties can be used to detect retinal lesions as a 2D footprint123 or in 3D. Out of many kinds of possible retinal lesions, symptomatic exudate-associated derangements (SEADs), a general term for fluid-related abnormalities in the retina, are of great interest in assessing the severity of choroidal neovascularization, diabetic macular edema, and other diseases. Detection of drusen, cotton-wool spots, areas of pigment epithelial atrophy, or pockets of fluid under epiretinal membranes may be attempted in a similar fashion.

The deviation of local retinal tissue from normal can be computed by determining the local deviations from the normal appearance at each location (x; y) in each layer and selecting the areas where the absolute deviation is greater than a predefined cutoff. More generally, in order to build an abnormality-specific detector, a classifier can be trained, the inputs of which may be the z-scores computed for relevant features. Comprehensive z-scores are appropriate since an abnormality may affect several layers in the neighborhood of a given location (x; y). The classifier-determined label associated with each column may be selected on relevant features by one of the many available cross-validation and/or feature selection methods,135–137 thus forming a SEADness or probabilistic abnormality map.

Fluid detection and segmentation

In choroidal neovascularization, diabetic macular edema, and other retinal diseases, intraretinal or subretinal fluid is reflective of disease status and changes in fluid are an indicator of disease progression or regression. With the availability of anti-VEGF therapy, assessment of the extent and morphology of fluid is expected to contribute to patient-specific therapy. While regions of fluid are inherently 3D, determining their 2D retinal footprint is highly relevant. Following the above-described analysis, fluid detection employs generalization of properties derived from expert-defined examples. Utilizing the differences between normal regional appearance of retinal layers as described by texture descriptors and other morphologic indices, a classifier can be trained to identify abnormal retinal appearance. The fluid detection starts with 3D OCT layer segmentation, resulting in 10 intraretinal layers, plus an additional artificial layer below the deepest intraretinal layer so that subretinal abnormalities can also be detected.123 Texture-based and morphologic descriptors are calculated regionally in rectangular subvolumes, the most discriminative descriptors are identified, and these descriptors are used for training a probabilistic classifier. The performance of a (set of) feature(s) is assessed by calculating the area under the ROC of the fluid classifier. Once the probabilistic classifier is trained, fluid-related probability is determined for each retinal location. In order to obtain a binary footprint for fluid in an image input to the system, the probabilities are thresholded and the footprint of the fluid region in this image is defined as the set of all pixels with a probability greater than a threshold. Useful 3D textural information can be extracted from SD-OCT scans and, together with an anatomical atlas of normal retinas, can be used for clinically important applications.

Fluid segmentation in 3D

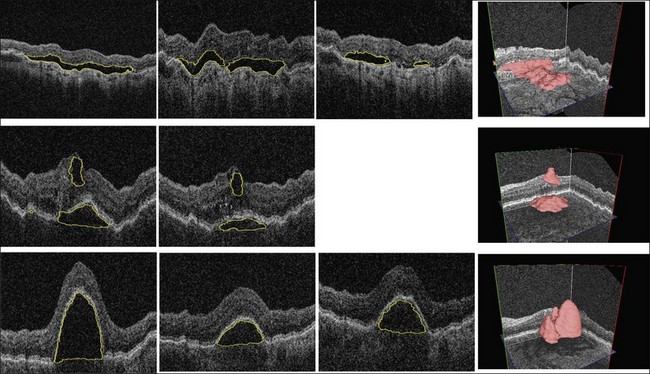

Complete volumetric segmentation of fluid from 3D OCT is the subject of active research. A promising approach is based on identification of a seed point in the OCT data set that is “inside” and “outside” a fluid region. These points can be identified automatically using a 3D variant of the probabilistic classification approach outlined in the previous paragraphs. Once these two points are identified, an automated segmentation procedure that is based on regional graph-cut method138,139 may be employed to detect the fluid volumetric region. The cost function utilized in a preliminary study was designed to identify dark 3D regions with somewhat homogeneous appearance. The desired properties of the fluid region are automatically learned from the vicinity of the identified fluid region seed point. This adaptive behavior allows the same graph-cut segmentation method driven by the same cost function to segment fluid of different appearance reliably. Figure 6.16 gives an example of 3D fluid region segmentations obtained using this approach. Note that the figure depicts the same locations in the 3D data sets imaged several times during the course of anti-VEGF treatment. The surfaces of the segmented fluid regions are represented by a 3D mesh, which can be interactively edited to maximize fluid region segmentation accuracy in difficult or ambiguous cases.

Fig. 6.16 Symptomatic exudate-associated derangement (SEAD) segmentation from three-dimensional optical coherence tomography and SEAD development over time. Top row: 0, 28, and 77 days after first imaging visit. Middle row: 0 and 42 days after first imaging visit. Bottom row: 0, 14, and 28 days after first imaging visit. Three-dimensional visualization in right column shows data from week 0. Each imaging session was associated with anti-vascular endothelial growth factor reinjection.

Multimodality retinal imaging

Multimodality imaging, defined as images from the same organ acquired using different techniques using different physical techniques, is becoming increasingly common in ophthalmology. For image information from multiple modalities to be usable in mutual context, images must be registered so that the independent information that was acquired by different methods can be concatenated and form a multimodality description vector. Thus, because of its importance in enabling multimodal analysis, retinal image registration reflects another active area of research. The several clinically used methods to image the retina were introduced above and include fundus photography, SLO, fluorescence imaging, and OCT. Additional retinal imaging techniques, such as hyperspectral imaging, oximetry, and adaptive optics SLO, will bring higher resolution and additional image information. To achieve a comprehensive description of retinal morphology and eventually function, diverse retinal images acquired by different or the same modalities at different time instants must be mutually registered to combine all available local information spatially. The following sections provide a brief overview of fundus photography and OCT registration approaches in both 2D and 3D; a more detailed review is available.19 Registration of retinal images from other existing and future imaging devices can be performed in a similar or generally identical manner.

Registration of fundus retinal photographs