Chapter 29 Error, Man and Machine

Much has been written recently about the lessons that medicine can take from aviation,1–3 but there is resistance to transferring ideas from other industries to healthcare. The usual reason given is that patients and their diseases are too complex to adapt to checklists and standard operating procedures. However, it is precisely because of this complexity that tools from aviation such as these can be life-saving. In acknowledgement of this, we already refer to algorithms such as those from the American Heart Association or European Resuscitation Council (ERC) to guide us as to the best practice to follow in resuscitating the patient.4,5

Human factors in aviation

Today, almost 80% of aircraft accidents are due to human performance failures, and the introduction of Human Factors consideration into the management of safety critical systems has stemmed from the recognition of human error as the primary cause in a significant number of catastrophic events worldwide. In the late 1970s, a series of aircraft accidents occurred where, for the first time, the investigation established that there was nothing wrong with the aircraft and that the causal factor was poor decision-making and lack of situational awareness. The nuclear industry (Three Mile Island, Chernobyl) and the chemical industry (Piper Alpha, Bhopal) suffered similar accidents, where it became evident that the same issues, i.e. problem solving, prioritizing, decision-making, fatigue and reduced vigilance, were directly responsible for the disaster. It was clear, however, that the two-word verdict, ‘human error’, did little to provide insight into the reasons why people erred, or what the environmental and systems influences were that made the error inevitable. There followed a new approach to accident investigation that aimed to understand the previously under-estimated influence of the cognitive and physiological state of the individuals involved, as well as the cultural and organizational environment in which the event occurred. The objective of ‘Human Factors’ in aviation, as elsewhere, is to increase performance and reduce error by understanding the personal, cognitive and organizational context in which we perform our tasks.

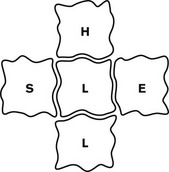

A commonly used diagram, which is useful in forming a basic understanding of the man/machine interface and human factors, is the SHEL model, first described by Edwards in 1972 and later refined by Hawkins in 1975 (Fig. 29.1). Each block represents one of the five components in the relationship, with liveware (human) always being in the central position. The blocks are, however, irregular in shape and must be carefully matched together in order to form a perfect fit. A mismatch highlights the potential for error.

• Liveware–Environment errors could be the product of extremely rushed procedures and items being missed. Long flights and excessive fatigue, or multiple, short busy flights, could also incur error as a direct product of the environment.

• Liveware–Software errors would naturally occur where information is delivered in a misleading or inaccurate manner, e.g. cluttered computer screens, or wrongly calculated weights and loads in published charts.

• Liveware–Hardware errors caused by poor design. Nearly 60 years ago, aircraft gear levers were located behind the pilot’s seat, along with various other handles that were indistinguishable on a dark and stormy night. Mis-selection caused so many accidents that a mirror was installed on the forward panel, in an attempt to help the pilot see the levers! It was not until Boeing realized that the levers should be at the front and directly visible that these particular accidents ceased. The design was further modified by varying the shape of the individual levers, thus providing the pilot with additional tactile confirmation. An extra margin of safety had thereby been introduced into the critical function of gear selection.

• Liveware–Liveware errors as a direct by-product of the quality of the team relationship, interaction, leadership and information flow (see Shiva factor below).

Understanding error

Aircraft accidents are seldom due to one single catastrophic failure. Investigations invariably uncover a chain of events – a trail of errors, where each event was inexorably linked to the next and each event was a vital contributor to the outcome. The advantage in this truth is that early error detection and containment can prevent links from ever forming a chain. Errors can be defined as lapses, slips and misses, or errors of omission and errors of commission (see Appendix 1 for definition of these terms in the context of the study of error). Some errors occur as a direct result of fatigue, where maintaining vigilance becomes increasingly difficult. Conversely, others are a product of condensed time frames, where items are simply missed. Whatever its source, it must be recognized that error is forever present in both operational and non-operational life. High error rates tell a story; they are indicative of a system that either gives rise to, or fails to prevent, them. In other words, when errors are identified, it should be appreciated that they are the symptoms and not the disease.

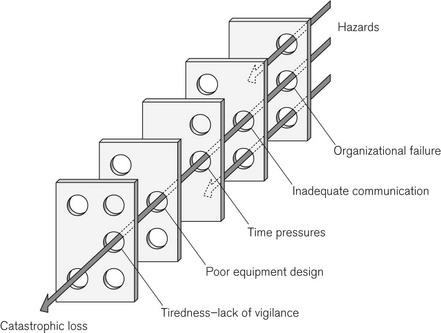

The ‘Swiss Cheese analogy’ is often used to explain how a system that is full of holes (ubiquitous errors, systems failures, etc.) can appear solid for most of the time. Thankfully, it is only rarely that the holes line up or cluster (e.g. adverse environmental circumstance + error + poor equipment design + fatigue) such that catastrophic failure occurs (Fig. 29.2). Error management aims to reduce the total number of holes, such that the likelihood of clustering by chance is reduced (see below).

Figure 29.2 The Swiss Cheese Model, originally by James Reason,6 demonstrates the multifactorial nature of a sample ‘accident’ and can be used to explain how latent conditions for an incident may lie dormant for a long time before combining with other failures (or viewed differently: breaches in successive layers of defences) to lead to catastrophe. As an example the organizational failure may be the expectation of an inadequately trained member of staff to perform a given task.
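The arithmetic behind the Swiss Cheese analogy can be illustrated with a minimal sketch. It assumes, purely for illustration, that each layer of defence fails independently with a known probability; Reason's model is qualitative and makes no such claim, and the layer values below are hypothetical rather than measured data. Under that assumption, the chance that the holes in every layer line up is the product of the individual probabilities.

```python
from math import prod


def breach_probability(hole_probs):
    """Chance that every layer fails at once, assuming independent layers."""
    return prod(hole_probs)


# Hypothetical per-layer failure probabilities (not measured data), e.g.
# environment, individual error, equipment design, fatigue.
baseline = [0.10, 0.05, 0.20, 0.10]

# Error management modelled as halving the "hole" probability in each layer.
improved = [p / 2 for p in baseline]

p_base = breach_probability(baseline)
p_improved = breach_probability(improved)

print(f"baseline: {p_base:.2e}, after error management: {p_improved:.2e}")
```

With four layers, halving the probability of a hole in each one reduces the chance of all four aligning by a factor of 16, which is why modest improvements across several defences can matter more than a large improvement in one.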

Root causes of adverse events

Once the catalyst event has occurred and the system faults have contributed to, rather than mitigated, the error, the patient or passengers must now rely on human beings within the system to prevent harm. The greatest tool in aviation and in medicine is situational awareness: the ability to keep the whole picture in focus, to not get so lost in detail that one forgets to fly the plane or to prioritize patient ventilation over achieving tracheal intubation.7

An example from aviation

The Captain was under pressure from the airline dispatcher to return the plane to the USA, so he continued, sometimes exerting as much as 150 pounds of force on the jackscrew assembly in order to maintain control of the aircraft. What the crew did not know was that when the MD 80 was designed, dissimilar metals were used in the construction of the jackscrew assembly. Because this assembly experienced heavy flight loads, the softer metal wore at a greater rate than the harder metal, and so the assembly became loose (Catalyst event).

Situational awareness

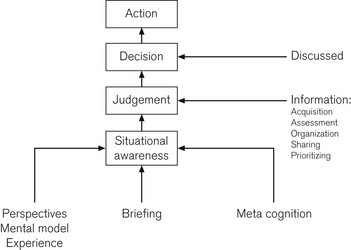

The term ‘situational awareness’ describes a dynamic state of (cognitive) awareness that allows for the integration of information and the use of it to anticipate changes in the current environment. Every individual has his or her own ‘picture’, or perception, of the environment. This mental model is informed by culture, training and previous experience. But no matter how familiar the territory, an individual’s perception of a situation may not be the right one and, if left unchallenged, could lead to faulty decisions and serious consequences. Crews are, therefore, taught to confer and cross-check their mental models before making assumptions or decisions that give rise to mistakes. They are thus enhancing their situational awareness and using this knowledge to make predictive judgements on the progress of the flight (Fig. 29.3).

In a dynamic, fluid environment, where there is little time to go back and correct errors, it has long been appreciated that it is better to spend time making accurate decisions than to be caught in a reactive mode of trying to correct error. Errors inevitably give rise to more errors, a process that can suck its victim down a root and branch corridor of mistakes literally miles away from the original task at hand.

Fatigue, vigilance and arousal

Medicine is replete with examples where fatigue has contributed to or caused an error. A study of interns (first year trainees) confirmed that there was a higher rate of needle stick injuries in those who were fatigued.8 The Australian Incident Monitoring Study (AIMS) showed fatigue to be a ‘factor contributing to the incident’ in 2.7% of voluntary, anonymous, self-reported incidents, and words such as ‘haste, inattention, failure to check’ often appeared in these reports.9 Fatigue-positive reports most commonly involved pharmacologic incidents such as syringe swaps and over- or under-dosage. A study of anaesthesia trainees at Stanford confirmed that chronic fatigue can be as harmful as acute fatigue.10

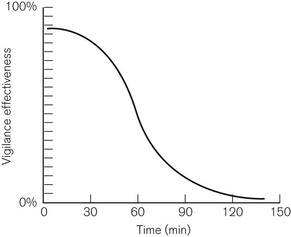

Vigilance, from the Latin vigilantia for watchfulness, is defined in the dictionary as ‘being keenly alert to danger’. In 1943, research by the Royal Air Force showed that vigilance, requiring continuous monitoring and detection of brief, low-intensity and infrequently occurring events over long periods, is poor. This is illustrated in Fig. 29.4, which shows rapid fall-off in vigilance after a period as short as half an hour. In acknowledgement of this, modern anaesthesia machines and monitors allow alarm parameters to be set for all measured variables; this level of vigilance monitoring was unheard of 25 years ago (see Chapter 4, The Anaesthetic Workstation).
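The way alarm limits take over part of the vigilance task can be sketched in a few lines. This is an illustrative outline only: the variable names and limit values are placeholders chosen for the example, not clinical recommendations, and they do not describe any particular monitor's software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlarmLimit:
    """Lower and upper alarm limits for one measured variable."""
    low: float
    high: float


def out_of_range(readings, limits):
    """Return the names of variables whose reading lies outside its limits."""
    return sorted(
        name
        for name, value in readings.items()
        if name in limits and not (limits[name].low <= value <= limits[name].high)
    )


# Placeholder limits and readings for illustration only.
limits = {
    "spo2_percent": AlarmLimit(low=92, high=100),
    "heart_rate_bpm": AlarmLimit(low=50, high=120),
}
readings = {"spo2_percent": 89, "heart_rate_bpm": 80}

breaches = out_of_range(readings, limits)
print(breaches)
```

The monitor checks every reading against its limits on every cycle, which is exactly the kind of continuous, low-event-rate task at which human vigilance falls off.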

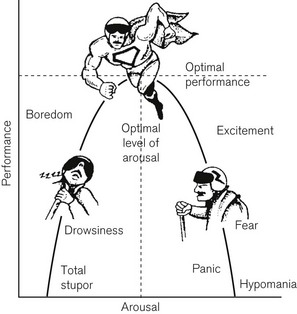

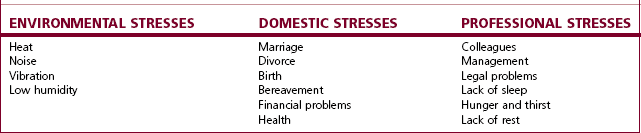

Arousal is the level of ‘wakefulness’. For any task there is a level of arousal at which one performs most efficiently, as shown in Fig. 29.5. Surprisingly, this optimal level decreases as the difficulty of the task increases. As such, overarousal often occurs in emergency situations when difficult tasks may need to be carried out rapidly. Underarousal for a particular task slows decision-making and makes it less accurate and additionally reduces vigilance. Underarousal also occurs with boredom and sleep deprivation. Table 29.1 shows some common stressors, which adversely affect performance.

Communication styles

In 2000 Sexton and others reported on ‘error, stress and teamwork in medicine and aviation’.2 They found that at that time, although most intensive care medical staff and pilots were in favour of a flat hierarchy, only 68% of surgeons favoured this. A flat hierarchy is not the answer to all of our communication difficulties in the operating room; decisions still have to be made, and at times each of us needs to assume the role of team leader. However, it is critical in the operating theatre that the anaesthetist is open to receiving input from others, including trainees and others whom he or she may consider to be in a subordinate role.

The Shiva factor

We believe that there are four identifiable steps which humans take that lead to adverse events. These we have collectively termed the ‘Shiva factor’, named after the head of the Hindu Trimurti, Shiva, who can be both the preserver of life, and the destroyer.1

2. Analyzing the facts to support a decision you have already made

3. Disregarding the advice or input of others

4. Persisting in a course of action despite a deteriorating condition due to loss of situational awareness.

The ‘Shiva factor’ in action was vividly displayed on 13 January 1982, at Washington DC National Airport. An unusually severe snowstorm had closed the airport, but by mid-afternoon it was reopened, and several aircraft had departed safely. An Air Florida Boeing 737-200 (Palm 90) then crashed in the Potomac River, hitting the 14th Street Bridge with the loss of 78 lives. The postcrash investigation revealed that the flight crew had used inappropriate de-icing procedures. The captain, possibly unaware of the proper procedures (as he was from a Florida-based airline with little experience in severe winter weather; lack of proficiency), ‘postured’ in an attempt to mitigate his own fears and concerns as the crisis developed. His tone of voice on the cockpit voice recorder did not convey appropriate concern about the prevailing conditions (Shiva factor 1) and when his first officer questioned him, he was quick to silence him, attempting to appear as if he had everything under control (Shiva factor 3). In attempts to push back from the gate, the captain used reverse thrust to aid the tug. This was not a wise or approved procedure for the existing conditions. The snow, which was blown forward, plugged the pitot tubes on the intake of the engines. The pressures from the pitot system are used to generate an engine pressure ratio (EPR), which pilots use to confirm their power settings. The flight crew failed to turn on the engine anti-ice system, which should have prevented the excessively high false reading. All the other indications showed that the EPR gauge was wrong. The first officer brought this to the attention of the captain, who has the sole authority to reject the take off. Initially he was ignored (Shiva factor 3). When he again questioned the accuracy of the indications and questioned the captain’s assessment, he was overruled (Shiva factor 2, Shiva factor 4).
They continued the take off at a reduced power setting (because the EPR gauge falsely indicated that they had all the power that was available). Even when the stall warning system issued the appropriate warnings (no failure of technology) they failed to push the thrust levers fully up (failure of proficiency and judgement). That simple action, taken at the right time, might have averted the disaster.

Volant diagram

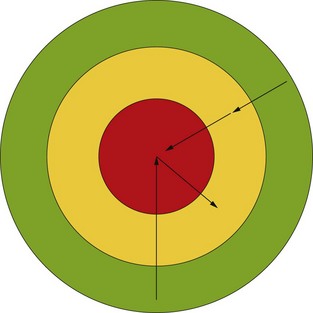

This reverse Volant diagram (Fig. 29.6) illustrates a global view of a situation. Red indicates a critical situation, yellow/amber indicates a situation that is not perfect but may be good enough to allow the team to stabilize the patient and to think about how best to resolve the problem. Green indicates an optimum situation with all systems functioning as intended. If a catastrophic (red) situation arises our tendency is to want to go back to green quickly and to hope that no one noticed. An example of this in practice is the unanticipated difficult airway. Faced with a patient who is difficult to intubate, our tendency is to want to try to accomplish that task, especially if we did not expect it to be difficult. We want to be back in the green and we hope no one will notice (Shiva factor 1). However, repeated attempts to intubate the patient may worsen the situation resulting in the feared combination of cannot intubate/cannot ventilate. Guidelines from the American Society of Anesthesiologists and the Difficult Airway Society in the UK11,12 do not recommend repeated intubation attempts and yet anaesthetists still have a tendency to persist in this path (Shiva factor 4). A much safer path is to return to mask ventilation or to place a laryngeal mask airway, an amber/yellow situation. This buys thinking time, so that additional technology may be brought in (difficult airway equipment), additional proficiency may be added (more/different personnel), guidelines can be referred to (standard operating procedure) and the judgement of the individual trying to intubate the patient can be enhanced by using the collective wisdom of those around him.

Error management

Helmreich defines error management as ‘the process of correcting an error before it becomes consequential to safety’.13

Briefings continue throughout all major phases of flight. At particularly crucial phases of flight, e.g. approach and landing, the briefing rate increases and the ‘challenge/response’ use of checklists becomes more critical in error capture and mitigation. In this instance, the external environment is considered replete with potential ‘threats’ which, if the crew do not recognize and manage them, will cause errors. The greater the understanding of the threat posed by the circumstances, the lower the likelihood of error arising. It is perhaps this discipline of briefing, conferring and cross-checking that most markedly distinguishes the aviation industry from anaesthesia and medicine.

Barriers for safety

Checklists

Checklists14 introduce consistency and uniformity in performance, across a broad spectrum of individuals and situations, and form the basis for successful implementation of evidence-based standard operating procedures.

Human memory is notoriously unreliable for consistency in complex procedures. It worsens if you add stress and fatigue.15 In a hierarchical situation, the mandatory use of checklists can empower subordinates to insist on the adherence to approved and safe procedures.

In aviation, checklists are used in both normal and abnormal situations. They may be done individually or in a pair, with one pilot doing and the other confirming each step. Checklists may be simply a list, or may follow a flow pattern or algorithm. For abnormal checklists, there may be a quick reference handbook, with supplemental information in a pilot handbook. A similar situation in anaesthesia would be a cardiac arrest due to local anaesthetic, where one would initially refer to the advanced cardiac life support (ACLS) or ERC guidelines, but may then refer to other materials for guidance about Intralipid dosing,16 which is specific to this situation.

In an emergency situation, such as an engine fire, the traditional approach was to use a memorized checklist. In medicine we rely on memorized lists, not wishing to appear lacking in knowledge. However, in highly stressful situations, items may be forgotten, so aviation has moved to a system where memorization of the first critical step is expected but then an electronic or paper checklist is used. An example would be a rapid decompression incident, where the first step is that the pilot must secure his or her oxygen mask; subsequent steps are done using a checklist. The non-flying pilot reads and performs each step on the checklist; the flying pilot confirms steps, but has no other responsibility besides flying the plane. An example from anaesthesia would be the management of malignant hyperthermia. This rare complication will be encountered by only a few anaesthetists during their careers, but each must know how to respond. The first step is to discontinue trigger agents and then to ‘turn up the oxygen and call for help’: advice that has been given to trainees for years. All subsequent steps may be guided by a written or web-based checklist.17

An example from aviation

On British Midland Flight 92, on 8 January 1989, cross wiring of the engine fire warning system gave false information when the pilots were dealing with an engine fire emergency. There were other indicators available to allow the correct course of action. While the captain was in the process of confirming which engine was on fire, by checking the corresponding gauges, he was interrupted by air traffic control (ATC) clearing him to a lower altitude. He did not resume the checklist after his transmission to ATC, but proceeded to shut down a perfectly good engine. The engine which was failing and about to disintegrate remained the sole source of propulsion. The aircraft crashed on a motorway just short of the runway with massive loss of life (Fig. 29.7).
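The interrupted checklist in this account points to one property that an electronic checklist aid could offer: remembering which steps have been confirmed, so that work resumes at the first unconfirmed step after an interruption. The following is a minimal sketch of that idea, not a description of any aircraft or hospital system. The `Checklist` class and its methods are hypothetical, and the step wording is taken loosely from the malignant hyperthermia example in this chapter for illustration, not as clinical guidance.

```python
from dataclasses import dataclass, field


@dataclass
class Checklist:
    """Challenge/response checklist that remembers which steps are confirmed."""
    steps: list
    confirmed: set = field(default_factory=set)

    def next_step(self):
        """Return (index, text) of the first unconfirmed step, or None when done."""
        for i, text in enumerate(self.steps):
            if i not in self.confirmed:
                return i, text
        return None

    def confirm(self, index):
        """Record the second team member's confirmation; order is enforced."""
        expected = self.next_step()
        if expected is None or expected[0] != index:
            raise ValueError(f"step {index} confirmed out of order")
        self.confirmed.add(index)

    def complete(self):
        """True once every step has been confirmed."""
        return self.next_step() is None


# Illustrative steps only; a real checklist would come from an approved source.
mh = Checklist([
    "Discontinue trigger agents",
    "Turn up the oxygen and call for help",
    "Follow written or web-based checklist",
])

mh.confirm(0)
# ... an interruption occurs here (for example, a radio call or a page) ...
resume = mh.next_step()
print(resume)
```

Because confirmation is only accepted for the next outstanding step, a step skipped during an interruption cannot be silently passed over; the attempt raises an error instead.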

Appendix 1

Glossary

Error of commission: Generic term for error arising from an intended act against recommended procedure.

Error of omission: Generic term for error arising from an item or action being unintentionally missed.

Lapses: Unintended ‘oversight’ of information after the planning stage and before action.

Mishaps: Generic term for an unfortunate event.

Misses: Errors arising from items overlooked at planning stage.

Mistakes: Error incurred when a properly selected and applied action will not result in the desired outcome.

Slips: Unintended actions/inactions occurring during the execution of familiar or pre-planned procedures (slip of memory).

Violation: A deliberate action against the recommended procedure, which may, however, be the safest choice at the time. These occur when there is a conflict between the task and the recommended procedure versus the safest outcome.

1 Rampersad C, Rampersad SE. Can medicine really learn anything from aviation? Or are patients and their disease processes too complex? Semin Anesth. 2007;26:158–166.

2 Sexton JB, Thomas EJ, Helmreich RL. Error, stress, and teamwork in medicine and aviation: cross-sectional surveys. BMJ. 2000;320:745–749.

3 Sexton JB, Marsch SC, Helmreich RL, Betzendoefer D, Kocher T, Scheidegger D, and the TOMS team. Jumpseating in the operating room. In: Henson L, Lee A, Basford A, eds. Simulators in anesthesiology education. New York: Plenum; 1998:107–108.

4 ECC Committee, Subcommittees and Task Forces of the American Heart Association. American Heart Association guidelines for cardiopulmonary resuscitation and emergency cardiovascular care. Circulation. 2005;112(24 Suppl):IV1–203. Epub 2005 Nov 28

5 Nolan JP, Baskett PJF, eds. European Resuscitation Council guidelines for resuscitation 2005. Resuscitation. 2005;67(1 Suppl):S1–S190.

6 Reason JT. Managing the risks of organizational accidents. Aldershot: Ashgate; 1997.

7 http://www.chfg.org/resources/07_qrt04/Anonymous_Report_Verdict_and_Corrected_Timeline_Oct_07.pdf. Accessed July 17 2011

8 Ayas NT, Barger LK, Cade BE, Hashimoto DM, Rosner B, Cronin JW, et al. Extended work duration and the risk of self-reported percutaneous injuries in interns. JAMA. 2006;296:1055–1062.

9 Morris GP, Morris RW. Anaesthesia and fatigue: an analysis of the first 10 years of the Australian Incident Monitoring Study 1987–1997. Anaesth Intensive Care. 2000;28:300–304.

10 Howard SK, Gaba DM, Rosekind MR, Zarcone VP. The risks and implications of excessive daytime sleepiness in resident physicians. Acad Med. 2002;77:1019–1025.

11 American Society of Anesthesiologists Task Force on Management of the Difficult Airway. Practice guidelines for management of the difficult airway: an updated report by the American Society of Anesthesiologists Task Force on Management of the Difficult Airway. Anesthesiology. 2003;98:1269–1277.

12 Henderson JJ, Popat MT, Latto IP, Pearce AC. Difficult Airway Society guidelines for management of the unanticipated difficult intubation. Anaesthesia. 2004;59:675–694.

13 Helmreich RL, Merritt AL. Culture at work in aviation and medicine. Aldershot: Ashgate; 1998.

14 Hales BM, Pronovost PJ. The checklist – a tool for error management and performance improvement. J Crit Care. 2006;21:231–235.

15 Bourne LE, Yaroush RA. Stress and cognition: a cognitive psychological perspective. NASA. 2003:1–121.

16 Picard J, Meek T. Lipid emulsion to treat overdose of local anaesthetic: the gift of the glob. Anaesthesia. 2006;61:107–109. (editorial)

17 http://medical.mhaus.org. Accessed 3 December 2008

18 Ralston M, Hazinski MF, Zaritsky AL, Schexnayder SM, Kleinman ME, eds. Pediatric advanced life support professional course guide, part 5 resuscitation team concept. Dallas: American Heart Association, 2006.

Dekker S. Just culture – balancing safety and accountability. Farnham, UK: Ashgate Books; 2007.

Dekker S. The Field Guide to Understanding Human Error. Farnham, UK: Ashgate Books; 2010.

Dismukes K, Berman BA, Loukopoulos LD. The limits of expertise: rethinking pilot error and the causes of airline accidents. Farnham, UK: Ashgate Books; 2007.

Flin R, O’Connor P, Crichton M. Safety at the sharp end – a guide to non-technical skills. Farnham, UK: Ashgate Books; 2008.

The above courtesy of Team Resource Management, Terema Ltd UK

Gawande A. Complications – a surgeon’s notes on an imperfect science. London: Picador; 2003.

Gladwell M. Blink – the power of thinking without thinking. New York: Back Bay Books; 2005.

Vincent C. Patient safety. Edinburgh: Elsevier; 2006.

UK portal for further information on human factors: Clinical Human Factors Group, www.chfg.org.

http://asrs.arc.nasa.gov/docs/cb/cb_346.pdf. Accessed 3 December 2008

Winters BD, Gurses AP, Lehmann H, Sexton JB, Rampersad CJ, Pronovost PJ. Clinical review: checklists – translating evidence into practice. Crit Care. 2009;13(6):210. Available online http://ccforum.com/content/13/6/210