Chapter 1 Ensuring Patient Safety in Surgery―First Do No Harm

Primum non nocere: first do no harm. This often-quoted phrase epitomizes the importance the medical community places on avoiding iatrogenic complications.1 In the process of providing care, patients, physicians, and the entire clinical team join forces, using all available medical weapons against disease in order to change the natural history of pathologic processes. Iatrogenic injury or, simply, “treatment-related harm” occurs when this implicit rule to “first do no harm” is violated. Both society and the medical community have historically been intolerant of medical mistakes, associating them with negligence. The fact is that complex medical care is prone to failure. Medical mistakes are much like “friendly-fire” incidents in which soldiers in the high-tempo, complex fog of war mistakenly kill comrades rather than the enemy. Invariably, medical error and iatrogenic injury are associated with multiple latent conditions (constraints, hazards, system vulnerabilities, etc.) that predispose front-line clinicians to err. This chapter reviews the science of human error in medicine and surgery and uses the specific case of wrong-sided brain surgery to illustrate the implementation of emerging strategies for enhancing patient safety.

The Nature of Iatrogenic Injury in Medicine and Surgery

The earliest practitioners of medicine recognized and described iatrogenic injury. Iatrogenic (Greek, iatros = doctor, genic = arising from or developing from) literally translates to “disease or illness caused by doctors.” Famous examples exist of likely iatrogenic deaths, such as that of George Washington, who died while being treated with blood-letting for a severe throat infection. The Royal Medical and Surgical Society, in 1864, documented 123 deaths that “could be positively assigned to the inhalation of chloroform.”2 Throughout history, physicians have reviewed unexpected outcomes related to the medical care they provided in order to learn from and improve that care. The “father” of modern neurosurgery, Harvey Cushing, and his contemporary Sir William Osler modeled the practice of learning from error by publishing their errors openly to warn others how to avert similar occurrences.3–5 However, the magnitude of iatrogenic morbidity and mortality was not quantified across the spectrum of health care until the Harvard Practice Study, published in 1991.6 This seminal study estimated that iatrogenic failure occurs in approximately 4% of all hospitalizations and is the eighth leading cause of death in America, responsible for up to 100,000 deaths per year in the United States alone.7

A subsequent review of over 14,700 hospitalizations in Colorado and Utah identified 402 surgical adverse events, corresponding to an annual incidence rate of 1.9%.8 These surgical adverse events were categorized by type of injury and by preventability (Table 1-1).

Table 1-1 Surgical Adverse Events Categorized by Type of Injury and Preventability

| Type of Event | Percentage of Adverse Events | Percentage Preventable |
|---|---|---|
| Technique-related complication | 24 | 68 |
| Wound infection | 11 | 23 |
| Postoperative bleeding | 11 | 85 |
| Postpartum/neonatal related | 8 | 67 |
| Other infection | 7 | 38 |
| Drug-related injury | 7 | 46 |
| Wound problem (noninfectious) | 4 | 53 |
| Deep venous thrombosis | 4 | 18 |
| Nonsurgical procedure injury | 3 | 59 |
| Diagnostic error/delay | 3 | 100 |
| Pulmonary embolus | 2 | 14 |
| Acute myocardial infarction | 2 | 0 |
| Inappropriate therapy | 2 | 100 |
| Anesthesia injury | 2 | 45 |
| Congestive heart failure | 1 | 33 |
| Stroke | 1 | 0 |
| Pneumonia | 1 | 65 |
| Fall | 0.5 | 50 |
| Other | 5.5 | 32 |

These two studies were designed to characterize iatrogenic complications across health care. Although they were not statistically powered to allow analysis by surgical subspecialty, the types of failures and subsequent injuries they identified can likely be generalized to the neurosurgical patient population. More recent literature supports the findings of these landmark studies.9–11

The Institute of Medicine used the Harvard Practice Study as the basis for its report, which endorsed the need to discuss and study errors openly with the goal of improving patient safety.7 That report, “To Err Is Human: Building a Safer Health System,” was published in 1999, focused on medical errors and their prevention, and must be considered a landmark publication.12 Other quality improvement initiatives, such as the Joint Commission on Accreditation of Healthcare Organizations (JCAHO) Sentinel Events Program, have pursued the same goal.12

One might argue that morbidity and mortality reviews already achieve this aim. The “M&M” conference has a long history of reviewing negative outcomes in medicine. The goal of this traditional conference is to learn how to prevent future patients from suffering similar harm, and thus incrementally improve care. However, frank discussion of error is limited in M&M conferences, and actual review practices fail to support deep learning regarding systemic vulnerabilities.13 Indeed, because M&M conferences do not explicitly require medical errors to be reviewed, errors are rarely addressed. One prospective investigation of four U.S. academic hospitals found that a resident who faithfully attended weekly internal medicine M&M conferences for an entire year would hear errors discussed only once. Surgical M&M conferences addressed error more often. However, although surgeons discussed adverse events associated with error 77% of the time, individual provider error was the focus of the discussion and was cited as the cause of the negative outcome in 8 of 10 conference discussions.13 Surgical conference discussion rarely identified structural defects, resource constraints, team communication, or other system problems. Further limiting its utility, the M&M conference is reactive by nature and highly subject to hindsight bias. The same reactive, provider-centered approach underlies most clinical outcome reviews, which focus solely on medical providers and their decision making.14 In their report “Nine Steps to Move Forward from Error,” human factors experts Cook and Woods challenged the medical community to resist the temptation to simplify the complexities that practitioners face when accidents are reviewed post hoc. Premature closure by blaming the closest clinician hides the deeper patterns and multiple contributors associated with failure, and ultimately leads to naive “solutions” that are weak or even counterproductive.15 The Institute of Medicine has also cautioned against blaming an individual and recommending training as the sole outcome of case review.7 Although the culture within medicine is to learn from failure, the M&M conference does not typically achieve this aim.

A Human Factors Approach to Improving Patient Safety

Murphy’s law, that whatever can go wrong will, is the common-sense explanation for medical mishaps. The science of safety (and how to create it), however, is not common sense. The field of human factors engineering grew out of a focus on human interaction with physical devices, especially in military or industrial settings. This initial focus on improving human performance addressed the problem of workers at high risk for injury while using tools or machines in high-hazard industries. In the past several decades, the scope of this science has broadened. Human factors engineering is now credited with advancing safety and reliability in aviation, nuclear power, and other high-hazard work settings. Membership in the Human Factors and Ergonomics Society in North America alone has grown to over 15,000. Human factors engineering and related disciplines are deeply interested in modeling and understanding the mechanisms of complex system failure. Furthermore, these applied sciences have developed strategies for designing error prevention and building error tolerance into systems to increase reliability and safety, and these strategies are now being applied to the healthcare industry.16–21 The specialty of anesthesiology has employed this science to reduce the anesthesia-related mortality rate from approximately 1 in 10,000 in the 1970s to approximately 1 in 250,000 three decades later.22 In 1978, a bioengineer, Jeffrey Cooper, used critical incident analysis to identify preventable anesthesia mishaps.23 Cooper’s seminal work was supplemented by the “closed-claim” liability studies, which delineated the most common and severe modes of failure and the factors that contributed to them. The specialty of anesthesiology and its leaders endorsed the precept that safety stems more from improved system design than from increased vigilance of individual practitioners.
As a direct result, anesthesiology was the first specialty to adopt minimal standards for care and monitoring, preanesthesia equipment checklists similar to those used in commercial aviation, standardized medication labels, interlocking hardware to prevent gas mix-ups, international anesthesia machine standards, and high-fidelity human simulation to support crisis team training in the management of rare events. Lucian Leape, a former surgeon, one of the lead authors of the Harvard Practice Study, and a national advocate for patient safety, has stated, “Anesthesia is the only system in healthcare that begins to approach the vaunted ‘six sigma’ (a defect rate of 1 in a million) level of clinical safety perfection that other industries strive for. This outstanding achievement is attributable not to any single practice or development of new anesthetic agents or even any type of improvement (such as technological advances) but to application of a broad array of changes in process, equipment, organization, supervision, training, and teamwork. However, no single one of these changes has ever been proven to have a clear-cut impact on mortality. Rather, anesthesia safety was achieved by applying a whole host of changes that made sense, were based on an understanding of human factors principles, and had been demonstrated to be effective in other settings.”24 The Anesthesia Patient Safety Foundation, which has become the clearinghouse for patient safety successes in anesthesiology, was used as a model by the American Medical Association to form the National Patient Safety Foundation in 1996.25 Over the subsequent decade, the science of safety has begun to permeate health care.

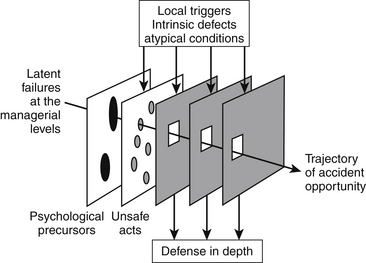

The human factors psychologist James Reason has characterized accidents as evolving over time and as virtually never being the consequence of a single cause.26,27 Rather, he describes accidents as the net result of local triggers that initiate and then propagate an incident through a hole in one layer of defense after another until irreversible injury occurs (Fig. 1-1). This model has been referred to as the “Swiss cheese” model of accident causation. Surgical care consists of thousands of tasks and subtasks. Errors in the execution of these tasks need to be prevented, detected, and managed, or tolerated. The layers of Swiss cheese represent the system of defenses against such error. Latent conditions is the term used to describe “accidents waiting to happen” that are the holes in each layer that will allow an error to propagate until it ultimately causes injury or death. The goal in human factors system engineering is to know all the layers of Swiss cheese and create the best defenses possible (i.e., make the holes as small as possible). This very approach has been the centerpiece of incremental improvements in anesthesia safety.
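The Swiss cheese model can be given a deliberately simplified numerical reading. If each layer of defense is assumed to fail independently, letting a given error through with some probability (the size of its hole), then the chance that the error propagates all the way to the patient is the product of those probabilities. The layer names and probabilities below are hypothetical, and independence is the sketch's weakest assumption: latent conditions often open holes in several layers at once, which makes the real risk higher than the product suggests.

```python
from math import prod

# Hypothetical layers of defense against wrong-site surgery and the ASSUMED
# probability that each layer fails to stop an error (the size of its "hole").
LAYERS = {
    "surgical plan and consent": 0.10,
    "preoperative site marking": 0.20,
    "preincision time out": 0.10,
}


def p_error_reaches_patient(hole_sizes) -> float:
    """Probability an error passes every layer, ASSUMING the layers fail independently."""
    return prod(hole_sizes)


baseline = p_error_reaches_patient(LAYERS.values())

# Tighten one layer: assume the time out now misses only 5% of errors.
tightened = {**LAYERS, "preincision time out": 0.05}
improved = p_error_reaches_patient(tightened.values())

print(f"baseline: {baseline:.4f}, after tightening one layer: {improved:.4f}")
```

Under these assumptions, halving the hole in any single layer halves the overall risk, which is the arithmetic behind tightening defenses one hole at a time; removing a layer entirely (a hole probability of 1.0) multiplies the risk by the inverse of that layer's hole size.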

One structured approach designed to identify all holes in the major layers of cheese in medical systems has been described by Vincent.28,29 He classifies the major categories of factors that contribute to error as follows:

1. Patient factors: condition, communication, availability and accuracy of test results, and other contextual factors that make a patient challenging

2. Task factors: use of an organized approach for reliable task execution, availability and use of protocols, and other aspects of task performance

3. Practitioner factors: deficits and failures by any individual member of the care team that undermine management of the problem space in terms of knowledge, attention, strategy, motivation, physical or mental health, and other factors that degrade individual performance

4. Team factors: verbal/written communication, supervision/seeking help, team structure and leadership, and other failures in communication and coordination among members of the care team such that management of the problem space is degraded

5. Working conditions: staffing levels, skills mix and workload, availability and maintenance of equipment, administrative and managerial support, and other aspects of the work domain that undermine individual or team performance

6. Organization and management factors: financial resources, goals, policy standards, safety culture and priorities, and other factors that constrain local microsystem performance

7. Societal and political factors: economic and regulatory issues, health policy and politics, and other societal factors that set thresholds for patient safety

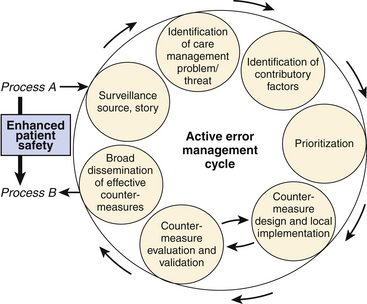

If this schema is used to structure a review of a morbidity or mortality, that review will be extended beyond myopic attention to the singular practitioner. Furthermore, the array of identified factors that undermine safety can then be countered systematically by tightening each layer of defense, one hole at a time. I have adapted active error management as described by Reason and others into a set of steps for making incremental systemic improvements to increase safety and reliability. In this adaptation, a cycle of active error management consists of (1) surveillance to identify potential threats, (2) investigation of all contributory factors, (3) prioritization of failure modes, (4) development of countermeasures to eliminate or mitigate individual threats, and (5) broad implementation of validated countermeasures (Fig. 1-2).

FIGURE 1-2 Sequence of steps for identifying vulnerabilities and then implementing corrective measures.

(Copyright Blike 2002.)
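Step 3 of this cycle, prioritization of failure modes, can be illustrated with the figures in Table 1-1. One crude, hypothetical scoring rule multiplies each event type's share of surgical adverse events by the percentage judged preventable, which estimates the share of all surgical adverse events that were both of that type and preventable. This rule is an assumption for illustration, not a method described by the study or by the error-management cycle; a real prioritization would also weigh severity and the feasibility of countermeasures.

```python
# Rows of Table 1-1: (type of event, % of surgical adverse events, % preventable).
TABLE_1_1 = [
    ("Technique-related complication", 24, 68),
    ("Wound infection", 11, 23),
    ("Postoperative bleeding", 11, 85),
    ("Postpartum/neonatal related", 8, 67),
    ("Other infection", 7, 38),
    ("Drug-related injury", 7, 46),
    ("Wound problem (noninfectious)", 4, 53),
    ("Deep venous thrombosis", 4, 18),
    ("Nonsurgical procedure injury", 3, 59),
    ("Diagnostic error/delay", 3, 100),
    ("Pulmonary embolus", 2, 14),
    ("Acute myocardial infarction", 2, 0),
    ("Inappropriate therapy", 2, 100),
    ("Anesthesia injury", 2, 45),
    ("Congestive heart failure", 1, 33),
    ("Stroke", 1, 0),
    ("Pneumonia", 1, 65),
    ("Fall", 0.5, 50),
    ("Other", 5.5, 32),
]


def preventable_share(pct_of_events: float, pct_preventable: float) -> float:
    """Percentage of ALL surgical adverse events that were of this type AND preventable."""
    return pct_of_events * pct_preventable / 100


def rank_by_preventable_share(rows):
    """Sort event types by preventable share, largest first (a hypothetical scoring rule)."""
    scored = [(name, preventable_share(pct, prev)) for name, pct, prev in rows]
    return sorted(scored, key=lambda item: item[1], reverse=True)


for name, share in rank_by_preventable_share(TABLE_1_1)[:5]:
    print(f"{name}: {share:.1f}% of all surgical adverse events")
```

On these figures, technique-related complications (about 16% of all surgical adverse events) and postoperative bleeding (about 9%) head the list, whereas wound infection, although as common as bleeding, ranks much lower because only 23% of wound infections were judged preventable.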

A comprehensive review of the science of human factors and patient safety is beyond the scope of this chapter; neurosurgical patient safety, including ethical issues and the impact of legal liability, has been reviewed elsewhere.30 Safety in aviation and nuclear power has taken over four decades to achieve the cultural shift that supports a robust system of countermeasures and defenses against human error. However, an example can usefully illustrate some of the human factors principles introduced here. Consider the following case as a window into the future of managing the most common preventable adverse events associated with surgery (see Table 1-1).

Example of Medical Error: “Wrong-Sided Brain Surgery”

Wrong-site surgery is an example of an adverse event that seems as though it should “never happen.” However, given over 40 million surgical procedures annually, we should not be surprised when it occurs. The news media have diligently reported wrong-site surgical errors, especially when they involve neurosurgery. Headlines such as “Brain Surgery Was Done on the Wrong Side, Reports Say” (New York Daily News, 2001) and “Doctor Who Operated on the Wrong Side of Brain Under Scrutiny” (New York Times, 2000) are inevitable when wrong-site brain surgery occurs.31–33 As predicted, these are not isolated stories. A recent report from the state of Minnesota found 13 instances of wrong-site surgery in a single year during which approximately 340,000 surgeries were performed.34 No hospital appeared immune to what seems on the surface to be such a blatant mistake. Indeed, an incomplete registry collecting data on wrong-site surgery since 2001 now includes over 150 cases. Of the 126 cases reviewed, 41% involved orthopedic surgery, 20% general surgery, 14% neurosurgery, 11% urologic surgery, and the remainder other surgical specialties.35 In a recent national survey,36 the incidence of wrong-sided surgery for cervical discectomies, craniotomies, and lumbar surgery was 6.8, 2.2, and 4.5 per 10,000 operations, respectively.
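To compare the Minnesota figure with the national survey, both can be expressed per 10,000 operations. This is a rough sketch: the Minnesota denominator is approximate and covers all surgery, while the survey rates are procedure-specific, so differences in population, definitions, and reporting limit the comparison.

```python
def rate_per_10k(events: int, operations: int) -> float:
    """Convert a raw event count into events per 10,000 operations."""
    return events / operations * 10_000


# Minnesota: 13 wrong-site events in one year, all surgery (denominator approximate).
minnesota = rate_per_10k(13, 340_000)
print(f"Minnesota, all surgery: {minnesota:.2f} per 10,000")

# National survey: procedure-specific rates, already expressed per 10,000.
survey = {"cervical discectomy": 6.8, "craniotomy": 2.2, "lumbar surgery": 4.5}
for procedure, rate in survey.items():
    print(f"{procedure}: {rate} per 10,000, about {rate / minnesota:.0f}x the Minnesota rate")
```

On these numbers the survey's spine and cranial rates come out roughly 6 to 18 times the all-surgery Minnesota rate of about 0.38 per 10,000, which fits the observation that sided and multilevel neurosurgical procedures carry elevated risk.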

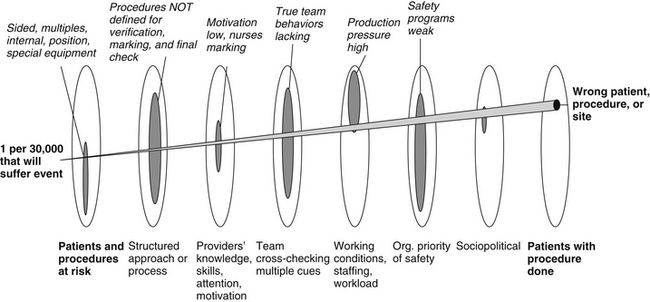

Sensational front-page coverage fails to identify the deeper second story behind these failures and how to prevent future failures through the creation of safer systems.37 In this example, we analyze the contributory factors associated with wrong-site surgery to reveal the many holes in the defensive layers of “cheese.” These holes will need to be eliminated to truly reduce the frequency of this already rare event and create more reliable care for our patients.

Contributory Factor Analysis

Patient Factors Associated with Wrong-Site Surgery

Patient Condition (Medical Factors That If Not Known Increase the Risk for Complications)

Neurosurgical patients are at higher-than-average risk for wrong-patient surgery. Patients and their surgical conditions contribute to error. When asked on the morning of surgery what surgery they are having, only 70% of patients can correctly state and point to the location of the planned surgical intervention.38 Patients are a further source of misinformation about surgical intent when the pathology and symptoms are contralateral to the site of surgery, a common situation in neurosurgical cases. Patients scheduled for brain surgery and carotid surgery often confuse the side of the surgery with the side of their symptoms. Patients with educational or language barriers or cognitive deficits are more vulnerable because they cannot accurately communicate their surgical condition or the planned surgery.

Certain operations in the neurosurgical population pose a higher risk for wrong-site surgery. Whereas left–right symmetry and sidedness represent one high-risk class of surgery, spinal procedures involving multiple levels represent another.39

Communication (Factors That Undermine the Patient’s Ability to Be a Source of Information Regarding Conditions That Increase the Risk for Complications and Need to Be Managed)

Obviously, patients with language barriers or cognitive deficits represent a group that may be unable to communicate their understanding of the surgical plan. This can increase the chance of patient identification errors that lead to wrong-site surgery. In a busy practice, patients requiring the same surgery might be scheduled in the same operating room (OR); it is not uncommon to perform five carotid endarterectomies in a single day.40 When one patient is delayed and the order is switched to keep the OR moving, this vulnerability is expressed. Patients with common names are especially at risk. A 500-bed hospital will have approximately 1,000,000 patients in its medical record system. About 10% of these patients will share a first and last name with another patient, and 5% will have a first, middle, and last name in common with one other individual. Only by cross-checking the name with one other patient identifier (either birth date or medical record number) can wrong-patient errors be trapped.41
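The cross-check described above can be sketched as a simple matching rule: a name match is never accepted on its own and must be confirmed by a second identifier. The record fields, the identifier format, and the policy of accepting either birth date or medical record number as the second identifier are assumptions for illustration; a stricter local policy might require both.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PatientRecord:
    """Hypothetical minimal identity record; real systems hold many more fields."""
    full_name: str
    birth_date: date
    mrn: str  # medical record number (the format used here is made up)


def identity_confirmed(expected: PatientRecord, presented: PatientRecord) -> bool:
    """Accept identity only if the name matches AND at least one other identifier matches.

    A name match alone is never sufficient, because many patients share names.
    """
    name_match = expected.full_name.casefold() == presented.full_name.casefold()
    second_match = expected.birth_date == presented.birth_date or expected.mrn == presented.mrn
    return name_match and second_match


# Two different patients with the same full name.
scheduled = PatientRecord("John A. Smith", date(1950, 3, 2), "MRN-0012345")
on_stretcher = PatientRecord("John A. Smith", date(1962, 11, 9), "MRN-0098765")
print(identity_confirmed(scheduled, on_stretcher))  # False: same name, different patient
```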

Another patient communication problem that increases the risk for wrong-site surgery is patients marking themselves. Marking the skin on the side of the proposed surgery with a pen is now common practice by the surgical team and part of the Universal Protocol. However, some patients have placed an X on the site not to be operated on, and the surgical team has then confused this patient mark with its own, in which an X specifies the side to be operated on. Patients are often not told what to expect and will seek outside information. For example, a neurosurgeon discussing medical mistakes on a popular daytime talk show stated incorrectly that patients should mark themselves with an X on the side that should not be operated on.42 This misinformation reached millions of viewers and directly contradicted the marking recommendations of the Joint Commission on Accreditation of Healthcare Organizations (endorsed by the American College of Surgeons, American Society of Anesthesiologists, and Association of Operating Room Nurses). Patients who watched this show and took the physician’s advice are now at higher-than-average risk for a wrong-sided surgical error.

Availability and Accuracy of Test Results (Factors That Undermine Awareness of Conditions That Increase the Risk for Complications and Need to Be Managed)

Radiologic imaging studies can serve as independent markers of surgical pathology and anatomy. However, films and/or reports are not always available. Films may be lost or misplaced, or they may be unavailable because the studies were performed at another facility. New digital technology has created electronic imaging systems that virtually eliminate lost studies. However, space constraints have led many hospitals to remove old view boxes to make room for digital radiologic monitors, so when patients bring films from an outside hospital, the studies cannot be used effectively. Even when available, x-rays and diagnostic studies are not labeled with 100% reliability. Imaging studies have been mislabeled and/or oriented backward, leading to wrong-sided surgery.43

Task Factors Associated with Wrong-Site Surgery

Task Design and Clarity of Structure (Consider This to Be an Issue When Work Is Being Performed in a Manner That Is Inefficient and Not Well Thought Out)

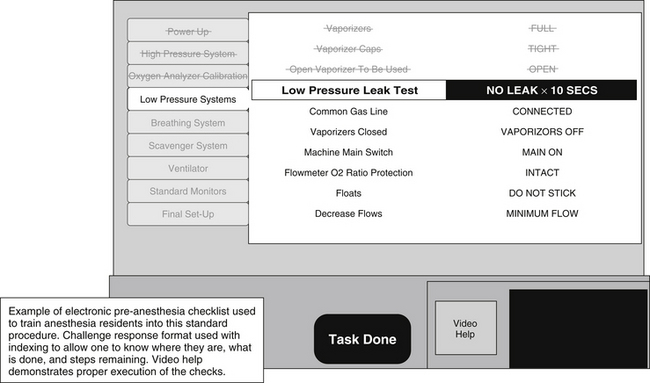

An example of a mature use of checklists exists in anesthesiology. An anesthesia machine (and other critical equipment) must be present and functional before the induction of anesthesia and initiation of paralysis, so that airway, breathing, and circulatory support can be provided within seconds to avoid hypoxia and subsequent cardiovascular complications. Until 1990, equipment failures were a significant cause of patient injury in anesthesia, even though anesthesia machines and equipment had been standardized and were used on thousands of patients in a given facility.44 Around that time, a preanesthesia checklist was established to structure the verification of mission-critical components required to provide the anesthetic state and to verify that these components functioned nominally.45 This checklist includes over 40 items, with redundant checks of critical components. It has been introduced as a standard operating procedure for the discipline of anesthesia and is now mandated by the U.S. Food and Drug Administration46 (Fig. 1-3).

Availability and Use of Protocols (If Standard Protocols Exist, Are They Well Accepted and Are They Being Used Consistently?)

The first attempts to establish standardized protocols for patient safety began with JCAHO.35 The JCAHO Sentinel Event system began monitoring major quality issues in the late 1990s, at about the same time the original American Academy of Orthopaedic Surgeons (AAOS) “Sign Your Site” program was launched. A sentinel event was defined as “an unexpected occurrence involving death or serious physical or psychological injury, or the risk thereof.”35 In addition to the reporting aspect of the program, a sentinel event triggers a quality review that requires a root cause analysis to determine the factors contributing to the event.

The Universal Protocol was a logical extension of the Sentinel Events quality improvement program. Wrong-site surgery is considered a sentinel event, and because of the mandatory reporting of sentinel events, some of the best data on the incidence and anatomic location of wrong-site surgery come from JCAHO. Before implementation of the Universal Protocol, JCAHO analyzed 278 reports of wrong-site surgery in the Sentinel Events database up to 2003.47 This review showed that the wrong procedure had been performed in 10% of cases, surgery had been performed on the wrong patient in 12%, and a further 19% of the reports described miscellaneous other errors. A protocol addressing this issue therefore needed provisions to prevent wrong-patient and wrong-procedure surgery as well as wrong-site surgery.

In May 2003, JCAHO convened a “Wrong Site Surgery Summit” to explore possible quality initiatives in this area. The three most effective measures identified were patient identification, surgical site marking, and calling a “time out” before skin incision to verify items such as the initial patient identification, patient allergies, completion of preoperative interventions such as intravenous antibiotics, the procedure to be performed, available medical records, imaging studies, and equipment. When correlated with Sentinel Event data, only 12% of wrong-site surgeries were found to have occurred in institutions with two of the three protocols applied.48 More importantly, no incidents of wrong-sided surgery were detected in institutions with all three measures in place. These three key processes therefore became the Core Elements of the Universal Protocol, which has been a mandatory quality screen in all JCAHO-accredited hospitals since July 1, 2004.48

The Universal Protocol for preventing wrong-patient, wrong-site errors is based on checklist principles, but it is not yet a validated comprehensive checklist that traps errors the way aviation checklists do. This is largely due to inconsistent execution of the checklist in a challenge-response format that is identical in procedure and practice across all ORs in a single hospital.49,50 The protocol is a first step, but the barriers to effective implementation are extensive at present and hinder improved safety.51,52
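The challenge-response format can be sketched as follows: one team member reads each challenge aloud, another answers, and each answer is compared against the documented value, with the time out halting at the first mismatch. The item wording, the sample patient data, and the simplification of comparing spoken answers to documented values as strings are hypothetical; this is not the text of the Universal Protocol.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class ChecklistItem:
    challenge: str          # read aloud by one team member
    expected_response: str  # value documented in the patient's record


def run_time_out(
    items: List[ChecklistItem], respond: Callable[[str], Optional[str]]
) -> Tuple[bool, Optional[str]]:
    """Run items in a fixed order; stop at the first answer that does not match.

    Returns (True, None) if every response matched, else (False, failed_challenge).
    """
    for item in items:
        answer = respond(item.challenge) or ""  # a missing answer counts as a mismatch
        if answer.strip().casefold() != item.expected_response.strip().casefold():
            return False, item.challenge
    return True, None


# Hypothetical items loosely based on the Universal Protocol's core elements.
TIME_OUT = [
    ChecklistItem("Patient name and date of birth?", "Jane Doe, 1961-04-12"),
    ChecklistItem("Procedure and side?", "Left frontal craniotomy"),
    ChecklistItem("Site mark visible after prep and drape?", "Yes"),
]

# The responder states the wrong side; the time out stops at that item.
answers = {
    "Patient name and date of birth?": "Jane Doe, 1961-04-12",
    "Procedure and side?": "Right frontal craniotomy",
    "Site mark visible after prep and drape?": "Yes",
}
print(run_time_out(TIME_OUT, answers.get))  # (False, 'Procedure and side?')
```

Stopping at the first mismatch, rather than collecting every discrepancy, reflects the intent that surgery does not proceed until each item is resolved; keeping the item list identical in wording and order across every OR is the consistency this kind of checklist depends on.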

Another hazard is the lack of clarity about marking surgical sites. Marking the surgical site has been endorsed to improve safety and is a major component of the Universal Protocol. However, as described previously, the mark can be a source of error when placed inappropriately by the patient or any other member of the surgical team. Specifics of what, when, and how to mark are lacking. Do you mark the incision site or the target of the surgery? What constitutes a unique and definitive mark? What shape and color should be used? What type of pen should be used? Does the ink pose any risk of infection, or is it washed off during preparation? Who should place the mark? Which procedures should be marked and which should not? Are there any patients for whom the mark is dangerous? How do you mark for a left liver lobe resection or other procedures, like brain surgery, in which there is a single organ but sidedness is still critical? I worked with over 10 surgical specialties to develop specific answers to these questions. Multiple marks and pens were tested, and not all symbols and pens were equally effective; many inks did not survive skin preparation to remain visible in the operative field. We now use specific permanent pens (Carter fine and Sharpie very fine) and a green circle to mark only “sided” procedures. We specified that the target is marked rather than the incision, that the mark must be made by the surgeon, and that the mark must be placed so that it is visible during the preincision check after positioning, preparation, and draping have been completed. For example, a cystoscopic procedure to inject the right ureteral orifice to treat reflux is now marked on the right thigh, so that the green circle is a cue to all members of the surgical team and can be seen even after the patient is prepared and draped. Again, the mark specifies the target, not the incision or body entry point.
In addition, every procedure in our booking system has been labeled “mark required” or “mark not required,” because this was not always clear. Even with this level of specificity, we found marking to be erroneous and inconsistent during our initial implementation. Marking the skin for spine surgery to indicate the level may increase the risk of wrong-site errors.53 A superior method for verifying the correct spinal level is to obtain an intraoperative radiologic study with a radiopaque marker. We expect that many revisions to this type of safety measure will be needed before the marking procedure is robust and truly adds safety value. Cross-checking procedures in aviation were developed and matured over decades to achieve the reliability and consistency now observed.54
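The local marking rules described above (a booking label on every procedure, a green circle placed by the surgeon on the target, visible after prep and drape) can be expressed as a validation check. The data structures and field names below are hypothetical, and the rules encode only what the paragraph states for this institution; they are not a general standard.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Booking:
    procedure: str
    mark_required: bool  # each booked procedure is labeled "mark required" or "mark not required"


@dataclass(frozen=True)
class SiteMark:
    placed_by: str             # role of the person who placed the mark, e.g. "surgeon"
    symbol: str                # e.g. "green circle"
    marks_target: bool         # True if the target, not the incision, is marked
    visible_after_drape: bool  # visible at the preincision check


def marking_problems(booking: Booking, mark: Optional[SiteMark]) -> List[str]:
    """Return deviations from the marking rules; an empty list means compliant."""
    if not booking.mark_required:
        # Only "sided" procedures are marked.
        return [] if mark is None else ["mark placed on a procedure labeled 'mark not required'"]
    if mark is None:
        return ["required mark is missing"]
    problems = []
    if mark.placed_by != "surgeon":
        problems.append("mark not placed by the surgeon")
    if mark.symbol != "green circle":
        problems.append("mark is not a green circle")
    if not mark.marks_target:
        problems.append("mark indicates the incision, not the target")
    if not mark.visible_after_drape:
        problems.append("mark not visible after positioning, prep, and drape")
    return problems


# A nurse-placed mark that disappears under the drape (two violations).
booking = Booking("Cystoscopic injection, right ureteral orifice", mark_required=True)
nurse_mark = SiteMark("nurse", "green circle", marks_target=True, visible_after_drape=False)
for problem in marking_problems(booking, nurse_mark):
    print(problem)
```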

Statistics for the first two quarters after implementation of the Universal Protocol were encouraging.55 Reports of wrong-site surgery appeared to have declined below the rate of approximately 70 cases per year seen in the previous 2 years. However, once a full year of statistics had accumulated, reported incidents of wrong-site surgery were found to have actually increased, to about 88 in 2005.12 Overall, wrong-site surgery had climbed to second place in frequency among sentinel events. Whether these data represent a true increase in the frequency of wrong-site surgery or simply reflect better awareness and reporting is unclear at this time.
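The size of the reported rise can be checked with simple arithmetic. The baseline of about 70 cases per year is approximate, and both figures are counts of reported sentinel events rather than true incidence, so the result describes reporting as much as occurrence.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, expressed as a percentage."""
    return (after - before) / before * 100


# Approximate reported wrong-site surgery counts: prior annual rate vs. 2005.
print(f"{percent_change(70, 88):.0f}% increase in reported cases")  # 26% increase in reported cases
```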

Currently, patient safety efforts are moving toward a holistic surgical checklist that covers all aspects of a patient’s hospital visit, not only the limited time out before surgery.56 A number of studies have evaluated the use of checklists in medicine and their effect on behavior modification.56 To that end, the WHO surgical checklist has been developed.57 It integrates the features of the Universal Protocol and adds preprocedural and postprocedural checkpoints. Results from the implementation of the WHO checklist are encouraging. These initial efforts have been extended to checklists, such as the SURPASS checklist,58 that cover the whole surgical pathway from admission to discharge. Overall, although aviation-based team training has been shown to elicit sustainable initial responses, its effects may take years to become part of the surgical culture.59

Practitioner Factors Associated with Wrong-Site Surgery

Knowledge, Skills, and Rules (Individual Deviation from Standard of Care Due to Lack of Knowledge, Poor Skills, or a Failure to Use Rules Associated with Best Practice)

Knowledge deficits are often due to over-reliance on memory for rarely used information. Measures that make reference knowledge available when it is needed would be helpful. Unfortunately, references at the point of care on the day of surgery are neither standard nor reliable. Three descriptions of the surgery often exist. The operative consent lists the planned surgery in lay language that patients should be able to understand. The surgical preoperative note may provide a technical description of the planned surgery but is often incomplete, omitting such information as the specific reason for surgery, sidedness, target, approach, position, need for implants, and/or special equipment. The booking system often uses a third, administrative nomenclature linked to billing codes. The use of three different references for the same surgical procedure creates ambiguity. A “right L3–L4 facetectomy in the prone position” may appear on the consent form as “right third lumbar vertebrae joint surgery” and in the booking system as the CPT code “LUMBAR FACETECTOMY 025-36047.”60
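One way to surface this ambiguity is to extract the same structured fields (side, level, position) from each of the three descriptions and flag any field that is missing or stated inconsistently. The field names and extracted values below are hypothetical: the consent's lay phrase is assumed to yield only the level "L3", and the booking entry, being a billing description, is assumed to state no side, level, or position. Extracting the fields reliably is the hard part and is not shown.

```python
from typing import Dict, List, Optional

# Hypothetical structured fields extracted from each description; None = not stated.
SOURCES: Dict[str, Dict[str, Optional[str]]] = {
    "consent": {"side": "right", "level": "L3", "position": None},
    "preop_note": {"side": "right", "level": "L3-L4", "position": "prone"},
    "booking": {"side": None, "level": None, "position": None},  # billing text only
}


def reconcile(sources: Dict[str, Dict[str, Optional[str]]]) -> List[str]:
    """Report each field that is stated inconsistently or missing from some source."""
    issues = []
    fields = sorted({field for record in sources.values() for field in record})
    for field in fields:
        stated = {name: record.get(field) for name, record in sources.items()}
        values = {v for v in stated.values() if v is not None}
        missing = sorted(name for name, v in stated.items() if v is None)
        if len(values) > 1:
            issues.append(f"{field}: conflicting values {sorted(values)}")
        if missing:
            issues.append(f"{field}: not stated in {', '.join(missing)}")
    return issues


for issue in reconcile(SOURCES):
    print(issue)
```

Every issue printed here is one that, in the text's account, nurses and anesthesiologists in presurgical areas are poorly placed to resolve; the sketch only makes the discrepancies visible so that the surgeon can reconcile them.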

Subtle knowledge deficits are more likely to reach a patient and cause harm when individuals are charged to do work that is at the limits of their competency. The culture of medicine does not encourage knowledge calibration, the term used to describe how well individuals know what they know and know what they do not know.61 At our institution, when preoperative nurses were assigned the role of marking patients to identify sidedness, they routinely marked the wrong site or marked in a manner such that the mark was not visible after the position, prep, and drape. These nurses accepted this assigned role because our medical culture encourages guessing and assertiveness. On further review, we have found that only the surgeon has the knowledge required to specify the surgical plan in detail and to mark patients correctly. Other members of the surgical team often have subtle knowledge deficits regarding surgical anatomy, terminology, and technical requirements such that they are prone to err in marking or positioning patients. Similarly, nurses and anesthesiologists in the presurgical areas are not able to verify or reconcile multiple differing sources of information as to the surgical plan. Instead, they often propagate errors and/or enter new misinformation into scheduling systems and patient records.

Attention (Factors That Undermine Attention)

Task execution is degraded when attention is pulled away from the work being performed. Distraction and noise are significant problems in the operating theater and can dramatically affect performance and vigilance. Because OR wall and floor surfaces are designed to be hard and easily cleaned, they reflect rather than absorb sound, and noise levels in the OR are similar to those on a busy highway.62,63 The preincision interval is a very active time during which the patient is anesthetized, positioned, and prepped. These parallel activities are competing priorities that conflict with a coordinated effort by the entire surgical team to verify surgical intent.

Strategy (Given Many Alternatives, Was the Strategy Optimized to Minimize Risks through Preventive Measures and through Recovery Measures That Use Contingency Planning and Anticipatory Behaviors?)

Motivation/Attitude (Motivational Failures and Poor Attitudes Can Undermine Individual Performance—the Psychology of Motivation Is Complex)

Physical/Mental Health (Provider Performance Deviations from Standard “Competencies” Can Be Due to Physical or Mental Illness)

Industries that recognize that the human component has its own requirements for optimal human–machine system performance promote regular “fit-for-duty” examinations.64 In aviation, job screening for air traffic controllers includes a color-blindness test because many of their displays encode critical information in color.65 Some specific provider health conditions can predispose to wrong-site surgery. Surgeons and other members of the perioperative team with dyslexia and related neuropsychiatric deficits have particular difficulty with sidedness and left–right orientation.

Team Factors Associated with Wrong-Site Surgery

A complex work domain overwhelms the cognitive abilities of any one individual and prevents that individual from mastering the entire field of practice. A common strategy for managing the excessive demands that complex systems (such as human physiology and pathophysiology) place on any individual is to subspecialize. Breaking a big problem into smaller parts that a group of individuals can manage is rational. However, “fixing” the problem of individual cognitive overload creates a new class of problems: those due to failures of team communication and coordination. Many human factors experts consider team failures to be the most common factor contributing to error in complex sociotechnical work systems.66 Crew resource management and team training in aviation are considered to have played a major role in improving aviation safety.67 These methods are only now being applied in medicine.68

Verbal/Written Communication (Any Communication Mode That When It Fails Leads to a Degradation in Team Performance)

Verbal communication fails because of noise (the message is simply not heard) or failed comprehension (a mismatch between what was intended and what was understood). Noise should be minimized to support verbal communication in the OR. Comprehension problems have many mechanisms. Human-to-human communication requires “grounding,” the process by which the sender of a message discovers the receiver’s frame of reference so that both parties frame the exchange in the same way. Grounding represents a significant part of effective communication. Agreeing on a common language and structuring communication go a long way toward increasing the accuracy and speed with which mission-critical information is communicated.17,18,69 Although isolated examples of structured communication among members of the operative team exist, such communication is usually confined to individuals knowledgeable in safety science and in the use of structured communication in the military and in aviation.

Supervision/Seeking Help (Any Member of the Team Who Fails to Mobilize Help When Getting into a Work Overload Situation, or a Team Member in a Supervisory Role Failing to Provide Adequate Oversight, Especially in Settings in Which There Are Learners and/or Transient Rotating Team Members)

True team performance is realized only when a group of individuals share a common goal, divide work tasks to create role delineation and role clarity within the team, and know each other’s roles well enough to cross-check mission-critical activities.70 On medical teams, data gathering and treatment implementation tend to be nursing roles, whereas diagnostic decision making and treatment selection tend to be physician roles.71 A myriad of supporting clinicians and nonclinicians are vital to medical teams. Nurses, nurse practitioners, medical students, physicians, and others must be able to detect problem states or deviations from the “expected course” and activate control measures. When a practitioner works beyond his or her competencies or is still on the learning curve for his or her role on the team, failure to obtain or provide supervision comes into play. For wrong-site surgery, this issue arises when one surgeon performs the preoperative consultation and operative planning and another starts the surgery with incomplete knowledge. For example, a resident or fellow may fail to call the attending physician to clarify the operative plan.

Team Structure and Leadership (Teams That Do Not Have Structure, Role Delineation, and Clarity, and Methods for Flattening Hierarchy While Resolving Conflict Will Have Suboptimal Team Performance)

Teams will inevitably face ambiguous situations that require immediate action. Authority gradients inhibit junior members of the team from questioning the leader’s decision making and action planning (a nurse might hesitate to tell a senior surgeon that he or she is violating a safety procedure, and/or the surgeon might disregard the nurse).70 Methods for flattening hierarchy lead to more robust team situational awareness and support cross-checking behavior. At the same time, teams need efficient ways of resolving conflict, especially under emergency conditions. Some surgeons view the Universal Protocol as a ridiculous requirement forced on them by regulatory bodies responding to liability pressure. This attitude can create a leadership void regarding the team behaviors that would otherwise help trap errors that predispose to wrong-site surgery.

Staffing Levels, Skills Mix, and Workload (Managers Facing Financial Pressures, a Nursing Shortage, and Increasing Patient Acuity Can Choose to Institute Hiring Freezes and Reduce Staffing Ratios to Decrease the Costs Associated with Care)

Although institutions and providers with high surgical case volumes have been noted to have the best surgical outcomes, medical mishaps occur even in these institutions. Providing exceptional care to a few patients is easier than providing reliable care to everyone.72 Indeed, excessive production pressure and patient volumes are associated with safety violations, as staff cut corners when productivity goals are unrealistic. Over two thirds of wrong-site surgeries occurred in ambulatory surgery settings, where patient acuity is lowest but productivity pressures are high.73 Financial constraints have forced more ORs to be staffed by temporary traveling nurses, shortened nursing orientation, and increased the pressure on surgeons to maximize their utilization of OR time. Unfortunately, such aggressive efforts to use all of the OR’s capacity conflict with the need for reserve capacity to manage the inherent uncertainty and variability of medical disease and surgical care. As a result, emergencies can easily overwhelm care systems that lack reserve resources. Providers calling in sick during flu season and/or a flurry of surgical emergencies can create dangerous conditions for elective surgery because resources that might otherwise be available must be redirected.

Availability and Maintenance of Equipment (Technology and Tools Vary in Their Safety Features and Usability: Equipment Must Be Maintained or It Can Become a Liability)

For preventing wrong-site surgery, we have found that the specific marking pen we use must be stocked and available throughout the hospital so that surgeons can perform the safety practices we require. Surgeons unable to find a green marker will use other pens, and the resulting variation in practice degrades the value of the safety measure. Other aspects of our wrong-site surgery safeguards have proved difficult to maintain. A computerized scheduling system had triggers to cue the operative team about the marking protocol and special equipment needs. When a new procedure was added to the scheduling system, the programmers overlooked the “needs to be marked” trigger, and for a period of time these patients were not marked. The operative team had been relying on technology designed to support their work, but that technology was not maintained. Even the best team of practitioners can perform better when provided with state-of-the-art working conditions. For example, patient identification technology that uses bar coding and radiofrequency identification tags could virtually eliminate wrong-patient errors.74 Although this technology is currently available, few organizations have been able to afford it as a means of preventing wrong-site errors due to patient misidentification.

Administrative and Managerial Support (In Complex Work Settings, Domain Experts That Perform the Work Need to Be Supported by Personnel Who Are Charged with Managing Resources, Scheduling, Transcription, Billing, etc.)

Clinical information systems (e.g., an OR scheduling system) are not reliable or robust at confirming operative intent early in the process of planning for surgery.51 Busy surgical clinics often lack efficient and reliable mechanisms for giving a scheduling secretary the information needed or for verifying that booking information is accurate. Secretaries may be working from an illegible form or simply from a verbal description of the planned surgery. Because these support personnel may not understand the terminology, errors are common. In addition, busy surgeons may forget that other information, such as the required operative position, the need for surgical implants, or the requirement for special equipment, is not obvious to others, and thus fail to state it explicitly. Moreover, this work and the expertise it requires are often undervalued, which can lead organizations to hire inexperienced secretaries and accept high turnover among support staff.

Organizational Factors Associated with Wrong-Site Surgery

Organizations must make safety a priority. When production pressure and economic goals conflict with safety, organizations must have structures and methods that keep safety the priority.75 Independent offices of patient safety, and patient safety officers with the authority to stop operations when necessary, are examples of organizational structures designed to maintain safety in the face of economic pressure.

Financial Resources (Safety Is Not Free: The Costs Associated with Establishing Safe Practices and Acquiring Safety Technology May Be Prohibitive)

Goals and Policy Standards (Practice of Front-Line Workers Is Shaped by Clear Goals and Consistent Policies That Are Clinically Relevant)

Safety Culture and Priorities (A Safety Culture of an Organization May Be Pathologic, Reactive, Proactive, or Generative)

Most hospitals today have a reactive safety culture.27 As a result, the institutions that have had the most publicized wrong-site surgeries have done the most to establish countermeasures against future wrong-site surgery. As of 2005, proactive investment in safeguards was beyond the capability or commitment of most healthcare organizations.

Sociopolitical Factors Associated with Wrong-Site Surgery

Economic, Regulatory Issues, Health Policy, and Politics

We practice medicine within large national healthcare systems. Third-party payers currently want safety to be a priority. However, organizations that invest in safety technologies to avert error do not typically get a return on that investment. In fact, hospital investment to prevent iatrogenic injury directly benefits third-party payers, not the hospital. Similarly, our legal system does not serve as a strong incentive for safety because jury verdicts do not accurately identify and punish negligent care. Rather, patients with negative outcomes that were not preventable still win jury verdicts, while patients who truly suffered a preventable adverse event commonly fail to seek legally allowed compensation.76

Summary of Contributory Factor Analysis

This example of wrong-site surgery was used to illustrate the multiple contributory factors that allow an error to propagate and evolve into an injury-causing accident. Even with an error as blatant as wrong-sided brain surgery, one can identify vulnerabilities in multiple layers of our complex medical care systems (Fig. 1-4). Although hindsight bias tempts one to blame the individuals involved as the sole cause, it is clear that these individuals are part of a complex system with multiple latent conditions (hazards and “accidents waiting to happen”). High-reliability organizations are notable for their dedication to systematically identifying all hazards and then countering each one. These organizations understand that both failure and the maximization of safety are multidimensional.

Perspective

The plethora of factors associated with breaches in patient safety underlines the complexity of this phenomenon and the protean solutions required to address it. The need for such solutions is now more imperative than ever, especially since the Centers for Medicare and Medicaid Services (CMS) adopted a nonreimbursement policy for certain “never events.”77 This initiative is part of a broader trend toward motivating hospitals to improve patient safety by implementing standardized protocols. These newly defined “never events” limit hospitals’ ability to bill Medicare for adverse events and complications. The nonreimbursable conditions apply only to events deemed “reasonably preventable” through the use of evidence-based guidelines. It is therefore imperative both to address this problem effectively and to verify the solutions through double-blind, placebo-controlled randomized trials.

Bernstein M., Hebert P.C., Etchells E. Patient safety in neurosurgery: detection of errors, prevention of errors, and disclosure of errors. Neurosurg Q. 2003;13(2):125-137.

Blike G., Biddle C. Preanesthesia detection of equipment faults by anesthesia providers at an academic hospital: comparison of standard practice and a new electronic checklist. AANA J. 2000;68:497-505.

Brennan T.A., Leape L.L., Laird N.M., et al. Incidence of adverse events and negligence in hospitalized patients—results of the Harvard Medical Practice Study I. N Engl J Med. 1991;324(6):370-376.

Cook R.I., Woods D.D., Miller C. A tale of two stories: contrasting views of patient safety. Chicago: National Patient Safety Foundation; 1998. Available at http://www.npsf.org/exec/front.html

de Vries E.N., Hollmann M.W., Smorenburg S.M., et al. Development and validation of the SURgical PAtient Safety System (SURPASS) checklist. Qual Saf Health Care. 2009;18(2):121-126.

Gawande A.A., Thomas E.J., Zinner M.J. The incidence and nature of surgical adverse events in Colorado and Utah in 1992. Surgery. 1999;126(1):66-75.

Helmreich R.L., Foushee H.C. Why crew resource management? The history and status of human factors training programs in aviation. In: Wiener E.L., Kanki B.G., Helmreich R.L., eds. Cockpit Resource Management. New York: Academic Press; 1993.

Jhawar B.S., Mitsis D., Duggal N. Wrong-sided and wrong-level neurosurgery: a national survey. J Neurosurg Spine. 2007;7(5):467-472.

Joint Commission on Accreditation of Healthcare Organizations. Lessons learned: wrong site surgery, sentinel event, No. 6, 2001. Available at http://www.jcaho.org/edu_pub/sealert/sea6.html

Joint Commission on Accreditation of Healthcare Organizations. Sentinel Event Alert. Oakbrook, IL: Joint Commission on Accreditation of Healthcare Organizations; 2003.

Joint Commission on Accreditation of Healthcare Organizations. Sentinel Event Alert: Follow-up Review of Wrong Site Surgery. No. 24, 2001.

Joint Commission on Accreditation of Healthcare Organizations. Sentinel Event Statistics. 2003. Available at: http://www.jcaho.org/accredited+organizations/hospitals/sentinel+events/sentinel+events+statistics.htm.

Joint Commission on Accreditation of Healthcare Organizations. Universal Protocol Toolkit. Oakbrook, IL: Joint Commission on Accreditation of Healthcare Organizations; 2004.

Kohn L.T., Corrigan J.M., Donaldson M.S. To Err Is Human: Building a Safer Health System. Washington, DC: National Academy Press, 2000.

Leape L.L., Berwick D.M., Bates D.W. What practices will most improve safety? JAMA. 2002;288:501-507.

Leape L.L., Brennan T.A., Laird N., et al. The nature of adverse events in hospitalized patients. Results of the Harvard Medical Practice Study II. N Engl J Med. 1991;324:377-384.

Lembitz A., Clarke T.J. Clarifying “never events” and introducing “always events.” Patient Saf Surg. 2009;3(1):26.

Makary M.A., Mukherjee A., Sexton J.B., et al. Operating room briefings and wrong-site surgery. J Am Coll Surg. 2007;204(2):236-243.

National Patient Safety Foundation. The NPSF is an independent, nonprofit research and education organization. Available at http://www.npsf.org/html/research/rfp.html

Sax H.C., Browne P., Mayewski R.J., et al. Can aviation-based team training elicit sustainable behavioral change? Arch Surg. 2009;144(12):1133-1137.

Smith C.M. Origin and uses of primum non nocere—above all, do no harm!. J Clin Pharmacol. 2005;45:371-377.

Vincent C. Patient safety: understanding and responding to adverse events. N Engl J Med. 2003;348(11):1051-1056.

Wilson I., Walker I. The WHO Surgical Safety Checklist: the evidence. J Perioper Pract. 2009;19(10):362-364.

Wong D.A. The Universal Protocol: A one-year update. AAOS Bulletin. American Academy of Orthopaedic Surgeons. 2005;53:20.

Wong D.A., Watters W.C. 3rd. To err is human: quality and safety issues in spine care. Spine (Phila Pa 1976). 2007;32(suppl 11):S2-S8.

1. Smith C.M. Origin and uses of primum non nocere—above all, do no harm!. J Clin Pharmacol. 2005;45:371-377.

2. Cushing H. The establishment of cerebral hernia as a decompressive measure for inaccessible brain tumors; with the description of intra-muscular methods of making the bone defect in temporal and occipital regions. Surg Gynecol Obstet. 1905;1:297-314.

3. Pinkus R.L. Mistakes as a social construct: an historical approach. Kennedy Inst Ethics J. 2001;11:117-133.

4. Bliss M. William Osler: A Life in Medicine. Toronto: University of Toronto Press; 1999.

5. Report of the Committee Appointed by the Royal Medical and Surgical Society to Inquire into the Uses and Physiological, Therapeutical and Toxical Effects of Chloroform. London: JR Adlarto; 1864.

6. Brennan T.A., Leape L.L., Laird N.M., et al. Incidence of adverse events and negligence in hospitalized patients—results of the Harvard Medical Practice Study I. N Engl J Med. 1991;324(6):370-376.

7. Kohn L.T., Corrigan J.M., Donaldson M.S. To Err is Human: Building a Safer Health System. Washington, DC: National Academy Press, 2000.

8. Gawande A.A., Thomas E.J., Zinner M.J. The incidence and nature of surgical adverse events in Colorado and Utah in 1992. Surgery. 1999;126(1):66-75.

9. Leape L.L., Brennan T.A., Laird N., et al. The nature of adverse events in hospitalized patients. Results of the Harvard Medical Practice Study II. N Engl J Med. 1991;324:377-384.

10. Vincent C., Neale G., Woloshynowych M. Adverse events in British hospitals: preliminary retrospective record review. BMJ. 2001;322:517-519.

11. Wilson R.M., Harrison B.T., Gibberd R.W., Hamilton J.D. An analysis of the causes of adverse events from the Quality of Australian Health Care Study. Med J Aust. 1999;170:411-415.

12. Wong D.A., Watters W.C. 3rd. To err is human: quality and safety issues in spine care. Spine (Phila Pa 1976). 2007;32(suppl 11):S2-S8.

13. Pierluissi E., Fischer M.A., Campbell A.R., Landefeld C.S. Discussion of medical errors in morbidity and mortality conferences. JAMA. 2003;290(21):2838-2842.

14. Woods D.D., Cook R.I. Perspectives on human error: hindsight bias and local rationality. In: Durso F.T., Nickerson R.S. Handbook of Applied Cognition. New York: John Wiley & Sons, 1999.

15. Woods D.D., Cook R.I. Nine steps to move forward from error. Cognition Technol Work. 2002;4:137-144.

16. Gawande A. Complications: A Surgeon’s Notes on an Imperfect Science. New York: Picador; 2002.

17. Wickens C.D., Gordon S.E., Liu Y. An Introduction to Human Factors Engineering. Reading, MA: Addison-Wesley; 1998.

18. Sanders M.S., McCormick E.J. Human Factors in Engineering and Design, 7th ed. New York: McGraw-Hill; 1993.

19. Bogner M.S. Human Error in Medicine. Mahwah, NJ: Lawrence Erlbaum; 1994.

20. de Leval M.R., Carthey J., Wright D.J., et al.; All United Kingdom Pediatric Cardiac Centers. Human factors and cardiac surgery: a multicenter study. J Thorac Cardiovasc Surg. 2000;119:661-672.

21. Donchin Y., Gopher D., Olin M., et al. A look into the nature and causes of human errors in the intensive care unit. Crit Care Med. 1995;23:298.

22. Keats A.S. Anesthesia mortality in perspective. Anesth Analg. 1990;71:113-119.

23. Cooper J.B., Newbower R.S., Long C.D., McPeek B. Preventable anesthetic mishaps: a study of human factors. Anesthesiology. 1978;49:399-406.

24. Leape L.L., Berwick D.M., Bates D.W. What practices will most improve safety? JAMA. 2002;288:501-507.

25. National Patient Safety Foundation. The NPSF is an independent, nonprofit research and education organization, Available at http://www.npsf.org/html/research/rfp.html

26. Reason J. Human Error. New York: Cambridge University Press; 1990.

27. Reason J. Managing the Risks of Organizational Accidents. Aldershot, Hants, England; Brookfield, VT: Ashgate; 1997.

28. Vincent C., Taylor-Adams S., Chapman E.J., et al. How to investigate and analyze clinical incidents: clinical risk unit and association of litigation and risk management protocol. BMJ. 2000;320:777-781.

29. Vincent C. Patient safety: understanding and responding to adverse events. N Engl J Med. 2003;348(11):1051-1056.

30. Bernstein M., Hebert P.C., Etchells E. Patient safety in neurosurgery: detection of errors, prevention of errors, and disclosure of errors. Neurosurg Q. 2003;13(2):125-137.

31. Brain surgery was done on the wrong side, reports say. New York Daily News. February 21, 2001.

32. Brain surgeon cited in bungled ’95 case faces a new inquiry. New York Times. February 18, 2000.

33. Altman L.K. State issues scathing report on error at Sloan-Kettering. New York Times. November 16, 1995.

34. Report details Minnesota hospital errors. Wall Street Journal. January 20, 2005.

35. Joint Commission on Accreditation of Healthcare Organizations. Sentinel Event Statistics, 2003. Available at http://www.jcaho.org/accredited+organizations/hospitals/sentinel+events/sentinel+events+statistics.htm

36. Jhawar B.S., Mitsis D., Duggal N. Wrong-sided and wrong-level neurosurgery: a national survey. J Neurosurg Spine. 2007;7(5):467-472.

37. Cook R.I., Woods D.D., Miller C. A tale of two stories: Contrasting views of patient safety. Chicago, IL: National Patient Safety Foundation; 1998. Available at http://www.npsf.org/exec/front.html

38. Schlosser E. Dartmouth-Hitchcock Medical Center Wrong Site Surgery Observational Study. Internal report. 2004.

39. Joint Commission on Accreditation of Healthcare Organizations. Lessons Learned: Wrong Site Surgery, Sentinel Event, No. 6, 2001. Available at http://www.jcaho.org/edu_pub/sealert/sea6.html

40. Harbaugh R. Developing a neurosurgical carotid endarterectomy practice. Am Assoc Neurol Surg Bull. 1995;4:2.

41. Campion P. Dartmouth-Hitchcock Medical Center report on patient misidentification risk. Unpublished report. 2004.

42. Gupta S. Patient checklist. Outrageous medical mistakes. Oprah Winfrey Show. October 2003. Available at http://www2.oprah.com/health/yourbody/health_yourbody_gupta.jhtml

43. Warnke J.P., Kose A., Schienwind F., Zierski J. Erroneous laterality marking in CT of head. A case report. Zentralbl Neurochir. 1989;50:190-192.

44. Cooper J.B., Newbower R.S., Kitz R.J. An analysis of major errors and equipment failures in anesthesia management: considerations for prevention and detection. Anesthesiology. 1984;60:34-42.

45. U.S. Food and Drug Administration. Anesthesia apparatus checkout recommendations. Federal Register. 1994;59:35373-35374.

46. Blike G., Biddle C. Preanesthesia detection of equipment faults by anesthesia providers at an academic hospital: comparison of standard practice and a new electronic checklist. AANA J. 2000;68:497-505.

47. Joint Commission on the Accreditation of Healthcare Organizations. Sentinel Event Alert. Oakbrook, IL: Joint Commission on the Accreditation of Healthcare Organizations; 2003.

48. Joint Commission on the Accreditation of Healthcare Organizations. Universal Protocol Toolkit. Oakbrook, IL: Joint Commission on Accreditation of Healthcare Organizations; 2004.

49. Johnston G., Ekert L., Pally E. Surgical site signing and “time out”: issues of compliance or complacence. J Bone Joint Surg Am. 2009;91(11):2577-2580.

50. Makary M.A., Mukherjee A., Sexton J.B., et al. Operating room briefings and wrong-site surgery. J Am Coll Surg. 2007;204(2):236-243.

51. Rogers M.L., Cook R.I., Bower R., et al. Barriers to implementing wrong site surgery guidelines: a cognitive work analysis. IEEE Trans Systems Man Cybernetics. 2007;34(6):757-763.

52. Agency for Healthcare Research and Quality. Prevention of misidentifications: strategies to avoid wrong-site surgery. Chapter 43.2. 2003. Available at http://www.ahrq.gov/clinic/ptsafety/chap43b.htm

53. Adverse Health Events in Minnesota Hospitals. First Annual Public Report 2004. Includes Hospital Events Reported, July 2003–October 2004. Available at http://www.health.state.mn.us/patientsafety/aereport0105.pdf

54. Degani A., Wiener E.L. Cockpit checklists—concepts, design, and use. Hum Factors. 1993;35:345-359.

55. Wong D.A. The universal protocol: a one year update. AAOS Bulletin. American Academy of Orthopaedic Surgeons. 2005;53:20.

56. Nilsson L., Lindberget O., Gupta A., Vegfors M. Implementing a pre-operative checklist to increase patient safety: a 1-year follow-up of personnel attitudes. Acta Anaesthesiol Scand. 2010;54:176-182.

57. Wilson I., Walker I. The WHO Surgical Safety Checklist: the evidence. J Perioper Pract. 2009;19(10):362-364.

58. de Vries E.N., Hollmann M.W., Smorenburg S.M., et al. Development and validation of the SURgical PAtient Safety System (SURPASS) checklist. Qual Saf Health Care. 2009;18(2):121-126.

59. Sax H.C., Browne P., Mayewski R.J., et al. Can aviation-based team training elicit sustainable behavioral change? Arch Surg. 2009;144(12):1133-1137.

60. Current procedural terminology CPT ICD9-CM. AMA Press. 2005. Available at https://catalog.ama-assn.org/Catalog/cpt/cpt_home.jsp

61. Cook R., Woods D.D. Chapter x. In: Bogner M.S., ed. Human Error in Medicine. Mahwah, NJ: Lawrence Erlbaum; 1994.

62. Shapiro R.A., Berland T. Noise in the operating room. N Engl J Med. 1972;287(24):1236-1238.

63. Firth-Cozens J. Why communication fails in the operating room. Qual Saf Health Care. 2004;13:327.

64. Federal Aviation Administration. Aerospace Medical Certification Division. Pilot medical certification questions and answers, AAM-300, Available at http://www.cami.jccbi.gov/AAM-300/amcdfaq.html

65. Can corrective lenses effectively improve a color vision deficiency when normal color vision is required? J Occup Environ Med. 1998;40(6):518-519.

66. Helmreich R.L., Foushee H.C. Why crew resource management? The history and status of human factors training programs in aviation. In: Wiener E.L., Kanki B.G., Helmreich R.L., eds. Cockpit Resource Management. New York: Academic Press; 1993.

67. Helmreich R.L., Wilhelm J.A. Outcomes of crew resource management training. Int J Aviat Psychol. 1991;1:287-300.

68. Howard S.K., Gaba D.M., Fish K.J., et al. Anesthesia crisis resource management training: teaching anesthesiologists to handle critical incidents. Aviat Space Environ Med. 1992;63(9):763-770.

69. Monan B. Readback hearback. Aviation Safety Reporting System Directline, No. 1, March 1991. Available at http://asrs.arc.nasa.gov/directline_issues/dl1_read.htm

70. Salas E., Dickinson D.L., Converse S.A., et al. Toward an understanding of team performance and training. In: Swezey R.W., Salas E., eds. Teams: Their Training and Performance. Norwood, NJ: Ablex; 1992.

71. Surgenor S.D., Blike G.T., Corwin H.L. Teamwork and collaboration in critical care: lessons from the cockpit [editorial]. Crit Care Med. 2003;31:992-993.

72. Berwick D.M. Errors today and errors tomorrow. N Engl J Med. 2003;348(25):2570-2572.

73. Joint Commission on Accreditation of Healthcare Organizations. Sentinel Event Alert: Follow-up Review of Wrong Site Surgery, No. 24; 2001.

74. VeriChip expands hospital infrastructure. Hackensack University Medical Center becomes the second major medical center to adopt the VeriChip system. Delray Beach, FL: Business Wire; March 14, 2005.

75. Cook R., Rasmussen J. “Going solid”: a model of system dynamics and consequences for patient safety. Qual Saf Health Care. 2005;14:130-134.

76. Studdert D.M., Mello M.M., Brennan T.A. Medical malpractice. N Engl J Med. 2004;350:283-292.

77. Lembitz A., Clarke T.J. Clarifying “never events” and introducing “always events.” Patient Saf Surg. 2009;3(1):26.