CHAPTER 4 Distal Radius Study Group: Purpose, Method, Results, and Implications for Reporting Outcomes

Distal radius fracture care has undergone substantial changes in tools and methods since the first commercial introduction of dorsal and volar fixed angle subchondral fixation plates in 1996.1 Are these changes good? Is plating “good” in general and specifically?

What is “quality care” as related to distal radius fractures? In Zen and the Art of Motorcycle Maintenance, Pirsig2 examines the concept of Quality. His argument cannot be restated within the context of this chapter; however, his premise of Quality as a unification of all values implies that the true “Quality” of distal radius fracture care can be found only in understanding the whole problem. There are participants (insurers, hospital administrators, equipment manufacturers) in the care process that measure and assign value to only a segment or segments of the “results pie.” For the purpose of this chapter, we contend that the physician is the participant with sufficiently broad and specific training to enable “asking the big question”: that is, does the addition of new technology matter to the patient, and where should the technology be delivered? We posit that “asking this big question” is the physician’s obligation, and that doing so requires ongoing comparative examination of outcome.

The above-listed hypotheses assume the reader is familiar with basic concepts of outcomes medicine and some concepts related to manufacturing. Specifically, the reader should be aware that a primary outcome can be selected from three broad categories: (1) general health, (2) condition-specific health, and (3) satisfaction.3 It also is assumed that the reader accepts the validity of structured patient questionnaires to measure outcomes within the three broad categories. As related to manufacturing, the second hypothesis examines the generalized utility of the method/technology; this is crucial when a tool requires a manipulation/skill-set. Physicians are generally familiar with the need to include patient compliance as a factor to consider when testing medication effectiveness. In physician-administered, “tool-based” medicine, it is essential to examine the ability of physicians to “comply” with a method/device requirement to achieve a desired outcome, and not to assume that success can be generalized outward from a motivated cohort (the surgeon designer’s experience). The need to prove treatment efficacy by providers can be assumed to vary directly in relationship to the potential variation in skill and treatment risk. Although large outcome differences cannot be categorically stated to occur in all of medicine, it is known that significant differences in treatment response to specific musculoskeletal diagnoses do occur throughout the United States, and so it seems likely that physician/region specific differences in outcomes already exist.4

Traditionally, the best medical experiments manipulate a single variable with the experiment’s designer separated from the introduction of the variable and the data collection process. This type of randomized clinical trial (RCT) has many qualities to recommend its use whenever possible. Clinical work makes such studies expensive, however, at a level that practically cannot be sustained as a means to reconfirm and monitor surgeon/system efficacy. Also, randomization requires patient enrollment that is predicated on the physician’s belief that selected treatments are equivalent. Often, it is impossible to find providers without treatment prejudice. Prejudiced physicians could not follow the principles of clinical equipoise (a state of genuine uncertainty on the part of the clinical investigator regarding the comparative therapeutic merits of each arm in a trial).5 Taken together, cost and the need for clinical equipoise argue for an alternative method of prospective enrollment and clinical data acquisition.

“Practical clinical trials” (PCT) is a phrase coined by Tunis and colleagues.6 By the authors’ definition, such trials (1) select relevant information/interventions to compare, (2) include a diverse population of study participants (providers), (3) include patients from a heterogeneous mix of practice settings, and (4) collect a broad range of health outcomes.

For the purpose of studying the effects of distal radius fracture treatment, these instructions are particularly useful. Using this set of instructions enables the study participants to choose the treatment that they believe, based on their skill and experience, would best meet a specific patient’s needs. Also, a “high” percentage of eligible patients can be expected to enroll because the relative risk to their health is clinically unchanged compared with not enrolling. Most important in the context of understanding the results of clinical care, such a study can still yield meaningful future treatment guidance assuming that data input is structured and standardized, and assuming that treatment selection bias is low.

Conclusions reached from observational studies have been shown to resemble those of RCTs when available treatments carry similar indications and risk.7 RCTs are valuable, but, assuming that the patients who “fall” by their choice or physician’s recommendation into specific treatment groups in the PCT are similar (equivalent propensity after analyses), the PCT is able to provide equal or better guidance regarding treatment effectiveness (real-world applicability) compared with the efficacy guidance gained from the RCT (the result in an expert world within a specified set of patients willing to be randomly assigned).8

The final point just mentioned leads to the issues of privacy and scalability. That is, the size of the observational trial matters as well as its longevity. A meaningful analogy might be as follows: Imagine that we know the number of small planes that took off and landed (without crashing) in Florida in the spring of 2007. What can this tell us about the spring of 2008 in Florida and in the United States? The obvious answer is “not much.” We need more data. Acquiring this larger volume of data on an ongoing basis would be impossible within the budget allowed for many studies. Hence, the concept of registries has evolved from processes concerned mostly with larger public health issues to registries that “are now vital to quality-improvement programs that assess the safety of new drugs and procedures, identify best clinical practice and compare healthcare systems.”9

The problem discussed by Williamson and colleagues9 concerns the clear need for a tracking mechanism across systems that while being respectful of privacy also addresses the need to “see” the patient specifically across systems and across time. In conjunction with the foregoing, the need for such a system to use an open and scalable means of data display, acquisition, and storage is obvious. That such a system must be deployed via the Internet to be affordable and universally available should go without saying.

Methods

The purpose of our choosing an observational model for the study was threefold:

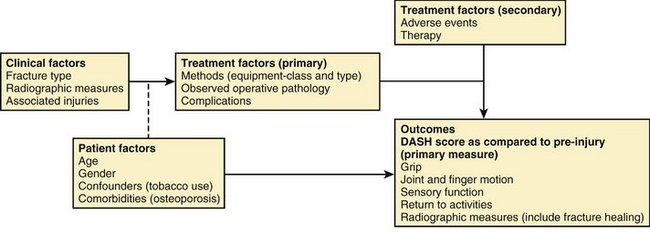

Previously reported variables were used as a guideline to create structured data sets for demographics, baseline, operative (pathology, equipment, complications), outcomes, and adverse events. The Disabilities of the Arm, Shoulder, and Hand (DASH) score was selected as the patient-reported instrument and designated as primary outcome for comparison. Fracture type as designated by the Orthopaedic Trauma Association classification system for distal radius fractures was selected as the primary means of fracture/disease classification.10 A conceptual model for treatment of distal radius fractures was designed (Fig. 4-1). The model depicts the basic treatment/measurement process and identifies the primary outcome and independent variables.

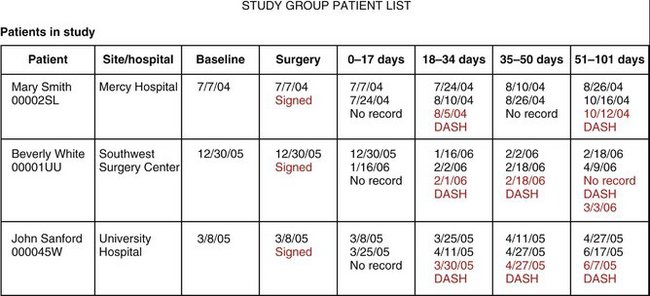

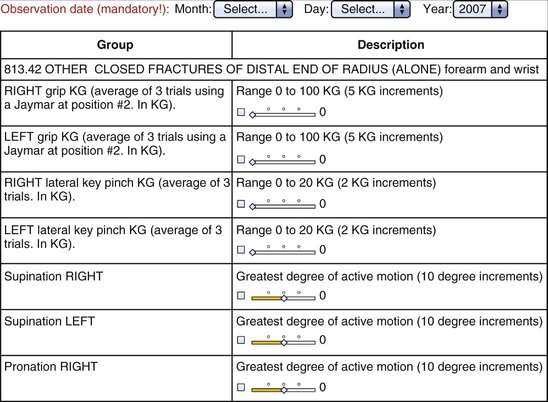

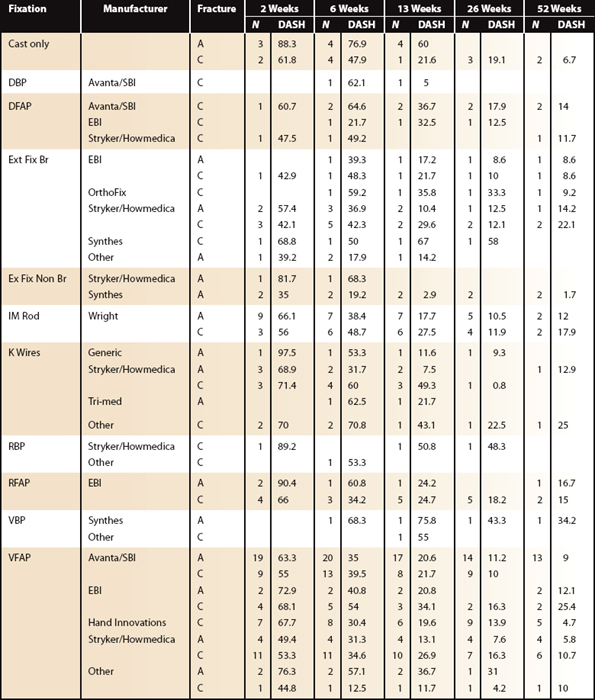

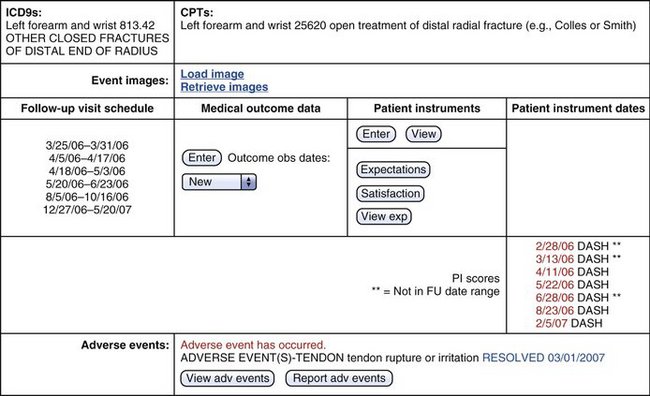

A study booklet was created for each site that summarized the data entry process and was used to familiarize the users with the structure of the “screen” that would be seen during data entry. This included a view of all patients enrolled and their follow-up schedule (divided into five postoperative intervals: 2 weeks, 6 weeks, 13 weeks, 26 weeks, and 52 weeks [±20%]) (Fig. 4-2). The type of data element to be collected was seen by the user and enforced by the system (range with specific end point using slider bars, single possible choice of data element from a list, multiple possible choice data element or elements from a list, and open text entry) (Fig. 4-3).
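The follow-up schedule described above can be sketched as a small lookup. This is a minimal illustration, not the study's software: it assumes the ±20% tolerance applies symmetrically to each nominal week, and the function names (`visit_window`, `classify_visit`) are hypothetical. The interval numbering follows the text, in which interval 2 is 6 weeks and interval 3 is 13 weeks.

```python
from typing import Optional, Tuple

# Nominal follow-up intervals from the text, in weeks after treatment.
FOLLOW_UP_WEEKS = {1: 2, 2: 6, 3: 13, 4: 26, 5: 52}

# The "±20%" stated in the text; applying it symmetrically is an assumption.
TOLERANCE = 0.20


def visit_window(interval: int) -> Tuple[float, float]:
    """Return the (earliest, latest) acceptable week for a follow-up interval."""
    nominal = FOLLOW_UP_WEEKS[interval]
    return (nominal * (1 - TOLERANCE), nominal * (1 + TOLERANCE))


def classify_visit(weeks_since_treatment: float) -> Optional[int]:
    """Return the interval whose window contains the visit, or None if no window does."""
    for interval in FOLLOW_UP_WEEKS:
        low, high = visit_window(interval)
        if low <= weeks_since_treatment <= high:
            return interval
    return None


if __name__ == "__main__":
    print(classify_visit(14))  # 3 (14 weeks falls inside the 13-week window)
    print(classify_visit(9))   # None (9 weeks falls between the 6- and 13-week windows)
```

With these tolerances the five windows do not overlap, so a visit maps to at most one interval; visits that fall between windows would need a site-level rule that the chapter does not specify.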

To enable individual treatment site data entry, an Internet-based data entry method was designed. BoundaryMedical Incorporated (www.boundarymedical.com) developed, deployed, and hosted the method as employed. No software applications or databases were installed on any remote site computers. A monitor, an Internet connection, and a browser (any) were the only requirements at the remote site. The method met Health Insurance Portability and Accountability Act (HIPAA) compliance requirements and data integrity and security standards.

Each site monitored completion of individual patient follow-up data of enrolled patients using a site-specific follow-up screen that was continuously updated and available via the Internet. In some cases, outstanding DASH scores were obtained via an Internet-based patient portal from home or elsewhere. Data were obtained for analyses using a secure download method into a specifically formatted Access database that was used to create relational comparisons, which were analyzed statistically using SAS.

Results

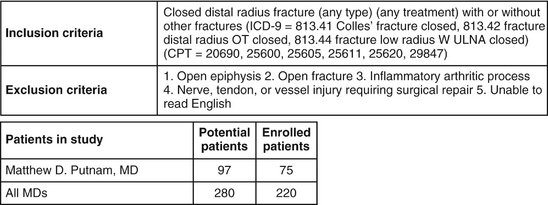

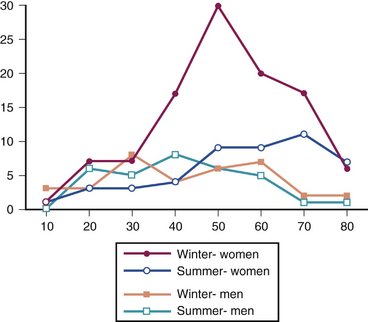

Since inception, 280 patients have been identified as meeting the enrollment criteria for study inclusion. Of these, 220 met all inclusion and exclusion criteria and chose to enroll. The primary author (M.D.P.) enrolled one third of all patients treated (Fig. 4-4). Enrollment was offered to qualified patients at the time of treatment initiation. Figure 4-5 depicts the distribution of patient ages, gender, and season at time of fracture. These and other patient factors (e.g., osteoporosis) appear similar across sites.

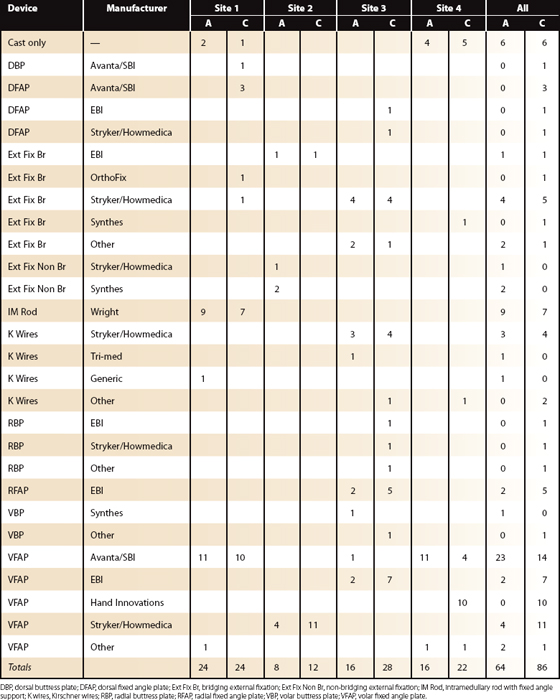

Sites were instructed to offer enrollment to all patients seen regardless of planned treatment. Treatment numbers suggest that this offer was not made. The frequency of closed cast management varied substantially among sites (Table 4-1). Because the distributions of intervention by season, gender, and age are otherwise similar per site, however, it may be true that the enrollment instruction was observed for surgical treatments at each site during the time period of study participation. Also, the enrollment and rejection rates were similar among sites, providing additional evidence that each site attempted to enroll eligible patients.

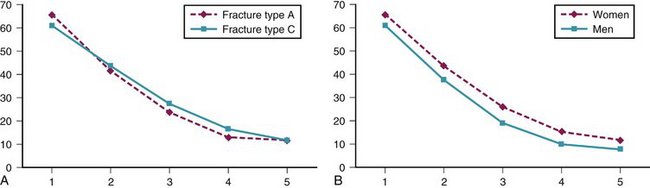

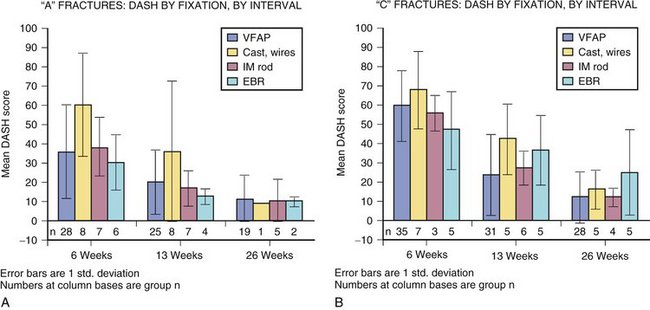

Primary outcome measure (DASH score) recovery was similar for A (extra-articular) fractures compared with C (intra-articular) fractures. Primary outcome measure (DASH score) recovery was faster for men compared with women. This trend was true at all intervals and approaches significance (Fig. 4-6).

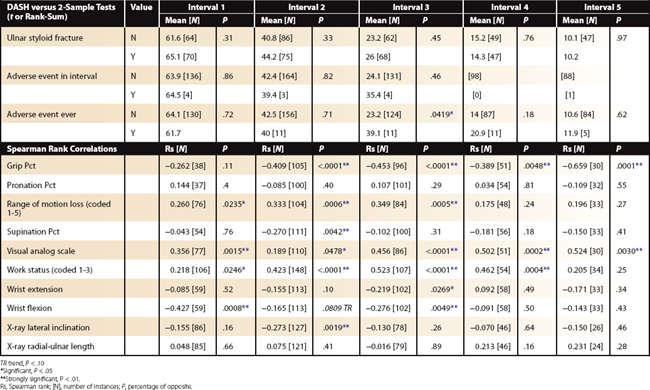

Excluding bilateral injuries, grip strength recovery (as a percentage of the unaffected side), digital motion recovery (total active motion of the fingers of the affected hand [measured on the worst finger]), visual analog scale pain scores (0 to 10), and work status (coded ordinally by ability to perform “original” work tasks) are the clinical (physician-collected) values that correlate best with DASH recovery (P < .001). Wrist motion does not correlate with DASH recovery. Forearm motion is important, but not to the same degree as grip strength recovery and digital motion preservation (Table 4-2).

Fixation differences by site and fracture type are listed in Table 4-3. Excluding patients with bilateral injuries, weak evidence suggests external fixation bridging is best early (interval 2 [6 weeks]) compared with volar fixed-angle plating (VFAP). At later (interval 3 [13 weeks] and beyond) follow-up, VFAP (no difference between manufacturers observed) is different (lower DASH) from external fixation bridging. As previously noted, cast care and primary “pin” fixation recover most slowly (Fig. 4-7).

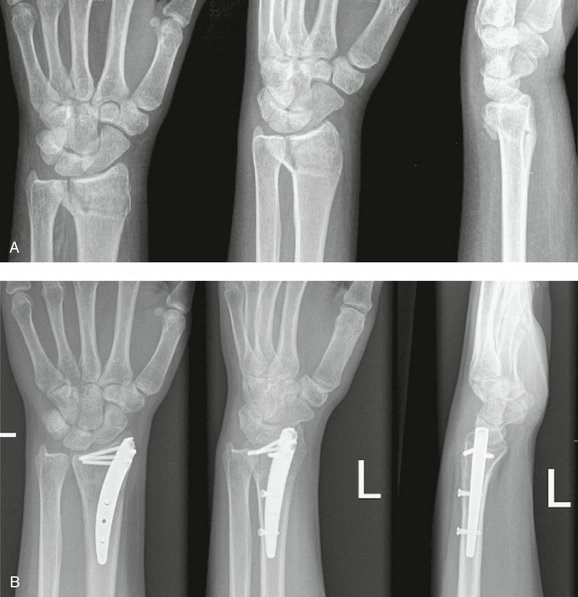

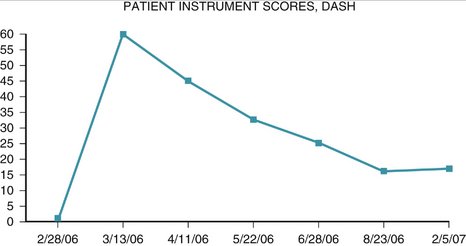

One case is used to illustrate the data capture process in this study. A patient presented with a new closed fracture. The patient was an otherwise healthy English-speaking woman with no prior injury or chronic medical concerns. No nerve or tendon injuries were present. An unstable fracture with a dorsal-ulnar corner fragment and associated laxity of the distal radioulnar joint was diagnosed. Fixation maintained reduction of this intra-articular fracture and early active-assisted range of motion was initiated. DASH score recovery was progressive and nearly complete. The patient presented nearly 10 months after fixation with dorsal tenderness over a fixation bolt (adverse event) that resolved after removal of the screw only and no change in the DASH score (Figs. 4-8 to 4-10).

Discussion

External fixation (bridging) is different (trend) and better early during recovery. We believe this observation warrants special emphasis given the number of plating products being promoted to physicians through strong advertising messages and the data supporting VFAP published by Chung and associates.11 Earlier work published by Kreder and coworkers12 found no difference between plating and external fixation for unstable fractures of the radius when the patients were randomly assigned to one of the two groups. This article cannot be compared directly with the current work in that the plating techniques chosen for open fixation did not share the same characteristics as the techniques now presented to the market and represented within Chung’s work.

Proof that fixed-angle fixation of the radius would improve fixation rigidity using an in vitro extra-articular distal radius fracture fixation model was first shown by Gesensway and colleagues13 and later compared with fixation forces needed to allow early rehabilitation vis-à-vis a low load gripping (finger motion) model by Putnam and colleagues.14 Given generally understood knowledge of joint and ligament function, it is probably impossible to argue logically against restoration of function of all joints as early as possible. To be useful in the context of a utility/disutility analysis, however, at the outset of introducing alternative fixation methods into the matrix of fracture care, the instant and widely applicable benefits should be known. A specific analysis of distal radius fractures regarding utility/disutility has not been done. Inferring from the work of Gandjour and Lauterbach,15 one issue to be understood is the expected and observed volume of “greater benefit” for all distal radius patients who receive the best care compared with alternative (and, for argument’s sake, less expensive) care. In this example, “dollars available for health care” can be assumed to equal energy and are subject to the laws of thermodynamics, wherein they can neither be created nor be destroyed. To achieve utility, any increase in energy spent on radius fracture care should ideally result in greater societal health compared with spending these same dollars elsewhere.

A second goal of this study was to assess if:

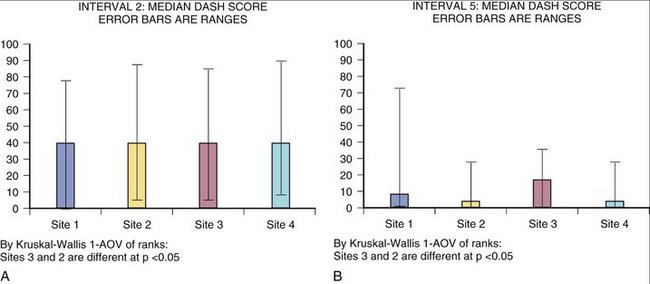

Separating DASH averages by site was reassuring and surprising. As seen in Figure 4-11, the sites are more similar than different when the DASH alone is examined. One site does trend to recover more slowly, however. Ultimately the true test of such a display would be the ability to detect a best (or worst) process. Ideally, such a method could confirm a competent result (single event) and competent method (many events by many physicians). The concept of measuring and displaying surgical competence is gaining acceptance as a goal. The CUSUM (CUmulative SUM) method of presenting surgical success/competence data may prove reliable and acceptable to the users.16,17
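A basic CUSUM chart of the kind referenced above can be sketched as a running sum of observed minus expected failures. The chapter does not state which CUSUM variant, failure definition, or acceptable failure rate would be used, so all three are assumptions here; in particular, what counts as a "failure" (for example, a DASH score above some threshold at a late interval) is hypothetical.

```python
from typing import List, Sequence


def cusum(failures: Sequence[bool], acceptable_failure_rate: float) -> List[float]:
    """Return the running sum of (observed failure - expected failure) per case.

    Each success moves the curve down by the acceptable failure rate;
    each failure moves it up by (1 - acceptable failure rate). A curve that
    stays flat or drifts downward suggests performance at or better than
    the chosen standard.
    """
    total = 0.0
    path: List[float] = []
    for failed in failures:
        total += (1.0 if failed else 0.0) - acceptable_failure_rate
        path.append(total)
    return path


if __name__ == "__main__":
    # Hypothetical sequence of four consecutive cases: only the third failed.
    print(cusum([False, False, True, False], 0.25))  # [-0.25, -0.5, 0.25, 0.0]
```

Plotting one such curve per surgeon or per site against case number would give the kind of ongoing display discussed here; control limits for signaling would have to be chosen separately.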

FIGURE 4-11 DASH Score Median. A, DASH score median with ranges at interval 2 showing site difference. B, DASH score median with ranges at interval 5 showing site difference.

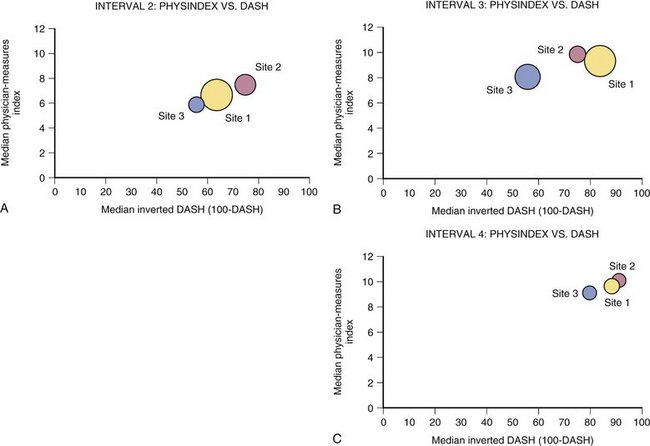

Figure 4-12 shows examples of how physician-derived data and patient instrument might be combined to represent distal radius fracture recovery at specific intervals. To create this graph, the physician values that most closely correlated with DASH score (grip, finger range of motion, visual analog scale, and work status) were assigned values to create a physician index. This index score can be seen to move in the same direction and approximate proportion compared with the DASH instrument. The importance of this fact can be surmised from the difference in number of sites represented in Figures 4-11 and 4-12. Specifically, site 4 was not successful at collecting values for physician-derived data. Although site 4 did collect patient instrument data that could be analyzed to create Figure 4-11, the frequency and completeness of data collection were insufficient to enable inclusion of site 4 in Figure 4-12.
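One way such a physician index might be assembled is sketched below. The chapter does not report the values or weights actually assigned, so everything here is an assumption: equal weighting, rescaling of each component to 0 to 100 with higher meaning worse (the same direction as DASH), finger motion expressed as a percentage of expected total active motion, and a hypothetical work-status scale on which 0 means full return to original work tasks.

```python
def physician_index(grip_pct: float, tam_pct: float, vas_pain: float,
                    work_level: int, max_work_level: int = 3) -> float:
    """Combine four physician-collected values into a 0-100 score (higher is worse).

    grip_pct       grip strength as a percentage of the unaffected side
    tam_pct        total active motion as a percentage of expected (assumed scale)
    vas_pain       visual analog scale pain score, 0 to 10
    work_level     ordinal work status, 0 = original tasks (hypothetical coding)
    """
    def clamp(value: float) -> float:
        return max(0.0, min(100.0, value))

    components = [
        100.0 - clamp(grip_pct),                      # grip deficit
        100.0 - clamp(tam_pct),                       # finger motion deficit
        clamp(vas_pain * 10.0),                       # pain rescaled to 0-100
        clamp(100.0 * work_level / max_work_level),   # work limitation
    ]
    # Equal weighting is an assumption; the study's weights are not reported.
    return sum(components) / len(components)


if __name__ == "__main__":
    print(physician_index(50, 50, 5, 3))  # 62.5
```

Because every component is required, a site that fails to collect any one of the four values cannot produce the index for that visit, which is consistent with the exclusion of site 4 described above.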

In this observational study, we intended to enroll “all comers” as related to distal radius fracture patients who met the inclusion/exclusion criteria. Strictly speaking, all patients presenting with a specific diagnosis should be enrolled. Enrollment numbers suggest, however, that patients receiving cast care as treatment were not enrolled as frequently as patients undergoing surgical intervention. Notwithstanding this limitation of the current study, for purposes of formulating some conclusions from the collected data set, the distribution of patient characteristics is encouraging in that it compares favorably with an earlier demographic study by Falch.18

Considering the need to conserve resources in medicine and the inability of orthopaedic equipment to possess sufficient embedded knowledge so as to make the physician’s decision making and physical skills of minimal importance, it seems obvious that true and ongoing tests of widespread efficiency (ability of each user to employ a tool effectively [desired outcome]) are needed with continuous comparison with an existing standard when new and differently priced tools are introduced to the market.19

Incomplete capture of late follow-up data occurred secondary to patients failing to return for later examination. The current data set has not been examined using an “intention to treat” method.20 Presumably, this method would enable a better explanation of trends, although it may “downgrade” seemingly good results. In the absence of completing this analysis, the trend differences between sites and some equipment must be viewed with caution. The clinical correlations between DASH and grip and finger motion are so significant and largely dependent on earlier, more complete data that we expect these correlations to remain positive regardless of any new data points gained vis-à-vis our planned intention-to-treat analysis.

In this study, we identified an ability to observe individual and group recovery of function over time using a validated outcomes instrument (DASH) as the primary outcome measure. Separation of treated fractures into comparative groups is not likely to have occurred in a uniformly agreeable manner. Dahlen and colleagues21 describe the “under” classification of extra-articular fractures by plain radiographs. Because of this, these authors suggested classification following routine computed tomography scan after original radiographs. If operative treatment expense is an issue, however, imagine the utility, or disutility, of obtaining a computed tomography scan in all patients presenting with a distal radius fracture. That intraobserver and interobserver reliability of fracture classification is poor for any but the simplest classification scheme is also known.22 One clear opportunity, if the power of a clinical database distributed via the Internet is to have maximal benefit for clinical studies, occurs at the moment a disease or image is classified. Whether the physician is simply given enough information to become more consistent or, ideally, a machine algorithm is used to validate any crucial classification, the value to a clinical data collection and sorting process is evident.

Summary

As related to the primary outcome of distal radius fracture treatment, we have shown that:

Pirsig2 relates the story of a South Indian monkey whose hand is caught in a coconut with rice inside. The monkey cannot remove his hand while holding onto the rice because his hand, closed around the rice, is larger than the hole he reached through with his open, flat hand. There are hunters coming. So, the monkey must choose to forgo his hunger or be caught. Maintaining his frame of reference, the monkey will lose his freedom, or worse.

Medicine’s situation is more problematic. The “hunters” may not be here tomorrow and so, depending on the variable ages and needs of physicians, the need to remove one’s “hand” from the “food” would be viewed differently. The trick becomes to see the need from the “eyes of the future.” From this vantage point, it might be possible to place the greatest value on the overall quality achieved and, in this context, the need for patient-validated measurement of equipment, physicians, and sites is apparent. The database method presented fills the need to compare variables in a manner that enables an ongoing understanding of real-world efficiency and simultaneously provides individual understanding of effectiveness.

1. Putnam MD, Gesensway D: Method and apparatus for fixation of distal radius fractures. US Patent 5586985 issued December 24th, 1996.

2. Pirsig RM. Zen and the Art of Motorcycle Maintenance. William Morrow & Company; 1974.

3. Kane R, editor. Understanding Health Care Outcomes Research. Gaithersburg, MD: Aspen Publications, 1997.

4. Wennberg JE, Fisher ES, Skinner JS. Geography and the debate over Medicare reform. http://content.healthaffairs.org/cgi/content/abstract/hlthaff.w2.96v1, February 13, 2002.

5. Freedman B. Equipoise and the ethics of clinical research. N Engl J Med. 1987;317:141-145.

6. Tunis SR, Stryer DB, Clancy CM. Practical clinical trials. JAMA. 2003;290:1624-1632.

7. Stukel T, Fisher ES, Wennberg DE, et al. Analysis of observational studies in the presence of treatment selection bias. JAMA. 2007;297:278-285.

8. D’Agostino RBJr, D’Agostino RBSr. Estimating treatment effects using observational data. JAMA. 2007;297:314-316.

9. Williamson O, Cameron P, McNeil J. Medical registry governance and patient privacy. Med J Aust. 2004;181:125-126.

10. Orthopaedic Trauma Association/Committee for Coding and Classification. OTA fracture classification. J Orthop Trauma. 1996;10(Suppl 1):27-30.

11. Chung KC, Watt AJ, Kotsis SV, et al. Treatment of unstable distal radial fractures with the volar locking plating system. J Bone Joint Surg Am. 2006;88:2687-2694.

12. Kreder HJ, Hanel DP, Agel J, et al. Indirect reduction and percutaneous fixation versus open reduction and internal fixation for displaced fractures of the distal radius: a randomized, controlled trial. J Bone Joint Surg Br. 2005;87:829-836.

13. Gesensway D, Putnam MD, Mente PL, et al. Design and biomechanics of a plate for the distal radius. J Hand Surg [Am]. 1995;20:1021-1027.

14. Putnam MD, Meyer NJ, Nelson EW, et al. Distal radial metaphyseal forces in an extrinsic grip model: implications for postfracture rehabilitation. J Hand Surg [Am]. 2000;25:469-475.

15. Gandjour A, Lauterbach KW. Utilitarian theories reconsidered: common misconceptions, more recent developments, and health policy implications. Health Care Anal. 2003;11:229-244.

16. Lim TO, Soraya A, Ding LM, et al. Assessing doctors’ competence: application of CUSUM technique in monitoring doctors’ performance. Int J Qual Health Care. 2002;14:251-258.

17. Yap C-H, Colson ME, Watters DA. Cumulative sum techniques for surgeons: a brief review. Aust N Z J Surg. 2007;77:583-586.

18. Falch JA. Epidemiology of fractures of the distal forearm in Oslo, Norway. Acta Orthop Scand. 1983;54:291-295.

19. Madhavan R, Grover R. From embedded knowledge to embodied knowledge: new product development as knowledge management. Journal of Marketing. 1998;62:1-12.

20. Hollis S, Campbell F. What is meant by intention to treat analysis? Survey of published randomized controlled trials. BMJ. 1999;319:670-674.

21. Dahlen HC, Franck WM, Saburi G, et al. Incorrect classification of extra-articular distal radius fractures by conventional x-rays: comparison between biplanar radiographic diagnostics and CT assessment of fracture morphology. Unfallchirurg. 2004;107:491-498.

22. De Oliveira Filho OM, Belangero WD, Teles JB. Distal radius fractures: consistency of the classifications. Rev Assoc Med Bras. 2004;50:55-61.