CHAPTER 38 Cardiopulmonary Resuscitation

In the late 1950s, children suffering cardiac arrest during anesthesia received 1.5 minutes of knee-to-chest “artificial respiration” followed by a thoracotomy for internal cardiac massage (Rainer, 1957). In 1958, closed-chest compressions were successfully performed on a 2-year-old child (Sladen, 1984). The resuscitation of that child, along with several successful resuscitations of subsequent patients (many undergoing anesthesia) led to reporting of closed-chest compressions for cardiac resuscitation (Kouwenhoven et al., 1960). Currently, 50% to 60% of children who have perioperative cardiac arrest are successfully resuscitated (Bhananker et al., 2007). Despite the success rate of resuscitation during anesthesia, the potential for disaster and the increased likelihood of cardiac arrests in younger children and infants require that pediatric anesthesiologists have a complete understanding of the physiology and pharmacology of cardiopulmonary resuscitation (CPR). “No more depressing shadow can darken an operating room than that occasioned by the death of a child” (Leigh and Belton, 1949).

Cardiac arrest during anesthesia

Incidence of Cardiac Arrest During Anesthesia

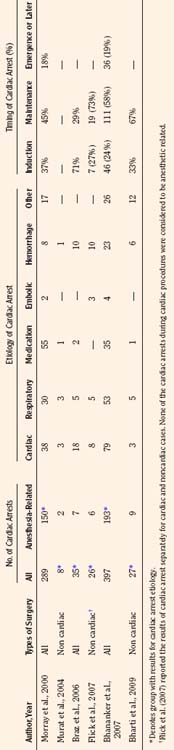

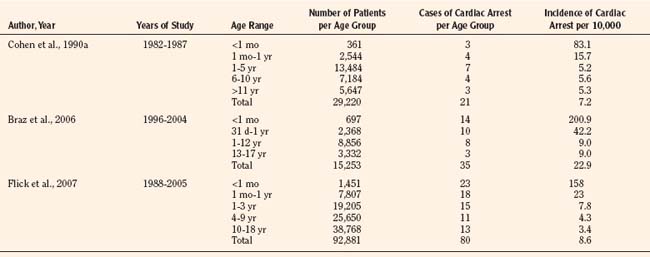

Results of studies that examined the incidence of pediatric perioperative cardiac arrest for all types of procedures, including cardiac surgery, are listed in Table 38-1. The overall incidence for pediatric perioperative cardiac arrest for all age groups undergoing all types of surgeries ranged from 7.2 to 22.9 per 10,000 procedures (Cohen et al., 1990a; Braz et al., 2006; Flick et al., 2007). Studies that excluded cardiac surgery reported a lower overall incidence, ranging from 2.9 to 7.4 per 10,000 (Murat et al., 2004; Flick et al., 2007; Bharti et al., 2009). When only anesthesia-related cardiac arrest was included, the incidence for all types of surgery (including cardiac) ranged from 0.8 to 4.58 per 10,000. The highest incidence of cardiac arrest was seen in patients undergoing cardiac surgery, ranging from 79 to 127 per 10,000 (Flick et al., 2007; Odegard et al., 2007). This information is helpful when estimating risk, but whatever the risk of pediatric perioperative cardiac arrest, the anesthesiologist must be ready and able to treat the cause and resuscitate the child.
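The rates in this section are all expressed as events per 10,000 anesthetic procedures. As a minimal sketch of that conversion, the following Python function normalizes a raw count to that denominator. The function name and the counts in the usage line are hypothetical illustrations, not data from any of the cited studies.

```python
def incidence_per_10000(events: int, procedures: int) -> float:
    """Return the number of events per 10,000 procedures."""
    if procedures <= 0:
        raise ValueError("procedures must be a positive count")
    # Multiply before dividing so whole-number results stay exact.
    return events * 10_000 / procedures


# Hypothetical example: 25 arrests observed in 50,000 anesthetics.
print(incidence_per_10000(25, 50_000))
```

Expressing every study on the same denominator is what allows the ranges above to be compared across institutions with very different case volumes.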

TABLE 38-1 Incidence of Pediatric Perioperative Cardiac Arrest by Age Group for All Types of Surgery

As shown in Table 38-1, all studies consistently found younger patient age to be a risk factor for pediatric perioperative cardiac arrest. The highest risk was seen in infants younger than 1 month of age, followed by those younger than 1 year old. The Perioperative Cardiac Arrest (POCA) registry compared age groups in anesthesia-related cardiac arrests and found that between 1994 and 1997, 56% of cases were infants younger than 1 year old, whereas between 1998 and 2004, only 38% of the cases were infants younger than 1 year old. This significant decrease in the percentage of cardiac arrest in infants is attributed to the declining use of halothane and increasing use of sevoflurane, which is associated with less bradycardia and myocardial depression (Bhananker et al., 2007). The incidence of anesthesia-related cardiac arrest is reported to be higher overall for children (1.4 to 4.6 per 10,000) than for adults (0.5 to 1 per 10,000), although the incidence in some studies is similar, presumably because both groups have high-risk patients at the extremes of age (Zuercher and Ummenhofer, 2008).

The patient’s physical condition impacts cardiac arrest risk. Risk significantly increases when American Society of Anesthesiologists (ASA) physical status (PS) is 3 or higher (Morray et al., 2000; Murat et al., 2004; Braz et al., 2006; Bhananker et al., 2007; Flick et al., 2007). Patients at ASA PS 5 are often not included in reports of anesthesia-related events, because by definition they have a low likelihood of survival, making it difficult to determine whether events are a result of their condition or related to anesthesia. Patients with ASA PS 4 and 5 have a 30 to 300 times greater risk of cardiac arrest than patients with ASA PS 1 or 2 (Rackow et al., 1961; Newland et al., 2002). Prematurity, congenital heart disease, and congenital defects are common pediatric comorbidities that increase the risk for children (Morray et al., 2000; Bhananker et al., 2007; Odegard et al., 2007).

The designation of emergency status to a patient’s procedure was a risk factor for both cardiac arrest and mortality in some studies but not in others. Emergency surgery was associated with a significantly increased incidence of perioperative cardiac arrest, with 123 per 10,000 anesthesia procedures vs. 15 to 16 per 10,000 for nonemergent cases (p < 0.05) (Braz et al., 2006). In addition to a higher incidence of arrests during an emergency procedure, a poorer outcome was also reported (Vacanti et al., 1970; Marx et al., 1973; Olsson and Hallen, 1988; Morray et al., 2000; Biboulet et al., 2001; Newland et al., 2002; Sprung et al., 2003; Bharti et al., 2009). In contrast, several studies did not find a statistically significant trend to decreased survival as a result of emergency status (Biboulet et al., 2001; Flick et al., 2007; Zuercher and Ummenhofer, 2008). It is not clear whether emergency procedures have increased perioperative risk because of the patient’s condition, the lack of optimal personnel, or both.
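The size of the difference reported by Braz et al. (2006) can be made explicit as a rate ratio. The sketch below is simple arithmetic on the figures quoted above, not an analysis taken from the cited study, and the function name is an assumption introduced for illustration.

```python
def rate_ratio(exposed_rate: float, reference_rate: float) -> float:
    """Ratio of two incidence rates expressed on the same denominator."""
    if reference_rate <= 0:
        raise ValueError("reference_rate must be positive")
    return exposed_rate / reference_rate


# Rates per 10,000 anesthesia procedures as quoted in the text.
emergency = 123
nonemergent_low, nonemergent_high = 15, 16

print(f"{rate_ratio(emergency, nonemergent_high):.1f} to "
      f"{rate_ratio(emergency, nonemergent_low):.1f} times higher")
```

On these figures, emergency cases carried roughly an eightfold higher incidence of perioperative cardiac arrest than nonemergent cases.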

Etiology of Cardiac Arrest During Anesthesia

Causes of cardiac arrest during anesthesia are typically grouped either by organ systems involved or interventions applied. A summary of the etiologies and timing of cardiac arrest during anesthesia as reported in the literature is listed in Table 38-2. The pediatric POCA registry uses a classification system that involves both interventions and organ systems, thus grouping cardiac arrests as being related to medication, cardiovascular factors, respiratory factors, or equipment (Morray et al., 2000; Odegard et al., 2007). Some etiologies may be difficult to classify because they fit into several grouping schemes. For example, succinylcholine-induced dysrhythmia may be classified as either a medication-related or a cardiovascular cause of cardiac arrest. A set of guidelines for reporting cardiac arrest data in children, known as the pediatric Utstein guidelines, suggested a classification based on organ systems for etiologies (Zaritsky et al., 1995). The Utstein guidelines used three groups consisting of cardiac, pulmonary, and cardiopulmonary factors for comparison of etiologies of cardiac arrest in children. The Utstein guidelines have not yet been widely incorporated into anesthesia-related cardiac arrest literature. The anesthesia literature generally groups the etiology of cardiac arrest into medication-related, cardiovascular, or respiratory categories, as shown in Box 38-1.

Box 38-1 Causes of Cardiac Arrest During Anesthesia

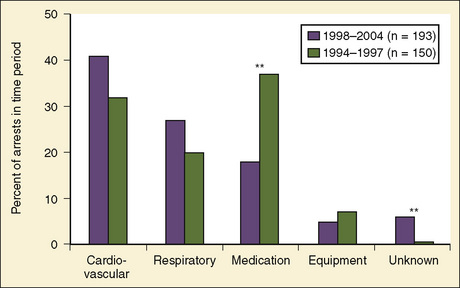

Cardiovascular-related causes

Previously, medication-related etiologies were the most common reasons for cardiac arrest related to anesthesia in children, representing approximately 35% of cardiac arrests (range of 4% to 54%) (Rackow et al., 1961; Salem et al., 1975; Keenan and Boyan, 1985; Olsson and Hallen, 1988; Morgan et al., 1993; Morray et al., 2000; Biboulet et al., 2001; Newland et al., 2002; Kawashima et al., 2003; Sprung et al., 2003). There has been a decrease in reports of medication-related etiologies to between 18% and 28%, and cardiac and respiratory causes are now the most commonly reported (Fig. 38-1) (Braz et al., 2006; Bhananker et al., 2007). This may be the result of a decrease in incidence of inhalation-agent overdose when use of sevoflurane replaced halothane for anesthetic induction. It is not clear whether sevoflurane is less cardiotoxic than halothane or the delivered dose of sevoflurane is lower because of vaporizer limits relative to a higher minimum alveolar concentration (MAC) for sevoflurane. Similarly, a decrease in succinylcholine-induced dysrhythmias was reported after a warning was issued related to use of succinylcholine in children. Other medication-related causes of cardiac arrest include those associated with regional anesthesia: intravenous (IV) administration of local anesthetic intended for the caudal space, high spinal anesthesia, and local anesthetic toxicity. Inadequate reversal of a paralytic agent and opioid-induced respiratory depression are medication-related causes of cardiac arrest that more often present in the postoperative period.

Cardiovascular-related causes of cardiac arrest now represent more than 40% of cardiac arrests related to anesthesia in children (Braz et al., 2006; Bhananker et al., 2007). Cardiac arrests caused by decreased intravascular volume are most commonly reported in this group, and causes include inadequate volume administration, excessive hemorrhage, and inappropriate volume or transfusion administration (Braz et al., 2006; Bhananker et al., 2007; Flick et al., 2007). Dysrhythmias caused by hyperkalemia are seen with succinylcholine administration, transfusion, reperfusion, myopathy, or renal insufficiency (Larach et al., 1997). Dysrhythmia or cardiovascular collapse (asystole) may have a vagal etiology as a result of traction, pressure, or insufflation of the abdomen, eyes, neck, or heart. Cardiovascular collapse can occur with anaphylaxis from exposure to latex, contrast, drugs, or dextran. Venous air embolism is another important cause of cardiovascular collapse and cardiac arrest in patients who are under anesthesia. Malignant hyperthermia is a seldom-reported cause of cardiac arrest in this group.

Respiratory-related causes are responsible for approximately 31% (range of 15% to 71%) of cardiac arrest related to anesthesia in children and adults (Rackow et al., 1961; Salem et al., 1975; Keenan and Boyan, 1985; Olsson and Hallen, 1988; Morgan et al., 1993; Morray et al., 2000; Biboulet et al., 2001; Newland et al., 2002; Kawashima et al., 2003; Sprung et al., 2003; Braz et al., 2006; Bhananker et al., 2007; Flick et al., 2007). Respiratory-related events as the primary cause of cardiac arrest have declined over the years as a source of malpractice claims, from 51% in the 1970s to 41% in the 1980s and 23% from 1990 through 2000 (Jimenez et al., 2007). Inadequate ventilation and oxygenation are broad categories often listed in this group as causes of cardiac arrest. “Loss of the airway” may involve laryngospasm or bronchospasm; an anatomy that is difficult to manage; or a misplaced, kinked, plugged, or inadvertently removed endotracheal tube (ETT). Aspiration remains a cause of respiratory-related cardiac arrest but is not often mentioned in the recent literature.

Equipment-related causes involve approximately 4% (range of 0% to 20%) of cardiac arrest related to anesthesia in children and adults (Rackow et al., 1961; Salem et al., 1975; Keenan and Boyan, 1985; Olsson and Hallen, 1988; Morgan et al., 1993; Morray et al., 2000; Biboulet et al., 2001; Newland et al., 2002; Kawashima et al., 2003; Sprung et al., 2003). Categories of equipment-related cardiac arrest most commonly described include central-venous-catheter–induced bleeding, dysrhythmias, and breathing circuit disconnection. Other etiology groups of cardiac arrest reported in some studies include multiple events (3%), inadequate vigilance (6%), or an unclear etiology (9%, range of 1% to 18%) (Olsson and Hallen, 1988; Morray et al., 2000; Biboulet et al., 2001; Kawashima et al., 2003).

Anesthesia-related cardiac arrest may be preventable 53% of the time, and anesthesia-related mortality is preventable 22% of the time (Kawashima et al., 2003). Human error may be the most important factor in deaths attributable to anesthesia and usually manifests not as a fundamental ignorance but as a failure in application of existing knowledge (Olsson and Hallen, 1988). Poor preoperative preparation and inadequate vigilance are often reported as avoidable errors. Examples of poor preoperative preparation relevant to the pediatric anesthesiologist include failure to identify patients with symptoms of an undiagnosed skeletal myopathy, coronary involvement from Williams syndrome, prolonged QT syndrome, or a cardiomyopathy. Another category of preventable causes is inadequate vigilance, such as failure to recognize progressive bradycardia and failure to respond to persistent hypotension. In addition to improving preparation and vigilance, the use of “test doses” or divided dosing when administering medications (especially drugs that may cause hypotension in unstable patients) is suggested to minimize medication errors. Other important and preventable causes of anesthesia-related cardiac arrest include transfusion-related hyperkalemia, local anesthetic toxicity, and inhalation-anesthetic overdose (Morray et al., 2000).

Outcomes of Cardiac Arrest During Anesthesia

What is the risk of a child dying during the perioperative period? Studies that have investigated this question have reported varied results, depending on whether they include only anesthesia-related causes or all causes of cardiac arrest. Although survival is the outcome most commonly viewed as a measure of successful resuscitation after cardiac arrest, mortality is the rate most commonly reported. Anesthesia-related mortality is currently reported to be 0.1 to 1.6 per 10,000 cases, which is down from 2.9 per 10,000 cases between 1947 and 1958 (Rackow et al., 1961; Morita et al., 2001; Morray et al., 2000; Flick et al., 2007). Some studies have even reported no anesthesia-related deaths (Tay et al., 2001; Murat et al., 2004; Braz et al., 2006). When all causes of perioperative cardiac arrest are included (i.e., anesthesia-related, surgical, and patient disease), risk of mortality is higher, ranging from 3.8 to 9.8 per 10,000 cases (Cohen et al., 1990a; Morita et al., 2001; Braz et al., 2006; Flick et al., 2007). Compared with neonates and infants, older children had a lower incidence of both cardiac arrest and mortality.

Although survival is used to describe a positive outcome for a patient who suffers a cardiac arrest, it is imprecise as to duration or quality of patient outcome. A patient may survive initial resuscitation attempts but subsequently die in the intensive care unit (ICU) from persistent hemodynamic instability or devastating neurologic injury. Initial survival from cardiac arrest after successful resuscitation efforts is defined as return of spontaneous circulation (ROSC), meaning that native heartbeat and blood pressure are adequate for at least 20 minutes. Although ROSC indicates a successful reversal of cardiac arrest, it may not be a meaningful indicator if many patients subsequently die in the ICU. The number of patients with ROSC after cardiac arrest is usually much greater than the number that has a longer, more meaningful, period of survival, such as survival to discharge from the hospital. Although survival to discharge indicates a longer survival than ROSC, surviving for a longer time does not address the quality of that outcome. An assessment of the quality of survival should acknowledge either the presence of a new neurologic deficit or a return to the patient’s neurologic baseline. These terms are found in some descriptions in the anesthesia-related literature on outcomes of children who suffer cardiac arrest. Full recovery after intraoperative cardiac arrest in children is reported to range from 48% to 61% (Bharti et al., 2009; Bhananker et al., 2007; Flick et al., 2007).

It is often presumed that the duration and quality of survival from a cardiac arrest that occurred in the operating room should be good, because personnel who witness the cardiac arrest and provide resuscitation are trained and prepared. A review of the anesthesia literature reveals that cardiac arrest can be reversed in over 80% of anesthesia-related episodes (Sprung et al., 2003; Bhananker et al., 2007; Bharti et al., 2009). The likelihood of ROSC decreases to 50% to 60% if causes of arrest not related to anesthesia are included. Survival to hospital discharge after an anesthesia-related cardiac arrest appears to be approximately 65% to 68% (the range for pediatric studies of this variable is large). Survival to discharge is 30% if causes of cardiac arrest unrelated to anesthesia are included. Comparing these data with data in literature not related to anesthesia reveals that studies of in-hospital cardiac arrest (IHCA) in children show a 23% rate of survival to discharge (range of 8% to 42%) (Gillis et al., 1986; Von Seggern et al., 1986; Davies et al., 1987; Carpenter and Stenmark, 1997; Parra et al., 2000; Suominen et al., 2000; Reis et al., 2002; Nadkarni et al., 2006; Tibballs and Kinney, 2006). This 23% survival-to-discharge rate is comparable with the 30% rate for all causes and much lower than the 65% rate for anesthesia-related causes of cardiac arrest in the operating room. The presence of anesthesiologists may account, in part, for the better survival outcomes in anesthesia-related cardiac arrests.

Outcome studies for cardiac arrest should include a determination of the presence of new neurologic injuries. Pediatric studies of IHCA show a 71% favorable neurologic outcome for the survivors (range of 45% to 90%) (Gillis et al., 1986; Davies et al., 1987; Carpenter and Stenmark, 1997; Parra et al., 2000; Suominen et al., 2000; Reis et al., 2002). Compilation of the available anesthesia-related literature indicates that 57% of children who suffer perioperative cardiac arrest survive and return to their baseline neurologic status, whereas 5% survive with a new neurologic deficit. Thus, for anesthetic-related cardiac arrest, a child has a 62% chance of surviving, and survivors have a 92% chance of having a favorable neurologic outcome. When all causes of cardiac arrest are included, these figures fall: 36% of children survive and 22% return to neurologic baseline, so 61% of survivors have a favorable neurologic outcome. The 71% favorable neurologic outcome for IHCA is comparable with the 61% rate for all causes and lower than the 92% rate for anesthesia-related causes of cardiac arrest in the operating room. It is worth noting that the numbers of studies and patients behind these estimates are small and the ranges are large. These data indicate that both the duration and quality of survival are favorable for children who experience cardiac arrest from anesthesia-related causes.
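The favorable-outcome percentages in the preceding paragraph are conditional on survival: the share of all arrests that end with a return to neurologic baseline is divided by the share that survive at all. A minimal sketch of that arithmetic follows, using only the percentages quoted above; the function name is an assumption introduced for illustration.

```python
def favorable_share(baseline_pct: float, survivors_pct: float) -> float:
    """Percentage of survivors who returned to their neurologic baseline.

    Both arguments are percentages of all children who had a cardiac arrest.
    """
    if survivors_pct <= 0:
        raise ValueError("survivors_pct must be positive")
    return 100.0 * baseline_pct / survivors_pct


# Anesthesia-related arrests: 57% return to baseline, 5% survive with a deficit.
anesthesia_survivors = 57 + 5
print(anesthesia_survivors, round(favorable_share(57, anesthesia_survivors)))

# All causes: 22% return to baseline out of 36% total survivors.
print(36, round(favorable_share(22, 36)))
```

Keeping the two denominators separate (all arrests versus survivors only) avoids misreading the 92% and 61% figures as overall survival rates.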

The etiology of cardiac arrest also impacts likelihood of successful resuscitation and survival. Mortality is increased if the cause of cardiac arrest is hemorrhage or is associated with protracted hypotension (both have a P < 0.001) (Girardi and Barie, 1995; Newland et al., 2002; Sprung et al., 2003). Resuscitation-related factors have an effect on outcome. These factors include cardiac rhythm during resuscitation, duration of resuscitation, and duration of no-flow and low-flow states during cardiac arrest and resuscitation. A no-flow state occurs when a patient is in cardiac arrest before receiving resuscitation efforts. A low-flow state occurs when a patient is in cardiac arrest and receiving resuscitation that is unable to provide adequate circulation. The longer the patient is in a no-flow or low-flow state, the worse the outcome is likely to be.

Asystole is a rhythm that, if present during resuscitation, has been associated with a decreased rate of both ROSC and survival to discharge for children with cardiac arrest outside of the operating room. Usually asystole is caused by prolonged hypoxia or myocardial ischemia and represents a terminal rhythm. Prolonged hypoxia causes the myocardium to be more resistant to resuscitation efforts and is more likely to result in neurologic injury. Thus, if the heart can be resuscitated, there is still the possibility of a poor outcome. In the operating room, continuous patient monitoring decreases the risk of prolonged periods of hypoxia or ischemia. Instead of asystole being a terminal rhythm, asystole in the operating room is often an initial rhythm that results from a vagal stimulation. As an initial rhythm, asystole is more likely to be reversed. Usually discontinuation of the vagal stimulus and chemical support of the heart rate are effective resuscitation measures. Unlike with cardiac arrests that occur outside the operating room, asystole is a commonly reported rhythm with anesthesia-related cardiac arrest and is associated with a good prognosis (Sprung et al., 2003).

The duration of the resuscitative efforts has an effect on patient outcome. Prolonged duration of CPR increases the possibility of low-flow intervals, thereby resulting in myocardial and cerebral injury. The need for CPR for more than 15 minutes has been determined to be a predictor of mortality in anesthesia-related cardiac arrests (P < 0.001) (Girardi and Barie, 1995). The interpretation of these data is complicated by reports of successful outcomes even after prolonged periods of resuscitation efforts. Up to 3 hours of CPR has been reported in anesthetic-related cardiac arrests, with eventual resuscitation and a good outcome (Cleveland, 1971; Lee et al., 1994). In summary, the cause of the cardiac arrest, the rhythm disturbance, and the duration of CPR can impact outcome from cardiac arrest that takes place in the operating room.

Cardiopulmonary resuscitation

Recognition of the Need for Cardiopulmonary Resuscitation

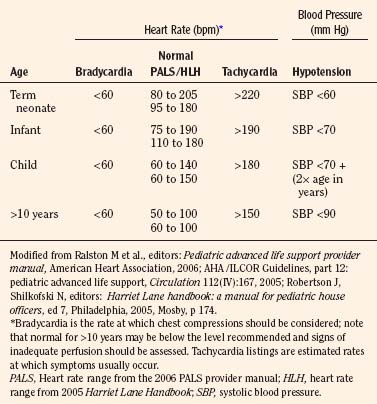

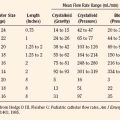

Early recognition that a child’s vital signs are inadequate and a response with rapid initiation of CPR reduces potential for injury from low-flow or no-flow intervals. It is difficult to give guidelines for the limit of each vital sign at which vital organ blood perfusion becomes inadequate for each child under anesthesia (Table 38-3). These limits depend on many factors, including the patient’s general health, the patient’s age, the type and depth of anesthesia, and the intensity and duration of deterioration of the vital signs. Pediatric training and experience are valuable in these uncommon but critical situations to help with the decision about when to initiate CPR.

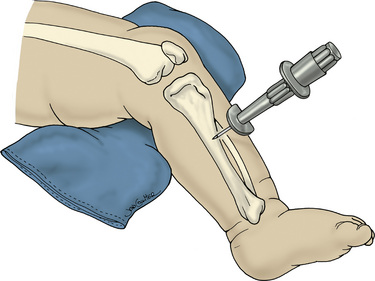

In general, CPR including chest compressions should be initiated when it is felt that perfusion is inadequate to deliver oxygen, substrates, or resuscitative medications to the heart or brain. Extensive monitoring and the continuous presence of anesthesia personnel should make the operating room an optimal setting for early detection of inadequate perfusion or ventilation. In the absence of adequate monitoring, health care personnel should palpate the umbilical artery in the newborn, the brachial artery in the infant, and the carotid artery in the child to detect an abnormal heart rate (Cavallaro and Melker, 1983; Lee and Bullock, 1991; AHA, 2006a). The analysis of a pulse in anesthetized and slightly hypotensive (systolic pressure lower than 70 mm Hg) infants revealed that detection of a pulse within 10 seconds was best with auscultation; brachial palpation was less successful than auscultation but better than carotid or femoral palpation by operating room nurses (Inagawa et al., 2003). Femoral palpation of pulse was more successful than carotid or brachial in anesthetized and hypotensive (systolic pressure lower than 70 mm Hg) infants in a subsequent study with personnel who had more pediatric resuscitation training (Sarti et al., 2006). Both studies found that counting of the heart rate over a brief time was more successful with auscultation.

Physiology of Cardiopulmonary Resuscitation: Reestablishment of Ventilation

The fraction of inspired oxygen (Fio2) that should be administered during CPR is important, because either too much or too little may be detrimental. A report by Elam et al. (1954) showed that exhaled air from the rescuer (16% oxygen) provided adequate oxygenation of the victim (arterial oxygen saturation [Sao2] of 90% or greater) and became the basis for ventilation during CPR when supplemental oxygen is not available. In the operating room, the anesthesiologist has the ability to administer 100% oxygen via tracheal intubation during CPR. The anesthesiologist is faced with the theoretic concern that delivery of high oxygen levels during reperfusion may increase formation of oxygen free radicals and increase cellular injury. This concern is weighed against the knowledge that CPR is less effective in restoring oxygen delivery to the brain and heart than is native circulation and that during CPR the administration of low levels of oxygen may increase the delay in restoration of oxygen delivery. Adequacy of oxygen delivery during CPR depends on many variables, including the cause of cardiac arrest, the length of decreased perfusion, the effectiveness of CPR, and the patient’s metabolic demands. The complexity of the determination makes it unlikely that oxygen delivery during CPR can be measured or predicted. A review of newborn resuscitation using 21% or 100% Fio2 found that newborns with depressed (but not arrested) cardiac function can be effectively resuscitated with either 21% or 100% oxygen and that 21% oxygen administration is associated with fewer markers of oxidative stress. This review also found that for cardiac arrest in newborns there is no evidence that 21% is as effective as 100% oxygen in resuscitation of circulation, and that animal studies suggest 100% oxygen administration is more effective (Ten and Matsiukevich, 2009).
A model of brain-tissue oxygen monitoring in piglets during CPR for cardiac arrest showed that despite administration of 100% Fio2, the brain-tissue oxygen levels remained either at or below the levels before cardiac arrest until after ROSC, when they became dramatically elevated (Cavus et al., 2006). This finding implies that maximal oxygen administration is needed during CPR, but that it can create hyperoxic conditions after ROSC. Without adequate data to resolve this question, it seems reasonable to continue to use 100% Fio2 during CPR for intraoperative cardiac arrest to help maximize the oxygen delivery during this low-flow state but to reduce oxygen levels once reliable oxygen monitoring shows adequate oxygenation during the hyperdynamic phase that occurs after ROSC (see Postresuscitation Care). The exception to the use of 100% O2 for resuscitation may be the child with a circulatory condition such as a hypoplastic left heart, whose poor systemic perfusion is the result of pulmonary overcirculation. In such a case, the anesthesiologist needs to decide whether high levels of oxygen administration would contribute to the poor systemic circulation.

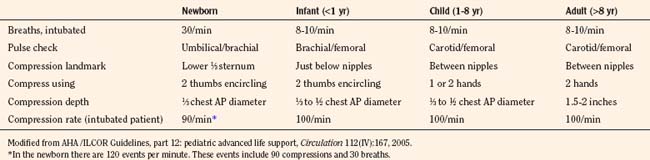

The contribution of chest compressions to ventilation during CPR impacts the decision of how much ventilation to provide to victims of cardiac arrest. Early in the study of external compressions, researchers did not add ventilation during CPR because they believed that closed-chest compression alone provided adequate ventilation (Kouwenhoven et al., 1960). The findings, that chest compressions alone provide some ventilation for adult victims and that minimal ventilation is necessary shortly after a sudden fibrillatory arrest, have resulted in over-the-phone instruction for CPR with compressions alone to untrained bystanders or those unwilling to provide mouth-to-mouth ventilation. It is difficult to determine how much chest compressions contribute to ventilation; their adequacy may vary with the cause of cardiac arrest, duration of cardiac arrest, the child’s age, an underlying medical condition, the efficacy of resuscitation, and the child’s metabolic needs. Requirements to administer oxygen and remove carbon dioxide (CO2) differ by type of cardiac arrest; a sudden fibrillatory arrest has little loss of oxygen reserve or accumulation of CO2, and a gradual asphyxial cardiac arrest has greatly depleted oxygen reserve and large accumulation of CO2. Asphyxial cardiac arrest derives a greater benefit from ventilation efforts. A model of asphyxial arrest in piglets shows greatest benefit with delivery of both compressions and ventilations compared with compression or ventilation alone (Berg et al., 2000). Provision of ventilation early in resuscitation from cardiac arrest may be less necessary and has the potential to cause a respiratory alkalosis, resulting in unwanted effects on brain circulation and oxygen delivery. As the duration of cardiac arrest continues, despite CPR efforts, metabolic acidosis predominates and respiratory compensation may be difficult. 
Lack of data usually leads the pediatric anesthesiologist to choose a rate based on recommendations for age (10 ventilations per minute in children and 30 ventilations per minute for newborns) and to adjust the rate if blood-gas analysis becomes available during resuscitation (Table 38-4).

Intubation of the trachea by the anesthesiologist is recommended for the management of ventilation during intraoperative cardiac arrest. Without intubation and positive pressure ventilation, soft-tissue obstruction may prevent adequate ventilation in some victims (Safar et al., 1961). An unprotected airway puts patients at greater risk for aspiration during CPR because of loss of the airway’s protective reflexes and increased likelihood of stomach distention with positive pressure ventilation. At onset of cardiac arrest, the lower esophageal sphincter competency falls from approximately 20 cm H2O to 5 cm H2O (Gabrielli et al., 2005). The laryngeal mask airway (LMA) compares favorably with mouth-to-mouth ventilation, mask ventilation, and other airway adjuncts during CPR, but there are limited data for a comparison with tracheal intubation during CPR, and nonintubation techniques may be less protective against gastric distention or aspiration (Samarkandi et al., 1994; Rumball and MacDonald, 1997; Stone et al., 1998). Airway adjuncts are not recommended as a replacement for tracheal intubation during CPR in children, especially when an anesthesiologist is available (Grayling et al., 2002). Tracheal intubation is optimal to assure ventilation during CPR for pediatric anesthesiologists, because they maintain training to use this procedure.

The appropriate placement of the ETT during cardiac arrest can be verified in most instances by the presence of end-tidal CO2 (Etco2). The incidence of accidentally placing an ETT in the esophagus of a child is greater during cardiac arrest (19% to 26%) than during an intubation that is not involved with cardiac arrest (3%) (Bhende and Thomson, 1992; Bhende and Thomson, 1995). Demonstration of persistent Etco2 waveforms after intubation is extremely reliable to confirm correct placement of the ETT in children with spontaneous circulation (Bhende et al., 1992). The lack of a measurable Etco2 level in the ETT usually indicates esophageal intubation. In resuscitation from a cardiac arrest, the pulmonary blood flow is decreased during CPR and the Etco2 level may be falsely low or absent despite a correctly placed ETT. This finding of no Etco2 detected during CPR in children experiencing cardiac arrest was seen in 14% to 15% of correctly placed ETTs (Bhende et al., 1992; Bhende and Thomson, 1995). Continually detectable Etco2 is proof of tracheal intubation even during cardiac arrest. The absence of Etco2 on placement of the ETT indicates that the larynx should be visually inspected to rule out esophageal intubation. Loss of Etco2 during resuscitation efforts may indicate that the ETT is dislodged and should be reinspected or replaced, that the ETT is plugged or kinked and a suction catheter should be passed, or that pulmonary blood flow is diminished and resuscitation efforts need to be increased. Tracheal intubation for resuscitation also offers the option of access (although limited) to the circulation for drug administration.

Interruption of chest compressions for delivery of ventilation increases the percentage of time that there is an absence of perfusion to vital organs; this percentage of CPR without perfusion is referred to as the no-flow fraction (NFF). In addition to producing times with no perfusion, interruptions in the delivery of compressions result in a pooling of blood in the vasculature that causes the need for several compressions to be delivered before perfusion is back to the preinterruption level (Berg et al., 2001). Thus, there are both no-flow and low-flow problems caused by pausing compressions for ventilation or any other reason. The presence of an ETT during CPR eliminates concern for ventilation attempts contributing to the NFF. During CPR performed by bystanders compressions are held, ventilations are delivered, and then compressions are resumed. These pauses in chest compressions make it easier for ventilation provided by mouth-to-mouth or bag-mask ventilation to be delivered to the lungs, thereby improving the patient’s ventilation and reducing the probability of gastric inflation. The need to interpose ventilations, thus interrupting compressions, during CPR is eliminated by placement of an ETT. A significant amount of research compares the effects of chest compression with ventilation ratios of 15:2, 30:2, and longer (continuous compressions) with varying results for fibrillatory and asphyxial cardiac arrest in prehospital settings. These ratios become irrelevant to the anesthesiologist when an ETT is placed, and compressions can be performed without interruptions for ventilation in a 10:1 ratio, generating 100 compressions and 10 ventilations per minute. The goal for the anesthesiologist is to maintain continuous delivery of compressions with interruption only at the 2-minute intervals necessary for switching of compression providers to prevent fatigue, pulse checks to determine ROSC, and when needed, the delivery of shocks. 
Intubation, central line placement, and placement of adhesive pads for defibrillation are other commonly reported interruptions to chest compressions and should be minimized and compressions should be continued when possible. It is important to remember the negative impact of holding compressions during intubation attempts and to absolutely minimize the duration of procedures that require these interruptions.

A comparison of different methods of delivery of ventilation during chest compressions revealed differences in oxygenation, ventilation, and hemodynamics (Wilder et al., 1963). Delivery of ventilations independent of compressions, interposed between compressions, and synchronized with compressions allows both adequate oxygenation and ventilation, but their effects on hemodynamic pressures vary. Delivery of positive pressure ventilation affects hemodynamic variables through changes in intrathoracic pressure. CPR with simultaneous compression and ventilation increases intrathoracic pressure at the time of compression and yields improvement in blood flow and survival in a canine model, but it has not shown the same benefit in humans. The simultaneous increase in intrathoracic pressure may lead to increased ejection of blood from the thorax, but elevation of intrathoracic pressure also leads to increases in intracardiac pressure and ICP. Increasing intracardiac pressure at the time of compression may result in no change in the MPP and no overall benefit to the heart. Increases in ICP occur with increases in intrathoracic pressure and may result in no change in the cerebral perfusion pressure (CPP) and no overall benefit to the brain (see section that follows, Physiology of Cardiopulmonary Resuscitation: Reestablishment of Circulation, for mechanism). Increasing intrathoracic pressure during the relaxation phase of chest compressions has the potential to decrease venous return and may have significant impact on the effectiveness of subsequent compressions, depending on the duration of ventilation pressure. Attention to rate, duration, and pressure used during delivery of ventilations can prevent the excessive ventilation that is common during these high-stress events and the impact overventilation has on venous return. 
Use of the impedance threshold device, the intrathoracic pressure regulator (ITPR), and decompression during CPR are techniques used to increase venous return by lowering intrathoracic pressure and are discussed in later sections.

Overventilation or underventilation can be detrimental during CPR. As discussed previously, overventilation can have hemodynamic effects or result in hypocarbia; either of these could result in decreased perfusion of the brain. Underventilation could result in a decrease in perfusion either from reduced pulmonary blood flow during CPR secondary to the increased vascular resistance that results from atelectasis or from the systemic effects of hypercarbia in addition to metabolic acidosis. The determination of a ventilation rate during CPR depends on the age of the child, whether the airway is secured, the number of rescuers, the type of cardiac arrest, and duration of the cardiac arrest. The young child has an increased baseline metabolic activity and a greater need for an increase in the number of ventilations during CPR. Recommendations for newborns include rates of about 30 breaths per minute, whether there are 1 or 2 rescuers and whether or not the child is intubated. The infant, the child between 1 and 8 years old, the child older than 8 years, and the adult share recommendations for 8 to 10 breaths per minute with intubation (Table 38-4). The newborn has both the highest metabolic activity and baseline CO2 production and a greater chance of having a cardiac arrest with a prolonged ischemic period, resulting in a greater need to eliminate CO2. There may be an ideal range for ventilation during CPR; overventilation may increase intrathoracic pressure (causing reduced venous return and increased ICP) and lower arterial carbon dioxide tension (Paco2), causing cerebral vasoconstriction, whereas underventilation may allow lung collapse and atelectasis, reducing pulmonary blood flow, MPP, and CPP. The decrease in pulmonary blood flow during CPR for cardiac arrest produces higher levels of venous CO2 and lower levels of arterial CO2 and Etco2. 
Determining the adequacy of ventilation efforts during CPR is difficult, because low pulmonary blood flow affects the CO2 levels measured by both Etco2 and blood-gas monitoring. These techniques regain their usefulness in monitoring ventilation efforts as pulmonary blood flow improves with resuscitation or ROSC.

Physiology of Cardiopulmonary Resuscitation: Reestablishment of Circulation

Mechanisms of Blood Flow During Cardiopulmonary Resuscitation

Kouwenhoven et al. (1960) proposed that external chest compressions squeeze the heart between the sternum and the vertebral column, forcing blood to be ejected. This assumption about direct cardiac compression during external CPR became known as the cardiac-pump mechanism of blood flow. The cardiac pump mechanism proposes that the atrioventricular (AV) valves close during ventricular compression and that ventricular volume decreases during ejection of blood. During chest relaxation, ventricular pressures fall below atrial pressures, enabling the AV valves to open and the ventricles to fill. This sequence of events resembles the normal cardiac cycle and occurs with use of direct cardiac compression during open-chest CPR.

Several observations of hemodynamics during external CPR are inconsistent with the cardiac pump mechanism for blood flow (Table 38-5). First, similar elevations in arterial and venous intrathoracic pressures during closed-chest CPR suggest a generalized increase in intrathoracic pressure (Weale and Rothwell-Jackson, 1962). Second, reconstructing thoracic integrity in patients with flail sternums improves blood pressure during CPR (unexpected, because a flail sternum should allow direct cardiac compression during closed-chest CPR) (Rudikoff et al., 1980). Third, patients who develop ventricular fibrillation (VF) produce enough blood flow by repetitive coughing or deep breathing to maintain consciousness; these are examples in which no compression of the heart occurs, only an increase in intrathoracic pressure (MacKenzie et al., 1964; Criley et al., 1976; Niemann et al., 1980; Harada et al., 1991). These observations suggest a generalized increase in intrathoracic pressure may contribute to the production of blood flow during CPR. The finding that changes in intrathoracic pressure without direct cardiac compression (e.g., a cough) produce blood flow epitomizes the thoracic-pump mechanism of blood flow during CPR. Familiarity with the thoracic-pump and cardiac-pump mechanisms of blood flow during CPR helps in understanding how alternative methods of CPR might be advantageous.

TABLE 38-5 Comparison of Mechanisms of Blood Flow During Closed-Chest Compressions

| Proposed Mechanism | Cardiac Pump | Thoracic Pump |
| --- | --- | --- |
| | Sternum and spine compress heart | General increase in intrathoracic pressure |
| Findings During Compression | | |
| Atrioventricular valves | Close | Stay open |
| Aortic diameter | Increases | Decreases |
| Blood movement | Left ventricle to aorta | Pulmonary veins to aorta |
| Ventricular volume | Decreases | Little change |
| Compression rate | Dependent | Little effect |
| Duty cycle | Little effect | Dependent |
| Compression force | Increases role | Decreases role |
| Patient Physiology | | |
| | Small chest | Large chest |
| | High compliance | Low compliance |

Thoracic-Pump Mechanism

Chest compression during CPR generates almost equal pressures in the left ventricle, aorta, right atrium, pulmonary artery, airway, and esophagus. Because all intrathoracic vascular pressures are equal, the suprathoracic arterial pressures must be greater than the suprathoracic venous pressures for a cerebral perfusion gradient to exist. Venous valves, either functional or anatomic, prevent direct transmission of the rise in intrathoracic pressure to the suprathoracic veins (Niemann et al., 1981; Swenson et al., 1988; Paradis et al., 1989; Chandra et al., 1990; Goetting and Paradis, 1991; Goetting et al., 1991). This unequal transmission of intrathoracic pressure to the suprathoracic vasculature establishes the gradient necessary for cerebral blood flow during closed-chest CPR.

During normal cardiac activity, the lowest pressure measurement occurs on the atrial side of the AV valves, providing a downstream effect that allows venous return to the pump. The extrathoracic shift of this low-pressure area to the cephalic side of jugular venous valves during the thoracic-pump mechanism implies that the heart is merely serving as part of a conduit for blood flow. Angiographic studies show that during a single chest compression, blood passes from the venae cavae through the right heart to the pulmonary artery and from the pulmonary veins through the left heart to the aorta (Niemann et al., 1981; Cohen et al., 1982). Unlike during normal cardiac activity and open-chest CPR, echocardiographic studies during closed-chest CPR have shown that AV valves remain open during blood ejection (Rich et al., 1981; Werner et al., 1981; Clements et al., 1986). In addition, unlike during native cardiac activity and open-chest CPR, aortic diameter decreases instead of increasing during blood ejection (Niemann et al., 1981; Werner et al., 1981). These findings about closed-chest CPR support the thoracic-pump theory that the chest becomes the “bellows,” producing blood flow during CPR, and that the heart is a passive conduit.

Cardiac-Pump Mechanism

Despite evidence for the importance of the thoracic-pump mechanism of blood flow during external chest compressions, there are specific situations in which the cardiac pump mechanism predominates during closed-chest CPR. First, applying more force during chest compressions (as in high-impulse CPR, see related section) increases the likelihood of direct cardiac compression and closure of AV valves (Feneley et al., 1987; Hackl et al., 1990). Second, a small chest size allows for more direct cardiac compression, causing better hemodynamics during closed-chest CPR in a canine model (Babbs et al., 1982a). Third, the compliant infant chest should permit more direct cardiac compression, as shown in a closed-chest CPR model in piglets, in which excellent blood flows are produced as compared with most adult models (Schleien et al., 1986). Transesophageal echocardiography studies have demonstrated the closing of AV valves during the compression phase of CPR in humans (Higano et al., 1990; Kuhn et al., 1991). These findings support the occurrence of cardiac compression during conventional CPR, suggesting that both mechanisms of blood flow may occur during CPR. As will be seen in a later section, varying the method of CPR may alter the contribution of each mechanism.

Efficacy of Blood Flow During Cardiopulmonary Resuscitation

The level of blood flow to vital organs produced by conventional closed-chest CPR without pharmacologic support (basic life-support models) is disappointingly low. The range of cerebral blood flow in dogs during CPR is 3% to 14% of levels before cardiac arrest (Bircher and Safar, 1981; Koehler et al., 1983; Koehler and Michael, 1985; Luce et al., 1984; Jackson et al., 1984). CPPs are also low, at 4% to 24% of levels before cardiac arrest in animals and only 21 mm Hg in humans (Bircher et al., 1981; Koehler et al., 1983; Luce et al., 1984; Goetting et al., 1991). Myocardial blood flows in this basic CPR mode are also discouragingly low at 1% to 15% of pre–cardiac arrest levels in dogs (Chandra et al., 1981a; Voorhees et al., 1983; Koehler et al., 1985; Halperin et al., 1986a; Shaffner et al., 1990). MPP correlates with myocardial blood flow in a one-to-one relationship when flow is measured in mL/min per 100 g and MPP in mm Hg (Voorhees et al., 1983; Ralston et al., 1984). Several factors affect cerebral and myocardial blood flow during CPR, and these disappointing results in basic life-support models can be improved with addition of pharmacologic support.

Physiologic thresholds for minimal vital organ blood flow during CPR have been described. The inability to maintain blood flow above these thresholds during CPR results in organ malfunction. A myocardial blood flow of 20 mL/min per 100 g or greater is necessary for successful defibrillation in dogs (Guerci et al., 1985; Sanders et al., 1985a). A cerebral blood flow of greater than 15 to 20 mL/min per 100 g is necessary to maintain normal electrical activity during CPR (Michael et al., 1984). Models of basic life support often do not achieve these thresholds; the addition of advanced life support measures, such as epinephrine administration, is associated with blood flow levels above these thresholds.

Maintenance of Circulation During Cardiopulmonary Resuscitation

Patient-Related Factors

Based on limited data, young age appears related to higher cerebral blood flow during closed-chest CPR. A piglet model has substantially higher cerebral blood flow (50% of that before cardiac arrest) and slightly higher myocardial flows (17% of that before cardiac arrest) than those reported for adult models (Schleien et al., 1986). Studies on slightly older pigs yielded opposing results (Brown et al., 1987b; Sharff et al., 1984). The cerebral blood flow in the first of these two studies was markedly higher than that in adult models during closed-chest CPR, and neither of the myocardial flows was different from adult models. No human data exist comparing blood flows at different ages during CPR.

Age-related physical factors that affect the blood flow produced during CPR include chest wall compliance and chest wall deformability. Chest wall compliance impacts both the ability to produce anteroposterior displacement and to directly compress the heart. Young children have increased chest wall compliance that facilitates the achievement of adequate compression depth and increases the chance of direct cardiac compression, either of which can result in better blood-flow production by chest compressions. These benefits of the more compliant infant chest may account for high flows that resemble those produced by open-chest cardiac massage in a piglet model (Schleien et al., 1986). Chest wall deformability is another factor that relates to the ability to maintain flows during prolonged periods of chest compressions. Chest deformation occurs as CPR becomes prolonged. The chest assumes a flatter shape as compressions continue, producing larger decreases in cross-sectional area at the same displacement. Progressive deformation may be beneficial if it leads to more direct cardiac compression. Unfortunately, too much deformation may result in loss of recoil of the chest wall during release of compression. Decreased chest recoil with progressive deformation limits displacement and produces less effective compression and less venous return during release of compression.

A model of conventional CPR in piglets shows a progressive decrease in the effectiveness of prolonged chest compressions to produce blood flow (Schleien et al., 1986; Dean et al., 1991). The permanent deformation of the chest in this model approaches 30% of original anteroposterior diameter. An attempt to limit deformation by increasing intrathoracic pressure during compression with simultaneous-ventilation CPR resulted in no improvement in either amount of deformation or time to deterioration of flow (Berkowitz et al., 1989). Investigators used a third mode of infant-animal CPR by using a vest to deliver compressions in an attempt to limit production of deformation. The vest distributes compression force diffusely around the thorax and greatly decreases permanent deformation (3% vs. 30%) (Schleien et al., 1986; Shaffner et al., 1990). Unfortunately, the deterioration of blood flow with time still occurs and appears to be unrelated to the amount of deformation in this model and is more likely related to the duration of prolonged CPR. There has not been a direct comparison of adult and pediatric CPR in humans. The increased compliance and deformability of the infant’s chest make it likely that CPR would be more effective in children than in adults (as seen in animal models).

An increased duration of CPR has a negative effect on cerebral blood flow and seems to be most detrimental in the infant preparation (Schleien et al., 1986; Sharff et al., 1984). The length of the no-flow period before CPR begins also has a negative effect on the cerebral blood flow that is produced with CPR (Shaffner et al., 1999; Lee et al., 1984). As the preceding ischemic interval lengthens, supratentorial brain blood flow during CPR is reduced more than brain-stem flow (Shaffner et al., 1998, 1999). The cause of these detrimental effects on cerebral blood flow is unclear. Tissue hypoxia resulting in loss of vascular tone that eventually becomes unresponsive to vasoconstrictors, pulmonary edema, capillary leak, and (with prolonged duration of CPR) chest wall deformity are factors that are likely to contribute. It remains obvious that a short ischemic period and quick resuscitation improve the eventual outcome.

ICP is another patient-related factor that affects the circulation produced during CPR. ICP can represent the downstream pressure for cerebral blood flow, and if elevated it can inhibit cerebral perfusion. Increases in intrathoracic pressure with closed-chest CPR cause ICP increases (Rogers et al., 1979). This relationship is linear, and one third of the increase in intrathoracic pressure generated by chest compression is transmitted to ICP (Guerci et al., 1985). The carotid arteries and jugular veins do not appear to be involved in the transmission of intrathoracic pressure to the intracranial contents. The transmission can be partially blocked by occluding cerebrospinal fluid or vertebral vein flow (Guerci et al., 1985). The rise in ICP with chest compressions becomes more significant in the setting of baseline increased ICP (the fraction of intrathoracic pressure transmitted to ICP increases to two thirds). The efficacy of CPR to perfuse the brain deteriorates markedly in the face of elevated ICP. When increased ICP is suspected (e.g., a child with hydrocephalus or head trauma), the ICP should be lowered early in the resuscitation (e.g., shunt tapped or hematoma drained) to increase the effectiveness of chest compressions to perfuse the brain.

Volume status, more specifically hypovolemia, is another patient-related factor that can have an impact on the effectiveness of chest compressions. There are few data to address the impact of volume status on blood flow during chest compression. Animal models include the administration of fluid (30 mL/kg or to a right atrial pressure of 6 to 8 mm Hg) before inducing cardiac arrest in fasted animals to improve the effectiveness of CPR (Sanders et al., 1990; Eleff et al., 1995).

Compression Rate and Duty Cycle

Compression rate is the number of cycles per minute. Duty cycle is the ratio of the duration of the compression phase to the entire compression-relaxation cycle, expressed as a percentage. For example, at the recommended rate of 100 compressions per minute, the total cycle for compression and relaxation is 0.6 seconds (100 compressions × 0.6 seconds/compression = 60 seconds = 1 minute). A 0.36-second compression time produces a 60% duty cycle (0.36 sec/0.6 sec = 60%). The impact of duty cycle differs between the two mechanisms of blood flow (Table 38-5). In 1986 the American Heart Association Guidelines for CPR and Emergency Cardiac Care recommended increasing the rate of chest compressions from 60 to 100 per minute. This change represented a compromise between advocates of the thoracic-pump mechanism and those of the cardiac-pump mechanism (Feneley et al., 1988). The mechanics of these two theories of blood flow differ, but a faster compression rate could augment both.
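The cycle-time and duty-cycle arithmetic in the example above can be sketched in a few lines of Python. This is an illustration only; the function names are hypothetical and the sketch assumes evenly spaced compressions with no pauses.

```python
def cycle_time_s(rate_per_min: float) -> float:
    """Duration of one compression-relaxation cycle, in seconds."""
    if rate_per_min <= 0:
        raise ValueError("rate must be positive")
    return 60.0 / rate_per_min


def duty_cycle_pct(compression_time: float, rate_per_min: float) -> float:
    """Compression phase as a percentage of the whole cycle."""
    return 100.0 * compression_time / cycle_time_s(rate_per_min)


def compression_time_s(duty_pct: float, rate_per_min: float) -> float:
    """Time each compression must be held to reach a given duty cycle."""
    return duty_pct / 100.0 * cycle_time_s(rate_per_min)


if __name__ == "__main__":
    # Values from the text: 100 compressions per minute, 0.36-second compression.
    print(f"cycle time: {cycle_time_s(100):.2f} s")
    print(f"duty cycle: {duty_cycle_pct(0.36, 100):.0f}%")
    # Hold time needed for a 50% duty cycle at 60 vs 100 compressions per minute.
    for rate in (60, 100):
        print(f"rate {rate}/min: hold {compression_time_s(50, rate):.2f} s")
```

Under these assumptions, a 50% duty cycle requires each compression to be held for 0.5 seconds at 60 compressions per minute but only 0.3 seconds at 100 per minute.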

In the cardiac-pump mechanism of blood flow during CPR, direct cardiac compression generates blood flow, and the force of compression determines the stroke volume per compression. Prolonging the compression (increasing the duty cycle) beyond the time necessary for full ventricular ejection fails to produce any additional increase in stroke volume in this model. Also, increasing rate of compressions increases cardiac output, because a fixed ventricular blood volume ejects with each cardiac compression. Therefore, in the cardiac-pump mechanism, blood flow is rate sensitive and duty-cycle insensitive. In the thoracic-pump mechanism, the reservoir of blood to be ejected is the large capacitance of the intrathoracic vasculature. With the thoracic pump mechanism, increasing either force of compression or duty cycle enhances flow by emptying more of the large intrathoracic capacity. Changes in compression rate have less effect on flow over a wide range of rates (Halperin et al., 1986a). Blood flow in the thoracic pump mechanism is generally duty-cycle sensitive but rate insensitive. With an increase in duty cycle, the percentage of time in compression is prolonged, but time for relaxation becomes decreased and venous return may become inhibited. At slow compression rates, the ability to hold a compression to prolong the duty cycle becomes physically demanding. The increased ability of a rescuer to produce a 50% duty cycle at a rate of 100 (compared with 60) compressions per minute is the reason behind the compression rate change recommendation in the 1986 American Heart Association guidelines for CPR.

The no-flow fraction and measurement of compressions delivered are important factors in the continued recommendation of a rate of 100 compressions per minute. The NFF is the percentage of time that compressions are interrupted. The interruption of compressions not only produces a no-flow time but also reduces the effectiveness of the initial compressions on the resumption of chest compressions. The NFF in CPR performed by bystanders during out-of-hospital cardiac arrest (OHCA) has been reported to be 48% (Wik et al., 2005). For IHCA, an NFF of 24% has been reported with a sensing monitor and defibrillator (Abella et al., 2005). Reducing the pauses for ventilations from a compression/ventilation ratio of 15:2 to 30:2 in a bystander model of CPR on a manikin reduced NFF from 33% to 22% (Betz et al., 2008). Tracheal intubation in OHCA resulted in a reduction of NFF from 61% to 41% (p = 0.001) (Kramer-Johansen et al., 2006). Preshock pause also contributes to the NFF. Automatic external defibrillators (AEDs) create a variable preshock pause of 5 to 28 seconds. A 5-second increase in preshock pause was associated with a decrease in shock success (p = 0.02), and shock success fell from 94% if the pause was for fewer than 10 seconds to only 38% if it was longer than 30 seconds (Edelson et al., 2006). Interruptions of compressions for delivery of ventilation can be eliminated by tracheal intubation, and interruptions for AED analysis can be eliminated by using a manual defibrillator. The goal is to have only a 10-second interruption every 2 minutes (120 seconds) for compressor change and rhythm analysis, resulting in an NFF of about 8%.
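The NFF target described above follows directly from the definition (time without compressions divided by total CPR time). The short Python sketch below illustrates that arithmetic; the function name and the idea of passing a list of pause durations are assumptions made for illustration and do not describe any monitoring device.

```python
from typing import Iterable


def no_flow_fraction(pauses_s: Iterable[float], total_s: float) -> float:
    """Fraction of total CPR time during which compressions were held."""
    pauses = list(pauses_s)
    if total_s <= 0:
        raise ValueError("total time must be positive")
    if any(p < 0 for p in pauses) or sum(pauses) > total_s:
        raise ValueError("pauses must be non-negative and fit within total time")
    return sum(pauses) / total_s


if __name__ == "__main__":
    # Goal from the text: one 10-second interruption in every 120 seconds.
    nff = no_flow_fraction([10], 120)
    print(f"NFF: {100 * nff:.1f}%")
```

With a single 10-second pause per 2-minute cycle the fraction is 10/120, or roughly 8%, which matches the goal stated in the text.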

The number of compressions delivered per minute may differ from the compression rate. The compressor (see later section on teamwork for roles assumed during intraoperative CPR) may be delivering compressions at a rate of 0.6 seconds per cycle (100 compressions per minute), but if each minute there are 10 seconds of held compressions, then the number of compressions delivered at this rate falls to 83 compressions per minute. In the analysis of a 1-minute segment in which there were 15 seconds of held compressions, this same compression rate (0.6 seconds per cycle) resulted in a decrease to 75 compressions delivered to the patient. In an OHCA study of adults, the use of a compression rate of 121 resulted in the number of compressions being delivered at 64 per minute (Wik et al., 2005). The accomplishment of 80 chest compressions per minute has been correlated with successful resuscitation in an animal model (Yu et al., 2002). Resuscitation team members in the roles of compressor and leader need to be aware of how many actual compressions per minute are occurring and minimize interruptions to keep the rate of compressions delivered above 80 per minute. In an intubated patient, compressing at a rate of 100 per minute and only stopping for 10 seconds every 2 minutes to change compressors and perform pulse checks and rhythm analysis results in 92 compressions delivered per minute.
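The difference between compression rate and compressions actually delivered can be checked with a brief Python sketch. The function name and parameters are hypothetical, and the calculation assumes a constant cycle time whenever compressions are in progress.

```python
def delivered_per_min(cycle_s: float, held_s: float, window_s: float = 60.0) -> float:
    """Average compressions delivered per minute over an observation window.

    cycle_s  -- seconds per compression-relaxation cycle (0.6 s at 100/min)
    held_s   -- seconds within the window during which compressions were held
    window_s -- length of the observation window in seconds
    """
    if cycle_s <= 0 or window_s <= 0 or not 0 <= held_s <= window_s:
        raise ValueError("invalid timing values")
    compressions = (window_s - held_s) / cycle_s
    return compressions * 60.0 / window_s


if __name__ == "__main__":
    # Scenarios from the text, all at 0.6 seconds per cycle.
    print(round(delivered_per_min(0.6, 10)))                # 10 s held each minute
    print(round(delivered_per_min(0.6, 15)))                # 15 s held each minute
    print(round(delivered_per_min(0.6, 10, window_s=120)))  # 10 s held every 2 min
```

These three calls reproduce the figures of 83, 75, and 92 compressions per minute given above. By the same arithmetic, at 0.6 seconds per cycle more than 12 seconds of held compressions in any minute brings the delivered count below 80.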

Compression force is the pressure and the acceleration applied to the chest. There are accelerometers available to monitor and provide feedback about the compression force applied with each compression, but they are not typically available in intraoperative resuscitation. The compression depth is the amount of anterior-posterior displacement provided by a compression and is related to the compression force applied and the compliance of the chest wall. Compression depth for adult patients is recommended as 38 to 50 mm (1.5 to 2 in) and as one third to one half of the anterior-posterior chest diameter for children and infants. Literature on adults indicates that a depth of 38 mm is not often achieved during resuscitation. Compression depths less than 38 mm occurred for 37% of compressions during IHCA on adults (mean depth 43 mm for all compressions) and for 62% of compressions during OHCA (mean depth 34 mm) (Abella et al., 2005; Wik et al., 2005). In a study of the importance of adequate compression depth for the success of shocks delivered during IHCA, a 5-mm increase in compression depth improved first-shock success (p = 0.028) (Edelson et al., 2006). Compression depth in a pediatric manikin model was 14 mm with the two-thumb technique vs. 9 mm with the two-finger technique (p < 0.001), indicating that the two-thumb technique is more effective for depth of compression (Udassi et al., 2009). In the operating room, the leader and recorder can assess the depth of compressions provided by the compressor and remind the compressor to achieve the suggested depth. The improvement in Etco2 production should be noted when increasing compression depth with a goal of maximizing Etco2 levels, which should correlate with blood flow through the lungs and vital organs.

Full recoil of the chest and avoiding any pressure during the release of compression (avoiding leaning on chest) is a key concept in the performance of chest compressions. The native chest recoil leads to increased negative intrathoracic pressure that augments venous blood return and blood ejection with subsequent compression. An animal model of incomplete recoil during active compression-decompression CPR resulted in increased intrathoracic pressure and reduced systemic arterial pressure, MPP, and CPP. The decrease in cerebral perfusion was related to the decrease in systemic arterial pressure rather than an increase in ICP (Yannopoulos et al., 2005b). In humans the effect of incomplete recoil on intrathoracic pressure can be similar to the use of excessive rates or durations of ventilation and is likely to result in less effective CPR because of poor venous return (Aufderheide and Lurie, 2004). In a study of pediatric IHCA, a feedback device alerted the compressor to leaning. Leaning was present in 97% of nonfeedback compressions and 89% of feedback compressions when defined as force applied to the chest of more than 0.5 kg and present in 83% of the nonfeedback compressions and 71% of the feedback compressions when defined as depth applied to the chest of more than 2 mm (Niles et al., 2009). It is interesting that a feedback device during CPR was effective in causing a lower rate of leaning, but the majority of compressions still resulted in this complication in their patients despite feedback. The importance of this concept, that venous return and blood flow are related to chest recoil, has led to the development of alternative methods of providing compressions or ventilations to improve chest recoil during release of compression (active decompression CPR and some other techniques are discussed in a later section). 
Prevention of leaning on the chest during release of compression is difficult and may require the use of both visual and audio feedback devices and alternative methods of CPR. A team approach can be tried during an intraoperative cardiac arrest with the recorder and leader assessing the compressor and advising if full recoil appears to be inhibited by leaning on the chest between compressions.

Distribution of Blood Flow During Cardiopulmonary Resuscitation

Distribution of blood flow to both the heart and brain during CPR is influenced by the development of regional gradients. Distribution of blood flow to the brain depends on development of three regional gradients: the intrathoracic-suprathoracic gradient, the intracranial-extracranial gradient, and the caudal-rostral gradient. The intrathoracic-suprathoracic gradient provides flow of oxygenated blood from the chest to the upper extremities and head. Either venous collapse secondary to elevated intrathoracic pressure or closure of anatomic valves in the jugular system prevents the transmission of intrathoracic pressure to the suprathoracic venous system (Rudikoff et al., 1980; Niemann et al., 1981; Fisher et al., 1982). When CPR is effective, arterial collapse does not occur and elevated intrathoracic pressure results in a gradient that promotes suprathoracic blood flow. The intracranial-extracranial gradient directs blood to the brain away from extracranial suprathoracic vessels and toward intracranial vessels. α-Adrenergic agonists constrict extracranial vessels but have little effect on intracranial vessels, resulting in increased intracranial blood flow. Use of the vasoconstrictor epinephrine increases intracranial blood flow while decreasing flow in the extracranial structures of skin, muscle, and tongue (Schleien et al., 1986). The caudal-rostral gradient occurs within intracranial vessels. The relatively low-flow state of CPR seems to increase the distribution of flow to caudal areas of the brain. Ischemia preceding CPR significantly increases the distribution of flow to these areas (Michael et al., 1984; Shaffner et al., 1998, 1999). This pattern of caudal redistribution of flow also occurs in other models of global ischemia and provides preferential perfusion of the brain stem (Jackson et al., 1981). 
Although brain-stem resuscitation is necessary for survival, this propensity for sparing of caudal circulation after either prolonged ischemia or prolonged CPR raises the concern for producing a victim who survives with only brain-stem function.

Myocardial blood flow does not have the advantage of a large extrathoracic pressure gradient that augments cerebral flow. The thoracic pump generates equal pressure increases in all intrathoracic structures. This lack of a gradient can result in poor myocardial blood flow during external chest compressions. Several studies have shown much lower blood flow to the myocardium compared with the cerebrum during closed-chest CPR (Ditchey et al., 1982; Michael et al., 1984; Schleien et al., 1986). The type of CPR influences the production of myocardial blood flow. Methods that are more likely to cause direct cardiac compression, such as high-impulse CPR, result in increased myocardial blood flow (Ditchey et al., 1982; Maier et al., 1984). Myocardial blood flow may be present only during relaxation of chest compression, correlating with a diastolic pressure, or, with other methods, during compression, correlating with a systolic pressure (Cohen et al., 1982; Maier et al., 1984; Michael et al., 1984; Schleien et al., 1986). Regional flow within the heart also changes during CPR, with a shift in the ratio of subendocardial/subepicardial blood flow from the normal 1.5:1 to 0.8:1 (Schleien et al., 1986). This ratio reverts to normal with epinephrine administration.

Conventional Cardiopulmonary Resuscitation

Conventional CPR includes closed-chest compressions delivered manually with ventilations interposed after every fifth, fifteenth, or thirtieth compression (see Table 38-4 for basic life support procedures). This method of CPR can be delivered in any setting without additional equipment and with a minimum of training. No large randomized study exists to demonstrate the superiority of any alternative method of CPR over conventional CPR.

Rescuer fatigue is a major problem with manual CPR in the field. Individual variation among rescuers performing manual CPR is another problem both in the field and in the laboratory. Mechanical devices are available to deliver chest compressions to prevent fatigue and to standardize compression delivery. Mechanical devices are presently limited to adult CPR and are not recommended for children (AHA, 2006a). The overall low efficacy of conventional CPR has led to investigations of multiple CPR modalities. The methods usually reflect attempts to enhance the contribution of the thoracic pump or cardiac pump to blood flow during CPR (Table 38-5). For example, the use of both hands to encircle the chest of an infant while using the thumbs to apply sternal compression attempts to both raise intrathoracic pressure and compress the heart (Todres and Rogers, 1975; David, 1988). This two-thumb encircling technique of CPR generates higher blood pressures and is recommended over the two-finger technique for infants (Dorfsman et al., 2000).

Blood flow to other organs during CPR is usually reduced compared with flow to the brain and heart. The lack of valves in infrathoracic veins causes retrograde transmission of venous pressure and decreases the gradient for blood flow below the diaphragm in animals (Brown et al., 1987b). Regional blood flows for infrathoracic organs (e.g., small intestine, pancreas, liver, kidneys, and spleen) during CPR are usually less than 20% of the flow rates before cardiac arrest and often close to zero (Koehler et al., 1983; Voorhees et al., 1983; Michael et al., 1984; Sharff et al., 1984). The addition of abdominal compressions does not alter the infrathoracic organ blood flow (Koehler et al., 1983; Voorhees et al., 1983). Administration of epinephrine during closed-chest CPR almost eliminates flow to the subdiaphragmatic organs, with the exception of the adrenal glands (Ralston et al., 1984). Few data are available regarding blood flow to the lungs during CPR. Pulmonary blood flow occurs primarily at times of low intrathoracic pressure during closed-chest CPR (Cohen et al., 1982). High extrathoracic venous pressure builds up during compression and results in pulmonary filling during relaxation as intrathoracic pressure falls. Resuscitation methods that lower intrathoracic pressure may augment pulmonary vascular filling. Leaning on the chest during relaxation of compression and maintenance of increased ventilation pressures may prevent the fall in intrathoracic pressure between chest compressions and decrease pulmonary venous return and blood flow.

Alternative Methods of Cardiopulmonary Resuscitation

Simultaneous Compression-Ventilation Cardiopulmonary Resuscitation

Simultaneous compression-ventilation CPR (SCV-CPR) represents a technique designed to augment conventional CPR by increasing the contribution of the thoracic pump mechanism to blood flow. Delivering ventilation simultaneously with every compression (instead of interposed after every fifth compression) adds to intrathoracic pressure and potentially augments blood flow produced by conventional chest compressions. This method is thought to increase the perfusion gradient to the brain but to have little effect on the myocardial perfusion gradient. Animal models suggest that SCV-CPR increases carotid blood flow compared with conventional CPR and show an advantage of SCV-CPR in large canine models (Koehler et al., 1983; Luce et al., 1983). No advantage is seen over conventional CPR in infant pigs and small dogs, perhaps because in small animals the compliance of the chest allows more direct cardiac compression and higher intravascular pressure than with conventional CPR (Babbs et al., 1982a, 1982b; Sanders et al., 1982; Schleien et al., 1986; Dean et al., 1987, 1990; Berkowitz et al., 1989). Human studies comparing SCV-CPR with conventional CPR show minimal improvement or detrimental effect on the coronary perfusion pressure (Harris et al., 1967; Martin et al., 1986). Survival is worse in both animals and humans when SCV-CPR is compared with conventional CPR (Sanders et al., 1982; Krischer et al., 1989). No study has shown an increased survival with this CPR technique despite the potential for increased brain perfusion.

High-Impulse Cardiopulmonary Resuscitation

High-impulse CPR involves the application of force that is greater than usual during chest compression. This increase in force can be in the form of greater mass, greater velocity, or both. It is hypothesized that the larger impulses result in greater chest deflection, causing more contact with the heart (Kernstine et al., 1982). Direct cardiac compression is more likely with this form of closed-chest CPR. High-impulse CPR can generate myocardial blood flows as high as 60% to 75% of values before cardiac arrest (Maier et al., 1984). In humans, high-impulse CPR generates increased aortic pressures (Swenson et al., 1988). An outcome study in dogs compared high-impulse CPR with conventional closed-chest CPR and found no significant improvement in resuscitation, survival, or neurologic outcome (Kern et al., 1986). The benefit of greater force applies equally to an intraoperative cardiac arrest: it increases the likelihood of direct cardiac compression, which in turn results in higher myocardial and cerebral blood flow. The risk, however, is increased chest deformation and trauma.

Negative Intrathoracic Pressure Methods

Active compression-decompression CPR (ACD-CPR) requires a device that attaches to the chest and allows the rescuer to pull up on the sternum and decompress the thorax between compressions. The theoretical advantages of decompressing the chest between compressions include restoring chest wall shape and creating a negative intrathoracic pressure that pulls gas into the lungs and pulls blood into intrathoracic vessels. These characteristics make the subsequent compression more effective, because more intrathoracic pressure can be generated and more blood is available to be ejected. Preliminary studies in humans have shown that after advanced cardiac life support failed, ACD-CPR was more effective than standard CPR at improving hemodynamic variables (Cohen et al., 1992). After IHCA, more patients had ROSC, survival at 24 hours, and a better Glasgow coma score when they received ACD-CPR than when standard CPR was given (Cohen et al., 1993). A larger study of IHCA victims failed to show any difference in resuscitation or outcomes between patients receiving ACD-CPR and those receiving standard CPR (Stiell et al., 1996). Several large studies of patients who suffered an OHCA did not find a difference between ACD-CPR and standard CPR in effectiveness for improving ROSC incidence, hospital admission, hospital discharge, or short-term neurologic outcome (Lurie et al., 1994; Schwab et al., 1995; Mauer et al., 1996; Stiell et al., 1996; Nolan et al., 1998).

Complication rates were not different after ACD-CPR or standard CPR in most studies (Lurie et al., 1994; Schwab et al., 1995; Mauer et al., 1996). It is interesting that the same study that showed that ACD-CPR had more complications than standard CPR (hemoptysis and sternal dislodgment) was also one of the few large studies that found ACD-CPR more effective than standard CPR for OHCA (Plaisance et al., 1997). ACD-CPR has been combined with an airway device that increases negative intrathoracic pressure, the impedance threshold device (ITD; see the next paragraph), and has been mechanized to allow continuous application without the need to change rescuers and to ease use during transport (see the section on mechanical methods). ACD-CPR is considered an optional technique for adults, and there are no data on which to base a recommendation for children.

The ITD is a device on the ETT or face mask that impedes inflow of inspiratory gas during chest reexpansion between CPR compressions when rescuers are not actively ventilating the patient. Impedance of gas inflow promotes negative intrathoracic pressure development during chest reexpansion. As the chest recoils, this increase in negative intrathoracic pressure facilitates blood return to the thorax before the next chest compression (Lurie et al., 2002). The use of an ITD has been shown to improve coronary perfusion pressure and vital organ blood flow with both standard and ACD-CPR in adult and pediatric animal models (Langhelle et al., 2002; Voelckel et al., 2002). Improved levels of Etco2, diastolic pressure, and coronary perfusion pressure occurred in a prospective, randomized controlled trial in adults undergoing ACD-CPR with ITD compared with ACD-CPR without ITD. A decrease in time to achieve ROSC was also seen with ACD-CPR with ITD (Plaisance et al., 2000). A prospective controlled trial comparing standard CPR without an ITD and ACD-CPR with an ITD found significantly improved short-term survival (24 hours) in adult patients in the group that had ACD-CPR and an ITD (Wolcke et al., 2003). The use of an ITD with standard CPR in an OHCA trial of adults failed to show significant improvements in outcome for ITD vs. a sham ITD except in a subgroup with pulseless electrical activity (PEA) (Aufderheide et al., 2005). A separate study showed an improvement in short-term survival for standard CPR with an ITD vs. historical controls (Thayne et al., 2005). A no-ventilation study showed hypoxemia developing in animals that received either standard CPR with ITD or ACD-CPR with ITD but not in the animals that received standard CPR alone (Herff et al., 2007).
Standard CPR with and without ITD in a ventricular-fibrillation cardiac arrest (VFCA) model in pigs showed no effect on MPP and no effect on survival in one study and worse survival with ITD in another study (Menegazzi et al., 2007; Mader et al., 2008). Further studies are needed to determine the effectiveness of the use of an ITD for pediatric resuscitation.

The intrathoracic pressure regulator (ITPR) combines an ITD with a vacuum to maintain a negative intratracheal gradient (-10 cm H2O) during CPR while allowing positive pressure ventilation. An ITD relies on chest elastic properties, such as outward recoil of the thorax, and on proper CPR technique (no leaning during relaxation) to allow full recoil and generation of a negative intrathoracic pressure, whereas the ITPR overcomes these limitations. An ITPR in a porcine model of VFCA was able to maintain negative intrathoracic pressure with ACD-CPR; the result was improved hemodynamic measurements and survival with no effect on ventilation (Yannopoulos et al., 2005a, 2006). The ITPR has not been evaluated in asphyxial cardiac arrest or in a pediatric model.

Abdominal Methods