1. Toward standards of evidence for CAM research and practice

Wayne B. Jonas and George T. Lewith

Chapter contents

Introduction

Science and values

The audience and the evidence

How is evidence actually used in practice?

Knowledge domains and research methods

Strategies based on evidence-based medicine

Alternative strategies to the hierarchy

Quality criteria for clinical research

Applying research strategies in CAM

Special CAM research issues

Sample and population selection

Diagnostic classification

Adequate treatment

The interaction of placebo and non-placebo factors

Outcomes selection

Hypothesis testing

Assumptions about randomization

Blinding and unconscious expectancy

Learning and therapeutic growth

The nature of equipoise in CAM

Risk stratification and ‘adequate’ evidence

Improving standards of evidence-based medicine for CAM

Conclusion: CAM and evolution of the scientific method

Key points

• Health care is complex and requires several types of evidence

• Evidence-based medicine (EBM) in complementary and alternative medicine (CAM) is necessarily pluralistic

• The translation of evidence (EBM) into clinical practice (evidence-based practice: EBP) is not fundamentally hierarchical – it operates through a mixed-method or circular strategy

• EBP needs to balance specificity and utility for both the individual and groups of patients – the evidence house strategy

• Standards and quality issues exist in medicine and can be applied to CAM; we don’t need to reinvent them

• The integrity of individual CAM practice, therapies and principles needs to be respected within a rigorous EBM framework – this is called model validity

• Improving the application of EBM in CAM practice is desirable, possible and practical

Introduction

Complementary and alternative medicine (CAM) forms a significant subset of the world’s health care practices that are not integral to conventional western care but are used by a substantial minority (and in some countries a substantial majority) of patients when they make their health care decisions. The World Health Organization (WHO) has estimated that 80% of the developing world’s population use these medical practices (World Health Organization 2003). In industrialized countries, numerous surveys have documented CAM use by the public ranging from 10% to over 60%, depending on the population and practices included in the samples (Fisher and Ward, 1994, Eisenberg et al., 1998, Ernst, 2000, Barnes et al., 2002, Graham et al., 2005 and Tindle et al., 2005).

CAM encompasses a number of diverse health care and medical practices, ranging from dietary and behavioural interventions to high-dose vitamin supplements and herbs, and includes ancient systems of medicine such as Ayurvedic medicine and traditional Chinese medicine (TCM). CAM has been defined slightly differently in the USA (http://nccam.nih.gov/health/camcancer/#what) than in the UK (House of Lords Select Committee on Science and Technology 2000). In a recent history of alternative medicine, historian Roberta Bivins documents that, while competing forms of health care have been part of every culture and time, the concept of a collective group of practices that are complementary or alternative to a dominant ‘biomedicine’ has emerged only in the last 100 years (Bivins 2007). Furthermore, patients may view CAM in a variety of ways. For example, they may see manipulative therapies as conventionally based, with biomechanical mechanisms, and whole systems such as Ayurveda and homeopathy as alternative medical systems, while potentially perceiving acupuncture for pain as complementary to conventional medical interventions (Bishop et al. 2008). Consequently, the definition of CAM is fundamentally political and ultimately depends very much on ‘who you are asking’ (Clouser et al. 1996). By almost any definition, however, the use of CAM has been steadily growing for decades, so reliable information on its safety and effectiveness is needed by both patients and health care providers.

What then is evidence and how shall it be applied to CAM? The application of science to medicine is a relatively recent phenomenon manifesting itself mainly over the last 100 years. Current methods such as blinded human experiments were first used by homeopaths in 1835 and this methodology has become increasingly dominant within conventional medical (clinical) research over the last 60 years (Stolberg, 1996, Jadad, 1998 and Kaptchuk, 1998a). In this chapter and book we hold the assumption that research into alternative medical practices should use the same meticulous methods as those developed for conventional medicine, but researchers will necessarily need to apply them pragmatically so they are relevant to the various stakeholders within CAM clinical practice. We also assume that full knowledge and evidence about CAM practices require a plurality of methods, each designed to provide a part of the complex picture of what CAM is and its value and impact within health care. In this chapter, we aim to describe that plurality, review the research standards that apply to all evidence-based medicine (EBM) and explore the special issues required for application of those methods to the diverse practices encompassed in CAM. In subsequent chapters each author will describe in more detail the application of research to specific CAM practices.

Science and values

One cannot reasonably discuss the appropriate application of science to health care without addressing the issue of human values and the goals of medicine (Cassell 1991). Bradford Hill (1971), who developed the modern randomized controlled trial (RCT), often emphasized the importance of human ethical issues in its application. Scientific research is not simply a matter of applying a pre-set group of methods for all kinds of research problems. It involves selecting research designs that are ethical and appropriate to the questions, goals and circumstances addressed by the researchers while being relevant to the research commissioners and the research audience (Jonas 2002a).

Two crucial issues arise in developing appropriate (and ethically grounded) evidence: the rigour and the relevance of the information. Rigour refers to the minimization of bias that threatens the validity of conclusions and interpretation of data. It is an attempt to make sure that we are not fooled by our observations and that we approach the truth. Relevance addresses the use to which the information will be put by a specific audience and involves the values that audience places on different types of information. Failure to consider different values when designing and conducting research risks ‘methodological tyranny’ in which we become slaves to rigid, preordained and potentially misleading assumptions (Schaffner 2002).

It would, for instance, be very important for both clinicians and patients to have a substantial amount of rigorous evidence when thinking of prescribing a potentially life-saving but new and possibly lethal chemotherapeutic agent for malignant melanoma. There might be a different set of arguments and evidence for the prescription of a safe new agent for rhinitis; we do need to consider context alongside risk and benefit and this frequently occurs within medicine practised in the community for benign, chronic or transient conditions.

Research strategies must start with specific questions, goals and purposes before we decide which information to collect and how to collect it. Questions of importance for determining relevance relate mostly to whom and for what purpose evidence will be used (the audience). For example: How do the values of the patient, practitioner, scientist and provider infuse the research and how will the data be used? What is the context of the research, and what do we already know about the field? Has the practice been in use for a long time, and hence is there implicit knowledge available, or are we dealing with a completely new intervention? It is mostly at this initial level that implicit paradigmatic incompatibilities arise between conventional and complementary medicine but we believe that evidence-based practice (EBP) must start with the values of the audience it purports to serve (Jonas 2002b).

The audience and the evidence

One of the striking features of the current interest in CAM is that it is a publicly driven trend (Fonnebo et al. 2007). The audience for CAM use is primarily the public. Surveys of unconventional medicine use in the USA have shown that CAM use increased by 45% between 1990 and 1997. Visits to CAM practitioners in the USA exceed 600 million per year, more than to all primary care physicians. The amount spent on these practices, out of pocket, is $34 billion, on a par with conventional medicine out-of-pocket costs (Barnes 2007). Two-thirds of US and UK medical schools teach about CAM practices (Rampes et al., 1997 and Wetzel et al., 1998), many hospitals are developing complementary and integrated medicine familiarization programmes, and a growing number of health management organizations include alternative practitioners (Pelletier et al. 1997).

The mainstream is also putting research money into these practices. For example, the budget of the Office of Alternative Medicine at the US National Institutes of Health rose from $5 million to $89 million in 7 years, and it then became the National Center for Complementary and Alternative Medicine (NCCAM) (Marwick 1998). Despite resistance to NCCAM’s formation, its budget is now nearly $125 million annually, a far larger investment than in any of the other western industrialized nations. The public has been at the forefront of the CAM movement and driven this change in perception (Jonas 2002). The audience for CAM and CAM research is therefore both diverse and critical. Its various audiences will often want exactly the same information but will have different emphases in how they understand and interpret the data available (Jonas 2002). Social science studies of applied knowledge show that interpretation and application of evidence can be quite complex and vary by prior experience and training, individual and cultural beliefs and perceived and real needs (Friedson, 1998 and Kaptchuk, 1998a). Some of these factors are briefly summarized below.

Patients

Patients who are ill (and their family members) often want to hear details about other individuals with similar illnesses who have used a treatment and recovered. If the treatment appears to be safe and there is little risk of harm, evidence from these stories frequently appears to be sufficient for them to decide to use the treatment. Patients may interpret this as a sign that the treatment is effective, and to them this evidence is important and relevant for both CAM and conventional medicine. The skilled and informed clinician will need to place this type of evidence into an individual and patient-centred context with respect for all the evidence available from both qualitative and quantitative investigations.

Health practitioners

Health care practitioners (conventional doctors, CAM practitioners, nurses, physical therapists) also want to know what the likelihood or probability is that a patient will recover or be harmed based on a series of similar patients who have received that treatment in clinical practice. Such information may come from case series or clinical outcomes studies or RCTs (Guthlin et al., 2004, Witt et al., 2005a and Witt et al., 2005b). They also want to know about the safety, complications, complexity and cost of using the therapy, and this information comes from the collection of careful safety data and health economic analyses.

Clinical investigators

Clinical scientists will value the same type of evidence as clinicians but will often look at the data differently because of their research training and skills. They often want to know how much improvement occurred in a group who received the treatment compared to another group who did not receive it or a group that received a placebo. If 80% of patients who received a treatment got better, do 60% of similar patients get better just from coming to the doctor? This type of comparative evidence can only come from RCTs, which is the major area of interest for most clinical researchers. These types of studies can include a placebo control but sometimes are pragmatic studies, which compare two treatments or have a non-treatment arm or other types of controls.
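The comparison in the example above can be written out as simple arithmetic. The following is a minimal sketch only: the function name `compare_improvement` is hypothetical, and the 80% and 60% figures are the illustrative ones used in the text, not data from any trial.

```python
def compare_improvement(treated_rate: float, comparison_rate: float) -> dict:
    """Contrast the proportion improving with treatment against a comparison group.

    Both arguments are proportions between 0 and 1.
    """
    for rate in (treated_rate, comparison_rate):
        if not 0.0 <= rate <= 1.0:
            raise ValueError("rates must be proportions between 0 and 1")
    return {
        # Extra improvement seen in the treated group, in absolute terms
        "absolute_difference": treated_rate - comparison_rate,
        # How many times more likely improvement was with treatment
        "ratio": treated_rate / comparison_rate if comparison_rate > 0 else float("inf"),
    }


# Figures from the example in the text: 80% improve with treatment,
# 60% of similar patients improve "just from coming to the doctor".
result = compare_improvement(0.80, 0.60)
print(f"Absolute difference: {result['absolute_difference']:.0%}")
print(f"Ratio of improvement rates: {result['ratio']:.2f}")
```

On these figures, only 20 percentage points of the 80% improvement can be attributed to the treatment itself, which is the distinction a controlled comparison is designed to reveal.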

Laboratory scientists

Laboratory scientists focus on discovering mechanisms of action. Basic science facilitates understanding of underlying mechanisms and allows for greater precision testing in more highly controlled (and artificial) environments.

Purchasers of health care

Those in charge of determining public policy often need aggregate ‘proof’ that a practice is safe, effective and cost-effective. This usually involves a health economic perspective within a complex process of treatment evaluation. Systematic reviews, meta-analyses and health services research including randomized trials and outcome studies provide this type of evidence. Health services research also provides data that evaluates the cost, feasibility, utility and safety of delivering treatments within existing delivery systems.

While day-to-day decision-making is more complex than the brief summaries above, the point is that different audiences have legitimate evidence needs that cannot be accommodated by a ‘one size fits all’ strategy. The CAM researcher must keep in mind the need for quality research in a variety of domains and attend carefully to the audience and use of the results of their research once collected and interpreted (Callahan, 2002 and Jonas and Callahan, 2002). As stated by Ian Coulter, there is a difference between the academic creation of information in EBM and the clinical application of knowledge in EBP, and investigators should keep EBP and the patient perspective in mind when designing and interpreting research (Coulter & Khorsan 2008). In addition, more social science research is needed on models, applications and dynamics of EBM to help guide that interpretation (Mykhalovskiy & Weir 2004).

How is evidence actually used in practice?

The two main audiences that make day-to-day decisions in health care are practitioners and patients. The differing information preferences of these two audiences are exhibited in their pragmatic decision-making. Gabbay & le May (2004) demonstrate that, while much health policy is based on RCTs, and indeed these are vital for family physicians (general practitioners: GPs), GPs may not employ a linear model of decision-making on an individual clinical basis. The GPs they worked with commented that they would look through guidelines at their leisure, either in preparation for a practice meeting or to ensure that their own practice was generally up to standard. Most practitioners used their ‘networks’ to acquire information that they thought would be the best evidence base from sources that they trusted, such as popular free medical magazines, word of mouth through other doctors they trusted and pharmaceutical representatives; in effect they operated in a circular decision-making model. Thus, clinicians relied on what Gabbay & le May call ‘mindlines’ – collectively reinforced, internalized tacit guidelines that include RCTs but are not solely dependent on them – which were informed by brief reading, but mainly guided by their interactions with each other and with opinion leaders, patients and through other sources of knowledge built on their early training and their own colleagues’ experience. The practical application of clinical decision-making in conventional primary care demonstrates that a hierarchical model of EBM is interpreted cautiously by clinicians in managed health care environments.

Patients who use CAM report that one of the reasons for CAM use is that the criteria for defining healing and illness and for defining valid knowledge about health care are dominated by licensed health care professionals and are not patient-centred (O’Connor 2002). Many accept ‘human experience as a valid way of knowing’ and regard ‘the body as a source of reliable knowledge’, rejecting the assumption that ‘personal experiences must be secondary to professional judgment’. This ‘matter of fact’ lay empiricism often stands in sharp contrast to our scientific insistence that in the absence of technical expertise and controlled conditions our untrained observations are untrustworthy and potentially misleading (Sirois & Purc-Stephenson 2008). Most patients accept basic biological knowledge and theory but find biology insufficient to explain their own complex health and illness experiences and so do not restrict their understanding to strictly biological concepts. Many assert their recognition of the cultural authority of science and seek to recruit it to the cause of complementary medicine – both as a means to its validation and legitimization and as a source of reliable information to facilitate public decision-making about CAM.

Knowledge domains and research methods

Strategies based on evidence-based medicine

What are the elements of a research strategy that matches this pluralistic reality? How can we build an evidence base that has both rigour and relevance? In the diverse areas that CAM (or indeed conventional primary care) encompasses, at least six major knowledge domains are relevant. Within these domains are variations that allow for precise exploration of differing aspects of both CAM and conventional health care practice.

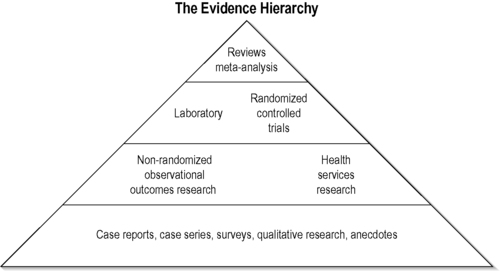

The hierarchy strategy

In conventional medicine, knowledge domains often follow a hierarchical strategy with sophisticated evidence-based synthesis at its acme (Sackett et al. 1991) (www.cochrane.org). The hierarchical strategy can be graphically depicted by a pyramid (Figure 1.1). At the base of this hierarchy are case series and observational studies. These are followed by cohort studies in which groups of patients or treatments are observed over time in a natural way, often without any inclusion or exclusion criteria. Randomized studies come next. Here, the decision about which treatment an individual receives is generated by a random allocation algorithm. This usually involves the comparison of two or more treatments with or without a sham or placebo treatment. If several of those studies are then pooled they produce a meta-analysis, which provides a summary estimate of the effect size of an intervention against control.

FIGURE 1.1 Adapted with permission from Jonas (2001).
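The pooling step at the top of the pyramid can be sketched in a few lines. The example below uses inverse-variance (fixed-effect) weighting, which is one common way to combine trial results; the chapter does not prescribe a particular pooling model, so this choice is an assumption, as are the function name `pool_fixed_effect` and the three made-up trial results.

```python
import math


def pool_fixed_effect(estimates, standard_errors):
    """Return the inverse-variance weighted pooled estimate and its standard error."""
    if not estimates or len(estimates) != len(standard_errors):
        raise ValueError("need one standard error per estimate")
    # More precise trials (smaller standard error) receive more weight
    weights = [1.0 / se ** 2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical effect sizes (intervention minus control) from three invented trials
effects = [0.30, 0.10, 0.20]
std_errors = [0.10, 0.20, 0.10]

pooled, pooled_se = pool_fixed_effect(effects, std_errors)
# Approximate 95% confidence interval using the normal approximation
low, high = pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se
print(f"Pooled effect: {pooled:.3f} (95% CI {low:.3f} to {high:.3f})")
```

A fixed-effect pool assumes every trial estimates the same underlying effect. Where effects are expected to vary between settings, a random-effects model would be the more usual choice.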

Internal validity and randomization

The hierarchical model has as its basis the goal of establishing internal validity, defined as the likelihood that observed effects in the trial are due to the independent variable. The greater the internal validity, the more certain we are that the result is likely to be valid as a consequence of the methodological rigour of a study. The threat to the internal validity of a research study is bias. Bias is introduced if factors that are not associated with the intervention produce shifts or changes in the results (outcomes) that appear to be due to the intervention but are in fact due to other spurious factors (confounders). For instance, we may compare two naturally occurring groups with the same illness, one that has chosen a CAM intervention (x) and the other that has chosen a conventional medical intervention (c). If x appears to produce better results than c, the uncritical observer might suggest that CAM is better than conventional care. However, it may be that those choosing treatment x had less severe disease or fewer additional risk factors because they were better educated, didn’t smoke and drank less alcohol.

Randomization and stratification allow us to create groups that are equal in all the known and, presumably, most of the unknown confounding factors so that we can safely attribute changes we see in outcomes to the interventions rather than to any confounding variable. Randomization creates homogeneous and comparable groups by allocating patients into groups without bias.
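One way to combine randomization with stratification is permuted-block allocation within each stratum. The sketch below is an illustration under stated assumptions rather than a recommended trial procedure: the function `stratified_block_randomize`, the block size of 4 and the 16 invented participants are all hypothetical, and smoking status is used as the stratifying factor only because it appears as a possible confounder in the example above.

```python
import random
from collections import defaultdict


def stratified_block_randomize(participants, stratum_of,
                               arms=("treatment", "control"),
                               block_size=4, seed=0):
    """Allocate participants to arms using permuted blocks within each stratum."""
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)  # seeded so the allocation list is reproducible

    # Group participants by the value of the stratifying factor
    by_stratum = defaultdict(list)
    for person in participants:
        by_stratum[stratum_of(person)].append(person)

    allocation = {}
    for members in by_stratum.values():
        block = []
        for person in members:
            if not block:
                # Start a new block containing each arm equally often, in random order
                block = list(arms) * (block_size // len(arms))
                rng.shuffle(block)
            allocation[person] = block.pop()
    return allocation


# Sixteen invented participants: (identifier, smoking status)
participants = [(i, "smoker" if i % 2 == 0 else "non-smoker") for i in range(16)]
allocation = stratified_block_randomize(participants, stratum_of=lambda p: p[1])

for stratum in ("smoker", "non-smoker"):
    for arm in ("treatment", "control"):
        count = sum(1 for p, a in allocation.items() if p[1] == stratum and a == arm)
        print(stratum, arm, count)
```

Because each complete block contains every arm equally often, the known factor is balanced across arms by construction, while the random order within blocks is what is relied on to balance the unknown factors on average.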

External validity

A complexity arises with the hierarchical strategy when there is a trade-off between internal and external validity (Cook & Campbell 1979). External validity is the likelihood that the observed effects will occur consistently in a range of appropriate clinical environments. As internal validity increases we develop security about attributing an observed difference to a known intervention. However, we often lose external validity because the population under study becomes highly selected. External validity represents the usefulness of the results and their generalizability to the wider population of people with the illness. If we want to strengthen internal validity (a fastidious trial) we define the study population very specifically and restrict the study to specific types of patients by adjusting the inclusion and exclusion criteria.

Pragmatic studies represent the opposite of fastidious studies. Here volunteers are entered, often with few exclusions. The study design may ask: ‘If we add treatment Q to the current best guidelines available, will this improve our outcomes and will it be cost-effective?’ Good examples of these pragmatic trials come from acupuncture and include those by Vickers et al. (2004) and Thomas et al. (2006) as well as their associated health economic analyses (Wonderling et al. 2004). The disadvantage of pragmatic studies is that we have no placebo control group and the heterogeneity of study groups may create wide variability in outcome. These studies then, even when carried out very competently, do not answer the question as to whether the treatment is better than placebo and may provide poor discrimination between treatments. This, however, is the basis for comparative effectiveness research.

The main danger with a rigid hierarchical strategy is its emphasis on internal validity at the expense of external validity. We may produce methodologically sound results that are of little general value because they do not reflect the real-world situation (Travers et al. 2007).

Alternative strategies to the hierarchy

Given the complexities of clinical decision-making and the various types of evidence just described, it is now becoming clear that EBM approaches that focus exclusively on the hierarchy strategy are inadequate in the context of clinical practice (Sackett and Rennie, 1992 and Gabbay and le May, 2004). They are too simplistic, and there is rarely enough evidence to place the many treatments for most chronic illnesses at the top of any evidence hierarchy. By contrast, much more powerful and exacting evidence is, and has to be, available when the risk to patients is high, for instance in the treatment of cancer. It is not surprising then that most physicians don’t completely rely on these approaches for all their clinical decision-making (Gabbay & le May 2004), so the need for alternative strategies is self-evident.

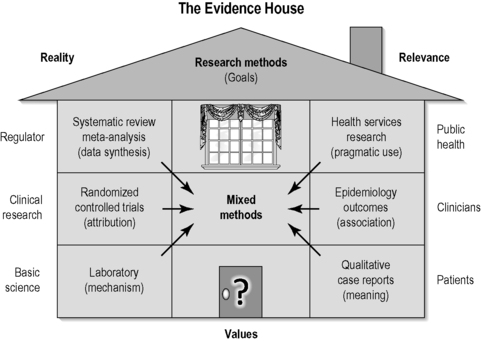

The evidence house

There are several alternative strategies to the evidence hierarchy that attempt to counter the risk of poor relevance it produces. One of these is called the ‘evidence house’ and seeks to lateralize the main knowledge domains in science in order to highlight their purposes. It does this by aligning methodologies that isolate specific effects (those with high internal validity potential) and those that seek to explore utility of practices in real clinical settings (those with high external validity potential) (Figure 1.2). For example, mechanisms, attribution and research synthesis each seek to isolate specific effects whereas utility is investigated through methods that assess meaning, association and cost (both financial cost and safety). Each of these knowledge domains has its own goals, methodology and quality criteria. The corresponding methods for each of the six domains are: for isolating effects, laboratory techniques, RCTs, meta-analysis; and for utility testing, qualitative research methods, observational methods, health services (including economic) research. Of course, variations in these methods can often be mixed in single studies, producing ‘mixed-methods’ research (such as qualitative studies nested inside RCTs) that seeks to address dual goals. This strategy can be conceptualized in what has been called an ‘evidence house’ (Jonas 2001; Jonas, 2005). In this strategy the knowledge domains are placed in relationship to each other and the primary audience they serve. The evidence house helps balance the misalignment produced by the evidence hierarchy, by linking the method to the goal to the primary audience. The six major knowledge domains of the evidence house are briefly described below.

FIGURE 1.2 Adapted with permission from Jonas (2001).

Mechanisms and laboratory methods

This asks the questions: ‘what happens and why?’ Laboratory and basic science approaches examine the mechanisms that underlie interventions thought to be effective. Basic research can provide us with explanations for biological processes that are required to refine and advance our knowledge in any therapeutic field. For the development of new substances such research into potential mechanisms is at the beginning of the research chain, while for complex interventions that are already widely available and in clinical practice, such as homeopathy, acupuncture, TCM or physiotherapy interventions, this type of research is normally preceded by safety and clinical effectiveness studies (Fonnebo et al. 2007).

Attribution and randomized controlled trials

‘Attribution’ refers to the capacity to attribute effects to a certain cause. In clinical research, attributional knowledge is termed ‘efficacy’ and is best obtained through research methods such as the RCT. Efficacy is usually thought of as the effect size that differentiates between the overall effect of the specific intervention and the effect of placebo in an exactly comparable group of patients. In the sort of chronic illness frequently treated by CAM it is usual to find efficacy-based effect sizes of the order of 5–15% for both CAM and conventional medicine. These are often only about a quarter of the overall treatment effect, suggesting that the rest (75%) of the effect is generated by non-specific factors, such as the meaning and context (MAC) and the generic healing potential: ‘having treatment is what works’ (White et al. 2004).
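The proportions quoted above can be made explicit with a short piece of arithmetic. The symbols below are notation introduced here for illustration only, and the calculation simply takes the figures in the text at face value, reading the specific (efficacy) effect as roughly one quarter of the overall treatment effect:

```latex
\begin{align*}
E_{\text{specific}} &\approx \tfrac{1}{4}\,E_{\text{overall}} \\
E_{\text{non-specific}} &= E_{\text{overall}} - E_{\text{specific}}
  \approx \tfrac{3}{4}\,E_{\text{overall}} \quad (75\%) \\
E_{\text{specific}} \in [5\%,\,15\%] &\;\Longrightarrow\;
  E_{\text{overall}} \approx 4\,E_{\text{specific}} \in [20\%,\,60\%]
\end{align*}
```

On this reading, an efficacy effect of 5–15% would correspond to an overall treatment effect of roughly 20–60%. That range is an implication of the stated ratio, not a figure reported in the chapter.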

Confidence and research summaries

Evidence synthesis is the basis of the knowledge domain that seeks to reduce uncertainty. It is important to be explicit about the processes involved in evidence synthesis. Meta-analysis, systematic reviews and expert review and evaluation are methods for judging the accuracy and precision of research. Methods of expert review and summary of research have evolved in the last decade by using protocol-driven approaches and statistical techniques. These are used along with subjective reviews to improve confidence that the reported effects of clinical research are accurate and robust (Haynes et al. 2006).

These three areas and their corresponding methods are listed on the left side of Figure 1.2. These information domains often build on themselves. On the right-hand side of the evidence house are three knowledge domains that focus on obtaining information on utility or relevance. Those are described now.

Meaning and qualitative methods

Given the contribution of MAC to clinical outcomes, research that explores these areas is important. Meaning provides information about whether our research incorporates patient-centred outcomes and patient preferences. This knowledge reduces the risk of substituting irrelevant outcomes when a therapy is tested. Context research examines the effect of communication, cultural and physical processes and environments of practice delivery. Qualitative methods are important here and include detailed case studies and interviews that systematically describe medical approaches and investigate patient preferences and the meaning they find in their illness and in treatments. Qualitative research has rigorous application standards and is not the same as a story or anecdote (Miller and Crabtree, 1994, Crabtree and Miller, 1999 and Malterud, 2001). Sometimes it is necessary to start the cycle of research with qualitative methods if a field is comparatively unknown, to enable our understanding of some basic parameters. Who are the agents in a therapeutic setting? Why are they doing what they do? What do patients experience? Why do they use CAM and pay money? Some examples of qualitative research in CAM show that patients’ and researchers’ perceptions are often radically different (Warkentin 2000). Some proponents of qualitative methods argue that they are radically different from the quantitative positivist methods employed by mainstream medical research. While in theory this might be true, research has shown that the two approaches can complement each other well.

Indirect association and observational methods

A main goal of scientific research is linking causes to effects. Experimental research methods such as laboratory experiments or randomized controlled studies are designed to produce this knowledge. However, in many cases, it is impractical, impossible or unethical to employ such methods so we have to resort to substitutes. For example, adverse effects are often not investigated directly, although good clinical practice guidelines may alter this. Adverse reactions are normally only discovered through long-term observations or serendipity. Post hoc reasoning is then used to establish whether an adverse reaction was due to a medical intervention by observing event rates in those receiving the intervention. Although this post hoc reasoning stems from observational research and is not direct experimental proof, it is often sufficient evidence.

Often, retrospective case series or institutional audits will be able to give us initial suggestions that can be used to justify clinical experiments. However, we need to consider that in some cases such experiments will not be feasible. This may occur whenever there is too much a priori knowledge or bias among patients and providers towards an intervention. It may also occur where patients are enthusiastic about a treatment and choose it for themselves. Sometimes it is unethical to gather experimental evidence when an intervention is harmful and without the hope of personal benefit to the patient (http://bioethics.od.nih.gov/internationalresthics.html). The majority of the initial evidence base that relates to the harm caused by smoking is not based on clinical experiments but on large epidemiological outcomes studies and animal experiments. Similarly, someone cannot be randomized to be a convict, an outdoor athlete or religious. It is also unnecessary to convince ourselves of the obvious: parachutes prevent death from falling out of airplanes (Smith & Pell 2003) and penicillin treats bacterial infection – neither needs an RCT.

Observational research is excellent at obtaining local information about the effects of interventions in individual practices. Sometimes called quality assurance or clinical audit, such data can help improve care at the point of delivery (Rees 2002).

Generalizability and health services research

Efficacy established in experimental research may not always translate into clinical practice. If we want to see whether a set of interventions works in clinical practice, we have to engage in a more pragmatic approach, called ‘evaluation research’ or ‘health services research’ (Coulter & Khorsan 2008). Most of these evaluations are quite complex and another modern term for this type of research is ‘whole-systems research’ (Verhoef et al. 2006) or, as the Medical Research Council suggests, ‘evaluating complex interventions’ (Campbell et al. 2007). All these involve evaluation of a practice in action and produce knowledge about effects in the pragmatic practice environment and emphasize external validity (Jonas 2005). These methods evaluate factors like access, feasibility, costs, practitioner competence, patient compliance and their interaction with proven or unproven treatments. They also study an intervention in the context of delivery, together with other elements of care and long-term application and safety (Figure 1.2). These approaches may be used to evaluate quite specific interventions both within and outside CAM. Alternatively these approaches can be used in a substantially different strategic order to evaluate a whole-systems-based approach (Verhoef et al. 2004). In these situations we may need to understand the overall effect of the whole system. To do that one would start with direct observation of practices and a general uncontrolled outcomes study evaluating the delivery of the intervention and its qualitative impact on the targeted population (Coulter & Khorsan 2008).

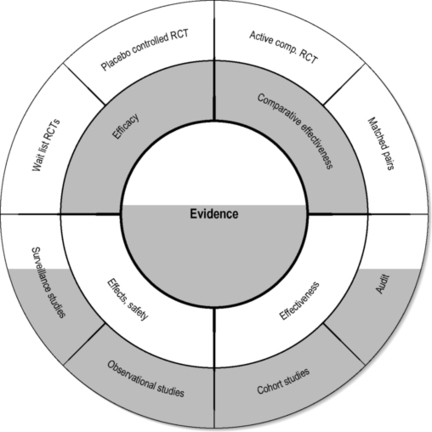

A circular model

The circular model explores the relationship of the clinical methods used in the middle two domains of the evidence house. It assumes that there is no such thing as ‘an entirely true effect size’ but that the effect sizes vary based on patient recruitment, specific therapists (Kim et al. 2006) and the environment (context) in which that therapy is provided (Hyland et al. 2007). This suggests that we may have difficulty in completely controlling for bias and confounding when we have no real understanding of the underlying mechanisms of the treatments being delivered. In these circumstances further development of a circular model may allow us to arrive at an approximate estimate of reality as it relates to complex pictures of chronic disease within the community (Walach et al. 2006) (Figure 1.3).

FIGURE 1.3 Adapted with permission from Walach et al. (2006).

In a circular strategy the principle is that information from all sources is used to establish consensus around the most appropriate therapeutic approaches in a particular therapeutic environment. This allows development of best practices even when there is little RCT evidence. We have to bear in mind that clinicians are obliged to treat people even when they present with conditions for which the treatment is not supported by a substantial body of research (Flower & Lewith 2007). The circular strategy also actively involves patients in the decision-making process, which could be important since there is evidence that empowering patient decisions has an impact on outcome (Kalauokalani et al., 2001, Bausell et al., 2005 and Linde et al., 2007a). Acupuncture, as practised within a western European medical environment, is a prime example of this, as the debate about the evidence from both the German Acupuncture Trials (GERAC) and Acupuncture Research Trial (ART) studies illustrates (Linde et al., 2005, Melchart et al., 2005, Witt et al., 2005a, Witt et al., 2005c, Witt et al., 2006, Brinkhaus et al., 2006 and Scharf et al., 2006).

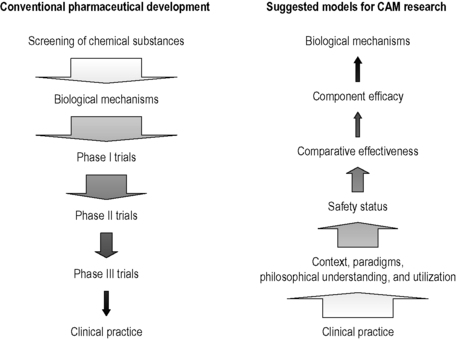

The reverse-phases model

By and large most complementary treatments are widely available, have often been in use for a long time and in some countries even have a special legal status. Consequently we may wish (for reasons of public health and pragmatism) to evaluate the safety of the intervention and the quality of the practitioners providing that intervention, before conducting research on theoretical or specific biochemical mechanisms that may be triggered by a particular product or practice. These strategic differences between the research approaches that may need to be applied to conventional and complementary medicine are summarized by Fonnebo et al. (2007) (Figure 1.4).

FIGURE 1.4 Adapted from Fonnebo et al. (2007).

For example, a large pharmaceutical company will develop a new pharmaceutical through a phased approach that will involve both in vivo and in vitro laboratory experiments before preliminary human evaluation of a new chemical agent. This contrasts dramatically with the evaluation of a complementary medical intervention such as homeopathy or acupuncture, where opinions and beliefs about its veracity, effect and safety are widely debated and diverse. Inevitably this influences the public’s expectation and opinion about a particular intervention, which may have an impact on any systematic evaluation of the therapy and its equipoise within a clinical trial.

Additional strategies

New, more sophisticated and pluralistic approaches to EBM incorporating the full spectrum of the information needed for making clinical decisions are being developed for all medical interventions; examples include the RAND ‘appropriateness’ approaches (Coulter et al. 1995), the Agency for Health Care Research and Quality’s (AHRQ) efforts on consumer or patient-centred evidence evaluations (Clancy and Cronin, 2005 and Nilsen et al., 2006), decision models and new ‘synthesis’ approaches (Haynes 2006) and ‘care pathway’ applications of EBM (Astin et al. 2006), as well as the comparative effectiveness research by the Institute of Medicine (Sox 2009). All of these approaches have their own strengths and weaknesses and call for systematic incorporation of concepts such as goals, problem formulation and values into the formulation of consistent and customized EBM decisions that account for the complexity of information needed in the clinical setting. Taken together, it might be worthwhile distinguishing some of the more academic debates around EBM from the practical applications by defining EBP (Coulter & Khorsan 2008). We believe that EBP requires, at a minimum, the establishment of quality standards for each of the information domains described in Figures 1.2 and 1.3 and may be thought of as ‘best research guidelines’.

Quality criteria for clinical research

The above discussion speaks to the importance of defining standards of quality for each of the evidence domains described. Uniform criteria should be used to define ‘quality’ within each evidence domain (Sackett et al. 1991). For example, experimental, observational and research summaries are three designs with published quality criteria (Begg et al., 1996, Moher, 1998, Egger and Davey, 2000 and Stroup et al., 2000). The evaluation of research quality in CAM uses the same approach as that in conventional medicine but there are additional items relevant to specific CAM areas (MacPherson et al., 2001 and Dean et al., 2006). The Consolidated Standards of Reporting Trials (CONSORT) group has produced a widely adopted set of quality reporting guidelines for RCTs (Begg et al., 1996 and Moher, 1998). These criteria focus on the importance of allocation concealment, randomization method, blinding, proper statistical methods, attention to drop-outs and several other factors. They include internal and some external validity criteria.

Table 1.1 lists some of the published quality criteria in each of these evidence domains. These quality criteria serve as the best published standards to date for research within each of the domains discussed in this book. Various checklists exist for helping investigators think about these quality criteria when reviewing or constructing research. One such checklist (the Likelihood of Validity Evaluation or LOVE) is described below, but many others are available, as listed in Table 1.1. A unique aspect of the LOVE is the inclusion of criteria for ‘model validity’ combined with internal and external validity.

| Type of research | Quality scoring system | Where to find it | Description |
|---|---|---|---|
| Systematic Reviews/Meta-Analysis | QUOROM guidelines | http://www.consort-statement.org/mod_product/uploads/QUOROM%20checklist%20and%20flow%20diagram%201999.pdf | Part of CONSORT, this checklist describes the preferred way to present the abstract, introduction, methods, results and discussion sections of a report of a meta-analysis |
| | SIGN 50 | http://www.sign.ac.uk/guidelines/fulltext/50/checklist1.html | Checklist used by chiropractic best practices guidelines committee for their reviews |
| | AMSTAR | Shea et al. BMC Medical Research Methodology 2007 7:10 doi:10.1186/1471-2288-7-10 | Measurement tool for the ‘assessment of multiple systematic reviews’ (AMSTAR), consisting of 11 items, with good face and content validity for measuring the methodological quality of systematic reviews |
| | OXMAN | Oxman AD et al. J Clin Epidemiol 1991; 44(1): 91–98 | Used to assess the scientific quality of research overviews |
| | QUADAS | Whiting P et al. BMC Medical Research Methodology 2003; 3:25 http://www.biomedcentral.com/1471-2288/3/25 | A tool for the quality assessment of studies of diagnostic accuracy included in systematic reviews, consisting of 14 items |
| Randomized Controlled Trials | CONSORT | www.consort-statement.org | An evidence-based, minimum set of recommendations for reporting RCTs that offers a standard way for authors to prepare reports of trial findings, facilitating their complete and transparent reporting, and aiding their critical appraisal and interpretation |
| | Cochrane | www.cochrane.org | Four key factors that are considered to influence the methodological quality of the trial: generation of allocation sequence, allocation concealment, blinding, and inclusion of all randomized participants. Cochrane advises against using scoring systems and checklists and instead uses the four factors above as the basis for comment in the analysis |
| | LOVE | Jonas WB and Linde K 2000. | Provides a convenient form for applying the four major categories of validity most applicable to complex systems as found in CAM (internal validity, external validity, model validity and quality reporting) |
| | SIGN 50 | http://www.sign.ac.uk/guidelines/fulltext/50/checklist2.html | Checklist used by chiropractic best practices guidelines committee for assessing the quality of RCTs |
| | Bronfort | Bronfort G et al. Efficacy of spinal manipulation and mobilization for low back pain and neck pain: a systematic review and best evidence synthesis. Spine J 2004; 4: 335–356 | Contains eight items with three choices (yes, partial and no) covering baseline characteristics, concealment of treatment allocation, blinding of patients, of provider/attention bias, of assessor/unbiased outcome assessment, dropouts reported and accounted for, missing data reported and accounted for, and intention to treat analysis done |
| | JADAD | Jadad AR, Moore RA, Carroll D et al. Assessing the quality of reports of randomized clinical trials: is blinding necessary? Controlled Clin Trials 1996;17:1–12 | Widely used to assess the quality of clinical trials and composed of the following questions: 1) Is the study randomized? 2) Is the study double blinded? 3) Is there a description of withdrawals? 4) Is the randomization adequately described? 5) Is the blindness adequately described? |
| Laboratory Research | Modified LOVE | Sparber AG, Crawford CC, Jonas WB. 2003 Laboratory research on bioenergy. In: Jonas WB, Crawford CC Healing, Intention and Energy Medicine. Churchill Livingstone London Pg. 142 | Modification of standard LOVE scale developed by Jonas WB et al to focus specifically on laboratory studies |
| | Quality Evaluation Score | Linde, K., Jonas, W.B., Melchart, D., Worku, F., Wagner, H., and Eitel, F. Critical review and meta-analysis of serial agitated dilutions in experimental toxicology. Human & Experimental Toxicology. 1994; 13: 481–492 | Quality evaluation criteria for assessing animal studies in homeopathy |
| Health Services Research: Utilization Studies | | Born PH. Center for Health Policy Research American Medical Association 1996 http://www.ama-assn.org/ama/upload/mm/363/dp963.pdf | There is no widely accepted measure of quality for health care utilization studies. Because of this, differences across plans in proxy measures, such as outcomes or patient satisfaction, are used as evidence of a managed care-quality link |
| Health Services Research: Quality of Life Studies | | Smeenk FW BMJ 1998; 316(7149): 1939–44 | Much like other scales assessing quality criteria but with the addition of addressing quality of life outcomes |
| | | Testa MA. Diabetes Spectrum 2000; 13: 29 | Brief checklist of critical questions particularly relevant to quality-of-life measurement and study design |
| Health Services Research: Cost-Effectiveness Studies | SIGN 50 | http://www.sign.ac.uk/guidelines/fulltext/50/notes6.html for economic evaluations only | Checklist used by chiropractic best practices guidelines committee for assessing the quality of economic studies |
| | | Ch 13: How to read reviews and economic analyses In: Sackett DL et al Clinical Epidemiology. Little, Brown and Co. Boston 1991 | Guides for assessing an economic analysis of health care |
| Epidemiology Outcomes: Cohort Studies | SIGN 50 | http://www.sign.ac.uk/guidelines/fulltext/50/checklist3.html | Checklist used by chiropractic best practices guidelines committee for assessing the quality of cohort studies |
| | STROBE | http://www.strobe-statement.org/Checklist.html | Provides guidance on how to report observational research well |
| | Newcastle-Ottawa Quality Assessment Scale (NOS) | http://www.ohri.ca/programs/clinical_epidemiology/nosgen.doc | Used to assess the quality of non-randomized studies with its design, content and ease of use directed to the task of incorporating the quality assessments in the interpretation of meta-analytic results. Has been recommended by Cochrane nonrandomized studies methods working group |
| Epidemiology Outcomes: Case Control Studies | SIGN 50 | http://www.sign.ac.uk/guidelines/fulltext/50/checklist4.html | Checklist used by chiropractic best practices guidelines committee for assessing the quality of case control studies |
| | STROBE | http://www.strobe-statement.org/Checklist.html | Provides guidance on how to report observational research well |
| | Newcastle-Ottawa Quality Assessment Scale (NOS) | http://www.ohri.ca/programs/clinical_epidemiology/nosgen.doc | Used to assess the quality of non-randomized studies with its design, content and ease of use directed to the task of incorporating the quality assessments in the interpretation of meta-analytic results. Has been recommended by Cochrane nonrandomized studies methods working group |
| Epidemiology Outcomes: Observational Studies | MOOSE | http://jama.ama-assn.org/cgi/content/full/283/15/2008 | Proposal of items for reporting meta-analysis of observational studies in epidemiology |
| | Sanderson S et al. | Sanderson S, Tatt ID, Higgins JPT. Tools for assessing quality and susceptibility to bias in observational studies in epidemiology: a systematic review and annotated bibliography. Intl J Epidemiol 2007; 36: 666–676. | Assessment of many tools identified for the quality of observational studies in epidemiology. The authors chose specific domains and criteria for evaluating each tool’s content on methods for selecting study participants, methods for measuring exposure and outcome variables, sources of bias, methods to control confounding, statistical methods and conflict of interest |
| | AHRQ | http://www.ahrq.gov/clinic/epcsums/strengthsum.htm | Considers five key domains: comparability of subjects, exposure or intervention, outcome measurement, statistical analysis, and funding or sponsorship. Systems that cover these domains represent acceptable approaches for assessing the quality of observational studies |
| Epidemiology Outcomes: N of 1 | NCI Best Case Series Criteria | http://www.cancer.gov/cam/bestcase_criteria.html | Process that evaluates data about patients who have been treated with alternative approaches and is designed to evaluate data from cancer patients who have received alternative treatments |
| | Guyatt Guidelines | Guyatt G, Sackett D et al. A clinician’s guide for conducting randomized trials in individual patients CMAJ 1988; 139: 497–503. | Guyatt lays out some guidelines for conducting and assessing N of 1 randomized controlled trials |
| Qualitative Case Reports | McMaster University Occupational Therapy evidence based practice research group | http://www-fhs.mcmaster.ca/rehab/ebp//pdf/qualguidelines.pdf | Guidelines for critical review of qualitative studies developed by the McMaster University Occupational Therapy evidence based practice research group |
| | Qualitative Evaluation Framework by National Centre for Social Research | http://www.pm.gov.uk/files/pdf/Quality_framework.pdf | A framework for assessing research evidence developed by the Government chief social researcher’s office |
| | RAND Research | | |
| | Malterud K. assessment scale | Malterud K Qualitative Research: Standards, challenges and guidelines Lancet 2001; 358: 485. | Guidelines for authors and reviewers of qualitative research |

Model validity

In addition to internal and external validity, previously described, CAM research also requires evaluation of ‘model validity’, which assesses the likelihood that the research has adequately addressed the unique theory and therapeutic context of the CAM system being evaluated (Jonas & Linde 2002). Many CAM systems arise outside developed countries or western theories so cross-tradition and cross-disciplinary research, involving experts in both conventional medicine and CAM, is often required (Jonas 1997). Some standardized treatments can produce marked variations that may be culture-specific; consequently results from one cultural environment may not always translate to another, particularly with respect to differences in informed consent (Bergmann et al., 1994, Kirsch, 1999 and Moerman, 2000). Model validity refers to the fact that the research must be a fair exemplar of the practice in use and the method chosen to test it must reflect the goals and context of the audience that will use the consequent information.

The likelihood of validity evaluation

Table 1.2 shows a checklist of the main quality criteria for evaluating internal, external and model validity. For convenience, these criteria have been drawn from a variety of quality rating scales (including Scottish Intercollegiate Guidelines Network (SIGN) 50, CONSORT, Cochrane and others) and are collated and summarized in an approach called the Likelihood of Validity Evaluation (LOVE) system, which has been applied to the evaluation of several areas of CAM research (Jonas & Linde 2002). Section 1 addresses internal validity and includes criteria such as randomization, baseline comparability, change of intervention, blinding, outcomes, statistical analysis and sample issues. Section 2 addresses external validity and includes criteria such as generalizability, reproducibility, clinical significance, therapeutic interference and outcomes. Section 3 addresses model validity and includes criteria especially important to whole-systems research. This includes representativeness, informed consent, methods matching, model congruity and context. Finally, Section 4 addresses reporting clarity that includes how well an article or report accurately describes the study.

| Dimension | Main criteria |
|---|---|
| Internal validity: How likely is it that the effects reported are due to the independent variable (the treatment)? | Randomization: Was subject assignment to treatment groups done randomly and in a concealed manner? |
| | Baseline comparability: Were gender, age and prognostic factors balanced? |
| | Change of intervention: Was there loss to follow-up, contamination, poor compliance? |
| | Blinding: Did the patients, practitioners, evaluators, analysts know who got the treatment? |
| | Outcomes: Were the objectivity, reliability and sensitivity of the outcome assessed? |
| | Analysis: Was the number treated large? Were P-values significant? Were multiple outcomes measured and analysed? |
| External validity: How likely is it that the observed effects would occur outside the study and in different settings? | Generalizability: Was there a range of patients as would be seen in practice or were there multiple or narrow inclusions and exclusions? Was the study done at several sites with similar results? |
| | Reproducibility: Was what was done clear? Were confidence intervals reported? Was the treatment transferable to other practitioners? |
| | Clinical significance: Was the effect size big enough to make a difference? Is the condition in need of this type of treatment? Were any preferences determined? Was adherence good? |
| | Therapeutic interference: Was there flexibility in varying the treatment? Was feedback on the outcomes available? Is the treatment feasible in most (or your) practice settings? |
| | Outcomes: Were the outcomes clinically relevant? Were the outcomes checked for importance with the patients? Were any important outcomes missing? |
| Model validity: How likely is it that the study accurately reflects the system under investigation? | Representativeness/accuracy: Were the therapists well trained and experienced? Was the treatment strategy adequate? Was the treatment clearly described? |
| | Informed consent: Was the informed consent comprehensive? Was it effective – did patients understand it? Did it generate expectations different from practice? |
| | Methodology matching: Were the goals of the study clear and limited? Did the investigators select the correct research method to achieve the goals? See the citation categories |
| | Model congruity: Were the patients classified, was the treatment determined and were the outcomes assessed according to the system of the practice being assessed? |
| | Context/meaning: Did the patients/practitioners believe in the therapy? How well was the intervention adapted to the culture, family, meaning of the patient? |
| Reporting quality: How likely is it that the report accurately reflects what was found in the study? | Comprehensive: Can you address the above criteria? |
| | Clarity: How clear and accurate is the information presented? Could you reproduce this study? |
| | Conclusions: Were the conclusions and reporting format (e.g. relative versus absolute improvement rates, strength of wording) appropriate to the data collected? |
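
For readers who wish to keep the checklist at hand while appraising a paper, the dimensions and criteria of Table 1.2 can be held in a small data structure. The Python sketch below is illustrative only. The names `LOVE_CRITERIA` and `tally` are our own assumptions, and the simple count of criteria judged as met is a hypothetical bookkeeping aid, not the published LOVE scoring procedure.

```python
# Dimension and criterion names are taken from Table 1.2.
# The structure and the tally below are illustrative assumptions only.
LOVE_CRITERIA = {
    "Internal validity": [
        "Randomization", "Baseline comparability", "Change of intervention",
        "Blinding", "Outcomes", "Analysis",
    ],
    "External validity": [
        "Generalizability", "Reproducibility", "Clinical significance",
        "Therapeutic interference", "Outcomes",
    ],
    "Model validity": [
        "Representativeness/accuracy", "Informed consent",
        "Methodology matching", "Model congruity", "Context/meaning",
    ],
    "Reporting quality": ["Comprehensive", "Clarity", "Conclusions"],
}


def tally(answers):
    """Count the criteria judged as met in each dimension.

    `answers` maps (dimension, criterion) pairs to True or False.
    Criteria that were not assessed are counted as not met.
    Returns {dimension: (number met, number of criteria)}.
    """
    summary = {}
    for dimension, criteria in LOVE_CRITERIA.items():
        met = sum(1 for c in criteria if answers.get((dimension, c), False))
        summary[dimension] = (met, len(criteria))
    return summary
```

Such a tally only records which questions a reviewer has answered favourably; judging how much weight each criterion deserves for a given CAM system remains a matter for the reviewer.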

Model validity criteria highlight a number of conceptual and contextual issues of which the investigator should be aware when conducting research on CAM (Levin et al., 1997, Vickers et al., 1997, Eskinaski, 1998 and Egger and Davey, 2000). CAM interventions are complex and in general do not consist of a single isolated entity; this is very similar to many of the interventions in primary care (general practice) or surgery (Gabbay & le May 2004). For example, acupuncture is a treatment within TCM and is itself highly variable. Acupuncture has often been considered a single intervention, but evidence suggests that the treatment of addictions such as smoking withdrawal may be employing entirely different neurophysiological mechanisms to those involved in the treatment of chemotherapy-related nausea and vomiting (Lewith & Vincent 1995). As a consequence, trial design may need to be entirely different for acupuncture when applying this intervention in differing clinical contexts with different underlying mechanisms. It may be appropriate to use sham acupuncture when the underlying mechanism is point-specific (as in the treatment of nausea) but entirely inappropriate to depend on point specificity for the design of the placebo when the underlying mechanisms are themselves not point-specific, as in the treatment of addiction.

Modern clinical trial methodology tends to make specific assumptions which may not apply to all aspects of CAM research. Therefore our methodology needs to be applied with an understanding of the science and the CAM intervention involved, and underpinned by theoretical ideas about the underlying mechanisms involved in the intervention. This will allow us to consider how to design research properly with appropriate controls that fit the CAM ‘model’ under study; in other words, with a high degree of ‘model validity’.

While research that maximizes internal, external and model validity is the ideal, the realities of the CAM research environment often make the ideal difficult to achieve. First, funding levels for CAM are quite small compared to research in conventional medicine. Thus, large, multicentred pragmatic or three-armed studies (in which both placebo and standard care comparison groups exist) are few and far between. Research on mechanisms with adequate product quality, such as that required for multicomponent herbal products, is unlikely to be supported. The sceptical attitudes to CAM in many conventional circles, the competitive nature of new products and practices and the low profit potential of many CAM products and practices keep CAM research off the priority list for many governments and private funders. Rarely is there a critical mass of experienced researchers for conducting complex studies, or mentors and a clear career path for training CAM investigators. Thus, CAM research continues to produce a stream of pilot and small studies, or larger studies that address rather focused questions and provide incremental information. In the context of so many questions about CAM practices, it is not surprising that the public often has no evidence for making decisions about CAM practices.

The absence of evidence

CAM has been very much the poor relation of research in the context of the EU, UK and US environments. The current UK CAM research budget is 0.0085% of medical research spending (Lewith 2007). When placed against the context of CAM use in the UK (15% per annum and 50% lifetime use), this is hardly an adequate distribution of research funding as far as the population’s use of CAM is concerned. While the total expenditure in the US is substantially larger, it is still minuscule in proportion to CAM use. Consequently there is very limited research evidence within the whole field. Oxman (1994), one of the fathers of EBM, makes it very clear that the absence of evidence of effectiveness should not be interpreted as being synonymous with the absence of clinical effect. A pervasive problem in CAM is the inappropriate interpretation of limited and inadequate data, an approach which substantially misrepresents the underlying principles of rigorous EBM.

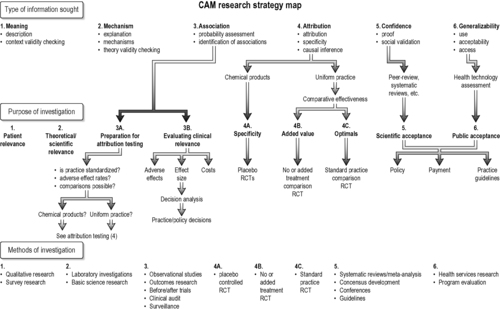

Applying research strategies in CAM

So far we have discussed different research methodologies, the types of evidence they provide and the preferences and goals various audiences have when applying evidence. There is much that we don’t know about this area of medicine and we are currently struggling to apply rigorous scientific approaches to a very ‘underinvestigated’ clinical area. Our intention is to use the principles outlined in this chapter as a ‘staging post’ for future strategic development while recognizing that, as our evidence base grows, our approaches to obtaining and translating evidence into clinical practice will change. Within this cultural reality how does one use these principles when choosing research methods in CAM?

Figure 1.5 provides a stepwise decision tree for mapping out an appropriate research sequence for the type of evidence needed in any given circumstance. As discussed, the decision to use evidence begins by first defining the research question (Vickers et al., 1997 and Linde and Jonas, 1999) and then by clarifying who will primarily use the resulting information and for what purpose. We hope that this will allow researchers to define their questions better and subsequently their chosen methodology; however, the final decision to pursue a particular method depends on a number of factors, including:

• the complexity of the condition and therapy being investigated

• the type of evidence sought (causal, descriptive, associative)

• the purpose for which the information will be used

• the methods that are available, ethical and affordable.

FIGURE 1.5 Adapted with permission from Jonas & Linde (2002).

In Figure 1.5 we outline a CAM research decision tree map that illustrates the relationship between study goals, the type of information being sought on a practice and the type of methodology that will be most appropriate for providing that evidence. This is at the core of establishing model validity.

Matching goals and methods in clinical research

The first step in selecting an appropriate research method is clearly defining what evidence is needed, particularly in relationship to how it will be used (Feinstein 1989). Each research method has its own purpose, value and limitations. To summarize, when defining concepts, constructs and terms and when assessing the relevance of an outcome measurement, qualitative methods, with their in-depth interviews and content analysis, are the most appropriate approach. When seeking associations of variables, surveys, cross-sectional or longitudinal studies with methods that allow for factor analysis, regression analysis or a combination of both, such as structural equation modelling, are the most useful. When trying to measure the overall impact of a complex intervention delivered in a clinical context, pragmatic trials and outcomes methods should be applied. When attempting to isolate and prove specific theoretical effects of treatment on selected outcomes, or determine the relative merit of whole-practice systems, placebo-controlled or pragmatic RCTs are the method of choice, respectively.
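
The matching of goals to methods summarized in the paragraph above can be written down as a simple lookup. The following Python sketch is illustrative only. The goal labels and the function name `suggest_methods` are our own shorthand (assumptions), not part of any published tool; the methods listed are those named in the text.

```python
# Illustrative lookup from research goal to the methods named in the text.
# The keys are hypothetical shorthand labels, not standard terminology.
GOAL_TO_METHODS = {
    "define_concepts_or_outcome_relevance": [
        "qualitative methods (in-depth interviews, content analysis)",
    ],
    "find_associations_between_variables": [
        "surveys",
        "cross-sectional studies",
        "longitudinal studies",
    ],
    "overall_impact_of_complex_intervention": [
        "pragmatic trials",
        "outcomes methods",
    ],
    "isolate_specific_treatment_effects": ["placebo-controlled RCT"],
    "relative_merit_of_whole_practice_systems": ["pragmatic RCT"],
}


def suggest_methods(goal):
    """Return the methods the text associates with a goal label."""
    if goal not in GOAL_TO_METHODS:
        raise ValueError(f"Unknown goal label: {goal!r}")
    return GOAL_TO_METHODS[goal]


# Example: a question about specific effects points to a placebo-controlled RCT.
print(suggest_methods("isolate_specific_treatment_effects"))
```

A lookup of this kind captures only the first cut. In practice the choice is further constrained by the factors listed earlier, such as the complexity of the therapy and which methods are available, ethical and affordable.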

Choosing research methods in CAM

The research question, main audience and the utility of a research project will anchor the methodology (Figure 1.5). For example, in multimodality practices that are often not well described (e.g. spiritual healing, lifestyle therapies) and where the interest is on impact on chronic disease, outcomes research or pilot trials are the best initial approach (domain 3A in Figure 1.5). In well-described modalities that are safe and not expensive where effectiveness (not efficacy) may be the main interest (e.g. acupuncture, homeopathy), outcomes data coupled with decision analysis may provide the best strategic approach (domain 3B in Figure 1.5) (Dowie 1996). For many natural products, where the active or standard constituents are variable, basic science (e.g. laboratory characterization and safety data) is needed prior to clinical research (domain 2 in Figure 1.5). Products that are well characterized but have potential direct adverse effects need placebo-controlled or standard comparison RCTs provided their public health implications warrant such an investment. Placebo studies of natural products are more useful for making regulatory decisions (e.g. product marketing and claims) than for individual clinical decisions (domain 4A in Figure 1.5). The US National Institutes of Health’s study of the efficacy of St John’s wort for depression, using a large, three-armed, multicentred, placebo-controlled trial, is an example of this latter approach (Shelton et al., 2001 and Hypericum Depression Trial Study Group, 2002). It is a widely used treatment, has significant potential drug–herb interactions (through the P450 system) and is treating an important, common clinical condition (domain 2 in Figure 1.5).

A physician considering referral of a patient with back pain for acupuncture will want to know what kind of patients local acupuncturists see, how they are treated, whether these patients are satisfied with treatment and their outcomes. This type of data comes from practice audit (observational research), surveys and qualitative research (domains 3B and 1 in Figure 1.5) (Cassidy, 1998a and Cassidy, 1998b). For these situations, information from local practice audits may be more valuable than relying on the results of small (or large) placebo-controlled trials done in settings where the practitioners and populations may be quite different. Data collection, monitoring and interpretation of observational studies must be as carefully performed as experimental studies. Finally, when exploring theories and data that do not fit into current assumptions about causality (e.g. energy healing or homeopathy), a carefully thought-out basic science strategy is needed. Given the public interest and the implications for science of these areas, it is irresponsible for the scientific community to ignore them completely (Jonas 1997).

Within RCTs (domain 4 in Figure 1.5) there are several goals that determine which design and sequence of studies are most appropriate. If the goal is obtaining information on the specificity of molecular or procedural effects (e.g. drug effects), placebo controls are required (domain 4A in Figure 1.5). Placebo controls cannot provide information about added value from a therapy (domain 4B in Figure 1.5), which requires a no-treatment or standard treatment control (e.g. hypericum versus antidepressant control). Research summaries (domain 5 in Figure 1.5) and generalizability (domain 6 in Figure 1.5) require systematic reviews and health technology assessment, respectively. Examples of these two are the National Institutes of Health Consensus Conference statement on acupuncture (1997), which influenced public decisions on reimbursement for acupuncture, and the former Agency for Health Care Policy and Research’s practice guideline on low-back pain and manipulation, which was followed by the establishment of chiropractic services in the USA (Bigos & Bowyer 1994).

Use of this decision tree, along with quality standards for each of the research methods listed, provides a rational strategy for selecting the most appropriate type of evidence, assures quality for each method and so combines rigour and relevance for creating EBP in a pluralistic health care environment.
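As a rough illustration only, the selection logic described above can be sketched as a lookup from the evidence goal to the research method the text pairs with it. The goal labels, the `Strategy` type and the `select_method` function are assumptions made for this sketch and do not come from Figure 1.5. The domain codes are those cited in the text, and the basic science entry carries no code because the text gives none.

```python
# Illustrative sketch only: goal labels and structure are hypothetical;
# the domain codes are the ones the text cites for Figure 1.5.
from typing import NamedTuple, Optional


class Strategy(NamedTuple):
    method: str            # research method the text pairs with the goal
    domain: Optional[str]  # Figure 1.5 domain code, or None if the text gives none


STRATEGIES = {
    "local_practice_information": Strategy(
        "practice audit, surveys and qualitative research", "3B and 1"),
    "specificity_of_effect": Strategy("placebo-controlled RCT", "4A"),
    "added_value": Strategy(
        "RCT with no-treatment or standard-treatment control", "4B"),
    "research_summary": Strategy("systematic review", "5"),
    "generalizability": Strategy("health technology assessment", "6"),
    "theory_outside_current_causal_assumptions": Strategy(
        "basic science strategy", None),
}


def select_method(goal: str) -> Strategy:
    """Return the strategy recorded for a goal, or raise if none is recorded."""
    try:
        return STRATEGIES[goal]
    except KeyError:
        raise ValueError(f"no strategy recorded for goal: {goal!r}") from None
```

A call such as `select_method("added_value")` returns the no-treatment or standard-treatment controlled RCT entry. A flat lookup is used here for brevity; it does not capture the sequencing of studies or the quality standards that each method must also meet.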

Special CAM research issues

We have now reviewed different research methods, strategies for selecting appropriate methods for obtaining evidence and criteria available for assessing research quality when using those methods. There are additional issues of which all investigators should be aware when designing and conducting research. Issues that often become of particular importance to CAM research include:

• sample and population selection

• differing diagnostic classification

• ensuring adequate treatment

• the interaction of placebo and non-placebo factors

• selection of theory-relevant or patient-relevant outcomes

• the risk of premature hypotheses without pilot data

• assumptions about randomization

• blinding and unconscious expectancy

• learning and therapeutic growth

• the nature of ‘equipoise’ in CAM

• risk stratification for determining ‘adequate’ levels of proof

Sample and population selection

Recruitment for clinical trials underpins their generalizability. We have previously discussed the tension between internal and external validity and their relevance to recruitment in any study. CONSORT flow charts identify the number of individuals approached for a study and hence the percentage of those approached who were recruited. A good example of this problem in conventional medicine is the evaluation of Antabuse, a pharmacological treatment that makes alcoholics vomit if they drink alcohol while continuing to take the medication daily. Trials evaluating Antabuse suggested that it was very effective; however, only 9% of the alcoholics approached agreed to be recruited, making it difficult to generalize about the effect of Antabuse in the broad population (Howard et al. 1990). All studies should explicitly consider population selection and breadth of application.

Diagnostic classification

When groups are defined as ‘homogeneous’ conventionally and recruited to a clinical trial they may not be homogeneous when evaluated from the perspective of another medical tradition. The differing diagnostic taxonomies of CAM systems may need to be addressed within a research context. The diagnosis of osteoarthritis may represent over a dozen classifications when evaluated by TCM. A ‘standardized’ treatment may approximate the ‘average’ syndrome and this simplifies the treatment strategy. However, this standardized treatment may be suboptimal and so risk producing ‘false-negative’ results within the trial – a sign of poor model validity. Subjects with irritable bowel syndrome treated with individualized TCM herbs improved for longer than those given a standardized approach and both groups did better than the placebo group (Bensoussan & Menzies 1998). A three-arm study design allows for the evaluation of this aspect of model validity.

In addition to a three-armed trial, a double selection design can be used, in which group selection is done according to both conventional medicine and the alternative whole system. Shipley & Berry (1983) tested the homeopathic remedy Rhus toxicodendron (Rhus tox) on a conventionally homogeneous group of osteoarthritis patients without individual homeopathic classification and reported no effect over placebo. Fisher et al. (1989), in a follow-up study, used a double selection approach that provided both good internal validity and model validity. Patients met criteria for both fibromyalgia and Rhus tox, producing a group ‘homogeneous’ for both medical systems. In this study those treated with homeopathic Rhus tox did better than those given placebo.

Adequate treatment

Pilot data should always be obtained to ensure adequate treatment is being tested in a clinical trial. This is frequently omitted. For example, a study of acupuncture for human immunodeficiency virus (HIV)-associated peripheral neuropathy published in the Journal of the American Medical Association reported negative results (Shlay et al. 1998). Many acupuncturists report good results in this condition, but use considerably more treatments than were given in that study. The treatment being tested must be optimal, or at least representative of the practice of a defined group of providers, and piloted for that condition. This is certainly not the case for asthma treated with acupuncture, as only two trials used the same points (Linde et al. 1996).

We would suggest the combination of a detailed literature review and an expert panel approach, designed to build consensus around best practices (Ezzo et al., 2000 and Flower and Lewith, 2007).

The interaction of placebo and non-placebo factors

It is typical to see 70–80% effectiveness reported from open practices in both conventional and alternative medicine (Roberts et al., 1993, Jonas, 1994, Guthlin et al., 2004 and Witt et al., 2005b). Traditional medicine systems, various mind–body approaches and psychological and spiritual systems often attempt to induce self-healing by manipulating the MAC effects on illness; MAC is often confused with placebo, which itself interacts with non-placebo elements of therapy in complex ways (Bergmann et al., 1994, de Craen et al., 1999 and Moerman, 2002). Arthur Kleinman’s classic study on why healers heal illustrates the importance of these contextual issues (Kleinman et al., 1978 and Moerman and Jonas, 2002).

Outcomes selection

Outcomes that are objective and easy to measure, or well known, are often selected instead of more difficult patient-oriented, subjective outcomes, though the latter may be more relevant. Eric Cassell (1982) has pointed out that, because of an overemphasis on finding a ‘cure’, researchers may seek more objective, disease-oriented outcomes that move away from gaining knowledge that may help in the more subjective, healing process of the illness, a core goal of medicine. The investigator, in the name of ‘rigour’, may try to maximize internal validity by using objective measures instead of measures that are meaningful to patients. For example, many physicians deem ‘pain relief’ a fuzzy, non-objective outcome. However, for patients suffering from pain, its relief is the only really relevant outcome.

While the selection of non-patient-centred outcomes is not an issue unique to CAM research, it may be one of the main reasons for CAM’s popularity. Cassidy (1998a, 1998b) conducted interviews with over 400 patients visiting acupuncturists. Most patients did not experience a ‘cure’ but they continued acupuncture treatment because of other factors such as an improved ability to ‘manage’ their illness and a sense of well-being. Measuring these outcomes may require including qualitative research methods, or developing and validating new measurement instruments. Researchers may settle for popular outcome measures, such as the SF-36 (Guthlin & Walach 2007), while forgoing more individualized outcome measures, such as the Measure Yourself Medical Outcome Profile (MYMOP) (Paterson, 1996 and Paterson and Britten, 2003). Recently, a database of CAM-relevant outcome measures has been developed and posted online: IN-CAM Outcomes Database (www.outcomesdatabase.org).

Hypothesis testing

Hypothesis-focused research allows investigators to identify cause-and-effect relationships. This is a powerful method for confirming or refuting theories about treatment–outcome associations. But by focusing on a particular theory-driven causal link we risk ‘fixing’ our conclusions about the value of a therapy, which in turn may restrict the investigation of other, possibly better approaches (Heron 1986). Given the small resources invested in CAM research, potentially superior CAM practices may never see the light of evidence, compared to better-funded and profitable treatments.

Once treatments are established they become the ‘standard of care’. After this, alternative treatments are ethically more difficult to investigate. Lifestyle therapy for coronary artery disease (CAD) is an example. Current standard-of-care treatments for CAD are oriented around an anatomically based hypothesis of CAD. Coronary artery bypass grafting (CABG), balloon angioplasty and stents are all established treatments of CAD. An extensive industry has developed around these procedures and with it the economic and political interests to sustain these treatments. Lifestyle therapy can also successfully treat CAD but is based on a non-anatomical hypothesis of CAD aetiology that is not tied to reimbursement and other industry drivers. It may be that lifestyle therapy is better than CABG for treatment of CAD in certain populations given its low cost, preventive potential, reduction in fatigue and improvement in well-being (Blumenthal and Levenson, 1987, Haskell et al., 1994, McDougall, 1995 and Ornish et al., 1998), but this is difficult to investigate as it is unethical to mount placebo-controlled trials in this life-threatening situation. Revealing partial causes runs the risk of preventing the discovery of potentially more beneficial therapies based on other hypotheses. Research strategies for chronic disease need to have the flexibility to test multiple hypotheses (Horwitz, 1987, Coffey, 1998 and Gallagher and Appenzeller, 1999).

Assumptions about randomization

When the results of observational and randomized studies are different it is usually assumed the randomized study is more rigorous and valid. This assumption has been challenged by an increasing number of studies showing that, properly done, observational studies may not differ in outcome from those that are randomized (Benson and Hartz, 2000, Concato et al., 2000 and Linde et al., 2007b). This does not in any way invalidate RCTs but rather suggests that much useful and important data may be collected utilizing a variety of different methods. Some practitioners of complex traditional or alternative medical systems believe that randomization can interfere with a therapy (by eliminating choice), obscure our awareness and so bias outcome. For example, TCM is based not on direct cause-and-effect assumptions but on an assumption that ‘correspondences’ occur between system levels (biological, social, psychological, environmental) (Porkert 1974). An assumption in TCM theory is that if a practice influences one level it can affect all others. Thus, finding direct cause-and-effect links may be less important for a TCM practitioner than establishing accurate correspondences between the body and season (Unschuld 1985). From this perspective extensive efforts to establish isolated causal links between intervention components and outcome (as done in non-pragmatic RCTs) are of less interest than in western medicine (Lao 1999).

Blinding and unconscious expectancy