228 Teaching Critical Care

Teaching success should be measured in terms of student performance, not the activities of the teacher. Delivering a carefully organized PowerPoint presentation, supervising problem-based workshops, or providing bedside clinical tutorials does not mean one has taught. Unless the learner has acquired new cognitive or psychomotor skills, teaching has not occurred.1 An effective teacher takes responsibility for ensuring that students learn. If the teacher’s perception is that providing a lecture or any instructional methodology fulfills this obligation, then the teacher is serving as “the” educational resource. The focus of this model is on what the teacher did and not on what the learner learned.

Stritter described a different model, one focused on the student.1 In this model, the teacher assumes responsibility for the learner’s success and creates an environment conducive to learning by managing the educational resources. The teacher as a “manager” creates specific educational objectives, motivates students, utilizes various educational strategies, evaluates learning, and provides effective feedback to ensure the learner achieves all the educational objectives.1

Creating Educational Objectives

Educational objectives outline the skills and behaviors the student, resident, or fellow will be able to demonstrate after the teacher has completed a lecture, daily bedside instruction, 1-month elective, or fellowship training. Objectives should be developed for every instructional activity because they are a road map. They guide the teacher in developing an appropriate curriculum, they set unambiguous expectations for the learner, and they serve as a reference for evaluation and feedback.2,3

Developing educational objectives involves three steps.2,3 First, using action verbs (e.g., defines, explains, demonstrates, identifies, summarizes, evaluates), the instructor describes a specific behavior the learner must perform to show achievement of the objective. An objective such as “teaches concepts of airway management” is not adequate because it defines what the teacher is doing and does not clearly describe what the learner should be demonstrating. It therefore serves as a road map for neither the teacher nor the student, and it does not identify a clear behavior the teacher can evaluate.

Bloom and Krathwohl developed a classification of educational objectives to assess three domains: cognitive, affective, and psychomotor.4,5 Objectives related to acquisition of knowledge are described in the cognitive domain, objectives related to the demonstration of attitudes and values are described in the affective domain, and objectives related to the acquisition of skills are described in the psychomotor domain.4,5

When teaching students a specific clinical skill (for example, how to manage a patient with hypotension), the teacher must first establish that the learner has mastered the lower levels of the cognitive domain, knowledge and comprehension. Learners will not be able to initiate an appropriate treatment for hypotension or evaluate the effectiveness of treatment unless they can first list the causes of hypotension and describe the effect of preload on stroke volume. The teacher must be able to identify where learners are in the cognitive domain and help them reach the higher levels, such as synthesis and evaluation. To accomplish this, the teacher needs to develop educational objectives that ask the student to predict the consequence of an intervention or evaluate the effectiveness of treatment. Table 228-1 lists the levels of Bloom’s cognitive domain with examples of action verbs and provides examples of questions that could be asked during lecture or teaching rounds to move the learner to higher levels.

| Levels of Thinking: Thought Process | Verbs | Example |
|---|---|---|
| Knowledge—remembering by recall or recognition: requires memory only | Define, list, recall. Who? What? Where? When? | What are the determinants of stroke volume? |
| Comprehension—grasping the literal message; requires rephrasing or rewording | Describe, compare, paraphrase, contrast, in your own words. | Describe how a change in end-diastolic volume affects cardiac output. |
| Application—requires use or application of knowledge to reach an answer or solve a problem | Write, demonstrate, show an example, apply, classify. | Show how a fluid bolus can change systolic blood pressure. |
| Analysis—separate a complex whole into parts; identify motives or causes; determine the evidence | Why? Identify, outline, break down, separate. | Identify the factors that may contribute to abdominal surgery. |
| Synthesis—produce original communication, solve a problem (more than one possible answer) | Write, design, predict, summarize, rewrite, develop, organize, rearrange. | Given a patient with chest pain, bibasilar rales, jugular venous distention, and mottled extremities, develop a hypothesis for a decrease in systolic blood pressure. |
| Evaluation—make judgments, offer opinions; summarize physical findings to support successful therapy | Judge, describe, appraise, justify, evaluate findings to support therapy. | Justify the decision to treat the patient in the previous example with fluids and inotropes. |

Educational objectives specifically related to critical care medicine training programs should be developed in accordance with the expectations outlined in the Accreditation Council for Graduate Medical Education (ACGME) program.6 In addition to listing the specific cognitive and motor skills that must be taught, the ACGME has also developed general core competencies that focus on patient care and not just knowledge acquisition.6 The six competencies include medical knowledge, patient care, interpersonal and communication skills, professionalism, practice-based learning, and systems-based practice.7 Examples of educational objectives for each competency are shown in Table 228-2.

TABLE 228-2 Educational Objectives for the ACGME General Competencies

Motivating Students to Learn

The next step in teaching as a manager is to motivate the students to want to learn. To accomplish this they must first value what is being taught. For them to value a specific goal, they need to understand why it is necessary to incorporate the material into their clinical practice.8,9 The affective domain addresses educational objectives that relate to valuing and applying the material. The lowest level of the affective domain is receiving, in which the students attend lectures. Higher levels in the affective domain are concerned with getting the learner to incorporate material into daily patient care.5 These higher levels are accomplished by creating an environment that is conducive to learning. Table 228-3 lists specific activities the teacher can use to achieve higher levels in the affective domain. For example, the instructor should explain why certain educational goals have been chosen, why they are important, and what the consequences of failing to incorporate them are. Most importantly, the teacher needs to be aware of any inadvertent behaviors that may inhibit learning—providing negative feedback in front of others or demonstrating negative body language, for example. Because the teacher’s goal is to facilitate rather than inhibit learning, the teacher must recognize and change any behaviors that are barriers to learning.

Learning Experiences

There are numerous instructional methodologies a teacher can use to achieve educational objectives. Because adult learners prefer active learning, a curriculum that requires them to process information, participate in problem solving, and defend clinical judgment increases their enthusiasm for learning.9

Unfortunately, traditional methods of instruction such as lectures provide little opportunity for interaction, but because they are an efficient means of conveying a significant amount of information, they are frequently used. Despite being an efficient method for the teacher, they are not as effective as other strategies in helping the learner acquire clinical skills.10 In addition, much of what is taught is not retained, especially as the quantity of new material in the lecture increases.11 Finally, because didactic sessions are not interactive, the teacher does not have an opportunity to assess whether the learner understands the content and its applicability.

Small group sessions that incorporate problem-based learning and interactive workshops are more effective because they engage the students and require them to defend their decisions and explain how they evaluate outcomes.10 The steps involved in developing a problem-based curriculum are to encourage the group to clarify any concept that is not understood, define the problem, analyze the problem, and outline a management plan.12

Newer instructional methodologies involve technology. Since 1992, students have had the ability to access the Internet, hyperlink to additional resources, and search for reference material with potential cost savings both in terms of dollars and time compared with traditional instruction.13,14 Whereas surveys demonstrate that learners are satisfied with Internet-based instruction, there are no studies to show Internet-based learning is more effective than other educational methods for increasing cognitive function or efficiency of learning.15

An estimated 44,000 to 98,000 patients die each year in U.S. hospitals because of medical errors.16 It is possible that giving students an opportunity to manage complex problems and anticipate consequences of their interventions in an environment where their mistakes do not result in untoward outcomes, where feedback is immediate, and where students can repeat their performance until they acquire these skills might improve patient safety.

Such instructional opportunities exist and have been available for years in the form of simulation. Simulation is defined as any training device that duplicates artificially the conditions that are likely to be encountered in an operation and may include low tech, partial task trainers, simulated patients, computer-based simulation, and whole-body realistic patient simulation. Since the 1960s, simulators have been used to teach crisis management to personnel in military, aviation, space flight, and nuclear power plant operations.17 Work in cognitive psychology and education theory suggests that more effective learning occurs when the educational experience provides interactive clues similar to situations in which the learning is applied.18 In other words, teaching management of unstable patients in a simulated environment, providing instruction, and evaluating learning is more effective than didactic sessions.

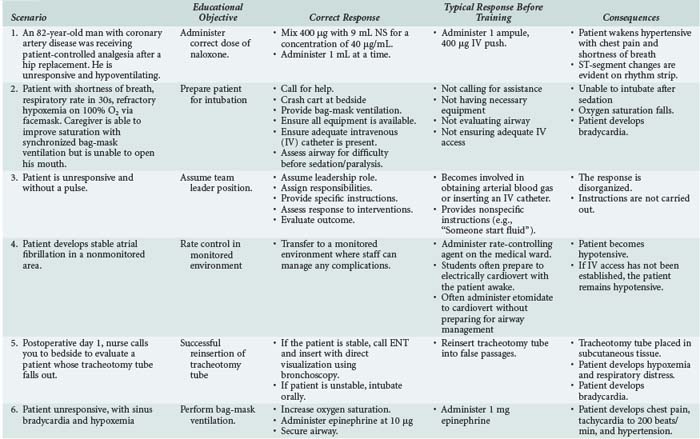

What initially began as computerized software with a separate torso apparatus has evolved into complex whole-body computerized mannequins with a functional mouth and airway, allowing bag-mask ventilation and intubation.19,20 The chest wall expands and relaxes; there are heart and breath sounds and real-time display of physiologic variables including electrocardiogram, noninvasive blood pressure, temperature, and pulse oximetry. The human simulator has individual operator controls for upper airway obstruction, tongue edema, trismus, and reduced cervical range of motion. These computerized human simulators require trainees to integrate cognitive and psychomotor learning along with multisensory contextual cues to aid in recall and application in clinical settings.21,22 This type of simulation has been successfully incorporated into curricula to teach management of obstetrical emergencies, management of the difficult airway in the operating room, crisis management in the operating room,20,23 and management of unstable patients for critical care medicine trainees. Examples of learning objectives for third-year medical students, fourth-year medical students, and critical care medicine fellows using the simulator are listed in Tables 228-4 to 228-6. Note that all objectives are written in terms of behaviors the student must perform, thus giving the teacher clear guidelines for evaluation.

TABLE 228-4 Learning Objectives for Third-Year Critical Care Medicine Course

Respiratory Distress

TABLE 228-6 Learning Objectives for Critical Care Medicine Fellows

2 Immediately call for help, and follow the difficult airway algorithm if difficulty is anticipated.

In addition to providing the learner with the opportunity to practice specific scenarios such as those outlined in Table 228-5, the simulator can be used to teach crisis management skills.24 Gaba and colleagues recognized the similarities between the challenges airline pilots and physicians face during crisis situations.24 To bring order to the chaos that often accompanies a crisis, the team leader, whether he or she is an airline pilot or a physician, must demonstrate specific behaviors to effectively manage the situation. The leader must clearly identify himself or herself as the leader and be exempt from any responsibility other than providing orders. For example, when team leaders become involved with other activities, such as inserting intravenous catheters or performing laryngotracheal intubation, they lose oversight of the entire crisis. The leader must demonstrate effective communication skills by assigning specific responsibilities to specific team members. Identifying the nurse who will administer 1 L of normal saline wide open is more effective than asking someone to start some fluids. The leader should identify the essential members and ask nonessential personnel to step back. Finally, the team leader needs to “close the loop” by asking members to report when a specific task has been completed. Studies of anesthesiology residents have demonstrated that training using simulation technology can improve performance in a simulated crisis.25

Although no study has unequivocally demonstrated improvement in actual patient outcomes, virtual-reality training has been shown to improve surgical skills during gallbladder resection.26 In contrast, a randomized trial comparing a didactic curriculum with simulation training for resuscitation of trauma patients in the emergency department failed to show a significant difference between lectures and simulation.27 Despite this, organizations such as the Institute of Medicine endorse simulation as a tool to teach novice practitioners problem-solving and crisis-management skills.

Evaluation

Evaluation is an essential component of any education curriculum and should address whether the goals and objectives of the course were met. When developing an assessment tool, it is important to define what is being tested (the educational objective), define the behavior that indicates the task has been performed, select the testing method, and determine the acceptable standard for performance.28 Some goals, including acquisition of knowledge, can be evaluated using written examinations or multiple-choice questionnaires. However, written examinations do not evaluate higher cognitive skills such as evaluation and cannot predict whether the learner has become clinically competent and can exercise safe clinical judgment. Written examinations lack validity unless they are simply evaluating knowledge.28 In addition, they tend to reinforce surface or superficial learning by rewarding students for memorizing facts for recall.

Chart-stimulated recalls are utilized to evaluate the student’s higher cognitive capabilities. Whereas multiple-choice questions evaluate knowledge, the chart-stimulated recall requires students to defend the workup, evaluation, diagnosis, and treatment of specific cases. As with other examinations, there must be predefined scoring rules, and those conducting the oral review must be trained in how to administer and score the examinations.29

Performance-based examinations can be utilized to assess clinical competency, psychomotor skills, and judgment.30 An example of a performance-based examination is the Objective Structured Clinical Examination (OSCE), which was developed by Harden and colleagues in 1975.31 The examination consists of several “clinical stations,” each with its own specific educational objectives. The OSCE requires the learner to recall knowledge, outline a treatment plan, interpret a study such as an electrocardiogram, or perform a specific motor skill. These examinations are reliable and valid32–34 and have been used to assess competency following medical school electives, for surgical and emergency medicine internships, and for licensure to practice by the Medical Council of Canada.33–36

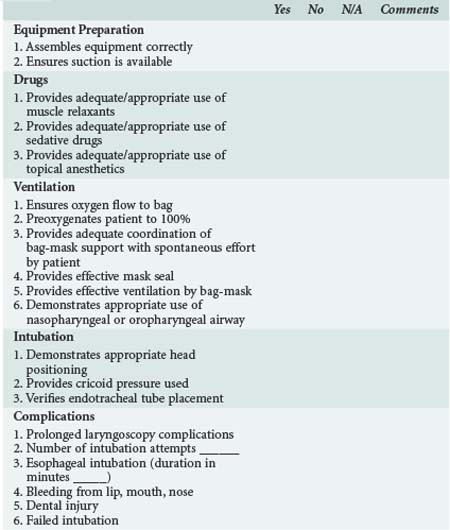

Checklists should be developed, and all observers participating in the evaluation should prospectively agree on what constitutes a successful performance (interrater reliability).37,38 Because students receive immediate feedback, their analytic and evaluative skills can be assessed and, when necessary, they can be instructed how to perform the task appropriately. Both computer-controlled simulators and OSCEs have been shown to be better than written examinations in predicting whether students can solve clinical problems.39 Gaba and colleagues have shown that technical skills can be assessed reliably from videotapes of the learner’s performance on the simulator; however, behavioral skills, such as clinical decision making, were less reliably assessed.24
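Agreement between observers can be checked numerically before a checklist is put into use. The sketch below is only an illustration and is not drawn from the studies cited here: it assumes two observers who score the same performances as "pass" or "fail," and it uses Cohen's kappa, one common statistic for interrater agreement that this chapter does not itself specify. The function name `cohens_kappa` and the ratings are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters scoring the same items.

    Kappa compares the observed agreement with the agreement
    expected by chance given each rater's own scoring frequencies.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")

    n = len(rater_a)
    # Proportion of items on which the two raters gave the same score.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: product of each rater's marginal proportions,
    # summed over every category either rater used.
    count_a = Counter(rater_a)
    count_b = Counter(rater_b)
    categories = set(rater_a) | set(rater_b)
    expected = sum(count_a[c] * count_b[c] for c in categories) / (n * n)

    if expected == 1.0:
        # Both raters used a single identical category for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical ratings of ten learners at one OSCE station (made-up data).
rater_a = ["pass", "pass", "fail", "pass", "fail", "pass", "pass", "fail", "pass", "pass"]
rater_b = ["pass", "pass", "fail", "fail", "fail", "pass", "pass", "pass", "pass", "pass"]

kappa = cohens_kappa(rater_a, rater_b)
print(round(kappa, 2))  # about 0.52 for these made-up ratings
```

A value near 1 indicates agreement well beyond chance, and a value near 0 indicates agreement no better than chance. What counts as acceptable is a judgment for the program and is not set by this sketch.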

Probably the most common method of assessing clinical competency is to evaluate the learner’s performance in real-life clinical situations. Several evaluation tools can be utilized in this environment. Global rating scales are used to evaluate patient care, knowledge application, and interpersonal and communication skills. These evaluations are typically conducted in retrospect and are used to summarize a performance at the end of a clinical rotation. This type of rating has the potential to be highly subjective, and if those performing the evaluation have not been trained, the results may reflect evaluation bias and lose validity.29

Psychomotor skills such as evaluation of airway management, bag-mask ventilation, intubation, central catheter insertion, and chest tube insertion are evaluated with procedure logs. Checklists should include the specific behaviors that have to be demonstrated to achieve a satisfactory evaluation.29 An example of a procedure log for intubation is shown in Table 228-7.

Communication and interpersonal skills can be evaluated by peers, staff, and families using 360-degree reviews and patient surveys. The 360-degree review is a tool completed by those individuals (nurses, respiratory therapists, families) working with the learner. The difficulties with this review are making sure staff understand the intent of each question, coordinating the distribution, and collecting the completed reviews.29

Finally, patient surveys are used to obtain feedback on communication, interpersonal skills, and professionalism. They are reliable if there are 20 to 40 patient responses per student, which limits the use of this tool.29

Providing Effective Feedback

The final step in being a manager of learning is to effectively utilize feedback to enhance learning. Too often feedback is used to fulfill an administrative function; it is provided as a summative report once the rotation is complete. Effective feedback enhances affective learning, whereas feedback that is used inappropriately or done poorly can inhibit learning.40

For feedback to effectively change behavior without causing unintended consequences, several rules should be followed. First, all feedback should be based on how the student performed regarding a specific goal and/or objective of the program.40 This is another reason teachers must develop clear educational goals. They serve not only as the framework for the curriculum but also as a reference for feedback. If feedback is provided in the context of specific performance, there should be no untoward consequence.40 For example, if the goal is for the learner to demonstrate effective bag-mask ventilation with appropriate chest excursion and adequate oxygen saturation, then the goal was either achieved or it was not. This is a statement based on an objective and is not a personal affront unless the feedback contains judgmental language. Therefore, it is important not to tell the student he or she did a “terrible job.” Second, feedback must include a description of how to succeed. In the example presented, if the patient was not effectively ventilated, the teacher should suggest repositioning the head, inserting an oral airway, and performing two-person bag-mask ventilation so there is a better seal with the mask. Third, the specific behavior the learner demonstrated should be addressed and not just interpreted.40 If students are late to rounds, do not assume they do not care or are lazy. Stating the expectation that rounds begin at 7 AM and that the expectation is for the trainee to be prepared by then assigns no judgment. Fourth, for feedback to be effective, it should be an expected component of the learning tools.40 Students should be informed during orientation that they will receive daily feedback on their performance of the stated goals and objectives. Without successfully implementing feedback, the model of teaching described by Irby is incomplete.9

References

1 Stritter FT, Bowles LT. The teacher as a manager: A strategy for medical education. J Med Educ. 1972;47:93-101.

2 Mager RF. Preparing Instructional Objectives. Palo Alto, CA: Fearon; 1962.

3 Gronlund NE. How to write and use instructional objectives. Robert Miller (ed). New York: Macmillan; 1991.

4 Bloom BS, editor. Taxonomy of educational objectives: the classification of educational goals. Handbook 1: Cognitive domain. A committee of college and university examiners. New York: Longman; 1956:120-200.

5 Krathwohl DR, Bloom BS, Masia BB, editors. Taxonomy of educational objectives: the classification of educational goals. Handbook 2: Affective domain. A committee of college and university examiners. New York: McKay; 1964:3-196.

6 Graduate Medical Education Directory 2002-3. Chicago: American Medical Association, 2002.

7 General Competencies. ACGME Outcome Project. ACGME meeting. February 1999.

8 Smith RB, editor. The teacher’s book of affective instruction: a competency-based approach. Lanham, MD: University Press of America, 1987.

9 Irby DM. What clinical teachers in medicine need to know. Acad Med. 1994;69:333-342.

10 Dunnington G, Weitzke D, Rubeck R, et al. A comparison on the teaching effectiveness of the didactic lecture and the problem-oriented small group discussion: a prospective study. Surgery. 1987;102:291-296.

11 Russell IJ, Hendricson WD, Herbert RJ. Effects of lecture information density on medical student achievement. J Med Educ. 1984;59:881-889.

12 Schmidt HG. Problem-based learning: rationale and description. Med Educ. 1983;17:11-16.

13 Computer communication for international collaboration in education in public health. The TEMPUS consortium in public health. The TEMPUS consortium for a New Public Health in Hungary. Ann N Y Acad Sci. 1992;67:43-49.

14 Clayden GS, Wilson B. Computer-assisted learning in medical education. Med Educ. 1988;22:456-467.

15 Cook D. Where are we with web-based learning in medical education? Med Teacher. 2006;28:594-598.

16 Kohn LT, Corrigan JM, Donaldson MS, editors. To err is human: Building a safer health system. Committee on Quality of Health Care in America. Institute of Medicine. Washington DC: National Academies Press, 2000.

17 Gaba DM. Improving anesthesiologists’ performance by simulating reality. Anesthesiology. 1992;76:491-494.

18 Medin DL, Ross BH. Cognitive psychology. New York: Harcourt Brace College Publishers; 1997.

19 Saliterman SS. A computerized simulator for critical care training: new technology for medical education. Mayo Clin Proc. 1990;65:968-978.

20 Schaefer JJ, Dongilli T, Gonzales RM. Results of systematic psychomotor difficult airway training on residents using the ASA difficult airway algorithm and dynamic simulation. Anesthesiology. 1998;89:A60.

21 Friedrich MJ. Practice makes perfect: risk-free training with patient simulators. JAMA. 2002;288:2808-2812.

22 Issenberg SB, McGaghie WC, Hart IR, et al. Simulation technology for health care professional skills training and assessment. JAMA. 1999;282:861-866.

23 Patel RM, Crombleholme WR. Using simulation to train residents in managing critical events. Acad Med. 1998;73:593.

24 Gaba DM, Howard SK, Flanagan B, et al. Assessment of clinical performance during simulator crisis using both technical and behavioral ratings. Anesthesiology. 1998;89:8-18.

25 Chopra V, Engbers FH, Geerts MJ, et al. The Leiden anaesthesia simulator. Br J Anaesth. 1994;73:287-292.

26 Seymour NE, Gallagher AG, Roman SA, et al. Virtual reality training improves operating room performance. Ann Surg. 2002;236:458-464.

27 Knudson MM, Khaw L, Bullard K, et al. Trauma training in simulation: translating skills from SIM time to real time. J Trauma. 2008;64:255-264.

28 Newble D, Dauphinee D, Macdonald M, et al. Guidelines for assessing clinical competence. Teach Learn Med. 1994;6:213-220.

29 ACGME Outcome Project. Version 1.1. Toolbox of Assessment methods: a product of the joint initiative. September 2000.

30 Vu NV, Barrows HS. Use of standardized patients in clinical assessment: recent developments and measurement findings. Educ Res. 1994;23:23-30.

31 Harden RM, Stevenson M, Downie WW, et al. Assessment of clinical competence using objective structured examinations. BMJ. 1975;1:447-451.

32 Matsell DG, Wolfish NM, Hsu E. Reliability and validity of the objective structured clinical examination in paediatrics. Med Educ. 1991;25:293-299.

33 Sloan DA, Donnelly MB, Johnson SB, et al. Use of the objective structured clinical examination (OSCE) to measure improvement in clinical competency during surgical internship. Surgery. 1993;114:343-350.

34 Lunenfeld E, Weinreb B, Lavi Y, et al. Assessment of emergency medicine: a comparison of an experimental objective structured clinical examination with a practical examination. Med Educ. 1991;25:38-44.

35 Reznick R, Smee S, Rothman A, et al. An objective structured clinical examination for the licentiate: Report of the pilot project of the Medical Council of Canada. Acad Med. 1992;67:487-494.

36 Smith LJ, Price DA, Houston IB. Objective structured clinical examination compared with other forms of student assessment. Arch Dis Child. 1984;59:1173-1176.

37 Stillman PL. Technical issues: Logistics. Acad Med. 1993;68:464-470.

38 Van der Vleuten CPM, Swanson DB. Assessment of clinical skills with standardized patients: state of the art. Teach Learn Med. 1990;2:58-76.

39 Rogers PL, Jacob H, Rashwan AS, et al. Quantifying learning in medical students during a critical care medicine elective: A comparison of three evaluation instruments. Crit Care Med. 2001;29:1268-1273.

40 Ende J. Feedback in clinical medical education. JAMA. 1983;250:777-781.