4. Laboratory research and biomarkers

Hakima Amri, Mones Abu-Asab, Wayne B. Jonas and John A. Ives

Chapter contents

Introduction

CAM research: reverse-course hypothesis

Experimental design

Cellular models

Animal models

Impact of cutting-edge technologies on CAM research

High-throughput systems of analysis: the omics

Role of biomarkers in CAM basic research

Standards, quality and application in CAM basic science research

Toward standards in CAM laboratory research

Conclusion

Introduction

Laboratory research in complementary and alternative medicine (CAM) is a challenging topic due to the complexity of biological systems, the heterogeneity of CAM modalities and the diversity in their application. Scientists are well aware of the complexity of biological systems. To compensate for this complexity biological scientists attempt to isolate single components and study them outside their milieu. This has led to simplifying the research hypothesis for determining the effect of a single chemical enzymatic reaction, the impact of loss or gain of a substrate on a cellular pathway or the effect of a receptor/ligand on molecular signals. Extrapolations and correlations of the findings to a disease state or a specific pathophysiological pathway are then drawn. The experiments are performed in a test tube using a cocktail of ingredients, on cell or tissue extracts or on laboratory animals. Laboratory research like this is often translated to clinical research where trials are performed on human subjects. Thus, laboratory research encompasses both basic science and the clinical aspects of investigative science. Answering scientific questions by extrapolating from less to more complex systems has been the approach in conventional basic science research. This approach has led to the deciphering of important action mechanisms at cellular, molecular and genetic levels.

However, are these mechanisms reported to the scientific community in a comprehensive framework? In most cases the answer is no. Each new discovery is reported in the respective research field, to the respective experts in the field, to answer one specific question. Science has gradually become so compartmentalized and scientists so specialized that if you want to know more about a specific oncogene, for example, there is only one expert for you to contact. Clearly this is not an optimal approach as there are about 24 500 protein-coding genes (Clamp et al. 2007) and around 3400 cell lines from over 80 different species, including 950 cancer cell lines, held at the ATCC Global Bioresource Center (http://www.atcc.org). The problem is exacerbated by highly specialized journals, which scientists from other fields often do not even read. Furthermore, standards and validation of procedures are still at the centre of scientific debates, especially at a time when leading-edge technology is advancing faster than its laboratory application. All these factors lead one to conclude that laboratory research has become so scattered that it is a challenge to paint a big picture that could be translated to the clinic.

In these times of ‘compartmentalized’ science there is a crying need for a comprehensive framework. It is within this context that CAM finds itself and, through its renaissance, is putting pressure on decades of this unchallenged conventional scientific construct. To achieve a successful CAM renaissance, however, we must strive for the quality of evidence reached in conventional biomedical research over the decades (Jonas 2005). To establish a laboratory science, like any other biomedical endeavour, CAM must successfully pass checkpoints in the main domains of evidence: hypothesis-driven experimental design, model validity, specificity, reproducibility and a mechanism of action or conceptual framework defining dependent and independent variables. This is especially difficult because CAM in general and CAM laboratory research in particular are adding layers of complexity, heterogeneity and diversity to the already fragmented conventional scientific paradigm. The validation of CAM modalities requires the most rigorous scientific testing and clinical proof involving product standardization, innovative biological assays, animal models, novel approaches to clinical trials, as well as bioinformatics and statistical analyses (Yuan & Lin 2000).

Is there a process that could reconcile CAM and conventional science, bringing together a scientific community that values open-mindedness and constructive criticism and takes advantage of the state-of-the-art technological advances that science has achieved? We believe so. Recent advances in bioinformatics, biotechnology and biomedical research tools encourage us to predict that CAM laboratory research is positioned to move from cataloguing phenomenology to developing novel and innovative paradigms that could benefit both houses of 21st-century medicine.

This chapter addresses the laboratory design and methodology used to measure changes in an organ or whole organism in response to a CAM modality. These methods cover tissue culture and animal models, integrative approaches of data generation from proteomics, mass spectrometry, genomic microarray, metabolomics, nuclear magnetic resonance and imaging, as well as analytical methods for identifying novel effects of CAM modalities that may qualify as biomarkers. We also provide the CAM researcher with an overview of the challenges faced when designing bench experiments and a general idea of the cutting-edge technology currently available to carry out evidence-based laboratory science in CAM.

CAM research: reverse-course hypothesis

Reports on the efficacy of CAM modalities to treat disorders and chronic diseases vary considerably in terms of their quality and scientific rigour (Giordano et al. 2003; Jonas 2005). This can be attributed to the lack of a systematic, scientific basis for most of these modalities, as well as the wide variability of responses and outcomes often seen in CAM practices. Anecdotal data on a modality’s success do not, on their own, meet the standards of evidence-based science, and it is unlikely that experimental laboratory research alone will provide adequate substantiation for some CAM modalities (Moffett et al. 2006).

Some hold the assumption that CAM research need not be hypothesis-driven. This view stems from the fact that many CAM modalities have been practised for hundreds of years, and thus from the feeling that there is no need for research because the clinical practice already exists. However, this runs counter to evidence-based medicine and the scientific process itself. Scientific research is hypothesis-driven. A properly designed experiment will attempt to falsify a null hypothesis and support an alternative hypothesis – the study hypothesis. The latter is an educated guess based on observation or preliminary data. Formulating hypotheses in CAM research is, in many cases, perceived as unnecessary because there already exist hundreds of years of CAM practice and experience.

The goal of many active research programmes in CAM today is to develop a mechanistic understanding of CAM modalities through systematic scientific research that goes beyond anecdotal reports. With this in mind, CAM laboratory research is emulating the already-established conventional science paradigm, i.e. relating the effect of a particular active ingredient in a plant extract to its action on a specific biochemical pathway in vivo, thereby elucidating its potential mechanism of action. The effects of Ginkgo biloba and St John’s wort on humans have been relatively well described. Specifically, Ginkgo biloba has been shown to have an impact on blood circulation (McKenna et al. 2001; Wu et al. 2008) while St John’s wort appears to improve mild depression (Kasper et al. 2006, 2008). In addition, these two plants have often been studied in rat models where various mechanisms are being investigated but, in most cases, with a weak or no link to the original health conditions for which these plants are prescribed (Amri et al. 1996; Pretner et al. 2006; Hammer et al. 2008; Higuchi et al. 2008; Ivetic et al. 2008; Lee et al. 2008). As of January 2010, a PubMed search using the key words ‘Ginkgo biloba and rats’ and ‘St John’s wort and rats’ yielded 533 and 233 entries, respectively, covering a variety of mechanisms of action.

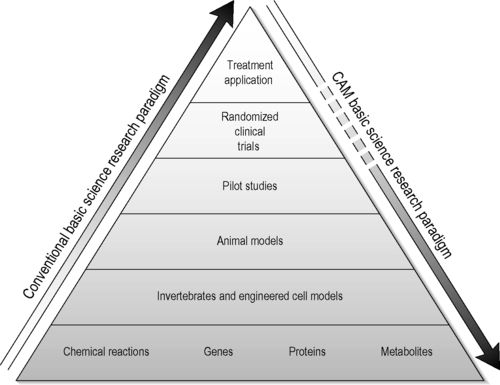

The usual progression in biomedical research is to start with a cell-free or tissue culture model, progress to intact or genetically engineered animal models, advance to pilot studies in humans and, finally, run tests in more elaborate clinical trials. In much of CAM research taking place today we are seeing this paradigm run in reverse. Often, in a CAM research laboratory, the starting assumption is that the CAM modality under study has worked for hundreds of years in humans and now there is an attempt to understand the mechanism of action. To do so, the researchers first organize human pilot studies, followed by the use of animal models and then conclude with research to define action mechanisms at the cellular and molecular levels using test tubes – the reverse of the usual order (Figure 4.1) (Fonnebo et al. 2007).

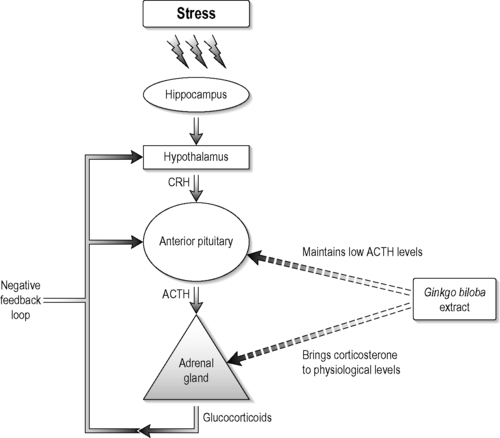

This viewpoint is illustrated in the studies performed by Jacobs et al. (2000, 2006). They performed two separate clinical studies of homeopathy and homeopathic principles. In one, the homeopathic treatment of children with diarrhoea was tailored to their symptom constellations. In the other, the subjects were given the same homeopathic regimen regardless of symptomatic variability. Although these studies tested assumptions within homeopathy, they were designed without clearly elucidated mechanisms around which to construct hypotheses. As another example, Ginkgo biloba is already used by thousands of patients in the hope that it will improve memory and counter some of the other effects of ageing, though a recent clinical trial demonstrated no effect on Alzheimer’s disease (DeKosky et al. 2008). The mechanisms by which effects might occur are not understood. Because of this gap, Amri et al. (1997, 2003) studied the action mechanisms of Ginkgo biloba. They demonstrated the beneficial effects of Ginkgo biloba in controlling the synthesis of corticosterone (the equivalent of cortisol in humans) by the adrenal gland in rats in vivo, ex vivo and in vitro at the molecular and gene regulation levels. The authors also showed that the whole extract had better effects than its isolated components, reducing corticosterone levels while keeping adrenocorticotrophic hormone levels low, which indicates that Ginkgo biloba whole extract affected the adrenal–pituitary negative-feedback loop (Figure 4.2).

In both examples the standard experimental design has been altered in order to respect both the premise of CAM treatment efficacy and the rigour of laboratory research. In the case of the homeopathic clinical trials, the investigators administered individualized remedies, which is a deviation from conventional trials, where one pharmaceutical drug is given to all subjects. The effect of Ginkgo biloba extract, on the other hand, has been clinically tested in humans, but almost all of the research into its mechanism of action has been done in animal and cellular models and at the molecular level.

One of the big problems within CAM research is combining what are often old traditions within CAM practices, such as the assignment of treatment based upon traditional – often not scientifically validated – diagnostic techniques such as taking of pulses in traditional Chinese medicine (TCM), or the symptom constellation used in homeopathy, with modern reductionist scientific approaches. Because of this, the field of CAM research is in great need of established standards without compromising the CAM practice itself but performing the research within a rigorous scientific framework. We also believe that in this era of postgenome and systems biology, laboratory testing of CAM modalities has not yet fully employed integrative high-throughput methods, such as microarray gene expression and protein mass spectrometry. These techniques enable the screening of large numbers of specimens at significantly improved rates and can provide the blueprint for finding potential biomarkers (Cho 2007; Abu-Asab et al. 2008).

Experimental design

When setting up a study, a significant effort should be placed at the early planning stage to avoid potential biases during the conduct of the study and in order to produce meaningful data and analysis. The planning starts by clearly defining the objectives of the study, then outlining the set of experiments that the researcher will conduct to test their hypothesis. The experimental design should include all the elements, conditions and relations of the consequences; these include: (1) selecting the number of subjects – the sample, the study group or the study collection; (2) pairing or grouping of subjects; (3) identifying non-experimental factors and methods to control them (variables); (4) selecting and validating instruments to measure outcomes; (5) determining the duration of the experiment – endpoints must be suitably defined; and (6) deciding on the analytical paradigm for the analysis of the collected data.

Incorporating randomization in experiments is necessary to eliminate experimenter bias. This entails assigning objects or individuals to an experimental group by chance. It is the most reliable process for generating homogeneous treatment groups free from any potential biases. However, randomization alone is not sufficient to guarantee that treatment groups are as similar as possible. An experiment should also include a sufficient number of subjects to have adequate ‘power’ to provide statistically meaningful results. That is, can we trust the results to be a good representation of the class of subjects under study, i.e. do we believe the results?
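As a minimal sketch of what assignment by chance can look like at the bench, the following Python example shuffles a list of subjects and deals them into groups of near-equal size. The function name, the group labels and the use of a fixed seed (so that the allocation can be reproduced and audited) are illustrative assumptions, not a procedure prescribed in this chapter.

```python
import random


def randomize_subjects(subject_ids, groups=("control", "treatment"), seed=None):
    """Assign subjects to groups by chance, keeping group sizes balanced.

    `groups` and `seed` are hypothetical parameters chosen for illustration.
    """
    rng = random.Random(seed)      # seeded generator: same seed, same allocation
    shuffled = list(subject_ids)   # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    allocation = {group: [] for group in groups}
    # Deal the shuffled subjects round-robin so group sizes differ by at most one.
    for index, subject in enumerate(shuffled):
        allocation[groups[index % len(groups)]].append(subject)
    return allocation


if __name__ == "__main__":
    animals = [f"rat_{i:02d}" for i in range(1, 13)]
    for group, members in randomize_subjects(animals, seed=2010).items():
        print(group, sorted(members))
```

Recording the seed alongside the protocol is one simple way to document that the allocation was decided before any outcome was measured.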

The replication of an experiment is required to show that the effectiveness of a treatment is true and not due to random occurrence. Replication increases the robustness of experimental results, their significance and confidence in their conclusions.

The question of how many replicates should be used in an experiment is an important topic that has been addressed many times and continues to be revisited periodically by statisticians whenever there is the introduction of new technologies such as microarray. The goal is to encompass the variation spectrum that may occur in a population or class; or, in the case of treatment effectiveness, to reach statistical significance. According to its website, the National Center for Complementary and Alternative Medicine (NCCAM) has concluded, based on discussions with statisticians, peer-reviewed articles, simulations and data from studies, that in microarray studies about 30 patients per class are needed to develop classifiers for biomarkers discovery (Pontzer & Johnson 2007). However, the necessary sample sizes vary for each research project depending on the conditions of the experiment (e.g. inbred (mice, rats) versus outbred populations (humans)) (Wei et al. 2004). There are published formulas and web-based programs for calculating the required study size in order to ensure that a study is properly powered (Dobbin et al. 2008).
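To illustrate the kind of calculation such programs perform, here is a hedged sketch of a textbook normal-approximation formula for comparing the means of two groups. It is not the method of Dobbin et al. (2008), nor the basis of the microarray figure quoted above; the function name and default values are assumptions, and the standardized effect size must be estimated by the researcher from pilot data or the literature.

```python
from math import ceil
from statistics import NormalDist


def subjects_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate subjects needed per group for a two-sided, two-group comparison.

    effect_size: expected difference in means divided by the common standard
    deviation (an estimate supplied by the researcher).
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value of the two-sided test
    z_beta = z.inv_cdf(power)           # quantile matching the desired power
    # n per group = 2 * ((z_alpha + z_beta) / d)^2, rounded up
    return ceil(2 * ((z_alpha + z_beta) / effect_size) ** 2)


if __name__ == "__main__":
    for d in (0.5, 0.8, 1.0):
        print(f"effect size {d}: {subjects_per_group(d)} subjects per group")
```

With these defaults, a standardized effect of 0.5 calls for 63 subjects per group and an effect of 1.0 for 16. Calculations based on the t distribution give slightly larger numbers, which is one reason to treat the result as a lower bound.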

Selection of the control group is one of the most crucial aspects of study design, especially when testing complex phenomena in the laboratory. Controls determine which part of a theoretical model is tested. For example, in acupuncture there is the ritual, needle, point and sensation to expectancy or conditioned response: which is the most important aspect of the treatment? In homeopathy or herbal therapy, is it a specific chemical, preparation, process, combination, dose or sequence of delivery? Each of the above requires different controls. Thus, selecting the control is selecting the theory. Likewise, results should state precisely which aspect of the theory is elucidated by the differences between the control and treatment results. Additionally, the need for a control group cannot be overemphasized. This group is included to avoid experimental bias and placebo effects, focus the hypothesis and serve as a baseline for detecting differences.

This discussion outlines the standard paradigm for laboratory research in general, where guidelines and standards were established decades ago through bodies and regulations such as the Clinical and Laboratory Standards Institute (CLSI) and the Clinical Laboratory Improvement Amendments (CLIA) to ensure quality standards for laboratory testing and to promote accuracy as well as inter- and intralaboratory reproducibility. In general CAM laboratory research has not reached these levels of standardization and regulation, at least in the USA. However, applying these guidelines and standards to CAM research exposes their inadequacies and confirms the complexity of CAM modalities. Nevertheless, we believe that conducting CAM basic science research in the 21st century using newly developed as well as existing approved standards from the conventional field in conjunction with cutting-edge technology will benefit and advance CAM research.

Basic science research and CAM

Standardization dilemma in basic science research

For over a century, conventional scientific research has undergone phases of trial and error that led to establishing guidelines and standards. As a result, scientific research as conducted today is becoming high-quality, rigorous and reproducible. Tremendous effort and resources have been dedicated to developing analytical methodologies and sophisticated technologies to bring conventional scientific research to this level of standing. CAM research, however, has not received similar attention, primarily due to a confluence of historic, political and economic forces and additionally due to the complexity and heterogeneity of its constructs around mode of action. Many of these do not conform to the reductionist paradigm that dominates conventional research (Kurakin 2005, 2007).

The challenges in CAM clinical research design are covered in other sections of this book. CAM basic science research faces a number of experimental hurdles, starting with hypothesis generation based upon controversial putative cellular and molecular mechanisms and extending to choice of biological model, dosage, treatment frequency and analytical methodology, to name a few. These are not, in principle, any different from the hurdles faced in conventional research. However, as mentioned above, there is a century or more of historical experience behind the conventional approaches and models and only a few decades of serious attention to CAM modalities of healing and their possible mechanistic underpinnings. The need for standards, inherent difficulties notwithstanding, makes it essential that these issues be addressed.

Each experimental model has its strengths and weaknesses. For example, translating in vitro data to in vivo applications often does not work. This is true of all biomedical research, whether focused on CAM or not. However, it should be emphasized that the researcher must develop an awareness of the limitations of the various available models. This is important, especially, for the interpretation of the data and/or their translation to clinical research. It is crucial that CAM researchers be cognizant of these limitations and always alert to consider the extra layer of complexity that is added by the CAM modality under study.

Cellular models

Cell lines in culture, i.e. in vitro, are widely used in almost all fields of biomedical research. The relatively low cost and fast turnaround time of this technique have contributed to its ubiquity. Cell lines derived from all types of tissues and cancers are commercially available; in addition, researchers constantly introduce and characterize new cell lines. In vitro experiments, although easy to set up and carry out, are challenging in all fields of research. This is due, at least in part, to the variability among cell lines that arises from their susceptibility to genetic and phenotypic transformation and potential contamination during frequent serial passages. There have been a number of studies reporting discrepancies within cell lines with regard to their purported characteristics (Masters et al. 1988; Chen et al. 1989; Dirks et al. 1999). One solution is to use primary cell cultures (from fresh normal tissues and not transformed to become immortal). These tend to have fewer artifacts than transformed immortal cell lines, but efforts to develop strategies for using them as a cell model have been hindered by cost and time (Zhang & Pasumarthi 2008).

Despite their limitations, most of our tumour biology and gene regulation knowledge has stemmed from studying tumour cell lines. This is underscored by the over 50,000 publications reporting use of HeLa cells and over 20,000 reports describing NIH/3T3 cell line use. It is generally accepted that monolayer, three-dimensional cultures, or xenografts, cannot entirely reproduce the biological events occurring in humans. It is, thus, a challenge to create experimental cell models that capture the complex biology and diversity of human beings (Chin & Gray 2008).

In basic science, animal models remain the ‘gold standard’ and the crucial step before using human subjects. Certain regulatory agencies in the USA, such as the Environmental Protection Agency (EPA) and the Food and Drug Administration (FDA), are encouraging the substitution of the expensive, time-consuming and sometimes ethically problematic animal testing with the bacterial tier testing if human studies are already available (Marcus 2005). This strategy is applicable to many CAM studies where natural products have already been tested on human subjects.

Animal models

Animal use for food, transport, clothes and other products is as old as humanity itself. Their use in experimental research parallels the development of modern medicine, which had its roots in ancient cultures of Egypt, Greece, Rome, India and China. It is known that Aristotle and Hippocrates based their knowledge of structure and function of the human body in their respective Historia Animalium and Corpus Hippocraticum on dissections of animals. Galen carried out his experimental work on pigs, monkeys and dogs, which provided the fundamentals of medical knowledge in the centuries thereafter (Baumans 2004).

Today, animal models remain the gold standard in laboratory research for testing the efficacy and effects of treatments. In vivo animal models are used to validate in vitro data and both of these are a prerequisite for clinical trials. A wide spectrum of animals is used in basic science research. Examples include: Caenorhabditis elegans, zebrafish and Drosophila in developmental biology and genetic studies; mouse, rat, guinea pig, rabbit, cat, dog and monkey in general physiology, pharmacology and neuroscience. Each model presents its own advantages and disadvantages and should be evaluated first for cost, time effectiveness and ethical appropriateness and then for the related issues of handling and management in laboratory settings. Although many models are used today to answer fundamental scientific questions, the mouse remains the model of choice, in part because of the remarkable progress achieved in producing engineered models to mimic several pathologies.

Thirty years of cancer research and millions of dollars spent on clinical and basic science research produced the genetically engineered mouse model. There are models for almost every epithelial malignancy in humans, including transgenic, ‘knockout’ and ‘knockdown’ models. The scientific and technical ability to generate engineered animal models, where targeted conditional activation or silencing of genes and oncogenes is easily manipulated, confirms the remarkable progress achieved since Galen’s era. With the discovery in the 1980s that Myc expression caused breast adenocarcinoma in mouse mammary epithelium, the study of genes and their application in genetic engineering has exploded (Meyer & Penn 2008).

The current science and capabilities of genetic engineering are testimony to the power of reductionist thinking. This has led to further entrenchment of the reductionist approach in biomedicine and the belief that all mechanisms of biology and disease can and must be understood – understanding the action mechanisms underlying tumorigenesis in order to develop the most effective treatment. Understanding action mechanisms confers validity and credibility on biomedical research. Thus, knowing that Myc activation can cause breast cancer demonstrates our understanding of mechanism. On the other hand, to say that we know a homeopathic remedy that reduces prostate tumour size, or we know acupuncture reduces stress, does not address the question of mechanism. The degree to which the mechanism underlying the observed effect is understood often becomes the standard by which the quality of CAM research is judged. The conventional bias is that if the effect is confirmed, reproducible and mechanistically deciphered, then and only then will the scientific community accept it and the bench-to-bedside translation be implemented. Yet, this attitude is hypocritical.

Have we cured cancer? No. Have we deciphered cellular and molecular pathways leading to cancer development? The answer is yes. Have we developed anticancer drugs? The answer is also yes. Are they effective in curing cancer? Often, they are not. The current inability to cure cancer is one of the reasons for the observed increased use of CAM therapies among cancer patients (Miller et al. 2008; Verhoef et al. 2008). Furthermore, as the axiom ‘one gene, one protein’ has turned out not to be true and biological systems have proved to be far more complex than originally envisioned, targeted drug discovery has become increasingly difficult for pharmaceutical researchers – perhaps even impossible. More recently, a call to adopt integrative approaches to basic science research and systems biology has emerged due, at least in part, to the technological developments in the genomics and proteomics fields (see below). We feel that there is great potential for advancement of CAM basic science research employing these paradigms and new technologies. There is a philosophical synergy among integrative approaches, systems biology and CAM. CAM modalities amenable to basic science research are integrative and often affect more than one pathway, meaning that today we should be able to demonstrate the complexity of the response using the latest technologies employed by conventional researchers (van der Greef & McBurney 2005; Verpoorte et al. 2009).

Impact of cutting-edge technologies on CAM research

This section outlines novel integrative technologies that measure almost all changes in an organ or whole organism in response to a treatment. Such integrative methodologies could provide comprehensive elucidation of the effects of a CAM modality. The conventional research community is slowly moving away from simplistic and reductionist thinking and towards an inclusive mapping of all changes in the biological system. This could only benefit both basic and clinical CAM research and we encourage the use of these methodologies, which are described below.

High-throughput systems of analysis: the omics

New high-throughput systems of genome, proteome and metabolome analyses, such as microarray and mass spectrometry, are currently the best techniques that permit a large-scale assessment of whole-body response to treatment, while other traditional methods, such as enzyme-linked immunoassay and polymerase chain reaction, detect a limited number of gene and protein expressions at a time (Li 2007). The data produced from these newer techniques are useful for both target measurements (i.e. quantitative) and profiling (i.e. qualitative) (Abu-Asab et al. 2008). Although finding and quantifying change is achievable with the new omics, interpreting the results – establishing meaning and significance – and elucidating the pathways affected remain, as they always have been, the most difficult tasks a biomedical researcher faces.

We predict that, as high-throughput systems become widely used by CAM researchers, they will recast and energize CAM research because of the shared integrative characteristics of the two; they are both concerned with changes at the organismal level. CAM modalities affect systems biology of the whole organism and the high-throughput omics are the quantitative and qualitative measures of the whole systems’ change.

Public databases of high-throughput data are also a great source of data that can be used to test hypotheses in preparing research proposals or before embarking on expensive laboratory experiments. The largest, publicly available and diverse data warehouse is that of the National Center for Biotechnology Information (NCBI: http://www.ncbi.nlm.nih.gov/). NCBI creates and houses public databases, conducts research in computational biology, develops software tools for analysing genome data and disseminates biomedical information in order to contribute to the better understanding of molecular processes affecting human health and disease (Barrett et al. 2009).
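As a rough illustration of how such a database can be queried programmatically before committing to bench work, the sketch below builds a search request for NCBI's public E-utilities ESearch service. The helper names are hypothetical, and the default database name `gds` (GEO DataSets) and the example search term are assumptions chosen for illustration; NCBI's usage guidelines and rate limits should be checked before running queries in bulk.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Base address of the NCBI E-utilities search service.
ESEARCH = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_esearch_url(term, db="gds", retmax=20):
    """Return the ESearch URL for `term` in the Entrez database `db`."""
    query = urlencode({"db": db, "term": term, "retmax": retmax, "retmode": "json"})
    return f"{ESEARCH}?{query}"


def count_records(term, db="gds"):
    """Ask NCBI how many records match `term` (requires network access)."""
    with urlopen(build_esearch_url(term, db, retmax=0), timeout=30) as response:
        payload = json.load(response)
    return int(payload["esearchresult"]["count"])


if __name__ == "__main__":
    # Only the URL is printed here; call count_records() to contact NCBI.
    print(build_esearch_url("Ginkgo biloba AND rat"))
```

Passing `db="pubmed"` instead would search the literature database used for the keyword counts quoted earlier in this chapter.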

Genomic analysis

Genomic analysis here refers to the integrative study of alterations in gene expression before and after a treatment, such as a CAM modality. We will focus here on microarray technology because it allows a total gene expression analysis and can reveal, without prejudice, shifts in the whole profile. For example, microarray gene expression profiling can identify genes whose expression has changed in response to a CAM modality by comparing gene expression in treated and untreated cells or tissues.
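The comparison of expression between treated and untreated specimens can be sketched very simply as a fold-change screen. The example below is a deliberately simplified illustration, not the analysis pipeline of any study cited here: the gene names and values are invented, the two-fold cut-off is an arbitrary assumption, and a real microarray analysis would add normalization, statistical testing and correction for multiple comparisons.

```python
from math import log2
from statistics import mean


def log2_fold_changes(control, treated):
    """Return {gene: log2(mean treated / mean control)}.

    Both arguments map a gene name to a list of normalized, positive,
    linear-scale expression values, one per replicate.
    """
    return {
        gene: log2(mean(treated[gene]) / mean(control[gene]))
        for gene in control
        if gene in treated
    }


def changed_genes(control, treated, min_abs_log2fc=1.0):
    """Keep genes whose expression changed at least two-fold in either direction."""
    changes = log2_fold_changes(control, treated)
    return {g: fc for g, fc in changes.items() if abs(fc) >= min_abs_log2fc}


if __name__ == "__main__":
    # Invented values for three hypothetical genes, three replicates each.
    control = {"gene_1": [100, 110, 90], "gene_2": [50, 55, 45], "gene_3": [200, 210, 190]}
    treated = {"gene_1": [400, 420, 380], "gene_2": [52, 49, 51], "gene_3": [50, 48, 52]}
    for gene, fc in changed_genes(control, treated).items():
        print(f"{gene}: log2 fold change {fc:+.2f}")
```

Working on the log2 scale makes up- and down-regulation symmetric: a four-fold increase and a four-fold decrease appear as +2 and -2 respectively.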

Microarray chips consist of small synthetic DNA fragments (also termed probes) immobilized in a specific arrangement on a coated solid surface such as glass, plastic or silicon. Each location is termed a feature. There are tens of thousands to millions of features on an array chip. Nucleic acids are extracted from specimens, labelled and then hybridized to an array. The amount of label, which corresponds to the amount of nucleic acid adhering to the probes, can be measured at each feature. This technique enables a number of applications on a whole-genome scale, such as gene and exon expression analysis, genotyping and resequencing. Microarray analysis can also be combined with chromatin immunoprecipitation for genome-wide identification of transcription factors and their binding sites.

Because of reproducibility issues in microarray experiments (variability between runs and between different laboratories), a few practical considerations should be followed in the experimental design (Churchill 2002; Yang et al. 2008). First, replicates of experimental specimens should be included in the microarray analysis (i.e. treatment groups should include multiple subjects). Second, each subject should also be run in duplicate, on two separate microarray chips. Third, microarray chips that include multiple spots for the same gene probe should be used; this feature allows the detection of experimental or chip manufacturing problems. These guidelines need to be followed in order to obtain reliable data.
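One way to make use of repeated spots or duplicate chips is to check how closely the repeated measurements of each probe agree. The sketch below flags probes whose replicate intensities vary by more than a chosen coefficient of variation; the function name, the 20% threshold and the example intensities are all illustrative assumptions rather than an established quality-control standard.

```python
from statistics import mean, stdev


def flag_inconsistent_probes(replicate_intensities, max_cv=0.2):
    """Return {probe: cv} for probes whose replicate measurements disagree.

    replicate_intensities maps a probe name to positive intensities measured
    on replicate spots of one chip or on duplicate chips for one subject.
    max_cv is a hypothetical cut-off on the coefficient of variation.
    """
    flagged = {}
    for probe, values in replicate_intensities.items():
        if len(values) < 2:
            raise ValueError(f"{probe}: at least two replicate measurements are needed")
        cv = stdev(values) / mean(values)  # spread relative to the average signal
        if cv > max_cv:
            flagged[probe] = cv
    return flagged


if __name__ == "__main__":
    # Invented intensities: probe_A agrees across spots, probe_B does not.
    spots = {"probe_A": [1020, 980, 1005], "probe_B": [400, 950, 610]}
    for probe, cv in flag_inconsistent_probes(spots).items():
        print(f"{probe}: CV = {cv:.0%}, check the chip or the hybridization")
```

A flagged probe does not say what went wrong, only that the measurement should not be trusted until the chip or the run has been examined.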

Although overall gene expression is indicative of the holistic status of a tissue, the values of a gene of interest should be independently rechecked with other methods such as quantitative real-time (QRT)-PCR to ensure the accuracy of the microarray results. It is now routine practice to remeasure the expression values of significant (differentially expressed) genes before publishing microarray results (Clarke & Zhu 2006). Gene expression microarray studies require the extraction of messenger ribonucleic acid (mRNA) from cells or tissues; therefore, the procedure is invasive when applied to human and animal subjects. However, it is appropriate for use in animal models of cancer treatment, tissue culture experiments and comparative plant analysis. In order to avoid experimental variability between different runs, it is preferable that all specimens be hybridized to chips at the same time. Finally, microarray experiments should be designed meticulously before execution to reduce mistakes, not only to increase scientific reliability but also to reduce the cost of microarray chips, which is still substantial (Hartmann 2005).

Microarray has been used by Gao et al. (2007) to probe candidate genes involved in electroacupuncture analgesia and to understand the molecular basis of the individual differences of electroacupuncture analgesia in rats. They identified 66 differentially expressed genes and classified these into nine functional groups: (1) ion transport; (2) sensory perception; (3) synaptogenesis and synaptic transmission; (4) signal transduction; (5) inflammatory response; (6) apoptosis; (7) transcription; (8) protein amino acid phosphorylation; and (9) G-protein signalling. Another example is the exploratory study on Kososan, an antidepressant. This study suggests that gene expression profiling is possible for studying the effects of complex herbal remedies (Hayasaki et al. 2007). For more examples and a detailed description of microarray principles and practices, we recommend the excellent review of Hudson & Altamirano (2006).

Proteomic analysis

In a CAM context, proteomic analysis is the study of the changes of all expressed proteins due to an application of a CAM modality. It can be applied to body tissues and fluids.

During the last decade, proteome analysis has become increasingly important. This is due to the fact that the proteomic profile is the true expression of the functionality of the biological system. Unlike genomic sequences and transcriptional profiles, proteomics is an accounting of the actual change in protein expression (Li 2007). Although protein structure, concentration and function are subjected to posttranslational modifications and degradation, protein expression provides an accurate dynamic reflection of homeostasis, as well as a dynamic measure of any reactions to stimuli and drugs.

There are two major techniques employed in the study of systems’ proteomics: mass spectrometry and gel electrophoresis. Mass spectrometry is based on a machine’s ability to read large sets of tandem mass spectra of laser-scattered proteins and peptides. The proteins are digested and adsorbed to a matrix chip prior to placement in the mass spectrometry machine. The most reliable mass spectrometry system in use today is the matrix-assisted laser desorption/ionization-time-of-flight (MALDI-TOF). The matrix protects the molecules from destruction by the laser beam and assists in their vaporization and ionization, while the time-of-flight spectrometer determines the mass-to-charge ratio (m/z) of each protein/peptide in the specimen. There are tens of thousands of m/z values per specimen and these are the values used for quantitative and qualitative assessments as well as for the identification of the protein of interest.
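The link between flight time and m/z can be made explicit with the textbook relation for an idealized linear time-of-flight analyser. This is a sketch under stated assumptions, not a description of any particular instrument: the symbols $U$ (accelerating potential), $L$ (length of the field-free drift region), $e$ (elementary charge), $v$ (ion velocity) and $t$ (flight time) are introduced here for illustration only, and refinements such as delayed extraction or a reflectron are ignored. An ion of mass $m$ and charge number $z$ gains a kinetic energy $zeU$, so that

$$
zeU = \tfrac{1}{2} m v^{2}, \qquad
t = \frac{L}{v} = L\sqrt{\frac{m}{2zeU}}, \qquad
\frac{m}{z} = \frac{2eU\,t^{2}}{L^{2}}.
$$

Under these assumptions the flight time grows with the square root of m/z, which is why heavier ions of the same charge reach the detector later.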

Two-dimensional gel electrophoresis (2-DE) is a high-resolution technique for separating proteins in acrylamide gel in two steps: by isoelectric focusing in the first dimension and then by mass in the second dimension. The separated proteins are then visualized by staining the gel with one of the following: Coomassie blue, silver, SYPRO Ruby or Deep Purple (Lauber et al. 2001). 2-DE is a time- and labour-intensive technique. Furthermore, it does not resolve proteins in low abundance or those with small molecular weight.

The various proteomic methods can be utilized to verify the identity of ingredients or identify new bioactive agents; verify standardization; elucidate the response; decipher action mechanisms of treatment; categorize patients a priori, along with their subsequent responses; and reveal the heterogeneity of a disease. There are numerous examples of the application of proteomics in the study of CAM. 2-DE proteomics has been used to identify different species of ginseng, different parts of the same ginseng and cultured cells of ginseng; the 2-DE maps of different ginseng samples contained sufficient differences to permit easy discrimination (Lum et al. 2002). The effect of the Siwu decoction on blood proteins was demonstrated through a number of proteomic techniques; the decoction was shown to regulate protein expression in the bone marrow of mice with blood deficiency and thus promote the growth and differentiation of haematopoietic cells (Guo et al. 2004). Electroacupuncture treatment has been shown to induce a proteomic change in the hypothalamus (Sung et al. 2004).

Metabolomic analysis

For our CAM application purposes, metabolomic analysis is defined here as the detection of fingerprint changes of very large sets of small detectable metabolites in an organism due to a CAM modality treatment. Metabolites that may result from bodily responses occurring in the tissues and fluids, such as urine, saliva, serum, plasma, bile, seminal fluid, lung aspirate and spinal fluid, can be detected. Other materials that can be used are cell culture supernatant, tissue extracts and intact tissue specimens.

Metabolomics offers an additional set of data in the high-throughput data class that also includes genomics and proteomics. Because metabolites are themselves the result of the organism's integrated biochemical machinery and pathways, metabolomics may be thought of as a systems analysis of the organism. Two main methods are used in metabolomic studies: mass spectrometry and nuclear magnetic resonance (NMR). Mass spectrometry is a picogram-sensitive method that can detect thousands of metabolites in serum or urine specimens. However, it is limited by the need to carry out a separation step (by chromatography: gas chromatography, high-performance liquid chromatography or liquid chromatography) and by the heterogeneous detection caused by variable ionization efficiency (Shanaiah et al. 2008). On the other hand, NMR requires little or no specimen preparation and is rapid, non-invasive and reproducible. However, it has lower sensitivity than mass spectrometry. The simplest, most reliable, accurate and reproducible NMR sequences used in metabolomic studies are the single-pulse and 1D nuclear Overhauser effect spectroscopy (NOESY) sequences.

Urine is considered an ideal biofluid for NMR analysis for diagnostic and monitoring purposes because of its low protein content, high concentration of low-molecular-weight compounds, minimal preparation requirements and high-quality measurements (Neild et al. 1997). Furthermore, archival databases of NMR profiles allow quick comparisons of results according to NMR peaklist data, i.e. spectral search (see Human Metabolome Database at www.hmdb.ca).

NMR of serum and plasma produces sharp narrow signals from small-molecule metabolites and broad signals from proteins and lipids. The large proteins, lipoproteins and phospholipids produce a baseline distortion that is corrected by the use of NMR pulse sequence Carr–Purcell–Meiboom–Gill (CPMG). Intact tissues can be studied with NMR using the high-resolution magic angle spinning (HR-MAS) spectroscopy (van der Greef & McBurney 2005).

Additionally, metabolomics is used to establish fingerprint efficacy profiles of herbal medications and their extracts. Lu et al. (2006) established a gas chromatography-mass spectrometry fingerprint of Houttuynia cordata injection for quality control and were able to correlate reasonably the higher efficacy with certain fingerprints. Two-dimensional J-resolved NMR spectroscopy was applied to study the fingerprints of ginseng preparations and spectral data were analysed with principal component analysis (Yang et al. 2006). The authors demonstrated that the 2D NMR method can efficiently differentiate between commercial preparations and quickly assess quality control.

Due to its robustness, speed and non-invasiveness, metabolomics offers applicable profiling opportunities for diagnosis and assessment of treatments, as well as for quality and efficacy evaluation of herbal preparation.

Bioinformatic analysis

Bioinformatic analysis endeavours to identify the significantly affected variables and their role in the metabolic pathways affected by treatment, classify individuals’ responses to treatment and identify potential biomarkers. Raw data collected from microarray, mass spectrometry proteomics and metabolomics may require some preprocessing, such as baseline correction, alignment, binning and normalization before its analysis with a bioinformatic tool (Kunin et al. 2008). Most datasets, especially of high-throughput omics, have a large number of variables and would not be analysed with ‘pencil and paper’ but rather with a bioinformatic computer program. Only through massive number crunching is it possible to perform an analysis to decipher the biologically meaningful changes that result from treatment. Analysing data from multiple datasets (acquired from different instruments for the same study group) is challenging even with current analytical paradigms. Individual variability/heterogeneity coupled with experimental noise (differences in runs within the same laboratory and between laboratories) produces a thorny bioinformatic situation. To overcome such problems we will outline two analytical paradigms, statistical and phylogenetic, highlighting their strengths and weaknesses.
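Three of the preprocessing steps named above (baseline correction, binning and normalization) can be sketched as follows. This is a deliberately crude illustration under stated assumptions: the baseline is taken to be a constant equal to the minimum intensity, bins have a fixed width and normalization is to total intensity. Alignment is omitted, and all function names and example values are hypothetical.

```python
def baseline_correct(intensities):
    """Subtract the minimum intensity as a crude, constant baseline."""
    floor = min(intensities)
    return [value - floor for value in intensities]


def bin_spectrum(mz_values, intensities, bin_width):
    """Sum the intensities that fall into fixed-width m/z bins.

    The result maps the lower edge of each bin to its summed intensity.
    """
    binned = {}
    for mz, intensity in zip(mz_values, intensities):
        lower_edge = (mz // bin_width) * bin_width
        binned[lower_edge] = binned.get(lower_edge, 0.0) + intensity
    return dict(sorted(binned.items()))


def normalize_total(binned):
    """Scale the bins so that they sum to 1 (total-intensity normalization)."""
    total = sum(binned.values())
    if total == 0:
        return dict(binned)
    return {edge: value / total for edge, value in binned.items()}


# Hypothetical five-peak spectrum.
mz = [100.2, 100.7, 101.1, 102.9, 103.4]
raw = [12.0, 15.0, 10.0, 30.0, 13.0]

corrected = baseline_correct(raw)
binned = bin_spectrum(mz, corrected, bin_width=1.0)
normalized = normalize_total(binned)
```

Real pipelines usually estimate a curved baseline and align peaks across specimens before binning; the sketch only shows the order of the operations and the kind of matrix that is handed to the subsequent analysis.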

Statistical approach

The goal of a statistical analysis is to decide whether the null hypothesis can be rejected and, consequently, whether the data support the study hypothesis. Parametric statistical methods are applied to samples from populations that are assumed to have a normal distribution for the variables of interest. This assumption is significant because, when comparing two samples, control versus treatment, their means are used to assess whether the treatment has influenced the variable(s) we are observing and therefore the means must accurately reflect their populations. The statistical question here becomes: How can it be inferred that the treatment mean is not equal to the control mean? All statistical tests, whether for normal distribution (t-test (for continuous numerical data), chi-square test (for categorical data)) or non-normal distribution (Mann–Whitney U-test (for independent cohorts), Wilcoxon signed-rank test (for paired data)), aim to calculate the P-value, which is the probability of obtaining a difference between the control and treatment groups at least as large as the one observed if chance alone were at work. The lower the P-value, the stronger the evidence that the difference is caused by the treatment rather than by chance.

Statistical analysis permits the determination of the significantly affected variables (usually those with a P-value <0.05) within the specimen or a cohort of specimens. Furthermore, these significant variables can be entered into a second analysis to profile responses and for class discovery (grouping of individuals sharing similar responses, also referred to as typing). Profiling methods are either supervised or unsupervised. Supervised techniques require a training set of specimens to establish a profile for a particular class (usually a combination of variables such as proteins or gene expressions) that can later be applied to identify profiles and classes, while unsupervised techniques can process the data without any prior training. Two of the most widely used unsupervised techniques are principal component analysis (PCA) and hierarchical cluster analysis (HCA) (Albanese et al. 2007; Yang et al. 2009).
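What a P-value measures can be made concrete with an exact permutation test. Note that this is a different technique from the tests named above: it is chosen here only because it needs no distributional assumptions and can be written in a few lines. The function name and the data are hypothetical. The test relabels the pooled measurements in every possible way and counts how often chance alone yields a difference in means at least as large as the one observed.

```python
from itertools import combinations
from statistics import mean


def exact_permutation_p_value(control, treatment):
    """Two-sided exact permutation P-value for a difference in group means.

    Every way of relabelling the pooled values into groups of the original
    sizes is enumerated, so this is only practical for small samples.
    """
    pooled = list(control) + list(treatment)
    n_total = len(pooled)
    observed = abs(mean(treatment) - mean(control))

    extreme = 0  # relabellings at least as extreme as the observed split
    splits = 0   # all relabellings
    for treat_idx in combinations(range(n_total), len(treatment)):
        chosen = set(treat_idx)
        relabelled_treatment = [pooled[i] for i in treat_idx]
        relabelled_control = [pooled[i] for i in range(n_total) if i not in chosen]
        difference = abs(mean(relabelled_treatment) - mean(relabelled_control))
        if difference >= observed - 1e-12:  # tolerance for float rounding
            extreme += 1
        splits += 1
    return extreme / splits


# Hypothetical expression values for one variable in two groups of four.
control = [4.1, 3.9, 4.3, 4.0]
treatment = [5.2, 5.6, 5.1, 5.4]
p_value = exact_permutation_p_value(control, treatment)
```

With these values the two groups do not overlap, so only 2 of the 70 possible relabellings are as extreme as the observed one and the P-value is 2/70, below the conventional 0.05 threshold.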

Profiling with these methods is based on raw similarity from distance matrices that produces clusters of specimens (scatter plots of PCA, dendrogram of HCA, lists of mass spectrometry peaks or heat maps for microarray data). Classification (class discovery) is based on overall similarity and in some cases may have little biological relevance, i.e. low predictive power. This point has been a source of frustration for many researchers. However, as we illustrate in the phylogenetic section below, the same data can be analysed in a phylogenetic approach to produce more biologically relevant results.
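Grouping by overall similarity can be sketched with a small agglomerative clustering routine. This is a minimal illustration that assumes Euclidean distance and single linkage; it is not the procedure of any of the cited studies, and the specimen names and values are hypothetical. Every variable contributes equally to the distance, whether or not it is biologically relevant.

```python
from math import dist


def single_linkage_clusters(profiles, n_clusters):
    """Merge the two closest clusters until n_clusters remain.

    profiles maps a specimen name to a tuple of variable values. The distance
    between two clusters is the smallest Euclidean distance between any pair
    of their members (single linkage).
    """
    clusters = [[name] for name in profiles]
    while len(clusters) > n_clusters:
        best = None  # (distance, index_a, index_b) of the closest pair
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                d = min(
                    dist(profiles[x], profiles[y])
                    for x in clusters[a]
                    for y in clusters[b]
                )
                if best is None or d < best[0]:
                    best = (d, a, b)
        _, a, b = best
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]
    return [sorted(cluster) for cluster in clusters]


# Hypothetical three-variable profiles for four specimens.
profiles = {
    "control_1": (1.0, 2.0, 1.5),
    "control_2": (1.1, 2.1, 1.4),
    "treated_1": (3.0, 0.5, 4.0),
    "treated_2": (3.2, 0.4, 4.1),
}
groups = single_linkage_clusters(profiles, n_clusters=2)
```

Recording the order of the merges, rather than stopping at a fixed number of clusters, is what yields the dendrogram of an HCA.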

Phylogenetic approach

Phylogenetics is an evolutionary-based analytical approach that discriminates between ancestral and derived similarities and uses shared derived states to delimit classes and create hierarchical classification of specimens (Albert 2006; Abu-Asab et al. 2008). Biological systems are heterogeneous (or diverse) in their normal states as well as in their responses to stimuli and treatment. The many existing combinations of variables, in gene and protein expressions, are matched by an equal number of possible reactions. Therefore, a population’s responses may not be homogeneous (see the section on dichotomous expressions in Abu-Asab et al. (2008a)). For a dataset that contains parameters that violate normality, we recommend phylogenetic-based analysis.

A phylogenetic approach has an added advantage: it does not use overall similarity for analysis, but rather it divides similarity between control and treatment groups into ancestral similarity (variables that are unaffected by the treatment) and derived similarity (variables affected by the treatment). This process of converting continuous quantitative data to a discontinuous qualitative format is termed polarity assessment. The variables’ values for the treatment group are compared against the ranges of the control group (minimum and maximum of every variable). If a value from a treatment specimen falls within the control’s range, then it is ancestral (i.e. normal) and is given the value of zero (0); otherwise it is derived and is given the value of one (1). Polarity assessment produces a new matrix where the original values are replaced by 0s and 1s.
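The polarity assessment described above can be sketched in a few lines. The data layout (nested dictionaries), the function name and the example values are assumptions made for illustration; only the rule itself (inside the control range gives 0, outside gives 1) comes from the text.

```python
def polarity_matrix(control, treatment):
    """Convert treatment values to ancestral (0) or derived (1) states.

    control and treatment map a specimen name to a dict of variable values.
    A treatment value inside the control group's [min, max] range for that
    variable is ancestral (0); a value outside the range is derived (1).
    """
    variables = sorted({name for values in control.values() for name in values})
    ranges = {
        name: (
            min(values[name] for values in control.values()),
            max(values[name] for values in control.values()),
        )
        for name in variables
    }
    matrix = {}
    for specimen, values in treatment.items():
        matrix[specimen] = {
            name: 0 if ranges[name][0] <= values[name] <= ranges[name][1] else 1
            for name in variables
        }
    return matrix


# Hypothetical expression values for three variables.
control = {
    "c1": {"gene_A": 10.0, "gene_B": 5.0, "gene_C": 2.0},
    "c2": {"gene_A": 12.0, "gene_B": 6.0, "gene_C": 2.5},
    "c3": {"gene_A": 11.0, "gene_B": 5.5, "gene_C": 1.8},
}
treatment = {
    "t1": {"gene_A": 11.5, "gene_B": 9.0, "gene_C": 0.9},
    "t2": {"gene_A": 15.0, "gene_B": 5.2, "gene_C": 1.0},
}
matrix = polarity_matrix(control, treatment)
```

Here `gene_C` is derived in both treated specimens, whereas `gene_A` and `gene_B` are each derived in only one of them.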

A polarity matrix can be processed with a number of algorithms. However, a parsimony phylogenetic algorithm seems to provide a multidimensional analysis that is suitable for the needs of the biomedical research community. Multidimensional analysis is defined here as one that provides a resolution of biological patterns, affected pathways and class discovery; capable of incorporating new patterns and multiple datasets (i.e. seamless and dynamic); and can be utilized in a clinical setting for early detection, diagnosis, prognosis and evaluation of treatment (Abu-Asab et al. 2008b). Furthermore, such analysis is also known to have a higher predictivity.

Parsimony phylogenetic analysis will categorize the subpopulations for variation and treatment responses on the basis of shared derived states of the variables. Shared derived states among a number of specimens represent the potential biomarkers (see the section below on biomarkers). Results of a parsimony analysis are depicted in a tree-like diagram called a cladogram. A cladogram is a concise graphical summary of data distribution, data patterns, directionality of change and general trends within the specimens. It is also the most parsimonious hypothesized hierarchical relationships for a group of specimens, because it incorporates in its analysis all of the input states (not just the statistically significant) and thus better models data heterogeneity.
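The idea of shared derived states can be illustrated with a simple tally over a polarity matrix of 0s and 1s. This is only a counting sketch under stated assumptions, not a parsimony analysis: it does not search for a most parsimonious tree or produce a cladogram, for which dedicated phylogenetic software is required. The function name, the `min_specimens` threshold and the example matrix are hypothetical.

```python
def shared_derived_states(polarity, min_specimens=2):
    """Count, per variable, the specimens in which its state is derived (1).

    polarity maps a specimen name to a dict of variable states (0 or 1).
    Only variables derived in at least min_specimens specimens are returned.
    """
    counts = {}
    for states in polarity.values():
        for variable, state in states.items():
            if state == 1:
                counts[variable] = counts.get(variable, 0) + 1
    return {
        variable: count
        for variable, count in sorted(counts.items())
        if count >= min_specimens
    }


# Hypothetical polarity matrix for three treated specimens.
polarity = {
    "t1": {"gene_A": 0, "gene_B": 1, "gene_C": 1},
    "t2": {"gene_A": 1, "gene_B": 0, "gene_C": 1},
    "t3": {"gene_A": 0, "gene_B": 1, "gene_C": 1},
}
shared = shared_derived_states(polarity)
```

In this example `gene_C` is derived in all three specimens and `gene_B` in two, so both would be candidates for further examination, while `gene_A` is derived in a single specimen only.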

Role of biomarkers in CAM basic research

The search for biomarkers in newly generated data is a major activity for many biomedical researchers. The goal is to find biological indicators that can be developed into non-invasive tests for early detection of disease, susceptibility, diagnosis, targeted therapy and post-treatment evaluation. Because of disease heterogeneity and human variability, no single biomarker can be expected to have a wide range of functionality and utility throughout all phases of a disease. Therefore, each disease must have a set of biomarkers to detect it at any of its phases. However, the current problems associated with biomarker discovery stem from the lack of integration among traditionally defined biomarkers (based on a pathology model) and high-throughput omics data, the provisional nature of many putative biomarkers due to the limited size of the study collection in many experiments and the failure to understand the nature of disease heterogeneity and possible existence of multiple developmental pathways.

There are a few types of biomarkers that are useful in CAM research; among these are the diagnostic, predictive, prognostic and pharmacodynamic (Sawyers 2008). A diagnostic biomarker aids in reaching or confirming a diagnosis. A predictive biomarker is useful in evaluating the possible benefits of a specific treatment. A prognostic biomarker is used in forecasting the course and outcome of the disease. A pharmacodynamic biomarker is used in measuring the effects of a treatment on the disease. A biomarker does not necessarily have to be a unique metabolite that is specific to the disease; it can instead be a measure of a common parameter that is affected by the disease and can be restored by the treatment, i.e. a surrogate marker (Ledford 2008).

There are several approaches to discovering biomarkers in a laboratory setting. An example of a direct approach is sorting out data from 2D gels of protein extracts taken from sick or treated subjects; it may show the disappearance of certain spots, or the appearance of new spots when compared with normal specimens. In this case, new biomarkers can be declared, but further validation should be carried out. However, in the case of omics data the situation is more difficult and has become a source of frustration and scepticism. Locating surrogate markers has been practised with success for a number of diseases; they are also fast and cheap to measure (Ledford 2008). Most researchers expected the omics data to be an easy source of many biomarkers, but this turned out to be an inflated assumption. We think that the reason behind this problem is the misunderstanding of the natures of the omics data, the disease and the definition of biomarkers in an omics context, as well as the lack of the proper bioinformatic analytical tool.

We have suggested that a quantitative statistical approach to omics data analysis is not the most suitable method of analysis and that a qualitative paradigm can better bring out informational content of the data when coupled with a phylogenetic analysis (Abu-Asab et al. 2008). For a detailed explanation of our qualitative paradigm, see the section on phylogenetic analysis, above.

Standards, quality and application in CAM basic science research

For several years, the NCCAM at the US National Institutes of Health dedicated over 60% of its budget to clinical research and 20% to centres, but only the remaining funds to basic research, while at the same time encouraging mechanistic studies (http://nccam.nih.gov). Although it is easy to recommend that CAM be held to the same standards as conventional basic science, the complexity and intricacies of CAM, as reported in the White House Commission on CAM Policy, remain understated (Gordon 2002; White House Commission on CAM 2002).

Basic science studies are conducted in nearly all the CAM disciplines that lend themselves to the hypothesis-driven paradigm and a quick search of the literature attests to that. Essentially, CAM basic science research is following the same standards used for conventional research, i.e. use of statistically significant number of specimens or replicates, introducing internal and experimental controls, defining response specificity and reproducibility (Table 4.1). The last is perhaps the most challenging criterion. In several cases, experiments show positive results but, when repeated, sometimes in the same laboratory, do not work despite the precautions of maintaining identical experimental conditions. This is well illustrated in the work published by Yount and colleagues (2004). They investigated the effect of 30 minutes of qigong on the healthy growth of cultured human cells. A rigorous experimental design of randomization, blinding and controls was followed. While both a pilot that included eight independent experiments and a formal study that included 28 independent experiments showed positive effects, the replication study of over 60 independent experiments showed no difference between the sham and treated cells. This study represents an excellent example of CAM basic science research being held to the highest standard of experimental methodology.

| Standards | Outcomes |
|---|---|
| Experimental model | Choice of the best laboratory model to study the CAM modality |
| Replicates | Adequate number of replicates to achieve statistical significance |
| Randomization and blinding | Apply if using animal models, high-throughput screening or interventionists |
| Independent experiments | Replicate same experiment |
| Internal control | Control for intrinsic variability |
| Experimental control | Control for biological effect (e.g. a set not undergoing treatment) |
| Specificity of biologic response | Ensure that the observed effect is not an artifact (this is different from placebo) |
| Interlaboratory comparability | Confirm that the results are reproduced by another laboratory |

This level of rigour is rarely achieved in CAM laboratory research. We reviewed the CAM basic and clinical research in the area of distant mental influence on living systems (DMILS) and energy medicine. The quality of research was quite mixed. While a few simple research models met all quality criteria, such as in mental influence on random number generators or electrodermal activity, much basic research in DMILS, qigong, prayer and other techniques was poor (Jonas & Crawford 2002). In setting up these evaluations, we described basic criteria needed for all such laboratory research (Jonas & Crawford 2003).

In CAM basic science research, formulating the testable hypothesis is sometimes not the major issue; it is setting up and testing the CAM practice itself. In the example of Yount et al. (2004), where they followed the most rigorous methodological and experimental designs, the practice under investigation was not a simple treatment with defined doses of a pharmaceutical compound or an antagonist of a specific receptor. Instead it was an unknown amount of energy emanating from the hands of a number of qigong practitioners.

Acupuncture is a CAM application that lends itself to use in animal models for in vivo and ex vivo evaluation of its effects. By applying electroacupuncture, researchers are in this case able to control the amount of energy delivered. However, the challenge here is the placement of the needles. Whereas in humans needles would be placed based on meridian maps, in rats they must be placed so that they will not be disturbed during normal grooming while still lying along a meridian of relevance. In addition, 20 minutes of electroacupuncture is used because this is what would be done on humans (Li et al. 2008). Should not the time and dose parameters be adjusted to the animal body size? Massage, a manipulative body-based therapy, has been tested in a rat model to show its effects on a number of physiological functions. While it is hard to envision practising Swedish massage on rats, CAM researchers have adapted this method to a massage-like stroking of the back or abdomen depending on the goal of the study. It is hard to translate the data obtained here to a clinical setting if for no other reason than that the CAM application in the rat is probably not similar to massage therapy (Lund et al. 1999, 2002; Holst et al. 2005).

Mind/body-based therapies are also challenging in a laboratory setting. Although there are several studies showing the effects of meditation on cell growth (Yu et al. 2003), differentiation (Ventura 2005), water pH and temperature change, as well as on the development time of fruit fly larvae (Tiller 1997), we believe that for these studies the necessary level of methodological rigour has not been met. Independent replication has been especially problematic. On the other hand, some CAM applications, such as homeopathy, phytotherapy and dietary supplements (Ayurveda or TCM), are often translated to the laboratory setting. This is due to the fact that these practices and their products are thought of (correctly or incorrectly) as conventional interventions using pharmaceutical compounds, where dose and time course experiments can be designed (Table 4.2).

| CAM modality | Research tools: test tube | Research tools: cell/tissue | Research tools: animal/preclinical | Analytical tools: genomics/proteomics/metabolomics | Analytical tools: biostatistics | Analytical tools: bioinformatics | Analytical tools: phylogenetics |
|---|---|---|---|---|---|---|---|
| Whole medical systems | | | | | | | |
| Homeopathy | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Naturopathy | ✓ | ✓ | ✓ | ✓ | ✓ | | |
| TCM/acupuncture | ✓ | ✓ | ✓ | ✓ | ✓ | | |
| Ayurveda | ✓ | ✓ | ✓ | ✓ | ✓ | | |
| Unani | ✓ | ✓ | ✓ | | | | |
| Mind–body medicine | | | | | | | |
| Yoga | | | | | | | |
| Meditation | ✓ | ✓ | ✓ | | | | |
| Mind–body medicine | ✓ | ✓ | | | | | |
| Biologically based practices | | | | | | | |
| Dietary supplements | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Botanicals | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Probiotics | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Diets | ✓ | ✓ | ✓ | ✓ | ✓ | | |
| Manipulative and body-based practices | | | | | | | |
| Massage | ✓ | ✓ | ✓ | ✓ | ✓ | | |
| Chiropractic | ✓ | ✓ | ✓ | ✓ | ✓ | | |
| Osteopathic | ✓ | ✓ | ✓ | ✓ | ✓ | | |
| Reflexology | | | | | | | |
| Energy medicine | | | | | | | |
| Veritable: electromagnetic, sound, and light therapies | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Putative: qigong, reiki, therapeutic touch | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |

TCM, traditional Chinese medicine.

In phytotherapy, significant effort has been directed to establishing guidelines and standards for the use of herbal products in clinical research (NCCAM Interim Policy 2005). No guidelines, however, have been established for using herbal products in cell culture. In our personal experience of using a number of herbal products on various cancer cell lines, plant extracts are complex mixtures that could be toxic to cells in vitro. This becomes especially critical if the outcomes of the study are cell growth and proliferation. In our studies of Ginkgo biloba, we performed animal studies using the whole extract (EGb761) but for the cell lines the whole extract was devoid of the proanthocyanidins that are known to bind and precipitate proteins in vitro (Hagerman & Butler 1981; Pretner et al. 2006). Precautions should be taken when analysing and interpreting experiments where whole-plant extracts are being used in cellular models.

Reproducibility is a critical issue for both conventional and CAM basic science research. An example is in gene microarray. Researchers are still trying to tackle technical problems stemming from interplatform variability, interlaboratory comparability, interexperiment reproducibility and intraexperimenter protocol differences. Efforts have been directed at automating the procedure and minimizing the handling of specimens to one person in the laboratory (Raymond et al. 2006). The MicroArray Quality Control consortium was created to address the issues of microarray gene expression reproducibility (Shi et al. 2006). Research institutions, federal agencies and the industry joined forces to overcome issues of reproducibility and establish a consistent framework for using this method. In the meantime, researchers have been presenting and publishing their data without major criticism. CAM basic science research would benefit from similar support to tackle similar CAM-related issues.

Toward standards in CAM laboratory research

Several examples of standard quality control criteria have been developed in CAM. Aside from those in the DMILS and parapsychology literature, other quality standards have been developed. Linde and colleagues (1994) applied and published standards for laboratory research in homeopathy. Jonas & Crawford (2002) adapted standard Consolidated Standards of Reporting Trials (CONSORT) criteria for the evaluation of prayer and energy medicine research. Hintz et al. (2003) have further refined these criteria. Others in herbal research have been previously mentioned (Table 4.3).

| Type of research | Quality scoring system | References | Description |
|---|---|---|---|
| Laboratory research | Modified LOVE | Sparber AG, Crawford CC, Jonas WB 2003 Laboratory research on bioenergy. In: Jonas WB, Crawford CC (eds) Healing, Intention and Energy Medicine. London: Churchill Livingstone, p. 142 | Modification of standard LOVE scale developed by Crawford et al. (2003) to focus specifically on laboratory studies |
| | Quality evaluation score | Linde K, Jonas WB, Melchart D et al. 1994 Critical review and meta-analysis of serial agitated dilutions in experimental toxicology. Human and Experimental Toxicology 13: 481–492 | Quality evaluation criteria for assessing animal studies in homeopathy |
| | Quality evaluation scoring | Nestmann ER, Harwood M, Martyres S 2006 An innovative model for regulating supplement products: natural health products in Canada. Toxicology 221: 50–58 | Quality evaluation criteria for assessing studies in herbal treatments |

LOVE, Likelihood of Validity Evaluation.

Conclusion

The reductionist paradigm that has been dominating conventional science for decades is being revisited due to the growing body of evidence that physiological and pathophysiological processes are caused by a multitude of factors that go beyond the genetic make-up. For the last half-century biologists have been focusing on the small pieces of the puzzle rather than the holistic picture. In contrast, CAM basic research helps bring our focus back to the integrative paradigm. Psychosocial, behavioural and environmental factors are now being considered. Biology and biomedicine are opting for the approach that is currently called systems biology and systems medicine. Encouraged by the availability of extremely large databases and technological advances, biochemists, molecular biologists and geneticists are starting to connect the dots and draw maps of the multitude of molecular pathways such as protein–protein interactions (interactomes), with the ultimate goal that one day they will decipher the mechanism-based uniqueness of individuals for the purpose of developing better approaches to the treatment of disease.

The evidence-based approach to CAM research and the emerging systems integration of biology and biomedical research are converging in their goals and research methodology. While the dawn of the 21st century has ushered in the use of the words integrative, systems biology and personalized medicine, CAM as an integrative paradigm offers the most personalized treatment approach of all. These characteristics of CAM are now also goals of allopathic medicine and its research (Lee & Mudaliar 2009; Pene et al. 2009). Basic science researchers in CAM will advance their research agenda by taking advantage of the new developments in genomics, proteomics and metabolomics and by utilizing these high-throughput methodologies.

With accepted standards for laboratory research, it is possible to conduct valid basic research for most CAM modalities that is less reductionistic than the conventional approaches. CAM scientists may be ahead of the game because they are already working within an integrative paradigm. However, a new panoramic synthesis of the CAM paradigm needs to integrate evidence-based laboratory standards, high-throughput data-generating techniques and bioinformatics-based data analysis.

References

Abu-Asab, M.; Chaouchi, M.; Amri, H., Evolutionary medicine: A meaningful connection between omics, disease and treatment, Proteomics 2 (2) (2008) 122–134.

Abu-Asab, M.S.; Chaouchi, M.; Amri, H., Phylogenetic modeling of heterogeneous gene-expression microarray data from cancerous specimens, Omics 12 (3) (2008) 183–199.

Albanese, J.; Martens, K.; Karanitsa, L.V.; et al., Multivariate analysis of low-dose radiation-associated changes in cytokine gene expression profiles using microarray technology, Exp. Hematol. 35 (4 Suppl. 1) (2007) 47–54.

Albert, V., Parsimony, Phylogeny and Genomics. (2006) Oxford University Press, New York.

Amri, H.; Ogwuegbu, S.O.; Boujrad, N.; et al., In vivo regulation of peripheral-type benzodiazepine receptor and glucocorticoid synthesis by ginkgo biloba extract EGb 761 and isolated ginkgolides, Endocrinology 137 (12) (1996) 5707–5718.

Amri, H.; Drieu, K.; Papadopoulos, V., Ex vivo regulation of adrenal cortical cell steroid and protein synthesis, in response to adrenocorticotropic hormone stimulation, by the ginkgo biloba extract EGb 761 and isolated ginkgolide B, Endocrinology 138 (12) (1997) 5415–5426.

Amri, H.; Drieu, K.; Papadopoulos, V., Transcriptional suppression of the adrenal cortical peripheral-type benzodiazepine receptor gene and inhibition of steroid synthesis by ginkgolide B, Biochem. Pharmacol. 65 (5) (2003) 717–729.

Barrett, T.; Troup, D.B.; Wilhite, S.E.; et al., NCBI GEO: archive for high-throughput functional genomic data, Nucleic Acids Res. 37 (Database issue) (2009) D885–D890.

Baumans, V., Use of animals in experimental research: an ethical dilemma? Gene Ther. 11 (Suppl. 1) (2004) S64–S66.

Chen, T.R.; Dorotinsky, C.; Macy, M.; et al., Cell identity resolved, Nature 340 (6229) (1989) 106.

Chin, L.; Gray, J.W., Translating insights from the cancer genome into clinical practice, Nature 452 (7187) (2008) 553–563.

Cho, W.C., Application of proteomics in Chinese medicine research, Am. J. Chin. Med. 35 (6) (2007) 911–922.

Churchill, G.A., Fundamentals of experimental design for cDNA microarrays, Nat. Genet. 32 (Suppl.) (2002) 490–495.

Clamp, M.; Fry, B.; Kamal, M.; et al., Distinguishing protein-coding and noncoding genes in the human genome, Proc. Natl. Acad. Sci. U. S. A. 104 (49) (2007) 19428–19433.

Clarke, J.D.; Zhu, T., Microarray analysis of the transcriptome as a stepping stone towards understanding biological systems: practical considerations and perspectives, Plant J. 45 (4) (2006) 630–650.

Crawford, C.C.; Sparter, A.G.; Jonas, W.B., A systematic review of the quality of research on hands-on and distance healing: clinical and laboratory studies, Altern. Ther. Health Med. 9 (Suppl.) (2003) A96–A104.

DeKosky, S.T.; Williamson, J.D.; Fitzpatrick, A.L.; et al., Ginkgo biloba for prevention of dementia: a randomized controlled trial, JAMA 300 (19) (2008) 2253–2262.

Dirks, W.G.; MacLeod, R.A.; Drexler, H.G., ECV304 (endothelial) is really T24 (bladder carcinoma): cell line cross-contamination at source, In Vitro Cell Dev. Biol. Anim. 35 (10) (1999) 558–559.

Dobbin, K.K.; Zhao, Y.; Simon, R.M., How large a training set is needed to develop a classifier for microarray data? Clin. Cancer Res. 14 (1) (2008) 108–114.

Fonnebo, V.; Grimsgaard, S.; Walach, H.; et al., Researching complementary and alternative treatments – the gatekeepers are not at home, BMC Med. Res. Methodol. 7 (2007) 7.

Gao, Y.Z.; Guo, S.Y.; Yin, Q.Z.; et al., An individual variation study of electroacupuncture analgesia in rats using microarray, Am. J. Chin. Med. 35 (5) (2007) 767–778.

Giordano, J.; Garcia, M.K.; Boatwright, D.; et al., Complementary and alternative medicine in mainstream public health: a role for research in fostering integration, J. Altern. Complement. Med. 9 (3) (2003) 441–445.

Gordon, J.S., New White House report. Commission supports scientific research on CAM, Health Forum J. 45 (3) (2002) 33.

Guo, P.; Ma, Z.C.; Li, Y.F.; et al., [Effects of siwu tang on protein expression of bone marrow of blood deficiency mice induced by irradiation.], Zhongguo Zhong Yao Za Zhi 29 (9) (2004) 893–896.

Hagerman, A.E.; Butler, L.G., The specificity of proanthocyanidin-protein interactions, J. Biol. Chem. 256 (9) (1981) 4494–4497.

Hammer, K.D.; Hillwig, M.L.; Neighbors, J.D.; et al., Pseudohypericin is necessary for the light-activated inhibition of prostaglandin E2 pathways by a 4 component system mimicking an Hypericum perforatum fraction, Phytochemistry 69 (12) (2008) 2354–2362.

Hartmann, O., Quality control for microarray experiments, Methods Inf. Med. 44 (3) (2005) 408–413.

Hayasaki, T.; Sakurai, M.; Hayashi, T.; et al., Analysis of pharmacological effect and molecular mechanisms of a traditional herbal medicine by global gene expression analysis: an exploratory study, J. Clin. Pharm. Ther. 32 (3) (2007) 247–252.

Higuchi, A.; Yamada, H.; Yamada, E.; et al., Hypericin inhibits pathological retinal neovascularization in a mouse model of oxygen-induced retinopathy, Mol. Vis. 14 (2008) 249–254.

Hintz, K.J.; Yount, G.L.; Kadar, I.; et al., Bioenergy definitions and research guidelines, Altern. Ther. Health Med. 9 (Suppl. 3) (2003) A13–A30.

Holst, S.; Lund, I.; Petersson, M.; et al., Massage-like stroking influences plasma levels of gastrointestinal hormones, including insulin and increases weight gain in male rats, Auton. Neurosci. 120 (1–2) (2005) 73–79.

Hudson, J.; Altamirano, M., The application of DNA micro-arrays (gene arrays) to the study of herbal medicines, J. Ethnopharmacol. 108 (1) (2006) 2–15.

Ivetic, V.; Popovic, M.; Naumovic, N.; et al., The effect of ginkgo biloba (EGb 761) on epileptic activity in rabbits, Molecules 13 (10) (2008) 2509–2520.

Jacobs, J.; Jimenez, L.M.; Malthouse, S.; et al., Homeopathic treatment of acute childhood diarrhea: results from a clinical trial in Nepal, J. Altern. Complement. Med. 6 (2) (2000) 131–139; (New York, NY).

Jacobs, J.; Guthrie, B.L.; Montes, G.A.; et al., Homeopathic combination remedy in the treatment of acute childhood diarrhea in Honduras, J. Altern. Complement. Med. 12 (8) (2006) 723–732; (New York, NY).

Jonas, W.B.; Crawford, C.C., Healing, Intention and Energy Medicine. (2002) Churchill Livingstone.

Jonas, W.B., Building an evidence house: challenges and solutions to research in complementary and alternative medicine, Forsch. Komplementarmed. Klass. Naturheilkd. 12 (3) (2005) 159–167.

Jonas, W.B.; Crawford, C.C., Science and spiritual healing: a critical review of spiritual healing, ‘energy’ medicine and intentionality, Altern. Ther. Health Med. 9 (2) (2003) 56–61.

Kasper, S.; Anghelescu, I.G.; Szegedi, A.; et al., Superior efficacy of St John’s wort extract WS 5570 compared to placebo in patients with major depression: a randomized, double-blind, placebo-controlled, multi-center trial [ISRCTN77277298], BMC Med. 4 (2006) 14.

Kasper, S.; Gastpar, M.; Muller, W.E.; et al., Efficacy of St. John’s wort extract WS 5570 in acute treatment of mild depression: a reanalysis of data from controlled clinical trials, Eur. Arch. Psychiatry Clin. Neurosci. 258 (1) (2008) 59–63.

Kunin, V.; Copeland, A.; Lapidus, A.; et al., A bioinformatician’s guide to metagenomics, Microbiol. Mol. Biol. Rev. 72 (4) (2008) 557–578.

Kurakin, A., Self-organization vs Watchmaker: stochastic gene expression and cell differentiation, Dev. Genes. Evol. 215 (1) (2005) 46–52.

Kurakin, A., Self-organization versus Watchmaker: ambiguity of molecular recognition and design charts of cellular circuitry, J. Mol. Recognit. 20 (4) (2007) 205–214.

Lauber, W.M.; Carroll, J.A.; Dufield, D.R.; et al., Mass spectrometry compatibility of two-dimensional gel protein stains, Electrophoresis 22 (5) (2001) 906–918.

Ledford, H., Drug markers questioned, Nature 452 (7187) (2008) 510–511.

Lee, C.H.; Mo, J.H.; Shim, S.H.; et al., Effect of ginkgo biloba and dexamethasone in the treatment of 3-methylindole-induced anosmia mouse model, Am. J. Rhinol. 22 (3) (2008) 292–296.

Lee, S.S.; Mudaliar, A., Medicine. Racing forward: the Genomics and Personalized Medicine Act, Science 323 (5912) (2009) 342.

Li, S.S., Commentary – the proteomics: a new tool for Chinese medicine research, Am. J. Chin. Med. 35 (6) (2007) 923–928.

Li, A.; Lao, L.; Wang, Y.; et al., Electroacupuncture activates corticotrophin-releasing hormone-containing neurons in the paraventricular nucleus of the hypothalammus to alleviate edema in a rat model of inflammation, BMC Complement. Altern. Med. 8 (2008) 20.

Linde, K.; Jonas, W.B.; Melchart, D.; et al., Critical review and meta-analysis of serial agitated dilutions in experimental toxicology, Hum. Exp. Toxicol. 13 (7) (1994) 481–492.

Lu, H.M.; Liang, Y.Z.; Wu, X.J.; et al., Tentative fingerprint-efficacy study of Houttuynia cordata injection in quality control of traditional Chinese medicine, Chem. Pharm. Bull. (Tokyo) 54 (5) (2006) 725–730.

Lum, J.H.; Fung, K.L.; Cheung, P.Y.; et al., Proteome of Oriental ginseng Panax ginseng C. A. Meyer and the potential to use it as an identification tool, Proteomics 2 (9) (2002) 1123–1130.

Lund, I.; Lundeberg, T.; Kurosawa, M.; et al., Sensory stimulation (massage) reduces blood pressure in unanaesthetized rats, J. Auton. Nerv. Syst. 78 (1) (1999) 30–37.

Lund, I.; Ge, Y.; Yu, L.C.; et al., Repeated massage-like stimulation induces long-term effects on nociception: contribution of oxytocinergic mechanisms, Eur. J. Neurosci. 16 (2) (2002) 330–338.

Marcus, W.L., Editorial: how to evaluate folk remedy data for human use: two approaches, J. Environ. Pathol. Toxicol. Oncol. 24 (3) (2005) 145–147.

Masters, J.R.; Bedford, P.; Kearney, A.; et al., Bladder cancer cell line cross-contamination: identification using a locus-specific minisatellite probe, Br. J. Cancer 57 (3) (1988) 284–286.

McKenna, D.J.; Jones, K.; Hughes, K., Efficacy, safety and use of ginkgo biloba in clinical and preclinical applications, Altern. Ther. Health Med. 7 (5) (2001) 70–86; 88–90.

Meyer, N.; Penn, L.Z., Reflecting on 25 years with MYC, Nat. Rev. Cancer 8 (12) (2008) 976–990.

Miller, S.; Stagl, J.; Wallerstedt, D.B.; et al., Botanicals used in complementary and alternative medicine treatment of cancer: clinical science and future perspectives, Expert. Opin. Investig. Drugs 17 (9) (2008) 1353–1364.

Moffett, J.R.; Arun, P.; Namboodiri, M.A., Laboratory research in homeopathy: con, Integr. Cancer Ther. 5 (4) (2006) 333–342.

NCCAM Interim Policy, Biologically Active Agents Used in Complementary and Alternative Medicine (CAM) and Placebo Materials. (2005); http://grants.nih.gov/grants/guide/notice-files/NOT-AT-05-003.html.

Neild, G.H.; Foxall, P.J.; Lindon, J.C.; et al., Uroscopy in the 21st century: high-field NMR spectroscopy, Nephrol. Dial Transplant. 12 (3) (1997) 404–417.

Pene, F.; Courtine, E.; Cariou, A.; et al., Toward theragnostics, Crit. Care Med. 37 (Suppl. 1) (2009) S50–S58.

Pontzer, C.; Johnson, L., Omics and variable responses to CAM: Secondary analysis of CAM clinical trials. (2007) NCCAM publications; www.nih.gov.

Pretner, E.; Amri, H.; Li, W.; et al., Cancer-related overexpression of the peripheral-type benzodiazepine receptor and cytostatic anticancer effects of Ginkgo biloba extract (EGb 761), Anticancer Res. 26 (1A) (2006) 9–22.

Raymond, F.; Metairon, S.; Borner, R.; et al., Automated target preparation for microarray-based gene expression analysis, Anal. Chem. 78 (18) (2006) 6299–6305.

Sawyers, C.L., The cancer biomarker problem, Nature 452 (7187) (2008) 548–552.

Shanaiah, N.; Zhang, S.; Desilva, M.A.; et al., NMR-based metabolomics for biomarker discovery, In: (Editor: Wang, F.) Biomarker Methods in Drug Discovery and Development (2008) Humana Press, New Jersey, pp. 341–368.

Shi, L.; Reid, L.H.; Jones, W.D.; et al., The MicroArray Quality Control (MAQC) project shows inter- and intraplatform reproducibility of gene expression measurements, Nat. Biotechnol. 24 (9) (2006) 1151–1161.

Sung, H.J.; Kim, Y.S.; Kim, I.S.; et al., Proteomic analysis of differential protein expression in neuropathic pain and electroacupuncture treatment models, Proteomics 4 (9) (2004) 2805–2813.

Tiller, W.A., Science and human transformation: subtle energies, intentionality and consciousness. (1997) Pavior, Walnut Creek, California.

van der Greef, J.; McBurney, R.N., Innovation: Rescuing drug discovery: in vivo systems pathology and systems pharmacology, Nat. Rev. Drug Discov. 4 (12) (2005) 961–967.

Ventura, C., CAM and cell fate targeting: molecular and energetic insights into cell growth and differentiation, Evid. Based Complement. Alternat. Med. 2 (3) (2005) 277–283.

Verhoef, M.J.; Rose, M.S.; White, M.; et al., Declining conventional cancer treatment and using complementary and alternative medicine: a problem or a challenge? Curr. Oncol. 15 (Suppl. 2) (2008) 101s–106s.

Verpoorte, R.; Crommelin, D.; Danhof, M.; et al., Commentary: ‘A systems view on the future of medicine: Inspiration from Chinese medicine?’, J. Ethnopharmacol. 121 (3) (2009) 479–481.