336

Dialysis in the Treatment of Renal Failure

Dialysis may be required for the treatment of either acute or chronic kidney disease. The use of continuous renal replacement therapies (CRRTs) and slow low-efficiency dialysis (SLED) is specific to the management of acute renal failure and is discussed in Chap. 334. These modalities are performed continuously (CRRT) or over 6–12 h per session (SLED), in contrast to the 3–4 h of an intermittent hemodialysis session. Advantages and disadvantages of CRRT and SLED are discussed in Chap. 334.

Peritoneal dialysis is rarely used in developed countries for the treatment of acute renal failure because of the increased risk of infection and (as discussed in more detail below) less efficient clearance per unit of time. This chapter focuses on the use of peritoneal dialysis and hemodialysis for end-stage renal disease (ESRD).

With the widespread availability of dialysis, the lives of hundreds of thousands of patients with ESRD have been prolonged. In the United States alone, there are now approximately 615,000 patients with ESRD, the vast majority of whom require dialysis. The incidence rate for ESRD is 357 cases per million population per year. The incidence of ESRD is disproportionately higher in African Americans (940 per million population per year) as compared with white Americans (280 per million population per year). In the United States, the leading cause of ESRD is diabetes mellitus, currently accounting for nearly 45% of newly diagnosed cases of ESRD. Approximately 30% of patients have ESRD that has been attributed to hypertension, although it is unclear whether in these cases hypertension is the cause or a consequence of vascular disease or other unknown causes of kidney failure. Other prevalent causes of ESRD include glomerulonephritis, polycystic kidney disease, and obstructive uropathy.
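The disparity in incidence can be made concrete with simple rate arithmetic. The short Python sketch below uses only the rates quoted above; the function names and the 2-million-person population are hypothetical, chosen for illustration.

```python
# Incidence rates quoted in the text (cases per million population per year)
INCIDENCE_PER_MILLION = {
    "overall": 357,
    "African American": 940,
    "white American": 280,
}


def rate_ratio(group: str, reference: str) -> float:
    """Ratio of two incidence rates expressed in the same units."""
    return INCIDENCE_PER_MILLION[group] / INCIDENCE_PER_MILLION[reference]


def expected_new_cases(population: int, rate_per_million: float) -> float:
    """Expected new ESRD cases per year for a population of the given size."""
    return population * rate_per_million / 1_000_000


print(f"{rate_ratio('African American', 'white American'):.2f}")  # 3.36
# Hypothetical region of 2 million people at the overall U.S. rate
print(expected_new_cases(2_000_000, INCIDENCE_PER_MILLION["overall"]))  # 714.0
```

A rate ratio of about 3.4 means that, at these rates, new ESRD develops in more than three times as many African Americans as white Americans per million population per year.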

Globally, mortality rates for patients with ESRD are lowest in Europe and Japan but very high in the developing world because of the limited availability of dialysis. In the United States, the mortality rate of patients on dialysis has decreased slightly but remains extremely high, with a 5-year survival rate of approximately 35–40%. Deaths are due mainly to cardiovascular diseases and infections (approximately 40 and 10% of deaths, respectively). Older age, male sex, nonblack race, diabetes mellitus, malnutrition, and underlying heart disease are important predictors of death.

TREATMENT OPTIONS FOR ESRD PATIENTS

Commonly accepted criteria for initiating patients on maintenance dialysis include the presence of uremic symptoms, the presence of hyperkalemia unresponsive to conservative measures, persistent extracellular volume expansion despite diuretic therapy, acidosis refractory to medical therapy, a bleeding diathesis, and a creatinine clearance or estimated glomerular filtration rate (GFR) below 10 mL/min per 1.73 m² (see Chap. 335 for estimating equations). Timely referral to a nephrologist for advanced planning and creation of a dialysis access, education about ESRD treatment options, and management of the complications of advanced chronic kidney disease (CKD), including hypertension, anemia, acidosis, and secondary hyperparathyroidism, are advisable. Recent data have suggested that a sizable fraction of ESRD cases follow episodes of acute renal failure, particularly among persons with underlying CKD. Furthermore, there is no benefit to initiating dialysis preemptively at a GFR of 10–14 mL/min per 1.73 m² compared with initiating dialysis for symptoms of uremia.

In ESRD, treatment options include hemodialysis (in center or at home); peritoneal dialysis, as either continuous ambulatory peritoneal dialysis (CAPD) or continuous cyclic peritoneal dialysis (CCPD); or transplantation (Chap. 337). Although there are significant geographic variations and differences in practice patterns, hemodialysis remains the most common therapeutic modality for ESRD (>90% of patients) in the United States. In contrast to hemodialysis, peritoneal dialysis is continuous, but much less efficient, in terms of solute clearance. Although no large-scale clinical trials have been completed comparing outcomes among patients randomized to either hemodialysis or peritoneal dialysis, outcomes associated with both therapies are similar in most reports, and the decision of which modality to select is often based on personal preferences and quality-of-life considerations.

HEMODIALYSIS

Hemodialysis relies on the principles of solute diffusion across a semipermeable membrane. Movement of metabolic waste products takes place down a concentration gradient from the circulation into the dialysate. The rate of diffusive transport increases in response to several factors, including the magnitude of the concentration gradient, the membrane surface area, and the mass transfer coefficient of the membrane. The latter is a function of the porosity and thickness of the membrane, the size of the solute molecule, and the conditions of flow on the two sides of the membrane. According to the laws of diffusion, the larger the molecule, the slower its rate of transfer across the membrane. A small molecule, such as urea (60 Da), undergoes substantial clearance, whereas a larger molecule, such as creatinine (113 Da), is cleared less efficiently. In addition to diffusive clearance, movement of waste products from the circulation into the dialysate may occur as a result of ultrafiltration. Convective clearance occurs because of solvent drag, with solutes being swept along with water across the semipermeable dialysis membrane.
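The factors listed above can be illustrated with a deliberately simplified lumped model in Python: diffusive transfer taken as mass transfer coefficient × membrane area × concentration gradient, and convective transfer as ultrafiltration rate × sieving coefficient × blood concentration. This is a teaching sketch, not a dialyzer model. The gradient is held fixed (in a real dialyzer it falls along the fiber), and the coefficient values are hypothetical, chosen only so that the larger molecule (creatinine) has the smaller mass transfer coefficient.

```python
from dataclasses import dataclass


@dataclass
class Solute:
    name: str
    k0_cm_per_min: float   # hypothetical mass transfer coefficient (lower for larger molecules)
    sieving_coeff: float   # fraction of blood concentration carried across with filtered water


def diffusive_rate(s: Solute, area_cm2: float, c_blood: float, c_dialysate: float) -> float:
    """Diffusive transfer in umol/min: K0 (cm/min) * A (cm^2) gives mL/min,
    multiplied by the concentration gradient in mmol/L."""
    return s.k0_cm_per_min * area_cm2 * (c_blood - c_dialysate)


def convective_rate(s: Solute, uf_ml_per_min: float, c_blood: float) -> float:
    """Convective (solvent-drag) transfer in umol/min."""
    return uf_ml_per_min * s.sieving_coeff * c_blood


urea = Solute("urea (60 Da)", k0_cm_per_min=0.05, sieving_coeff=1.0)
creatinine = Solute("creatinine (113 Da)", k0_cm_per_min=0.035, sieving_coeff=1.0)

for s in (urea, creatinine):
    # Same 1.5 m^2 membrane, same 1 mmol/L gradient, same 10 mL/min ultrafiltration
    d = diffusive_rate(s, area_cm2=15_000, c_blood=1.0, c_dialysate=0.0)
    c = convective_rate(s, uf_ml_per_min=10.0, c_blood=1.0)
    print(f"{s.name}: diffusive {d:.0f}, convective {c:.0f} umol/min")
```

With identical gradient and area, the hypothetical urea coefficient yields the larger diffusive rate, while the convective term is the same for both small solutes because solvent drag depends on water flux and sieving rather than on diffusivity.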

THE DIALYZER

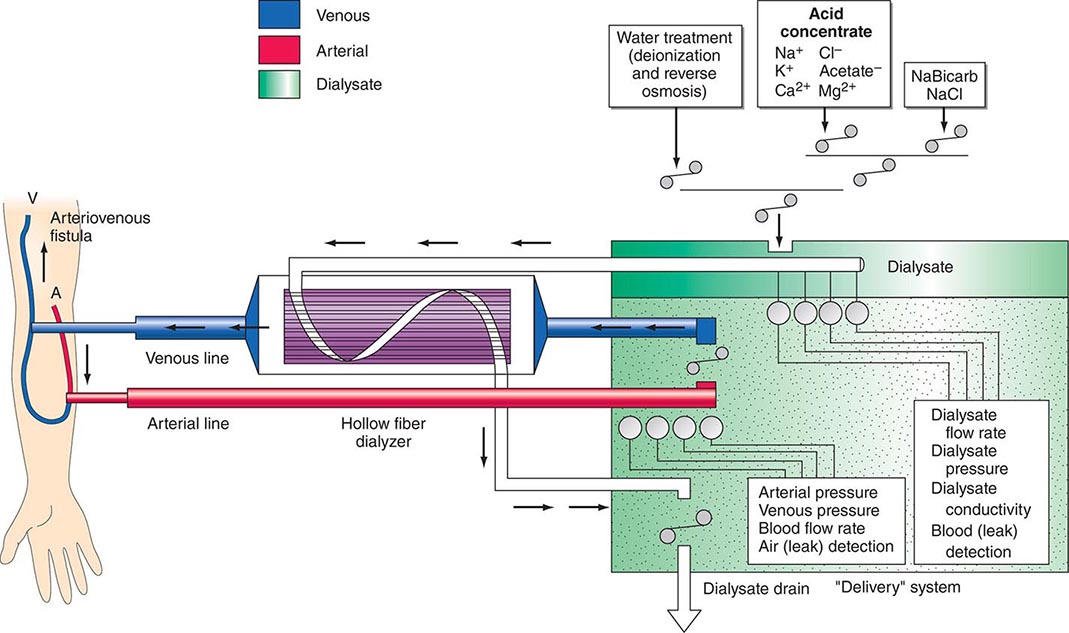

There are three essential components to hemodialysis: the dialyzer, the composition and delivery of the dialysate, and the blood delivery system (Fig. 336-1). The dialyzer is a plastic chamber with the ability to perfuse blood and dialysate compartments simultaneously at very high flow rates. The hollow-fiber dialyzer is the most commonly used type in the United States. These dialyzers are composed of bundles of capillary tubes through which blood circulates while dialysate travels on the outside of the fiber bundle. The majority of dialyzers now manufactured in the United States use “biocompatible” synthetic membranes derived from polysulfone or related compounds (versus older cellulose “bioincompatible” membranes that activated the complement cascade). The frequency of reprocessing and reuse of hemodialyzers and blood lines varies across the world. In general, as the cost of disposable supplies has decreased, their use has increased. Formaldehyde, peracetic acid–hydrogen peroxide, glutaraldehyde, and bleach have all been used as reprocessing agents.

FIGURE 336-1 Schema for hemodialysis. A, artery; V, vein.

DIALYSATE

The potassium concentration of dialysate may be varied from 0 to 4 mmol/L depending on the predialysis serum potassium concentration. The usual dialysate calcium concentration is 1.25 mmol/L (2.5 meq/L), although modification may be required in selected settings (e.g., higher dialysate calcium concentrations may be used in patients with hypocalcemia associated with secondary hyperparathyroidism or following parathyroidectomy). The usual dialysate sodium concentration is 136–140 mmol/L. In patients who frequently develop hypotension during their dialysis run, “sodium modeling” to counterbalance urea-related osmolar gradients is often used. With sodium modeling, the dialysate sodium concentration is gradually lowered from the range of 145–155 mmol/L to isotonic concentrations (136–140 mmol/L) near the end of the dialysis treatment, typically declining either in steps or in a linear or exponential fashion. Higher dialysate sodium concentrations and sodium modeling may predispose patients to positive sodium balance and increased thirst; thus, these strategies to ameliorate intradialytic hypotension may be undesirable in hypertensive patients or in patients with large interdialytic weight gains. Because patients are exposed to approximately 120 L of water during each dialysis treatment, water used for the dialysate is subjected to filtration, softening, deionization, and, ultimately, reverse osmosis to remove microbiologic contaminants and dissolved ions.
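The three sodium-modeling shapes described above (steps, linear, exponential) can be sketched in Python as a minute-by-minute dialysate sodium schedule. The function name, the number of steps, and the exponential rate constant `k` are hypothetical parameters, not settings of any particular dialysis machine.

```python
import math
from typing import List


def sodium_profile(start: float, end: float, minutes: int, shape: str = "linear",
                   steps: int = 3, k: float = 3.0) -> List[float]:
    """Dialysate sodium (mmol/L) for each minute 0..minutes, falling from start to end.

    shape: "step" (equal-length plateaus, steps >= 2), "linear", or "exponential"
    (steep early decline controlled by k, normalized to reach `end` exactly).
    """
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    profile = []
    for t in range(minutes + 1):
        frac = t / minutes  # fraction of the session elapsed
        if shape == "linear":
            w = frac
        elif shape == "step":
            if steps < 2:
                raise ValueError("steps must be >= 2")
            w = min(int(frac * steps), steps - 1) / (steps - 1)
        elif shape == "exponential":
            w = (1 - math.exp(-k * frac)) / (1 - math.exp(-k))
        else:
            raise ValueError(f"unknown shape: {shape!r}")
        profile.append(start - (start - end) * w)
    return profile


# 4-h session, 150 -> 138 mmol/L (within the ranges quoted above)
for shape in ("step", "linear", "exponential"):
    p = sodium_profile(150, 138, 240, shape)
    print(shape, [round(p[t], 1) for t in (0, 60, 120, 180, 240)])
```

For scale, at a dialysate flow of 500 mL/min a 240-minute session uses 500 × 240 = 120,000 mL, consistent with the approximately 120 L of water exposure cited above and the reason water treatment is so extensive.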

BLOOD DELIVERY SYSTEM

The blood delivery system is composed of the extracorporeal circuit and the dialysis access. The dialysis machine consists of a blood pump, dialysis solution delivery system, and various safety monitors. The blood pump moves blood from the access site, through the dialyzer, and back to the patient. The blood flow rate may range from 250 to 500 mL/min, depending on the type and integrity of the vascular access. Negative hydrostatic pressure on the dialysate side can be manipulated to achieve desirable fluid removal or ultrafiltration. Dialysis membranes have different ultrafiltration coefficients (i.e., mL removed/min per mmHg), so that, along with hydrostatic changes, fluid removal can be varied. The dialysis solution delivery system dilutes the concentrated dialysate with water and monitors the temperature, conductivity, and flow of dialysate.
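The relationship between the ultrafiltration coefficient and hydrostatic pressure can be sketched as a linear model in Python, using the units given above (mL removed per minute per mmHg). This is a simplification under stated assumptions: it treats the coefficient as constant, ignores plasma oncotic pressure, and uses hypothetical coefficient values.

```python
def required_tmp_mmhg(target_ml: float, session_min: float, kuf_ml_min_mmhg: float) -> float:
    """Transmembrane pressure (mmHg) needed to remove target_ml over session_min,
    assuming UF rate (mL/min) = Kuf * TMP with Kuf constant."""
    uf_rate_ml_min = target_ml / session_min
    return uf_rate_ml_min / kuf_ml_min_mmhg


def fluid_removed_ml(kuf_ml_min_mmhg: float, tmp_mmhg: float, session_min: float) -> float:
    """Volume removed at a constant TMP under the same linear assumption."""
    return kuf_ml_min_mmhg * tmp_mmhg * session_min


# Remove 2.4 L over 4 h with two hypothetical membranes
for kuf in (0.5, 0.25):
    print(f"Kuf {kuf} mL/min/mmHg -> TMP {required_tmp_mmhg(2400, 240, kuf):.0f} mmHg")
```

Under this model, halving the coefficient doubles the pressure needed for the same fluid removal, which is why membrane choice and applied pressure are adjusted together.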

DIALYSIS ACCESS

The fistula, graft, or catheter through which blood is obtained for hemodialysis is often referred to as a dialysis access. A native fistula created by the anastomosis of an artery to a vein (e.g., the Brescia-Cimino fistula, in which the cephalic vein is anastomosed end-to-side to the radial artery) results in arterialization of the vein. This facilitates its subsequent use in the placement of large needles (typically 15 gauge) to access the circulation. Although fistulas have the highest long-term patency rate of all dialysis access options, fistulas are created in a minority of patients in the United States. Many patients undergo placement of an arteriovenous graft (i.e., the interposition of prosthetic material, usually polytetrafluoroethylene, between an artery and a vein) or a tunneled dialysis catheter. In recent years, nephrologists, vascular surgeons, and health care policy makers in the United States have encouraged creation of arteriovenous fistulas in a larger fraction of patients (the “fistula first” initiative). Unfortunately, even when created, arteriovenous fistulas may not mature sufficiently to provide reliable access to the circulation, or they may thrombose early in their development.

Grafts and catheters tend to be used among persons with smaller-caliber veins or persons whose veins have been damaged by repeated venipuncture or prolonged hospitalization. The most important complication of arteriovenous grafts is thrombosis of the graft and graft failure, due principally to intimal hyperplasia at the anastomosis between the graft and recipient vein. When grafts (or fistulas) fail, catheter-guided angioplasty can be used to dilate stenoses; monitoring of venous pressures on dialysis and of access flow, although not routinely performed, may assist in the early recognition of impending vascular access failure. In addition to an increased rate of access failure, grafts and (in particular) catheters are associated with much higher rates of infection than fistulas.

Intravenous large-bore catheters are often used in patients with acute and chronic kidney disease. For persons on maintenance hemodialysis, tunneled catheters (either two separate catheters or a single catheter with two lumens) are often used when arteriovenous fistulas and grafts have failed or are not feasible due to anatomic considerations. These catheters are tunneled under the skin; the tunnel reduces bacterial translocation from the skin, resulting in a lower infection rate than with nontunneled temporary catheters. Most tunneled catheters are placed in the internal jugular veins; the external jugular, femoral, and subclavian veins may also be used.

Nephrologists, interventional radiologists, and vascular surgeons generally prefer to avoid placement of catheters into the subclavian veins; while flow rates are usually excellent, subclavian stenosis is a frequent complication and, if present, will likely prohibit permanent vascular access (i.e., a fistula or graft) in the ipsilateral extremity. Infection rates may be higher with femoral catheters. For patients with multiple vascular access complications and no other options for permanent vascular access, tunneled catheters may be the last “lifeline” for hemodialysis. Translumbar or transhepatic approaches into the inferior vena cava may be required if the superior vena cava or other central veins draining the upper extremities are stenosed or thrombosed.

GOALS OF DIALYSIS

The hemodialysis procedure consists of pumping heparinized blood through the dialyzer at a flow rate of 300–500 mL/min, while dialysate flows in the opposite (countercurrent) direction at 500–800 mL/min. The efficiency of dialysis is determined by blood and dialysate flow through the dialyzer as well as by dialyzer characteristics (i.e., its efficiency in removing solute). The dose of dialysis, which is currently defined as a derivation of the fractional urea clearance during a single treatment, is further governed by patient size, residual kidney function, dietary protein intake, the degree of anabolism or catabolism, and the presence of comorbid conditions.
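How blood flow, dialysate flow, and dialyzer characteristics combine can be illustrated with the classic countercurrent dialyzer clearance equation (often attributed to Michaels), which is not given in this chapter and is brought in here as an outside, standard model. It summarizes the dialyzer's intrinsic efficiency as a single mass transfer-area coefficient, KoA: K = Qb(e^x - 1)/(e^x - Qb/Qd), with x = (KoA/Qb)(1 - Qb/Qd). The Python sketch below ignores ultrafiltration and access recirculation, and the KoA value is hypothetical.

```python
import math


def countercurrent_clearance(qb: float, qd: float, koa: float) -> float:
    """Diffusive clearance (mL/min) of a countercurrent dialyzer.

    qb, qd: blood and dialysate flow (mL/min); koa: mass transfer-area
    coefficient (mL/min). Ignores ultrafiltration and access recirculation.
    """
    z = qb / qd
    if math.isclose(z, 1.0):
        # Limiting form when blood and dialysate flows are equal
        return qb * koa / (qb + koa)
    e = math.exp(koa / qb * (1 - z))
    return qb * (e - 1) / (e - z)


# Hypothetical KoA of 1000 mL/min; compare two dialysate flows
for qb in (300, 400, 500):
    k500 = countercurrent_clearance(qb, 500, 1000)
    k800 = countercurrent_clearance(qb, 800, 1000)
    print(f"Qb {qb}: K = {k500:.0f} (Qd 500), {k800:.0f} (Qd 800) mL/min")
```

In this model clearance rises with blood flow but always stays below it, and a higher dialysate flow or a dialyzer with a larger KoA increases it further; a larger solute behaves like a smaller KoA.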

Since the landmark studies of Sargent and Gotch relating the measurement of the dose of dialysis using urea concentrations to morbidity in the National Cooperative Dialysis Study, the delivered dose of dialysis has been measured and used as a quality assurance and improvement tool. Although the fractional removal of urea nitrogen and derivations thereof are considered the standard methods by which “adequacy of dialysis” is measured, a large multicenter randomized clinical trial (the HEMO Study) failed to show a difference in mortality associated with a large difference in urea clearance. Current targets include a urea reduction ratio (the fractional reduction in blood urea nitrogen per hemodialysis session) of >65–70% and a body water–indexed clearance × time product (Kt/V) above 1.2 or 1.05, depending on whether urea concentrations are “equilibrated.” For the majority of patients with ESRD, between 9 and 12 h of dialysis are required each week, usually divided into three equal sessions. Several studies have suggested that longer hemodialysis sessions may be beneficial (independent of urea clearance), although these studies are confounded by a variety of patient characteristics, including body size and nutritional status. Hemodialysis “dose” should be individualized, and factors other than urea clearance should be considered, including the adequacy of ultrafiltration or fluid removal and control of hyperkalemia, hyperphosphatemia, and metabolic acidosis.

A recent randomized clinical trial (the Frequent Hemodialysis Network Trial) demonstrated improved control of hypertension and hyperphosphatemia, reduced left ventricular mass, and improved self-reported physical health with hemodialysis six times per week compared with the usual three-times-per-week therapy. A companion trial comparing frequent nocturnal hemodialysis with conventional hemodialysis at home showed no significant effect on left ventricular mass or self-reported physical health. Finally, an analysis of the U.S. Renal Data System registry showed a significant increase in mortality and hospitalization for heart failure after the longer interdialytic interval that occurs over the dialysis “weekend.”
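The two urea-based adequacy targets for hemodialysis can be computed from pre- and post-dialysis blood urea nitrogen. The Python sketch below computes the urea reduction ratio directly and estimates single-pool Kt/V with the second-generation Daugirdas formula, a widely used bedside estimate that is not described in this chapter and is brought in as an outside assumption; it does not compute the equilibrated value. All patient values are hypothetical.

```python
import math


def urea_reduction_ratio(pre_bun: float, post_bun: float) -> float:
    """Fractional fall in blood urea nitrogen during one session (0 to 1)."""
    return 1 - post_bun / pre_bun


def sp_ktv_daugirdas(pre_bun: float, post_bun: float, hours: float,
                     uf_liters: float, post_weight_kg: float) -> float:
    """Single-pool Kt/V, second-generation Daugirdas estimate:
    -ln(R - 0.008 * t) + (4 - 3.5 * R) * UF / W, where R = post/pre BUN,
    t = session length (h), UF = fluid removed (L), W = post-dialysis weight (kg)."""
    r = post_bun / pre_bun
    return -math.log(r - 0.008 * hours) + (4 - 3.5 * r) * uf_liters / post_weight_kg


def meets_targets(urr: float, sp_ktv: float, urr_min: float = 0.65, ktv_min: float = 1.2) -> bool:
    """Compare with the lower ends of the quoted targets (non-equilibrated Kt/V)."""
    return urr >= urr_min and sp_ktv >= ktv_min


# Hypothetical session: BUN 70 -> 21 mg/dL, 4 h, 3 L removed, 70 kg after dialysis
urr = urea_reduction_ratio(70, 21)
ktv = sp_ktv_daugirdas(70, 21, hours=4, uf_liters=3, post_weight_kg=70)
print(f"URR {urr:.0%}, spKt/V {ktv:.2f}, targets met: {meets_targets(urr, ktv)}")
# URR 70%, spKt/V 1.44, targets met: True
```

The second term of the formula credits the urea removed with ultrafiltered fluid, which is why spKt/V here (about 1.44) is somewhat higher than -ln(R) alone would suggest.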

COMPLICATIONS DURING HEMODIALYSIS

Hypotension is the most common acute complication of hemodialysis, particularly among patients with diabetes mellitus. Numerous factors appear to increase the risk of hypotension, including excessive ultrafiltration with inadequate compensatory vascular filling, impaired vasoactive or autonomic responses, osmolar shifts, overzealous use of antihypertensive agents, and reduced cardiac reserve. Patients with arteriovenous fistulas and grafts may develop high-output cardiac failure due to shunting of blood through the dialysis access; on rare occasions, this may necessitate ligation of the fistula or graft. Because of the vasodilatory and cardiodepressive effects of acetate, its use as the buffer in dialysate was once a common cause of hypotension. Since the introduction of bicarbonate-containing dialysate, dialysis-associated hypotension has become less common. The management of hypotension during dialysis consists of discontinuing ultrafiltration, administering 100–250 mL of isotonic saline or 10 mL of 23% saturated hypertonic saline, or administering salt-poor albumin. Hypotension during dialysis can frequently be prevented by careful evaluation of the dry weight and by ultrafiltration modeling, such that more fluid is removed at the beginning rather than the end of the dialysis procedure. Additional maneuvers include the performance of sequential ultrafiltration followed by dialysis, cooling of the dialysate during dialysis treatment, and avoiding heavy meals during dialysis. Midodrine, an oral selective α1-adrenergic agonist, has been advocated by some practitioners, although there is insufficient evidence of its safety and efficacy to support its routine use.
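Ultrafiltration modeling that removes more fluid early in the session can be sketched in Python as a linearly declining hourly schedule. The linear shape and the default 2:1 ratio between the first and last hour are hypothetical choices for illustration, not a recommended prescription.

```python
from typing import List


def front_loaded_uf_profile(total_ml: float, hours: int, ratio: float = 2.0) -> List[float]:
    """Hourly ultrafiltration volumes (mL) that decline linearly, with the first
    hour removing `ratio` times as much as the last; volumes sum to total_ml."""
    if hours < 1 or ratio < 1:
        raise ValueError("need hours >= 1 and ratio >= 1")
    if hours == 1:
        return [float(total_ml)]
    # Linear weights from `ratio` down to 1, then scaled to the requested total
    weights = [ratio - (ratio - 1) * i / (hours - 1) for i in range(hours)]
    scale = total_ml / sum(weights)
    return [w * scale for w in weights]


print([round(v) for v in front_loaded_uf_profile(3000, 4)])  # [1000, 833, 667, 500]
```

The total removed is unchanged; only its distribution shifts toward the start of the session, when the plasma volume is most expanded.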

Muscle cramps during dialysis are also a common complication. The etiology of dialysis-associated cramps remains obscure. Changes in muscle perfusion because of excessively rapid volume removal (e.g., >10–12 mL/kg per hour) or targeted removal below the patient’s estimated dry weight often precipitate dialysis-associated cramps. Strategies that may be used to prevent cramps include reducing volume removal during dialysis, ultrafiltration profiling, and the use of sodium modeling (see above).
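The volume-removal threshold above translates into simple arithmetic. This Python sketch checks an ultrafiltration plan against the lower end of the quoted range (10 mL/kg per hour) and computes the session length that would keep the average rate at or below it; the function names and patient values are hypothetical.

```python
def uf_rate_ml_kg_h(uf_ml: float, weight_kg: float, hours: float) -> float:
    """Average ultrafiltration rate in mL/kg per hour."""
    return uf_ml / weight_kg / hours


def exceeds_threshold(uf_ml: float, weight_kg: float, hours: float, threshold: float = 10.0) -> bool:
    return uf_rate_ml_kg_h(uf_ml, weight_kg, hours) > threshold


def min_hours_for_threshold(uf_ml: float, weight_kg: float, threshold: float = 10.0) -> float:
    """Shortest session that keeps the average rate at or below the threshold."""
    return uf_ml / (weight_kg * threshold)


# 3.5 L to remove from a 70-kg patient
print(uf_rate_ml_kg_h(3500, 70, 4))       # 12.5
print(exceeds_threshold(3500, 70, 4))     # True
print(min_hours_for_threshold(3500, 70))  # 5.0
```

Either reducing the volume removed or lengthening the session brings the average below the threshold; note that an average rate can still hide faster removal during parts of the session.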

Anaphylactoid reactions to the dialyzer, particularly on its first use, have been reported most frequently with the bioincompatible cellulosic-containing membranes. Dialyzer reactions can be divided into two types, A and B. Type A reactions are attributed to an IgE-mediated immediate hypersensitivity reaction to ethylene oxide used in the sterilization of new dialyzers. This reaction typically occurs soon after the initiation of a treatment (within the first few minutes) and can progress to full-blown anaphylaxis if the therapy is not promptly discontinued. Treatment with steroids or epinephrine may be needed if symptoms are severe. The type B reaction consists of a symptom complex of nonspecific chest and back pain, which appears to result from complement activation and cytokine release. These symptoms typically occur several minutes into the dialysis run and usually resolve with continued dialysis.

PERITONEAL DIALYSIS

In peritoneal dialysis, 1.5–3 L of a dextrose-containing solution is infused into the peritoneal cavity and allowed to dwell for a set period of time, usually 2–4 h. As with hemodialysis, toxic materials are removed through a combination of convective clearance generated through ultrafiltration and diffusive clearance down a concentration gradient. The clearance of solutes and water during a peritoneal dialysis exchange depends on the balance between the movement of solute and water into the peritoneal cavity versus absorption from the peritoneal cavity. The rate of diffusion diminishes with time and eventually stops when equilibration between plasma and dialysate is reached. Absorption of solutes and water from the peritoneal cavity occurs across the peritoneal membrane into the peritoneal capillary circulation and via peritoneal lymphatics into the lymphatic circulation. The rate of peritoneal solute transport varies from patient to patient and may be altered by the presence of infection (peritonitis), drugs, and physical factors such as position and exercise.
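The statement that diffusion slows and eventually stops as dialysate approaches plasma concentration can be illustrated with a single-compartment exponential model in Python. This is a simplifying assumption: it ignores ultrafiltration and absorption from the peritoneal cavity, holds the plasma concentration constant, and uses a hypothetical mass transfer-area coefficient (MTAC) and fill volume.

```python
import math


def dp_ratio(t_min: float, mtac_ml_min: float, fill_ml: float) -> float:
    """Dialysate-to-plasma concentration ratio after t_min minutes of dwell,
    starting from solute-free dialysate: D/P = 1 - exp(-MTAC * t / V)."""
    return 1 - math.exp(-mtac_ml_min * t_min / fill_ml)


def diffusion_rate(t_min: float, mtac_ml_min: float, fill_ml: float, plasma: float) -> float:
    """Instantaneous diffusive transfer (umol/min if plasma is in mmol/L),
    proportional to the remaining gradient P - D."""
    return mtac_ml_min * plasma * (1 - dp_ratio(t_min, mtac_ml_min, fill_ml))


# Hypothetical MTAC of 10 mL/min and a 2-L fill
for t in (0, 60, 120, 240, 480):
    print(t, round(dp_ratio(t, mtac_ml_min=10, fill_ml=2000), 2),
          round(diffusion_rate(t, 10, 2000, plasma=1.0), 2))
```

Each additional hour of dwell adds less solute than the one before, which is the rationale for shorter, more frequent exchanges when rapid equilibration occurs.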

FORMS OF PERITONEAL DIALYSIS

Peritoneal dialysis may be carried out as CAPD, CCPD, or a combination of both. In CAPD, dialysate is manually infused into the peritoneal cavity and exchanged three to five times during the day. A nighttime dwell is frequently instilled at bedtime and remains in the peritoneal cavity through the night. In CCPD, exchanges are performed in an automated fashion, usually at night; the patient is connected to an automated cycler that performs a series of exchange cycles while the patient sleeps. The number of exchange cycles required to optimize peritoneal solute clearance varies by the peritoneal membrane characteristics; as with hemodialysis, solute clearance should be tracked to ensure dialysis “adequacy.”

Peritoneal dialysis solutions are available in volumes typically ranging from 1.5 to 3 L. The major difference between the dialysate used for peritoneal dialysis and that used for hemodialysis is that the hypertonicity of peritoneal dialysis solutions drives solute and fluid removal, whereas in hemodialysis solute removal depends on concentration gradients and fluid removal requires transmembrane pressure. Typically, dextrose at varying concentrations contributes to the hypertonicity of peritoneal dialysate. Icodextrin is a nonabsorbable carbohydrate that can be used in place of dextrose. Studies have demonstrated more efficient ultrafiltration with icodextrin than with dextrose-containing solutions. Icodextrin is typically used as the “last fill” for patients on CCPD or for the longest dwell in patients on CAPD. The most common additives to peritoneal dialysis solutions are heparin, to prevent obstruction of the dialysis catheter lumen with fibrin, and antibiotics during an episode of acute peritonitis. Insulin may also be added in patients with diabetes mellitus.

ACCESS TO THE PERITONEAL CAVITY

Access to the peritoneal cavity is obtained through a peritoneal catheter. Catheters used for maintenance peritoneal dialysis are flexible, being made of silicone rubber with numerous side holes at the distal end. These catheters usually have two Dacron cuffs. The scarring that occurs around the cuffs anchors the catheter and seals it from bacteria tracking from the skin surface into the peritoneal cavity; it also prevents the external leakage of fluid from the peritoneal cavity. The cuffs are placed in the preperitoneal plane and ~2 cm from the skin surface.

The peritoneal equilibrium test is a formal evaluation of peritoneal membrane characteristics that measures the transfer rates of creatinine and glucose across the peritoneal membrane. Patients are classified as low, low–average, high–average, and high transporters. Patients with rapid equilibration (i.e., high transporters) tend to absorb more glucose and lose efficiency of ultrafiltration with long daytime dwells. High transporters also tend to lose larger quantities of albumin and other proteins across the peritoneal membrane. In general, patients with rapid transporting characteristics require more frequent, shorter dwell time exchanges, nearly always obligating use of a cycler. Slower (low and low–average) transporters tend to do well with fewer exchanges. The efficiency of solute clearance also depends on the volume of dialysate infused. Larger volumes allow for greater solute clearance, particularly with CAPD in patients with low and low–average transport characteristics.
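The four transporter categories are conventionally assigned from the 4-hour dialysate-to-plasma (D/P) creatinine ratio of the peritoneal equilibrium test. The cut points in this Python sketch (0.81, 0.65, 0.50) are commonly cited values from the original description of the test and are an outside assumption here; published boundaries vary slightly, so treat them as illustrative. The dwell notes only restate the tendencies described above.

```python
# Assumed 4-h D/P creatinine cut points, highest category first (illustrative)
PET_CUTOFFS = [(0.81, "high"), (0.65, "high-average"), (0.50, "low-average")]

# Restates the tendencies described in the text
DWELL_NOTE = {
    "high": "more frequent, shorter dwells; nearly always a cycler",
    "high-average": "not specified in the text",
    "low-average": "tends to do well with fewer exchanges",
    "low": "tends to do well with fewer exchanges",
}


def dp_creatinine(dialysate_creatinine: float, plasma_creatinine: float) -> float:
    return dialysate_creatinine / plasma_creatinine


def classify_transporter(dp_creat_4h: float) -> str:
    for cutoff, label in PET_CUTOFFS:
        if dp_creat_4h >= cutoff:
            return label
    return "low"


category = classify_transporter(dp_creatinine(6.3, 9.0))  # hypothetical mg/dL values
print(category, "->", DWELL_NOTE[category])  # high-average -> not specified in the text
```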

As with hemodialysis, the optimal dose of peritoneal dialysis is unknown. Several observational studies have suggested that higher rates of urea and creatinine clearance (the latter generally measured in liters per week) are associated with lower mortality rates and fewer uremic complications. However, a randomized clinical trial (Adequacy of Peritoneal Dialysis in Mexico [ADEMEX]) failed to show a significant reduction in mortality or complications with a relatively large increment in urea clearance. In general, patients on peritoneal dialysis do well when they retain residual kidney function. The rates of technique failure increase with years on dialysis and have been correlated with loss of residual function to a greater extent than loss of peritoneal membrane capacity. For some patients in whom CCPD does not provide sufficient solute clearance, a hybrid approach can be adopted where one or more daytime exchanges are added to the CCPD regimen. Although this approach can enhance solute clearance and prolong a patient’s capacity to remain on peritoneal dialysis, the burden of the hybrid approach can be overwhelming.
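Peritoneal dialysis dose is commonly expressed as a weekly urea Kt/V: the daily volume of plasma cleared of urea (drained volume × D/P urea for each exchange, plus any residual renal urea clearance), multiplied by 7 and divided by the urea distribution volume V. The Python sketch below follows that convention, which is not spelled out in this chapter; all exchange volumes, ratios, and V are hypothetical. It shows how residual function and an added daytime exchange (the hybrid approach) each raise the total, and it implies no target, since the optimal dose is unknown.

```python
from typing import List, Tuple


def peritoneal_daily_kt(exchanges: List[Tuple[float, float]]) -> float:
    """Liters of plasma cleared of urea per day: sum of drained volume (L) * D/P urea."""
    return sum(drain_l * dp_urea for drain_l, dp_urea in exchanges)


def renal_daily_kt(urine_l: float, urine_urea: float, plasma_urea: float) -> float:
    """Residual renal urea clearance in liters per day: urine volume * U/P urea."""
    return urine_l * urine_urea / plasma_urea


def weekly_ktv(daily_kt_l: float, v_l: float) -> float:
    return 7 * daily_kt_l / v_l


V = 40.0  # hypothetical urea distribution volume (L)
ccpd = [(2.2, 0.75)] * 4 + [(2.0, 0.95)]  # night cycles plus a long daytime "last fill"
hybrid = ccpd + [(2.0, 0.9)]              # one added daytime exchange
residual = renal_daily_kt(0.5, 300, 60)   # 0.5 L urine/day, U/P urea = 5

for label, ex in (("CCPD", ccpd), ("hybrid", hybrid)):
    pd_only = weekly_ktv(peritoneal_daily_kt(ex), V)
    total = pd_only + weekly_ktv(residual, V)
    print(f"{label}: peritoneal {pd_only:.2f}, with residual function {total:.2f}")
```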

COMPLICATIONS DURING PERITONEAL DIALYSIS

The major complications of peritoneal dialysis are peritonitis, catheter-associated nonperitonitis infections, weight gain and other metabolic disturbances, and residual uremia (especially among patients with no residual kidney function).

Peritonitis typically develops when there has been a break in sterile technique during one or more of the exchange procedures. Peritonitis is usually defined by an elevated peritoneal fluid leukocyte count (>100/μL, of which at least 50% are polymorphonuclear neutrophils); these cutoffs are lower than in spontaneous bacterial peritonitis because of the presence of dextrose in peritoneal dialysis solutions and rapid bacterial proliferation in this environment without antibiotic therapy. The clinical presentation typically consists of pain and cloudy dialysate, often with fever and other constitutional symptoms. The most common culprit organisms are gram-positive cocci, including Staphylococcus, reflecting the origin from the skin. Gram-negative rod infections are less common; fungal and mycobacterial infections can be seen in selected patients, particularly after antibacterial therapy. Most cases of peritonitis can be managed with either intraperitoneal or oral antibiotics, depending on the organism; many patients with peritonitis do not require hospitalization. In cases where peritonitis is due to hydrophilic gram-negative rods (e.g., Pseudomonas sp.) or yeast, antimicrobial therapy is usually not sufficient, and catheter removal is required to ensure complete eradication of infection. Nonperitonitis catheter-associated infections (often termed tunnel infections) vary widely in severity. Some cases can be managed with local antibiotic or silver nitrate administration, whereas others are severe enough to require parenteral antibiotic therapy and catheter removal.
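The effluent cell-count criterion for peritonitis reduces to a two-part check. This Python sketch encodes only that criterion (more than 100 leukocytes/μL, at least 50% polymorphonuclear neutrophils); the function name is hypothetical, and in practice the count is interpreted together with the clinical presentation and culture results.

```python
def meets_effluent_cell_criterion(wbc_per_ul: float, pmn_fraction: float) -> bool:
    """True when the peritoneal effluent leukocyte count exceeds 100/uL and
    polymorphonuclear neutrophils make up at least half of the cells."""
    if not 0.0 <= pmn_fraction <= 1.0:
        raise ValueError("pmn_fraction must be between 0 and 1")
    return wbc_per_ul > 100 and pmn_fraction >= 0.5


print(meets_effluent_cell_criterion(450, 0.8))  # True
print(meets_effluent_cell_criterion(450, 0.3))  # False: mostly non-neutrophils
print(meets_effluent_cell_criterion(80, 0.9))   # False: count not above 100/uL
```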

Peritoneal dialysis is associated with a variety of metabolic complications. Albumin and other proteins can be lost across the peritoneal membrane in concert with the loss of metabolic wastes. Hypoproteinemia obligates a higher dietary protein intake in order to maintain nitrogen balance. Hyperglycemia and weight gain are also common complications of peritoneal dialysis. Several hundred calories in the form of dextrose are absorbed each day, depending on the concentration employed. Peritoneal dialysis patients, particularly those with diabetes mellitus, are then prone to other complications of insulin resistance, including hypertriglyceridemia. On the positive side, the continuous nature of peritoneal dialysis usually allows for a more liberal diet, due to continuous removal of potassium and phosphorus—two major dietary components whose accumulation can be hazardous in ESRD.
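The “several hundred calories” figure can be reproduced with back-of-the-envelope arithmetic. This Python sketch assumes dextrose concentrations expressed as % weight/volume (so each 1% is 10 g/L), an energy content of 3.4 kcal/g for dextrose monohydrate, and a hypothetical absorbed fraction of 60%; real absorption varies, being greater in high transporters, as noted above.

```python
from typing import List, Tuple

KCAL_PER_G = 3.4  # assumed energy content of dextrose monohydrate


def absorbed_dextrose_kcal(exchanges: List[Tuple[float, float]], absorbed_fraction: float = 0.6) -> float:
    """Estimated daily kcal absorbed from (volume_L, dextrose_percent) exchanges.

    A concentration of p% w/v contains p * 10 g of dextrose per liter.
    absorbed_fraction is a hypothetical average, not a measured value."""
    grams_instilled = sum(vol_l * pct * 10 for vol_l, pct in exchanges)
    return grams_instilled * absorbed_fraction * KCAL_PER_G


# Hypothetical CAPD day: four 2-L exchanges of 2.5% dextrose
print(round(absorbed_dextrose_kcal([(2.0, 2.5)] * 4)))  # 408
```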

LONG-TERM OUTCOMES IN ESRD

Cardiovascular disease constitutes the major cause of death in patients with ESRD. Cardiovascular mortality and event rates are higher in dialysis patients than in patients after transplantation, although rates are extraordinarily high in both populations. The underlying cause of cardiovascular disease is unclear but may be related to shared risk factors (e.g., diabetes mellitus, hypertension, atherosclerotic and arteriosclerotic vascular disease), chronic inflammation, massive changes in extracellular volume (especially with high interdialytic weight gains), inadequate treatment of hypertension, dyslipidemia, anemia, dystrophic vascular calcification, hyperhomocysteinemia, and, perhaps, alterations in cardiovascular dynamics during the dialysis treatment. Few studies have targeted cardiovascular risk reduction in ESRD patients; none have demonstrated consistent benefit. Two clinical trials of statin agents in ESRD demonstrated significant reductions in low-density lipoprotein (LDL) cholesterol concentrations, but no significant reductions in death or cardiovascular events (Die Deutsche Diabetes Dialyse Studie [4D] and A Study to Evaluate the Use of Rosuvastatin in Subjects on Regular Hemodialysis [AURORA]). The Study of Heart and Renal Protection (SHARP), which included patients with both dialysis-requiring and non-dialysis-requiring CKD, showed a 17% reduction in the rate of major cardiovascular events or cardiovascular death with simvastatin-ezetimibe treatment. Most experts recommend conventional cardioprotective strategies (e.g., lipid-lowering agents, aspirin, inhibitors of the renin-angiotensin-aldosterone system, and β-adrenergic antagonists) in dialysis patients based on the patients’ cardiovascular risk profile, which appears to be increased by more than an order of magnitude relative to persons unaffected by kidney disease.
Other complications of ESRD include a high incidence of infection, progressive debility and frailty, protein-energy malnutrition, and impaired cognitive function.

GLOBAL PERSPECTIVE

The incidence of ESRD is increasing worldwide with longer life expectancies and improved care of infectious and cardiovascular diseases. The management of ESRD varies widely by country and within country by region, and it is influenced by economic and other major factors. In general, peritoneal dialysis is more commonly performed in poorer countries owing to its lower expense and the high cost of establishing in-center hemodialysis units.

337

Transplantation in the Treatment of Renal Failure

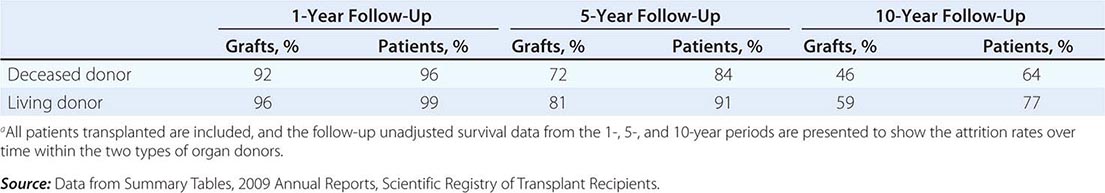

Transplantation of the human kidney is the treatment of choice for advanced chronic renal failure. Worldwide, tens of thousands of these procedures have been performed, with more than 180,000 patients bearing functioning kidney transplants in the United States today. When azathioprine and prednisone initially were used as immunosuppressive drugs in the 1960s, the results with properly matched familial donors were superior to those with organs from deceased donors: 75–90% compared with 50–60% graft survival rates at 1 year. During the 1970s and 1980s, the success rate at the 1-year mark for deceased-donor transplants rose progressively. Currently, deceased-donor grafts have a 92% 1-year survival and living-donor grafts have a 96% 1-year survival. Although there has been improvement in long-term survival, it has not been as impressive as the short-term survival, and currently the “average” (t½) life expectancy of a living-donor graft is around 20 years and that of a deceased-donor graft is close to 14 years.
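To see what the quoted graft half-lives imply, this Python sketch treats t½ as the half-life of a simple exponential decay (a constant rate of graft loss). That is an illustrative assumption, not how graft survival actually behaves: real survival curves are not exponential, and the sketch ignores the 1-year survival figures quoted above.

```python
import math


def surviving_fraction(years: float, half_life_years: float) -> float:
    """Fraction of grafts still functioning after `years` under exponential decay."""
    return 0.5 ** (years / half_life_years)


def annual_loss_rate(half_life_years: float) -> float:
    """Constant hazard (per year) implied by the half-life: ln 2 / t_half."""
    return math.log(2) / half_life_years


for label, t_half in (("living donor", 20), ("deceased donor", 14)):
    print(f"{label}: {surviving_fraction(10, t_half):.0%} at 10 y, "
          f"hazard {annual_loss_rate(t_half):.3f}/y")
```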

Mortality rates after transplantation are highest in the first year and are age-related: 2% for ages 18–34 years, 3% for ages 35–49 years, and 6.8% for ages ≥50–60 years. These rates compare favorably with those in the chronic dialysis population even after risk adjustments for age, diabetes, and cardiovascular status. Although the loss of kidney transplant due to acute rejection is currently rare, most allografts succumb at varying rates to a chronic process consisting of interstitial fibrosis, tubular atrophy, vasculopathy, and glomerulopathy, the pathogenesis of which is incompletely understood. Overall, transplantation returns most patients to an improved lifestyle and an improved life expectancy compared with patients on dialysis.

RECENT ACTIVITY AND RESULTS

In 2011, there were 11,835 deceased-donor kidney transplants and 5772 living-donor transplants in the United States, and the ratio of deceased to living donors has remained stable over the last few years. The backlog of patients with end-stage renal disease (ESRD) awaiting a kidney grows every year, and the number of available donors always lags behind it. As the number of patients with end-stage kidney disease increases, the demand for kidney transplants continues to rise. In 2011, there were 55,371 active adult candidates on the waiting list, and fewer than 18,000 patients received a transplant. This imbalance is expected to worsen over the coming years with the predicted increase in rates of obesity and diabetes worldwide. In an attempt to increase utilization of deceased-donor kidneys and reduce organ discard rates, criteria have been developed for the use of so-called expanded criteria donor (ECD) kidneys and kidneys from donors after cardiac death (DCD) (Table 337-1). ECD kidneys are usually used for older patients, who are expected to fare less well on dialysis.
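The 2011 figures above can be checked with simple arithmetic. This short sketch (variable names are illustrative) sums the two transplant categories and compares the total with the number of active adult candidates; treating the waiting list as a single snapshot is an approximation.

```python
# 2011 U.S. figures quoted in the text.
DECEASED_DONOR_TX = 11_835
LIVING_DONOR_TX = 5_772
ACTIVE_ADULT_CANDIDATES = 55_371

total_tx = DECEASED_DONOR_TX + LIVING_DONOR_TX
deceased_share = DECEASED_DONOR_TX / total_tx
candidates_per_tx = ACTIVE_ADULT_CANDIDATES / total_tx

print(f"Total transplants: {total_tx}")
print(f"Deceased-donor share: {deceased_share:.0%}")
print(f"Active candidates per transplant: {candidates_per_tx:.1f}")
```

The total of 17,607 transplants is consistent with the statement that fewer than 18,000 patients were transplanted, and it corresponds to roughly three active adult candidates for every kidney transplanted that year.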

TABLE 337-1 DEFINITION OF AN EXPANDED CRITERIA DONOR AND A NON-HEART-BEATING DONOR (DONATION AFTER CARDIAC DEATH)

ᵃKidneys can be used for transplantation from categories II–V but are commonly used only from categories III and IV. The survival of these kidneys has not been shown to be inferior to that of other deceased-donor kidneys.

Note: Kidneys can be both ECD and DCD. ECD kidneys have been shown to have poorer survival, and there is a separate, shorter waiting list for ECD kidneys. They are generally used for patients for whom the benefits of earlier transplantation outweigh the associated risks of using an ECD kidney.

The overall results of transplantation are presented in Table 337-2 as the survival of grafts and of patients. At the 1-year mark, graft survival is higher for living-donor recipients, most likely because those grafts are not subject to as much ischemic injury. The more effective drugs now in use for immunosuppression have almost equalized the risk of graft rejection in all patients for the first year. At 5 and 10 years, however, there has been a steeper decline in survival of those with deceased-donor kidneys.

TABLE 337-2 MEAN RATES OF GRAFT AND PATIENT SURVIVAL FOR KIDNEYS TRANSPLANTED IN THE UNITED STATES FROM 1998 TO 2008ᵃ

RECIPIENT SELECTION

There are few absolute contraindications to renal transplantation. The transplant procedure is relatively noninvasive, as the organ is placed in the iliac fossa without entering the peritoneal cavity. Recipients without perioperative complications often can be discharged from the hospital in excellent condition within 5 days of the operation.

Virtually all patients with ESRD who receive a transplant have a higher life expectancy than do risk-matched patients who remain on dialysis. Even though diabetic patients and older candidates have a higher mortality rate than other transplant recipients, their survival is improved with transplantation compared with remaining on dialysis. This global benefit of transplantation as a treatment modality poses substantial ethical issues for policy makers, because the number of deceased-donor kidneys available is far from sufficient to meet the current needs of candidates. The current standard of care is that a candidate should have a life expectancy of >5 years to be placed on a deceased-donor waiting list, and the same standard applies to living donation. This standard has been established because the benefits of kidney transplantation over dialysis are realized only after a perioperative period in which the mortality rate is higher in transplanted patients than in dialysis patients with comparable risk profiles.

All candidates must have a thorough risk-versus-benefit evaluation before being approved for transplantation. In particular, an aggressive approach to diagnosis of correctable coronary artery disease, presence of latent or indolent infection (HIV, hepatitis B or C, tuberculosis), and neoplasm should be a routine part of the candidate workup. Most transplant centers consider overt AIDS and active hepatitis absolute contraindications to transplantation because of the high risk of opportunistic infection. Some centers are now transplanting individuals with hepatitis and even HIV infection under strict protocols to determine whether the risks and benefits favor transplantation over dialysis.

Among the few absolute “immunologic” contraindications to transplantation is the presence of antibodies against the donor kidney at the time of the anticipated transplant that can cause hyperacute rejection. Those harmful antibodies include natural antibodies against the ABO blood group antigens and antibodies against human leukocyte antigen (HLA) class I (A, B, C) or class II (DR) antigens. These antibodies are routinely excluded by proper screening of the candidate’s ABO compatibility and direct cytotoxic cross-matching of candidate serum with lymphocytes of the donor.
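The two screening rules described above can be expressed as a simple check. This is a hypothetical sketch (the function names are illustrative): it uses the standard ABO rule that a recipient's natural antibodies target the A and B antigens the recipient lacks, and it ignores refinements such as A2 subgroups and desensitization protocols.

```python
# ABO antigens carried by each blood group.
ABO_ANTIGENS = {
    "O": frozenset(),
    "A": frozenset({"A"}),
    "B": frozenset({"B"}),
    "AB": frozenset({"A", "B"}),
}


def abo_compatible(donor_group: str, recipient_group: str) -> bool:
    """True if the donor carries no ABO antigen that the recipient lacks,
    i.e., none that the recipient's natural antibodies would target."""
    return ABO_ANTIGENS[donor_group] <= ABO_ANTIGENS[recipient_group]


def absolute_immunologic_contraindication(donor_group: str, recipient_group: str,
                                          cytotoxic_crossmatch_positive: bool) -> bool:
    """Either ABO incompatibility or a positive cytotoxic cross-match."""
    return cytotoxic_crossmatch_positive or not abo_compatible(donor_group, recipient_group)


print(abo_compatible("O", "A"))                                 # True
print(absolute_immunologic_contraindication("A", "O", False))   # True
```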

TISSUE TYPING AND CLINICAL IMMUNOGENETICS

Matching for antigens of the HLA major histocompatibility gene complex (Chap. 373e) is an important criterion for selection of donors for renal allografts. Each mammalian species has a single chromosomal region that encodes the strong, or major, transplantation antigens, and this region on the human sixth chromosome is called HLA. HLA antigens have been classically defined by serologic techniques, but methods to define specific nucleotide sequences in genomic DNA are increasingly being used. Other “minor” antigens may play crucial roles, in addition to the ABH(O) blood groups and endothelial antigens that are not shared with lymphocytes. The Rh system is not expressed on graft tissue. Evidence for designation of HLA as the genetic region that encodes major transplantation antigens comes from the success rate in living related donor renal and bone marrow transplantation, with superior results in HLA-identical sibling pairs. Nevertheless, 5% of HLA-identical renal allografts are rejected, often within the first weeks after transplantation. These failures represent states of prior sensitization to non-HLA antigens. Non-HLA minor antigens are relatively weak when initially encountered and are, therefore, suppressible by conventional immunosuppressive therapy. Once priming has occurred, however, secondary responses are much more refractory to treatment.

DONOR SELECTION

Donors can be deceased or volunteer living donors. When first-degree relatives are donors, graft survival rates at 1 year are 5–7% greater than those for deceased-donor grafts. The 5-year survival rates still favor a partially matched (3/6 HLA mismatched) family donor over a randomly selected cadaver donor. In addition, living donors provide the advantage of immediate availability. For both living and deceased donors, the 5-year outcomes are poor if there is a complete (6/6) HLA mismatch.
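The mismatch grades mentioned above (for example, 3/6 or 6/6) count donor antigens at the HLA-A, -B, and -DR loci that the recipient lacks. The sketch below assumes that convention and serologic-level antigen names; the function name and the antigen assignments are made up for illustration.

```python
LOCI = ("A", "B", "DR")


def hla_mismatches(donor: dict, recipient: dict) -> int:
    """Count donor HLA-A, -B, and -DR antigens absent from the recipient (0-6).
    Each locus contributes at most 2 mismatches; a donor homozygous at a
    locus (same antigen listed twice) contributes at most 1 there."""
    total = 0
    for locus in LOCI:
        donor_antigens = set(donor[locus])
        recipient_antigens = set(recipient[locus])
        total += len(donor_antigens - recipient_antigens)
    return total


recipient = {"A": ["A1", "A2"], "B": ["B7", "B8"], "DR": ["DR3", "DR4"]}
partial_match = {"A": ["A1", "A3"], "B": ["B7", "B44"], "DR": ["DR4", "DR15"]}
full_mismatch = {"A": ["A11", "A24"], "B": ["B35", "B51"], "DR": ["DR1", "DR7"]}

print(hla_mismatches(partial_match, recipient))  # 3
print(hla_mismatches(full_mismatch, recipient))  # 6
```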

The survival rate of living unrelated renal allografts is as high as that of perfectly HLA-matched cadaver renal transplants and comparable to that of kidneys from living relatives. This outcome is probably a consequence of both short cold ischemia time and the extra care taken to document that the condition and renal function of the donor are optimal before proceeding with a living unrelated donation. It is illegal in the United States to purchase organs for transplantation.

Living volunteer donors should be cleared of any medical conditions that may cause morbidity and mortality after kidney donation. Concern has been expressed about the potential risk to a volunteer kidney donor of premature renal failure after several years of increased blood flow and hyperfiltration per nephron in the remaining kidney. There are a few reports of hypertension, proteinuria, and even lesions of focal segmental glomerulosclerosis developing in donors over long-term follow-up. It is also desirable to consider the risk of type 1 diabetes mellitus developing in a family member who is a potential donor to a patient with diabetic renal failure. Anti-insulin and anti-islet cell antibodies should be measured and glucose tolerance tests performed in such donors to exclude a prediabetic state. Selective renal arteriography should be performed on donors to rule out multiple or abnormal renal arteries, because the surgical procedure is difficult and the ischemic time of the transplanted kidney is long when there are vascular abnormalities. Transplant surgeons now use a laparoscopic method to isolate and remove the living donor’s kidney. This operation leaves less evident surgical scars, and, because there is less tissue trauma, laparoscopic donors have a substantially shorter hospital stay and less discomfort than those who have the traditional surgery.

Deceased donors should be free of malignant neoplastic disease, hepatitis, and HIV because of possible transmission to the recipient, although there is increasing interest in using hepatitis C– and HIV-positive organs in recipients already infected with these viruses. The risk of graft failure is increased when the donor is elderly or has renal failure and when the kidney has undergone a prolonged period of ischemia and storage.

In the United States, there is a coordinated national system of regulations, allocation support, and outcomes analysis for kidney transplantation called the Organ Procurement and Transplantation Network. It is now possible to remove deceased-donor kidneys and maintain them for up to 48 h on cold pulsatile perfusion or with simple flushing and cooling. This approach permits adequate time for typing, cross-matching, transportation, and selection problems to be solved.

PRESENSITIZATION

A positive cytotoxic cross-match of recipient serum with donor T lymphocytes indicates the presence of preformed donor-specific anti-HLA class I antibodies and is usually predictive of an acute vasculitic event termed hyperacute rejection. This finding, along with ABO incompatibility, represents the only absolute immunologic contraindication to kidney transplantation. Recently, more tissue typing laboratories have shifted to a flow cytometry–based cross-match assay, which detects anti-HLA antibodies that a cytotoxic cross-match may miss; a positive flow cross-match is not necessarily an absolute contraindication to transplantation. The known sources of such sensitization are blood transfusion, a prior transplant, pregnancy, and vaccination/infection. Patients sustained by dialysis often show fluctuating antibody titers and specificity patterns. When a deceased-donor kidney is assigned, cross-matches are performed with at least a current serum sample; previously analyzed antibody specificities are reviewed, and additional cross-matches are performed accordingly. Flow cytometry detects binding of anti-HLA antibodies in a candidate’s serum to the donor’s lymphocytes. This highly sensitive test can be useful for avoiding accelerated, and often untreatable, early graft rejection in patients receiving second or third transplants.

For the purposes of cross-matching, donor T lymphocytes, which express class I but not class II antigens, are used as targets for detection of antibodies against class I (HLA-A and -B) antigens, which are expressed on all nucleated cells. Preformed antibodies against donor class II (HLA-DR and -DQ) antigens also carry a higher risk of graft loss, particularly in recipients who have suffered early loss of a prior kidney transplant. B lymphocytes, which express both class I and class II antigens, are used as targets in these assays.
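The choice of target cells above implies a simple interpretation rule. This toy sketch (the function name is hypothetical) encodes only the antigen-expression logic described in the text; in practice a B-cell-only positive result can also reflect low-level anti-class I antibody, so the output is a likely specificity rather than a diagnosis.

```python
def likely_antibody_classes(t_cell_positive: bool, b_cell_positive: bool) -> set:
    """Infer likely anti-donor HLA antibody classes from cross-match results.
    T cells express class I only; B cells express class I and class II."""
    if t_cell_positive:
        # Class I reactivity explains a positive T-cell result; class II antibody
        # may coexist but cannot be resolved from these two results alone.
        return {"class I"}
    if b_cell_positive:
        # Reactivity with B cells but not T cells points to class II antigens.
        return {"class II"}
    return set()


print(likely_antibody_classes(t_cell_positive=False, b_cell_positive=True))  # {'class II'}
```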

Some non-HLA antigens restricted in expression to endothelium and monocytes have been described, but clinical relevance is not well established. A series of minor histocompatibility antigens do not elicit antibodies, and sensitization to these antigens is detectable only by cytotoxic T cells, an assay too cumbersome for routine use.

Desensitization before transplantation, which reduces the level of antidonor antibodies by plasmapheresis, administration of pooled immunoglobulin, or both, has been useful in reducing the risk of hyperacute rejection following transplantation.

IMMUNOLOGY OF REJECTION

Both cellular and humoral (antibody-mediated) effector mechanisms can play roles in kidney transplant rejection.

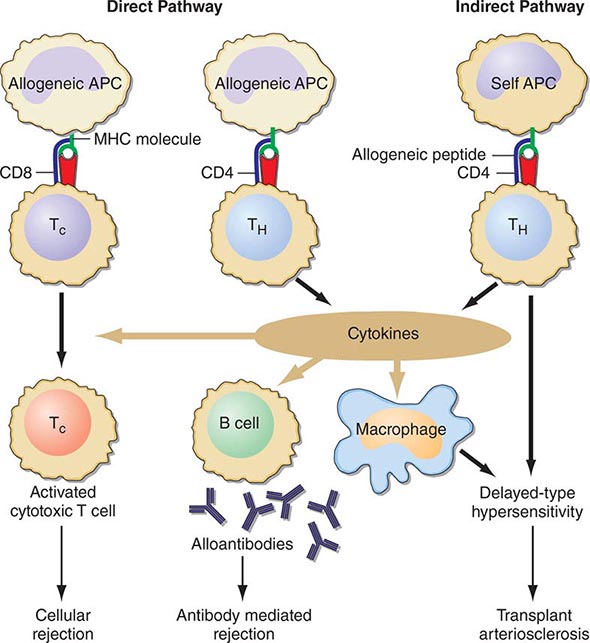

Cellular rejection is mediated by lymphocytes that respond to HLA antigens expressed within the organ. The CD4+ lymphocyte responds to class II (HLA-DR) incompatibility by proliferating and releasing proinflammatory cytokines that augment the proliferative response of the immune system. CD8+ cytotoxic lymphocyte precursors respond primarily to class I (HLA-A, -B) antigens and mature into cytotoxic effector cells that cause organ damage through direct contact and lysis of donor target cells. Full T cell activation requires not only T cell receptor binding to the alloantigens presented by self or donor HLA molecules (indirect and direct presentation, respectively), but also engagement of costimulatory molecules such as CD28 on T cells by the CD80 and CD86 ligands on antigen-presenting cells (Fig. 337-1). Signaling through both of these pathways activates the phosphatase calcineurin, which, in turn, activates transcription factors, leading to upregulation of multiple genes, including interleukin 2 (IL-2) and interferon gamma. IL-2 signals through the target of rapamycin (TOR) to induce cell proliferation in an autocrine fashion. There is evidence that non-HLA antigens can also play a role in renal transplant rejection episodes: recipients who receive a kidney from an HLA-identical sibling can have rejection episodes and require maintenance immunosuppression, whereas identical twin transplants require no immunosuppression. Documented non-HLA antigens include an endothelial-specific antigen system with limited polymorphism and a tubular antigen, which can act as targets of humoral and cellular rejection responses, respectively.

FIGURE 337-1 Recognition pathways for major histocompatibility complex (MHC) antigens. Graft rejection is initiated by CD4 helper T lymphocytes (TH) having antigen receptors that bind to specific complexes of peptides and MHC class II molecules on antigen-presenting cells (APC). In transplantation, in contrast to other immunologic responses, there are two sets of T cell clones involved in rejection. In the direct pathway, the class II MHC of donor allogeneic APCs is recognized by CD4 TH cells that bind to the intact MHC molecule, and class I MHC allogeneic cells are recognized by CD8 T cells. The latter generally proliferate into cytotoxic cells (TC). In the indirect pathway, the incompatible MHC molecules are processed into peptides that are presented by the self-APCs of the recipient. The indirect, but not the direct, pathway is the normal physiologic process in T cell recognition of foreign antigens. Once TH cells are activated, they proliferate and, by secretion of cytokines and direct contact, exert strong helper effects on macrophages, TC, and B cells. (From MH Sayegh, LH Turka: N Engl J Med, 338:1813, 1998. Copyright 1998, Massachusetts Medical Society. All rights reserved.)

IMMUNOSUPPRESSIVE TREATMENT

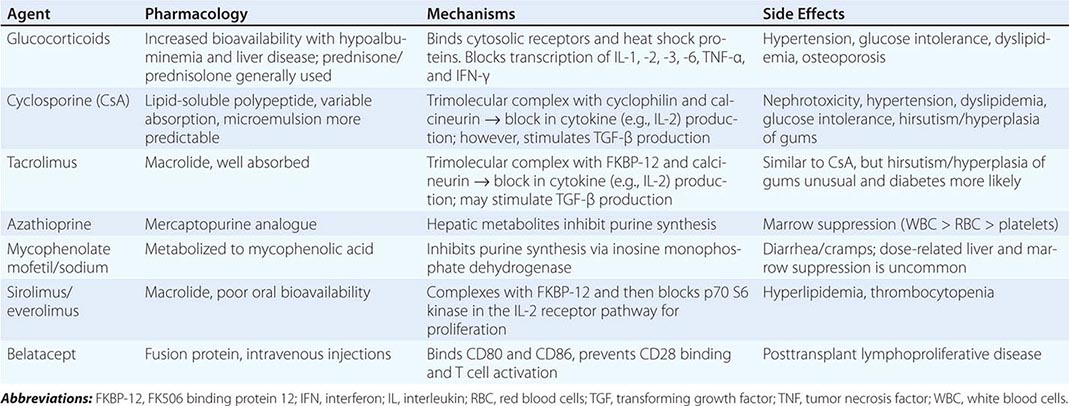

Immunosuppressive therapy, as currently available, generally suppresses all immune responses, including those to bacteria, fungi, and even malignant tumors. In general, all clinically useful drugs act more selectively on primary than on memory immune responses. Agents to suppress the immune response are classically divided into induction and maintenance agents, which are discussed in the following paragraphs. Those currently in clinical use are listed in Table 337-3.

TABLE 337-3 MAINTENANCE IMMUNOSUPPRESSIVE DRUGS

INDUCTION THERAPY

Induction therapy is currently given to most kidney transplant recipients in the United States at the time of transplant to reduce the risk of early acute rejection and to minimize or eliminate the use of either steroids or calcineurin inhibitors and their associated toxicities. Induction therapy consists of antibodies, which may be monoclonal or polyclonal and depleting or nondepleting.

Depleting Agents Peripheral human lymphocytes, thymocytes, or lymphocytes from spleens or thoracic duct fistulas are injected into horses, rabbits, or goats to produce antilymphocyte serum, from which the globulin fraction is then separated, resulting in antithymocyte globulin. Those polyclonal antibodies induce lymphocyte depletion, and the immune system may take several months to recover.

Monoclonal antibodies against defined lymphocyte subsets offer a more precise and standardized form of therapy. Alemtuzumab is directed to the CD52 protein, widely distributed on immune cells such as B and T cells, natural killer cells, macrophages, and some granulocytes.

Nondepleting Agents Another approach to more selective therapy is to target the 55-kDa alpha chain of the IL-2 receptor, which is expressed only on T cells that have been recently activated. This approach is used as prophylaxis for acute rejection in the immediate posttransplant period and is effective at decreasing the early acute rejection rate with few adverse side effects.

The next step in the evolution of this therapeutic strategy, which has already been achieved in the short term in small numbers of immunologically well-matched patients, is the elimination of all maintenance immunosuppression therapy.

MAINTENANCE THERAPY

All kidney transplant recipients should receive maintenance immunosuppressive therapy, except recipients of kidneys from an identical twin. The most frequently used combination is triple therapy with prednisone, a calcineurin inhibitor, and an antimetabolite; mammalian TOR (mTOR) inhibitors can replace one of the last two agents. More recently, the U.S. Food and Drug Administration (FDA) approved belatacept, a costimulation-blocking fusion protein, as a new strategy to avoid long-term calcineurin inhibitor toxicity.

Antimetabolites Azathioprine, an analogue of mercaptopurine, was for two decades the keystone of immunosuppressive therapy in humans but has given way to more effective agents. This agent can inhibit synthesis of DNA, RNA, or both. Azathioprine is administered in doses of 1.5–2 mg/kg per day. The dose must be reduced if leukopenia or, occasionally, thrombocytopenia develops. Excessive amounts of azathioprine may also cause jaundice, anemia, and alopecia. Because allopurinol inhibits xanthine oxidase and thereby delays azathioprine degradation, this combination is best avoided; if it is essential to administer allopurinol concurrently, the azathioprine dose must be reduced.

Mycophenolate mofetil or mycophenolate sodium, both of which are metabolized to mycophenolic acid, is now used in place of azathioprine in most centers. It has a similar mode of action and a mild degree of gastrointestinal toxicity but produces less bone marrow suppression. Its advantage is its increased potency in preventing or reversing rejection.

Steroids Glucocorticoids are important adjuncts to immunosuppressive therapy. Among all the agents employed, prednisone has effects that are easiest to assess, and in large doses it is usually effective for the reversal of rejection. In general, 200–300 mg prednisone is given immediately before or at the time of transplantation, and the dose is reduced to 30 mg within a week. The side effects of the glucocorticoids, particularly impairment of wound healing and predisposition to infection, make it desirable to taper the dose as rapidly as possible in the immediate postoperative period. Many centers now have protocols for early discontinuance or avoidance of steroids because of long-term adverse effects on bone, skin, and glucose metabolism. For treatment of acute rejection, methylprednisolone, 0.5–1 g IV, is administered immediately upon diagnosis of beginning rejection and continued once daily for 3 days. Such “pulse” doses are not effective in chronic rejection. Most patients whose renal function is stable after 6 months or a year do not require large doses of prednisone; maintenance doses of 5–10 mg/d are the rule. A major effect of steroids is preventing the release of IL-6 and IL-1 by monocytes-macrophages.

Calcineurin Inhibitors Cyclosporine is a fungal peptide with potent immunosuppressive activity. It acts on the calcineurin pathway to block transcription of mRNA for IL-2 and other proinflammatory cytokines, thereby inhibiting T cell proliferation. Although it works alone, cyclosporine is more effective in conjunction with glucocorticoids and mycophenolate. Clinical results with tens of thousands of renal transplants have been impressive. Among its toxic effects (nephrotoxicity, hepatotoxicity, hirsutism, tremor, gingival hyperplasia, diabetes), only nephrotoxicity presents a serious management problem and is further discussed below.

Tacrolimus (previously called FK506) is a fungal macrolide that has the same mode of action as cyclosporine as well as a similar side effect profile; it does not, however, produce hirsutism or gingival hyperplasia. De novo diabetes mellitus is more common with tacrolimus. The drug was first used in liver transplantation and may substitute for cyclosporine entirely or as an alternative in renal patients whose rejections are poorly controlled by cyclosporine.

mTOR Inhibitors Sirolimus (previously called rapamycin) is another fungal macrolide but has a different mode of action; i.e., it inhibits T cell growth factor signaling pathways, preventing the response to IL-2 and other cytokines. Sirolimus can be used in conjunction with cyclosporine or tacrolimus, or with mycophenolic acid, to avoid the use of calcineurin inhibitors.

Everolimus is another mTOR inhibitor, with a mechanism of action similar to that of sirolimus but with better bioavailability.

Belatacept Belatacept is a fusion protein that binds costimulatory ligands (CD80 and CD86) present on antigen-presenting cells, interrupting their binding to CD28 on T cells. This inhibition leads to T cell anergy and apoptosis. Belatacept is FDA approved for kidney transplant recipients and is given monthly as an intravenous infusion.
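The drug mechanisms described in this section each interrupt a different step of the T cell activation cascade outlined under Immunology of Rejection. The toy model below (step names and the function are illustrative, not a standard nomenclature) records which step each agent blocks and reports the earliest blocked step for a given regimen; agents not covered by the mapping, such as prednisone or mycophenolate, are simply ignored.

```python
# Ordered steps of T cell activation as described in this chapter.
CASCADE = (
    "costimulation",   # CD28 on T cells engaged by CD80/CD86 on APCs
    "calcineurin",     # calcineurin activates transcription of IL-2 and other genes
    "il2_tor_signal",  # IL-2 signals through TOR to drive proliferation
)

# Step interrupted by each agent (illustrative mapping of the mechanisms above).
BLOCKED_STEP = {
    "belatacept": "costimulation",
    "cyclosporine": "calcineurin",
    "tacrolimus": "calcineurin",
    "sirolimus": "il2_tor_signal",
    "everolimus": "il2_tor_signal",
}


def earliest_blocked_step(regimen):
    """Return the first cascade step blocked by any mapped drug in `regimen`, or None."""
    blocked = {BLOCKED_STEP[drug] for drug in regimen if drug in BLOCKED_STEP}
    for step in CASCADE:
        if step in blocked:
            return step
    return None


print(earliest_blocked_step(["tacrolimus", "sirolimus"]))  # calcineurin
```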

CLINICAL COURSE AND MANAGEMENT OF THE RECIPIENT

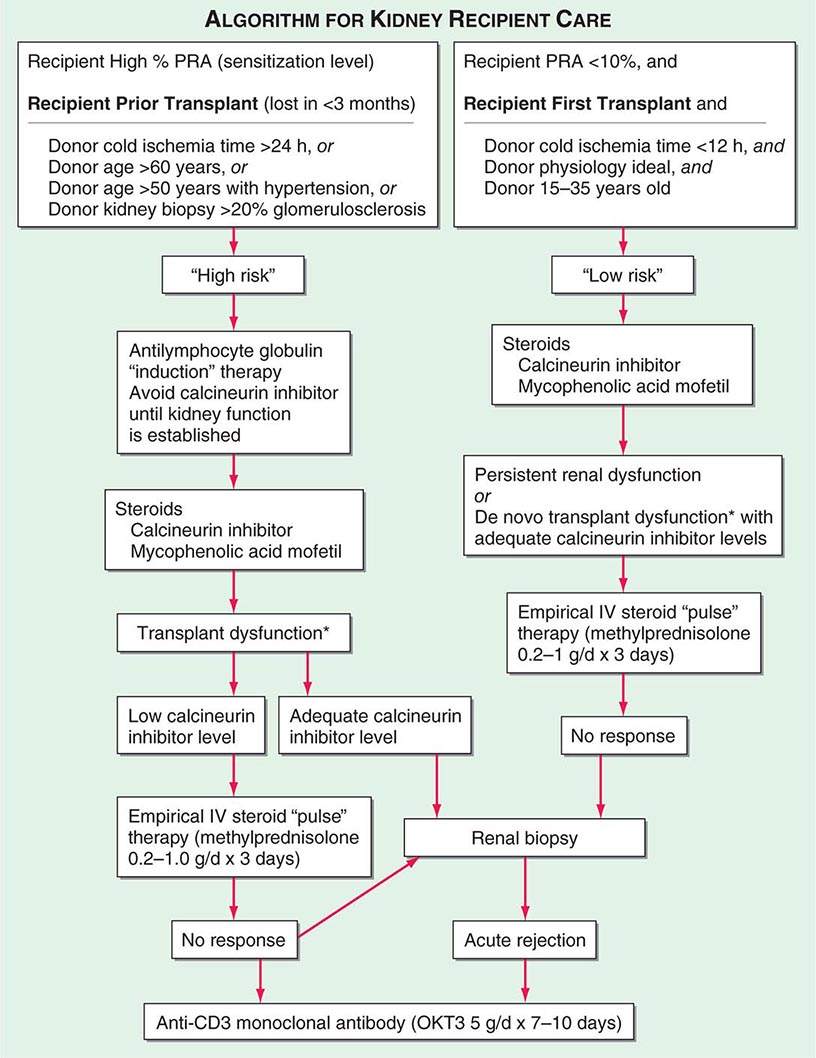

Adequate hemodialysis should be performed within the 48 h before surgery, and care should be taken that the serum potassium level is not markedly elevated, so that intraoperative cardiac arrhythmias can be averted. The diuresis that commonly occurs postoperatively must be carefully monitored. In some instances, it may be massive, reflecting the inability of ischemic tubules to regulate sodium and water excretion; with large diureses, massive potassium losses may occur. Most chronically uremic patients have some excess of extracellular fluid, and it is useful to maintain an expanded fluid volume in the immediate postoperative period. Acute tubular necrosis (ATN) due to ischemia may cause immediate oliguria or may follow an initial short period of graft function. Recovery usually occurs within 3 weeks, although periods as long as 6 weeks have been reported. Superimposition of rejection on ATN is common, and the differential diagnosis may be difficult without a graft biopsy. Cyclosporine therapy prolongs ATN, and some patients do not diurese until the dose is reduced drastically. Many centers avoid starting cyclosporine for the first several days, using antilymphocyte globulin (ALG) or a monoclonal antibody along with mycophenolic acid and prednisone until renal function is established. Figure 337-2 illustrates an algorithm followed by many transplant centers for early posttransplant management of recipients at high or low risk of early renal dysfunction.

FIGURE 337-2 A typical algorithm for early posttransplant care of a kidney recipient. If any of the recipient or donor “high-risk” factors exist, more aggressive management is called for. Low-risk patients can be treated with a standard immunosuppressive regimen. Patients at higher risk of rejection or early ischemic and nephrotoxic transplant dysfunction are often induced with an antilymphocyte globulin to provide more potent early immunosuppression or to spare calcineurin nephrotoxicity. *When there is early transplant dysfunction, prerenal, obstructive, and vascular causes must be ruled out by ultrasonographic examination. The panel reactive antibody (PRA) is a quantitation of how much antibody is present in a candidate against a panel of cells representing the distribution of antigens in the donor pool.
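The caption defines the PRA as the proportion of a reference cell panel that a candidate’s serum reacts against. A minimal sketch of that definition follows, assuming each panel cell is described by its HLA antigens and the candidate’s antibody specificities are known; the function name and panel are invented for illustration, and laboratories today often use a calculated PRA based on antigen frequencies rather than a physical cell panel.

```python
def panel_reactive_antibody(candidate_antibodies: set, panel: list) -> float:
    """Percent of panel cells carrying at least one HLA antigen
    that the candidate has antibodies against."""
    if not panel:
        raise ValueError("panel must contain at least one cell")
    reactive = sum(1 for cell_antigens in panel if candidate_antibodies & cell_antigens)
    return 100.0 * reactive / len(panel)


# Each set lists the HLA antigens expressed by one panel cell (hypothetical data).
panel = [
    {"A1", "A2", "B8", "B44"},
    {"A3", "A11", "B7", "B35"},
    {"A2", "A24", "B51", "B57"},
    {"A1", "A29", "B7", "B62"},
]

print(panel_reactive_antibody({"A2"}, panel))  # 50.0
```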

THE REJECTION EPISODE

Early diagnosis of rejection allows prompt institution of therapy to preserve renal function and prevent irreversible damage. Clinical evidence of rejection is rarely characterized by fever, swelling, and tenderness over the allograft. Rejection may present only with a rise in serum creatinine, with or without a reduction in urine volume. The focus should be on ruling out other causes of functional deterioration.

Doppler ultrasonography may be useful in ascertaining changes in the renal vasculature and in renal blood flow. Thrombosis of the renal vein occurs rarely; it may be reversible if it is caused by technical factors and intervention is prompt. Diagnostic ultrasound is the procedure of choice to rule out urinary obstruction or to confirm the presence of perirenal collections of urine, blood, or lymph. A rise in the serum creatinine level is a late marker of rejection, but it may be the only sign. Novel biomarkers are needed for early noninvasive detection of allograft rejection.

Calcineurin inhibitors (cyclosporine and tacrolimus) have an afferent arteriolar constrictor effect on the kidney and may produce permanent vascular and interstitial injury after sustained high-dose therapy. The resulting deterioration in renal function is difficult to distinguish from rejection without a renal biopsy. Interstitial fibrosis, isometric tubular vacuolization, and thickening of arteriolar walls are suggestive of this side effect but not diagnostic. Hence, if no rejection is detected on biopsy, serum creatinine may improve with a reduction in dose. If rejection activity is present on biopsy, however, appropriate therapy is indicated. The first rejection episode is usually treated with IV methylprednisolone, 500–1000 mg daily for 3 days. Failure to respond is an indication for antibody therapy, usually with antithymocyte globulin.

Antibody-mediated injury is evident when endothelial injury and deposition of complement component C4d are detected by immunofluorescence staining. These findings are usually accompanied by detection of the antibody in the recipient’s blood. The prognosis is poor, and aggressive use of plasmapheresis, immunoglobulin infusions, anti-CD20 monoclonal antibody (rituximab) to target B lymphocytes, bortezomib to target antibody-producing plasma cells, and eculizumab to inhibit complement is indicated.

MANAGEMENT PROBLEMS

The typical times after transplantation when the most common opportunistic infections occur are shown in Table 337-4. Prophylaxis for cytomegalovirus (CMV) and Pneumocystis jiroveci pneumonia is given for 6–12 months after transplantation.

TABLE 337-4 THE MOST COMMON OPPORTUNISTIC INFECTIONS IN RENAL TRANSPLANT RECIPIENTS

The signs and symptoms of infection may be masked or distorted. Fever without obvious cause is common, and only after days or weeks may it become apparent that it has a viral or fungal origin. Bacterial infections are most common during the first month after transplantation. The importance of blood cultures in such patients cannot be overemphasized because systemic infection without obvious foci is common. Particularly ominous are rapidly occurring pulmonary lesions, which may result in death within 5 days of onset. When these lesions become apparent, immunosuppressive agents should be discontinued, except for maintenance doses of prednisone.

Aggressive diagnostic procedures, including transbronchial and open-lung biopsy, are frequently indicated. In the case of P. jiroveci (Chap. 244) infection, trimethoprim-sulfamethoxazole (TMP-SMX) is the treatment of choice; amphotericin B has been used effectively in systemic fungal infections. Prophylaxis against P. jiroveci with daily or alternate-day low-dose TMP-SMX is very effective. Involvement of the oropharynx with Candida (Chap. 240) may be treated with local nystatin. Tissue-invasive fungal infections require treatment with systemic agents such as fluconazole. Small doses (a total of 300 mg) of amphotericin given over a period of 2 weeks may be effective in fungal infections refractory to fluconazole. Macrolide antibiotics such as erythromycin, azole antifungals such as ketoconazole, and some calcium channel blockers (diltiazem, verapamil) compete with calcineurin inhibitors for cytochrome P450 catabolism and raise blood levels of these immunosuppressive drugs. Anticonvulsants such as phenytoin and carbamazepine increase P450 catabolism and lower calcineurin inhibitor levels. Aspergillus (Chap. 241), Nocardia (Chap. 199), and especially CMV (Chap. 219) infections also occur.
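The interactions listed above can be summarized as a lookup of the direction of effect on calcineurin inhibitor levels. This sketch covers only the agents named in this paragraph and is not an interaction reference; the function name is hypothetical.

```python
# Agents named in the text, grouped by their effect on calcineurin inhibitor (CNI) levels.
RAISES_CNI_LEVELS = frozenset({"erythromycin", "ketoconazole", "diltiazem", "verapamil"})
LOWERS_CNI_LEVELS = frozenset({"phenytoin", "carbamazepine"})


def cni_level_effect(drug: str) -> str:
    """Return 'raises', 'lowers', or 'not listed here' for a drug name."""
    name = drug.strip().lower()
    if name in RAISES_CNI_LEVELS:
        return "raises"    # competes for P450 catabolism, so CNI levels rise
    if name in LOWERS_CNI_LEVELS:
        return "lowers"    # increases P450 catabolism, so CNI levels fall
    return "not listed here"


for drug in ("Diltiazem", "carbamazepine", "acyclovir"):
    print(drug, cni_level_effect(drug))
```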

CMV is a common and dangerous DNA virus in transplant recipients. It does not generally appear until the end of the first posttransplant month. Active CMV infection is sometimes associated, or occasionally confused, with rejection episodes. Patients at highest risk for severe CMV disease are those without anti-CMV antibodies who receive a graft from a CMV antibody–positive donor (15% mortality). Valganciclovir is a cost-effective and bioavailable oral form of ganciclovir that has been proved effective in both prophylaxis and treatment of CMV disease. Early diagnosis in a febrile patient with clinical suspicion of CMV disease can be made by determining CMV viral load in the blood. A rise in IgM antibodies to CMV is also diagnostic. Culture of CMV from blood may be less sensitive. Tissue invasion of CMV is common in the gastrointestinal tract and lungs. CMV retinopathy occurs late in the course, if untreated. Treatment of active CMV disease with valganciclovir is always indicated. In many patients immune to CMV, viral activation can occur with major immunosuppressive regimens.

The polyoma group (BK, JC, SV40) is another class of DNA viruses that can become dormant in the kidneys and be activated by immunosuppression. When BK virus reactivates, there is a 50% chance of progressive fibrosis and graft loss within 1 year. Risk of infection is associated with the overall degree of immunosuppression rather than with the individual immunosuppressive drugs used. Renal biopsy is necessary for the diagnosis. Results with leflunomide, cidofovir, and quinolone antibiotics (which are effective against polyoma helicase) have been variable; the most important measure is to reduce the immunosuppressive load.

The complications of glucocorticoid therapy are well known and include gastrointestinal bleeding, impairment of wound healing, osteoporosis, diabetes mellitus, cataract formation, and hemorrhagic pancreatitis. Management of unexplained jaundice in transplant patients should include cessation or reduction of immunosuppressive drugs if hepatitis or drug toxicity is suspected. Withdrawing or reducing immunosuppression in such circumstances often does not result in graft rejection, at least for several weeks. Acyclovir is effective in therapy for herpes simplex virus infections.

CHRONIC LESIONS OF THE TRANSPLANTED KIDNEY

Although 1-year transplant survival is excellent, most recipients experience progressive decline in kidney function over time thereafter. Chronic renal transplant dysfunction can be caused by recurrent disease, hypertension, cyclosporine or tacrolimus nephrotoxicity, chronic immunologic rejection, secondary focal glomerulosclerosis, or a combination of these pathophysiologies. Chronic vascular changes with intimal proliferation and medial hypertrophy are commonly found. Control of systemic and intrarenal hypertension with angiotensin-converting enzyme (ACE) inhibitors is thought to have a beneficial influence on the rate of progression of chronic renal transplant dysfunction. Renal biopsy can distinguish subacute cellular rejection from recurrent disease or secondary focal sclerosis.

MALIGNANCY

The incidence of tumors in patients on immunosuppressive therapy is 5–6%, or approximately 100 times greater than that in the general population in the same age range. The most common lesions are cancer of the skin and lips and carcinoma in situ of the cervix, as well as lymphomas such as non-Hodgkin’s lymphoma. The risks are increased in proportion to the total immunosuppressive load administered and the time elapsed since transplantation. Surveillance for skin and cervical cancers is necessary.

OTHER COMPLICATIONS

Both chronic dialysis and renal transplant patients have a higher incidence of death from myocardial infarction and stroke than the population at large, particularly diabetic patients. Contributing factors include hypertension and the use of glucocorticoids and sirolimus. Recipients of renal transplants have a high prevalence of coronary artery and peripheral vascular diseases. The percentage of deaths from these causes has been slowly rising as the number of transplanted diabetic patients and the average age of all recipients increase. More than 50% of renal recipient mortality is attributable to cardiovascular disease. In addition to strict control of blood pressure and blood lipid levels, close monitoring of patients for indications for further medical or surgical intervention is an important part of management.

Hypertension may be caused by (1) native kidney disease, (2) rejection activity in the transplant, (3) renal artery stenosis if an end-to-end anastomosis was constructed with an iliac artery branch, and (4) renal calcineurin inhibitor toxicity, which may improve with dose reduction. Although ACE inhibitors may be useful, calcium channel blockers are more frequently used initially. Lowering blood pressure to the range of 120–130/70–80 mmHg should be the goal in all patients.

Hypercalcemia after transplantation may indicate failure of hyperplastic parathyroid glands to regress. Aseptic necrosis of the head of the femur is probably due to preexisting hyperparathyroidism, with aggravation by glucocorticoid treatment. With improved management of calcium and phosphorus metabolism during chronic dialysis, the incidence of parathyroid-related complications has fallen dramatically. Persistent hyperparathyroid activity may require subtotal parathyroidectomy.

Although most transplant patients have robust erythropoietin production and normalization of hemoglobin, anemia is common in the posttransplant period. It is often attributable to bone marrow–suppressive immunosuppressive medications such as azathioprine, mycophenolic acid, and sirolimus. Gastrointestinal bleeding, a common side effect of high-dose and long-term steroid administration, can also contribute. Many transplant patients have creatinine clearances of 30–50 mL/min and can be managed like other patients with chronic renal insufficiency with respect to anemia, including with supplemental erythropoietin.

Chronic hepatitis, particularly when due to hepatitis B virus, can be a progressive, fatal disease over a decade or so. Patients who are persistently hepatitis B surface antigen–positive are at higher risk, according to some studies, but the presence of hepatitis C virus is also a concern when one embarks on a course of immunosuppression in a transplant recipient.

338 |

Glomerular Diseases |

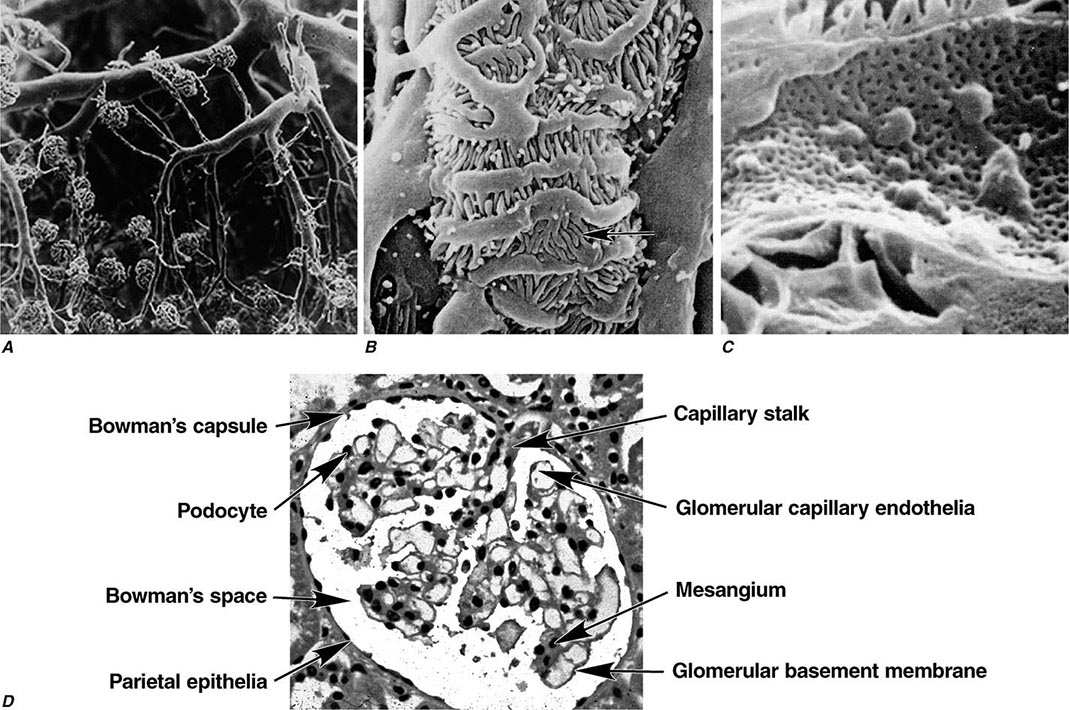

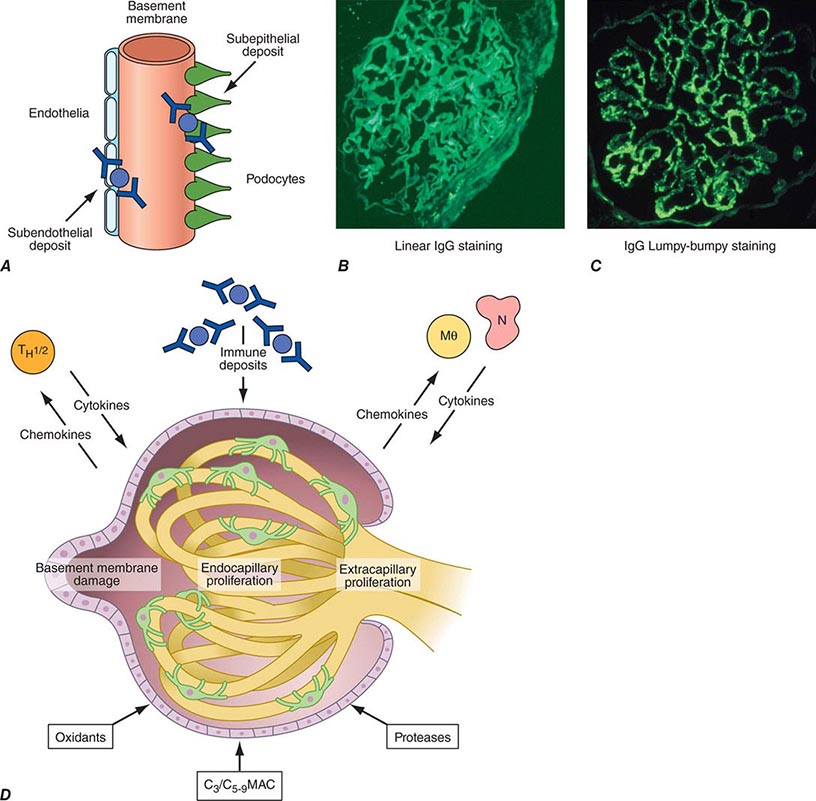

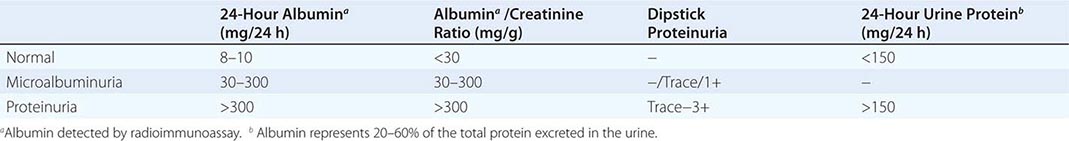

Two human kidneys harbor nearly 1.8 million glomerular capillary tufts. Each glomerular tuft resides within Bowman’s space. The capsule circumscribing this space is lined by parietal epithelial cells that transition into tubular epithelia forming the proximal nephron or migrate into the tuft to replenish podocytes. The glomerular capillary tuft derives from an afferent arteriole that forms a branching capillary bed embedded in mesangial matrix (Fig. 338-1). This capillary network funnels into an efferent arteriole, which passes filtered blood into cortical peritubular capillaries or medullary vasa recta that supply and exchange with a folded tubular architecture. Hence the glomerular capillary tuft, fed and drained by arterioles, represents an arteriolar portal system. Fenestrated endothelial cells resting on a glomerular basement membrane (GBM) line glomerular capillaries. Delicate foot processes extending from epithelial podocytes shroud the outer surface of these capillaries, and adjacent podocytes are interconnected by slit-pore membranes that form a selective filtration barrier.

FIGURE 338-1 Glomerular architecture. A. The glomerular capillaries form from a branching network of renal arteries and arterioles leading to an afferent arteriole, glomerular capillary bed (tuft), and a draining efferent arteriole. (From VH Gattone II et al: Hypertension 5:8, 1983.) B. Scanning electron micrograph of podocytes that line the outer surface of the glomerular capillaries (arrow shows foot process). C. Scanning electron micrograph of the fenestrated endothelia lining the glomerular capillary. D. The various normal regions of the glomerulus on light microscopy. (A–C: Courtesy of Dr. Vincent Gattone, Indiana University; with permission.)

The glomerular capillaries filter 120–180 L/d of plasma water containing various solutes for reclamation or discharge by downstream tubules. Most large proteins and all cells are excluded from filtration by a physicochemical barrier governed by pore size and negative electrostatic charge. The mechanics of filtration and reclamation are quite complicated for many solutes (Chap. 325). For example, in the case of serum albumin, the glomerulus is an imperfect barrier. Although albumin has a negative charge, which would tend to repel the negatively charged GBM, it only has a physical radius of 3.6 nm, while pores in the GBM and slit-pore membranes have a radius of 4 nm. Consequently, variable amounts of albumin inevitably cross the filtration barrier to be reclaimed by megalin and cubilin receptors along the proximal tubule. Remarkably, humans with normal nephrons excrete on average 8–10 mg of albumin in daily voided urine, approximately 20–60% of total excreted protein. This amount of albumin, and other proteins, can rise to gram quantities following glomerular injury.
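As a rough arithmetic check, taking the figures quoted above at face value, the daily filtration volume can be expressed per minute. The albumin figures imply a range for total daily urinary protein, assuming total protein equals albumin divided by its stated fraction:

$$
\begin{aligned}
\frac{120\ \text{L/d}}{1440\ \text{min/d}} &\approx 83\ \text{mL/min}, \qquad \frac{180\ \text{L/d}}{1440\ \text{min/d}} = 125\ \text{mL/min} \\[4pt]
\text{total protein} &= \frac{\text{albumin}}{\text{albumin fraction}}: \qquad \frac{8\ \text{mg/d}}{0.60} \approx 13\ \text{mg/d} \quad \text{to} \quad \frac{10\ \text{mg/d}}{0.20} = 50\ \text{mg/d} \\[4pt]
\frac{r_{\text{albumin}}}{r_{\text{pore}}} &= \frac{3.6\ \text{nm}}{4\ \text{nm}} = 0.9
\end{aligned}
$$

The last ratio shows why size alone does not fully exclude albumin: its radius is about 90% of the quoted pore radius, so charge repulsion and downstream tubular reclamation must account for the rest.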

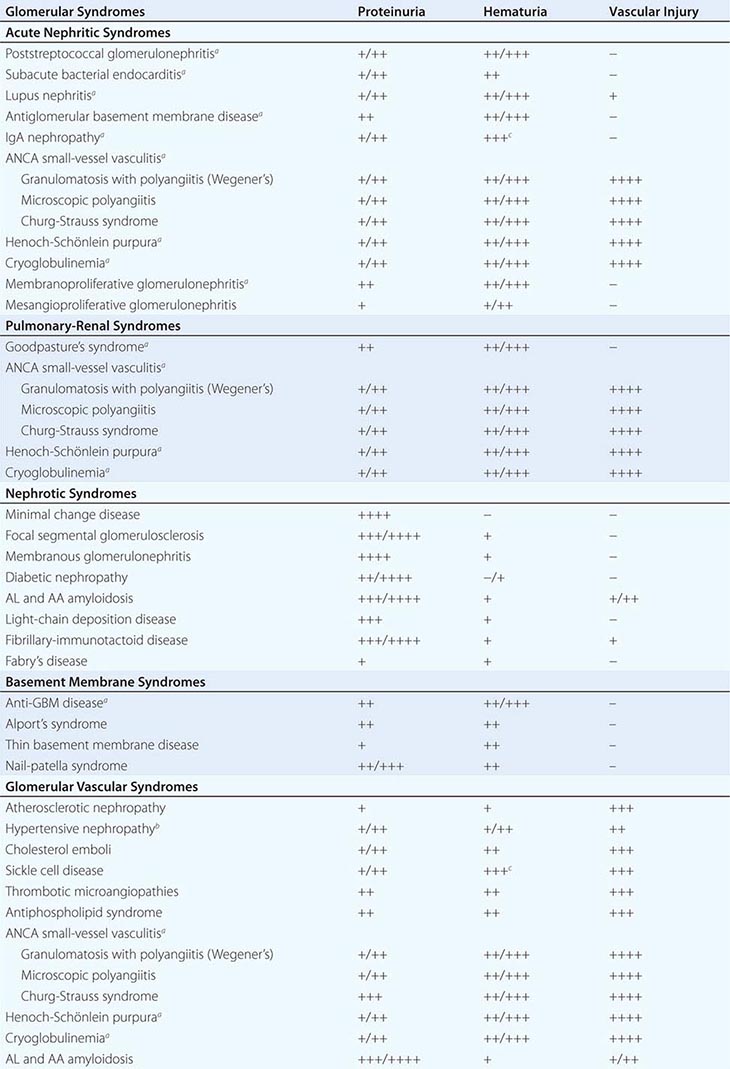

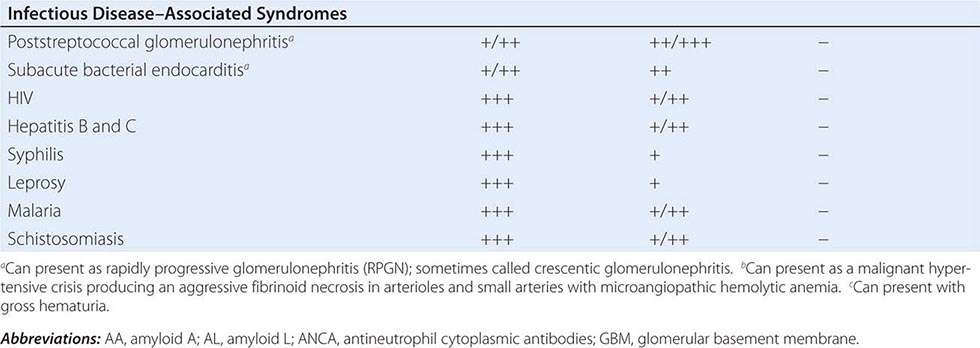

The range of diseases affecting the glomerulus is broad because the glomerular capillaries can be injured in a variety of ways, producing many different lesions. Grouping these diseases into a smaller number of clinical syndromes brings some order to this vast subject.

PATHOGENESIS OF GLOMERULAR DISEASE

There are many forms of glomerular disease with pathogenesis variably linked to the presence of genetic mutations, infection, toxin exposure, autoimmunity, atherosclerosis, hypertension, emboli, thrombosis, or diabetes mellitus. Even after careful study, however, the cause often remains unknown, and the lesion is called idiopathic. Specific or unique features of pathogenesis are mentioned with the description of each of the glomerular diseases later in this chapter.

Some glomerular diseases result from genetic mutations producing familial disease or a founder effect. Congenital nephrotic syndrome arises from mutations in NPHS1 (nephrin) and NPHS2 (podocin) that affect the slit-pore membrane at birth, and TRPC6 cation channel mutations produce focal segmental glomerulosclerosis (FSGS) in adulthood. Polymorphisms in the gene encoding apolipoprotein L1, APOL1, are a major risk factor for nearly 70% of African Americans with nondiabetic end-stage renal disease, particularly FSGS. Mutations in complement factor H are associated with membranoproliferative glomerulonephritis (MPGN) or atypical hemolytic uremic syndrome (aHUS), and type II partial lipodystrophy from mutations in genes encoding lamin A/C or PPARγ causes a metabolic syndrome associated with MPGN, which is sometimes accompanied by dense deposits and C3 nephritic factor. Alport’s syndrome, from mutations in the genes encoding the α3, α4, or α5 chains of type IV collagen, produces split basement membranes with glomerulosclerosis. Finally, lysosomal storage diseases, such as α-galactosidase A deficiency causing Fabry’s disease and N-acetylneuraminic acid hydrolase deficiency causing nephrosialidosis, produce FSGS.

Systemic hypertension and atherosclerosis can produce pressure stress, ischemia, or lipid oxidants that lead to chronic glomerulosclerosis. Malignant hypertension can quickly complicate glomerulosclerosis with fibrinoid necrosis of arterioles and glomeruli, thrombotic microangiopathy, and acute renal failure. Diabetic nephropathy is an acquired sclerotic injury associated with thickening of the GBM secondary to the long-standing effects of hyperglycemia, advanced glycosylation end products, and reactive oxygen species.

Inflammation of the glomerular capillaries is called glomerulonephritis. Most glomerular or mesangial antigens involved in immune-mediated glomerulonephritis are unknown (Fig. 338-2). Glomerular epithelial or mesangial cells may shed or express epitopes that mimic other immunogenic proteins made elsewhere in the body. Bacteria, fungi, and viruses can directly infect the kidney, producing their own antigens. Autoimmune diseases like idiopathic membranous glomerulonephritis (MGN) or MPGN are confined to the kidney, whereas systemic inflammatory diseases like lupus nephritis or granulomatosis with polyangiitis (Wegener’s) spread to the kidney, causing secondary glomerular injury. Antiglomerular basement membrane disease, which produces Goodpasture’s syndrome, primarily injures the lung and kidney because of the narrow distribution of its target antigen, the α3 NC1 domain of type IV collagen.

FIGURE 338-2 The glomerulus is injured by a variety of mechanisms. A. Preformed immune deposits can precipitate from the circulation and collect along the glomerular basement membrane (GBM) in the subendothelial space or can form in situ along the subepithelial space. B. Immunofluorescent staining of glomeruli with labeled anti-IgG demonstrating linear staining from a patient with anti-GBM disease or immune deposits from a patient with membranous glomerulonephritis. C. The mechanisms of glomerular injury have a complicated pathogenesis. Immune deposits and complement deposition classically draw macrophages and neutrophils into the glomerulus. T lymphocytes may follow to participate in the injury pattern as well. D. Amplification mediators such as locally derived oxidants and proteases expand this inflammation, and, depending on the location of the target antigen and the genetic polymorphisms of the host, basement membranes are damaged with either endocapillary or extracapillary proliferation.

Local activation of Toll-like receptors on glomerular cells, deposition of immune complexes, or complement injury to glomerular structures induces mononuclear cell infiltration, which subsequently leads to an adaptive immune response attracted to the kidney by local release of chemokines. Neutrophils, macrophages, and T cells are drawn by chemokines into the glomerular tuft, where they react with antigens and epitopes on or near somatic cells or their structures, producing more cytokines and proteases that damage the mesangium, capillaries, and/or the GBM. While the adaptive immune response is similar to that of other tissues, early T cell activation plays an important role in the mechanism of glomerulonephritis. Antigens presented by class II major histocompatibility complex (MHC) molecules on macrophages and dendritic cells in conjunction with associative recognition molecules engage the CD4/8 T cell repertoire.

Mononuclear cells by themselves can injure the kidney, but autoimmune events that damage glomeruli classically produce a humoral immune response. Poststreptococcal glomerulonephritis, lupus nephritis, and idiopathic membranous nephritis typically are associated with immune deposits along the GBM, while anti-GBM antibodies produce the linear binding of anti-GBM disease. Preformed circulating immune complexes can precipitate along the subendothelial side of the GBM, while other immune deposits form in situ on the subepithelial side. These latter deposits accumulate when circulating autoantibodies find their antigen trapped along the subepithelial edge of the GBM. Immune deposits in the glomerular mesangium may result from the deposition of preformed circulating complexes or in situ antigen-antibody interactions. Immune deposits stimulate the release of local proteases and activate the complement cascade, producing C5–9 attack complexes. In addition, local oxidants damage glomerular structures, producing proteinuria and effacement of the podocytes. Overlapping etiologies or pathophysiologic mechanisms can produce similar glomerular lesions, suggesting that downstream molecular and cellular responses often converge toward common patterns of injury.

PROGRESSION OF GLOMERULAR DISEASE

Persistent glomerulonephritis that worsens renal function is always accompanied by interstitial nephritis, renal fibrosis, and tubular atrophy (see Fig. 62e-27). What is not so obvious, however, is that renal failure in glomerulonephritis best correlates histologically with the appearance of tubulointerstitial nephritis rather than with the type of inciting glomerular injury.